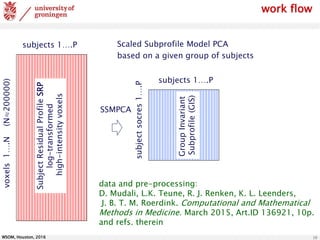

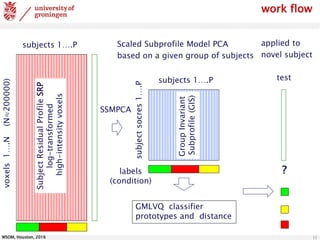

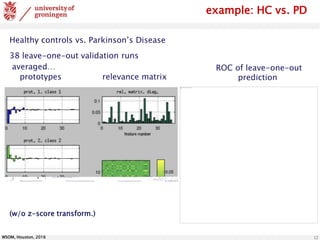

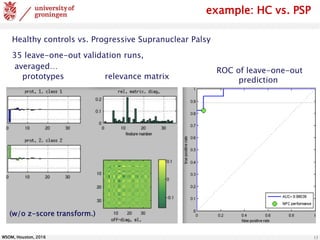

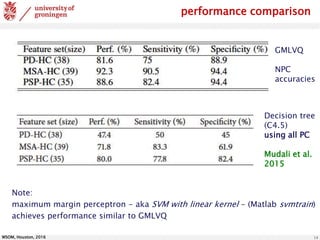

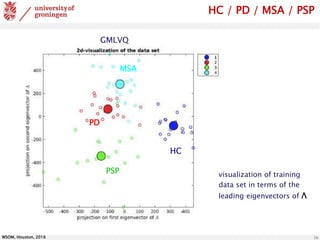

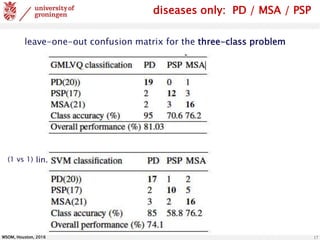

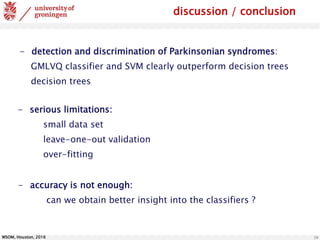

The document discusses the classification of FDG-PET brain scans to differentiate between parkinsonian syndromes. It uses Principal Component Analysis (PCA) for feature extraction and prototype-based classifiers such as Generalized Matrix Relevance Learning Vector Quantization (GMLVQ). The work highlights the accuracy benefits of prototype-based classification over traditional decision trees, particularly in the multi-class setting with healthy controls and patients with Parkinson's disease, multiple system atrophy, and progressive supranuclear palsy. Future work involves optimizing the number of principal components used as features, using larger data sets, and understanding the learned relevances in voxel space to further improve classifier performance.

![WSOM, Houston, 2016

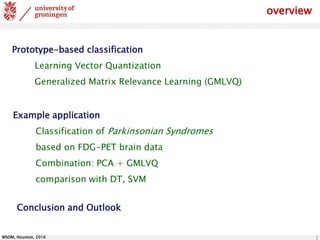

∙ identification of prototype vectors from labeled example data

∙ (dis)-similarity based classification (e.g. Euclidean distance)

Learning Vector Quantization

N-dimensional data, feature vectors

• initialize prototype vectors

for different classes

competitive learning: Winner-Takes-All LVQ1 [Kohonen, 1990, 1997]

• identify the winner

(closest prototype)

• present a single example

• move the winner

- closer towards the data (same class)

- away from the data (different class)

feature space](https://image.slidesharecdn.com/wsom-2016-fdgpet-200706055637/85/2016-Classification-of-FDG-PET-Brain-Data-3-320.jpg)
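The LVQ1 steps listed above can be sketched in a few lines of plain Python. This is a minimal sketch, not the implementation used in the talk. It assumes squared Euclidean distance, one prototype per class, a fixed learning rate `eta`, and random presentation order. All function names and parameter values are hypothetical.

```python
import random


def sq_dist(a, b):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def winner(x, protos):
    """Index of the closest prototype (the 'winner')."""
    return min(range(len(protos)), key=lambda k: sq_dist(x, protos[k]))


def lvq1_train(data, labels, prototypes, proto_labels, eta=0.05, epochs=20, seed=0):
    """Winner-Takes-All LVQ1: present single examples, move only the winner."""
    rng = random.Random(seed)
    protos = [list(p) for p in prototypes]  # copy; the caller's list is not modified
    order = list(range(len(data)))
    for _ in range(epochs):
        rng.shuffle(order)  # random presentation order in each epoch
        for i in order:
            x, y = data[i], labels[i]
            j = winner(x, protos)
            # +1: move closer (same class); -1: move away (different class)
            sign = 1.0 if proto_labels[j] == y else -1.0
            protos[j] = [w + sign * eta * (xi - w) for w, xi in zip(protos[j], x)]
    return protos


def lvq_classify(x, protos, proto_labels):
    """Nearest-prototype classification."""
    return proto_labels[winner(x, protos)]


# Toy usage: two classes in 2D, one prototype per class
data = [[0.0, 0.0], [0.2, 0.1], [1.0, 1.0], [0.9, 1.1]]
labels = ["A", "A", "B", "B"]
proto_labels = ["A", "B"]
protos = lvq1_train(data, labels, [[0.4, 0.4], [0.6, 0.6]], proto_labels)
print(lvq_classify([0.1, 0.0], protos, proto_labels))  # prints A
```

Only the winning prototype is updated per example. This is what distinguishes LVQ1 from the cost-function-based variants discussed next.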

![Relevance Matrix LVQ](https://image.slidesharecdn.com/wsom-2016-fdgpet-200706055637/85/2016-Classification-of-FDG-PET-Brain-Data-6-320.jpg)

Relevance Matrix LVQ [Schneider et al., 2009]

- generalized quadratic distance in LVQ:
  $d(\mathbf{w}, \mathbf{x}) = (\mathbf{x} - \mathbf{w})^\top \Lambda \, (\mathbf{x} - \mathbf{w})$ with $\Lambda = \Omega^\top \Omega$
- variants:
  - global/local matrices (piecewise quadratic boundaries)
  - diagonal relevances (single feature weights)
  - rectangular $\Omega$ (low-dimensional representation)
- relevance matrix $\Lambda$: quantifies the importance of single features and pairs of features
- $\Lambda_{jj}$ summarizes the relevance of feature $j$ (for equally scaled features)
- training: optimize prototypes and $\Lambda$ w.r.t. classification of examples
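A minimal sketch of the generalized quadratic distance follows. It assumes `Lambda = Omega^T Omega` as in matrix relevance LVQ, and it assumes the feature relevances are the diagonal of `Lambda` rescaled to sum to 1 (a common normalization, assumed here). The helper names are hypothetical. `Omega` is fixed by hand rather than trained.

```python
def relevance_matrix(omega):
    """Lambda = Omega^T Omega for Omega given as a list of rows (M x N)."""
    n = len(omega[0])
    return [[sum(row[i] * row[j] for row in omega) for j in range(n)] for i in range(n)]


def gmlvq_distance(x, w, omega):
    """d(w, x) = (x - w)^T Omega^T Omega (x - w), computed as ||Omega (x - w)||^2."""
    diff = [xi - wi for xi, wi in zip(x, w)]
    projected = [sum(o * d for o, d in zip(row, diff)) for row in omega]
    return sum(p * p for p in projected)


def quadratic_form(diff, lam):
    """(x - w)^T Lambda (x - w), evaluated directly with Lambda."""
    n = len(diff)
    return sum(diff[i] * lam[i][j] * diff[j] for i in range(n) for j in range(n))


def feature_relevances(omega):
    """Diagonal Lambda_jj, rescaled to sum to 1 (assumed normalization)."""
    lam = relevance_matrix(omega)
    diag = [lam[j][j] for j in range(len(lam))]
    total = sum(diag)
    return [v / total for v in diag]


# Rectangular Omega (1 x 3): a one-dimensional representation of 3 features
omega = [[1.0, 1.0, 0.0]]
x, w = [1.0, 2.0, 3.0], [0.0, 0.0, 0.0]
print(gmlvq_distance(x, w, omega))                               # 9.0
print(quadratic_form([1.0, 2.0, 3.0], relevance_matrix(omega)))  # 9.0
print(feature_relevances(omega))                                 # [0.5, 0.5, 0.0]
```

Writing `Lambda` as `Omega^T Omega` keeps the distance non-negative for any `Omega`. A rectangular `Omega` also yields a low-dimensional projection of the data.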

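![Cost function based training](https://image.slidesharecdn.com/wsom-2016-fdgpet-200706055637/85/2016-Classification-of-FDG-PET-Brain-Data-7-320.jpg)

Cost function based training

one example: Generalized LVQ [Sato & Yamada, 1995]

minimize
$$E = \sum_{i=1}^{P} \Phi\left( \frac{d(\mathbf{w}_J, \mathbf{x}_i) - d(\mathbf{w}_K, \mathbf{x}_i)}{d(\mathbf{w}_J, \mathbf{x}_i) + d(\mathbf{w}_K, \mathbf{x}_i)} \right)$$

two winning prototypes: $\mathbf{w}_J$, the closest prototype with the correct class label, and $\mathbf{w}_K$, the closest prototype with an incorrect class label

- $\Phi$ sigmoidal (linear for small arguments): E approximates the number of misclassifications
- $\Phi$ linear, e.g. $\Phi(z) = z$: E favors large-margin separation of classes
- small $d(\mathbf{w}_J, \mathbf{x}_i)$, large $d(\mathbf{w}_K, \mathbf{x}_i)$: E favors class-typical prototypes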
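The cost function can be evaluated for fixed prototypes as sketched below. This is a minimal sketch under stated assumptions. It uses squared Euclidean distance, though a matrix relevance distance could be swapped in. It takes a steep logistic function as one possible sigmoidal choice of phi; the slide does not specify which sigmoid is used. The gradient-based minimization of E is not shown, and all names are hypothetical.

```python
import math


def sq_dist(a, b):
    """Squared Euclidean distance (plain GLVQ); a GMLVQ distance could be used instead."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b))


def glvq_mu(x, y, protos, proto_labels, dist=sq_dist):
    """(d_J - d_K) / (d_J + d_K) for one example x with label y.

    d_J: distance to the closest prototype with the correct label,
    d_K: distance to the closest prototype with a wrong label.
    Negative values mean x is classified correctly.
    """
    d_J = min(dist(x, w) for w, c in zip(protos, proto_labels) if c == y)
    d_K = min(dist(x, w) for w, c in zip(protos, proto_labels) if c != y)
    return (d_J - d_K) / (d_J + d_K)


def glvq_cost(data, labels, protos, proto_labels, phi):
    """E = sum over all examples of phi(mu)."""
    return sum(phi(glvq_mu(x, y, protos, proto_labels)) for x, y in zip(data, labels))


def logistic(gamma):
    """Logistic function with steepness gamma (assumed sigmoidal choice of phi)."""
    return lambda z: 1.0 / (1.0 + math.exp(-gamma * z))


protos, proto_labels = [[0.0, 0.0], [1.0, 1.0]], ["A", "B"]
data = [[0.1, 0.1], [0.9, 0.9], [0.2, 0.0]]
labels = ["A", "B", "B"]  # the last example lies next to the "A" prototype

e_sigmoid = glvq_cost(data, labels, protos, proto_labels, logistic(50.0))
e_linear = glvq_cost(data, labels, protos, proto_labels, lambda z: z)
print(round(e_sigmoid, 3))  # 1.0: approximately one misclassified example
```

With a steep sigmoid each term is close to 0 or 1, so E approximately counts errors. With the identity, E sums the (negative) margins of all examples.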

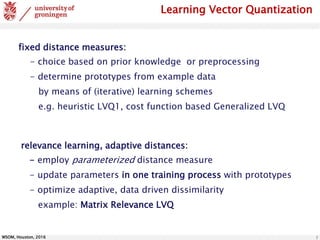

![Classification of FDG-PET data](https://image.slidesharecdn.com/wsom-2016-fdgpet-200706055637/85/2016-Classification-of-FDG-PET-Brain-Data-9-320.jpg)

Classification of FDG-PET data [http://glimpsproject.com]

FDG-PET (fluorodeoxyglucose positron emission tomography): 3D images of glucose uptake

| condition | description | n |
|---|---|---|
| HC | healthy controls | 18 |
| PD | Parkinson's disease | 20 |
| MSA | multiple system atrophy | 21 |
| PSP | progressive supranuclear palsy | 17 |
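For orientation, the class sizes above give the accuracy of a trivial classifier that always predicts the largest class. This baseline is simple arithmetic on the listed numbers, not a result reported in the talk. The variable names are illustrative.

```python
# Class sizes as listed for the FDG-PET data set
class_sizes = {"HC": 18, "PD": 20, "MSA": 21, "PSP": 17}

total = sum(class_sizes.values())
majority = max(class_sizes, key=class_sizes.get)
# accuracy of always predicting the largest class (four-class problem)
baseline = class_sizes[majority] / total

print(total)               # 76
print(majority)            # MSA
print(round(baseline, 3))  # 0.276
```

Because the four classes are nearly balanced, this baseline stays below 30%. A useful four-class classifier should clearly exceed it.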

![Outlook/work in progress](https://image.slidesharecdn.com/wsom-2016-fdgpet-200706055637/85/2016-Classification-of-FDG-PET-Brain-Data-20-320.jpg)

Outlook/work in progress

- optimization of the number of PCs used as features
  - shown to improve decision tree performance
  - potential improvement for other classifiers
- larger data sets
- understanding relevances in voxel-space (PCA)
  - relevant PCs hint at discriminative between-patient variability
- recent example: diagnosis of rheumatoid arthritis based on cytokine expression [L. Yeo et al., Ann. of the Rheumatic Diseases, 2015]
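One possible way to relate relevances learned on PC features back to voxel space starts from the PC scores. Suppose the features are PC scores `z = V^T (x - mean)` with an orthonormal loading matrix `V` (voxels x PCs). Then `(z - z')^T Lambda (z - z') = (x - x')^T V Lambda V^T (x - x')`, so `V Lambda V^T` acts as a relevance matrix over voxels. The sketch below assumes this setup with a hand-made toy `V` instead of an actual PCA. Whether the authors follow exactly this route is not stated on the slide, and all names are hypothetical.

```python
import math


def matmul(a, b):
    """Product of two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a):
    return [list(col) for col in zip(*a)]


def pc_scores(x, mean, V):
    """z = V^T (x - mean): project a flattened image onto the k PCs (columns of V)."""
    centered = [xi - mi for xi, mi in zip(x, mean)]
    return [sum(V[i][j] * centered[i] for i in range(len(V))) for j in range(len(V[0]))]


def quadratic_form(diff, lam):
    n = len(diff)
    return sum(diff[i] * lam[i][j] * diff[j] for i in range(n) for j in range(n))


def voxel_relevance(V, lam_pc):
    """Back-project a PC-space relevance matrix: Lambda_voxel = V Lambda_pc V^T."""
    return matmul(matmul(V, lam_pc), transpose(V))


# Toy example: 3 "voxels", k = 2 orthonormal PCs, only PC 1 relevant
s = 1.0 / math.sqrt(2.0)
V = [[s, 0.0], [s, 0.0], [0.0, 1.0]]  # voxels x PCs
lam_pc = [[1.0, 0.0], [0.0, 0.0]]
mean = [0.0, 0.0, 0.0]
x1, x2 = [1.0, 2.0, 3.0], [0.0, 1.0, 5.0]

z1, z2 = pc_scores(x1, mean, V), pc_scores(x2, mean, V)
d_pc = quadratic_form([a - b for a, b in zip(z1, z2)], lam_pc)
lam_vox = voxel_relevance(V, lam_pc)
d_vox = quadratic_form([a - b for a, b in zip(x1, x2)], lam_vox)
print([round(lam_vox[j][j], 3) for j in range(3)])  # [0.5, 0.5, 0.0]
```

Both distances agree (about 2.0 here). The diagonal of `lam_vox` spreads the relevance of PC 1 over the two voxels that load on it. Changing the number of PCs `k` changes which voxel patterns can receive relevance at all.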