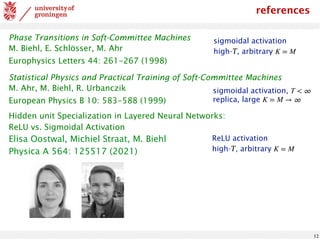

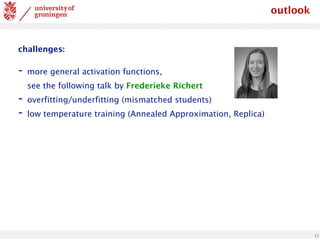

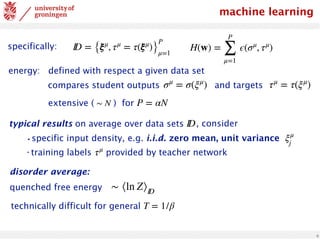

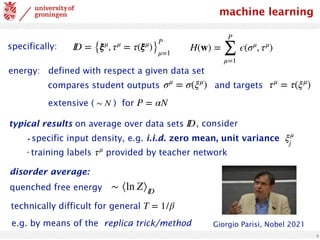

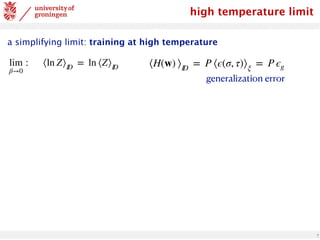

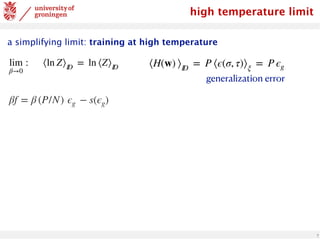

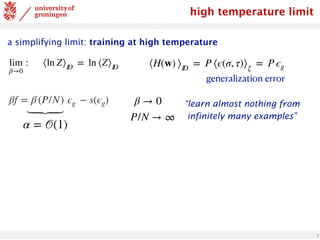

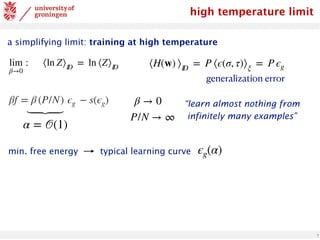

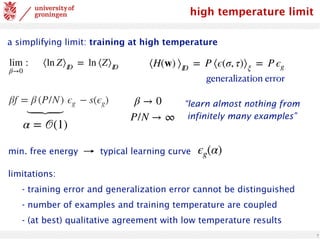

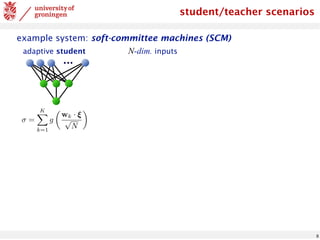

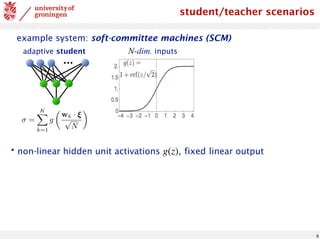

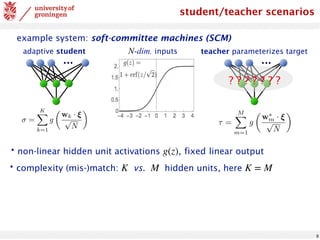

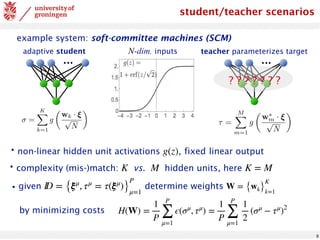

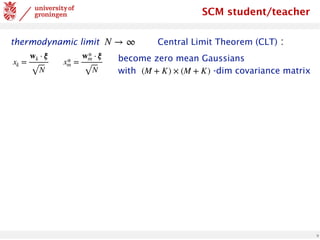

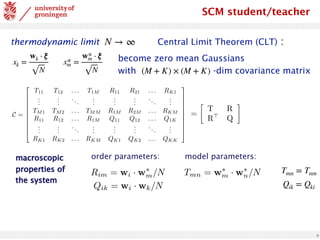

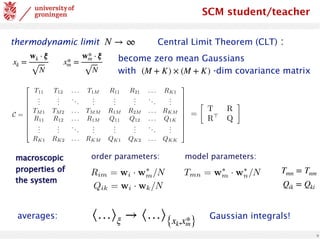

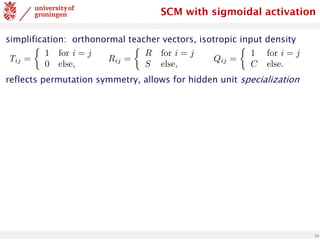

The slides present the statistical physics perspective on learning, focusing on student/teacher models and their typical learning curves. Topics include stochastic optimization, phase transitions in neural networks, the high-temperature limit, and the interplay between training error and generalization error. Soft committee machines serve as a concrete example of these concepts in neural network training and optimization.

![Slide 2: Statistical Physics of Neural Networks](https://image.slidesharecdn.com/stat-phys-appis-reduced-230310212502-286e77fb/85/stat-phys-appis-reduced-pdf-8-320.jpg)

Statistical Physics of Neural Networks

John Hopfield, "Neural networks and physical systems with emergent collective computational abilities", PNAS 79(8): 2554, 1982 [activity of model neurons for given synaptic weights]

Elizabeth Gardner (1957-1988), "The space of interactions in neural networks", J. Phys. A 21: 257, 1988 [synaptic weights determined for given activity patterns]

![Slide 3: stochastic optimization](https://image.slidesharecdn.com/stat-phys-appis-reduced-230310212502-286e77fb/85/stat-phys-appis-reduced-pdf-12-320.jpg)

Stochastic optimization

Consider an objective (cost, energy) function $H(w)$, e.g. with $w \in \mathbb{R}^N$ (later: $N \to \infty$), and a stochastic optimization process, for example:

Metropolis-like updates: small random changes $\Delta w$ of $w$ are accepted with probability

$$\min\{1, \exp[-\beta\,\Delta H]\}.$$

Langevin dynamics, i.e. noisy gradient descent,

$$\frac{\partial w}{\partial t} = -\nabla_w H(w) + f(t),$$

with delta-correlated noise

$$\langle f_j(t)\, f_k(s)\rangle = \frac{2}{\beta}\,\delta_{jk}\,\delta(t - s).$$

The temperature-like parameter $T = 1/\beta$ controls three things. It sets the acceptance rate for uphill moves in Metropolis algorithms. It sets the noise level, i.e. the random deviation from the gradient, in Langevin dynamics. Informally, it expresses "how serious we are about minimizing $H$".
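Both update schemes can be sketched in a few lines of Python. This is a minimal illustration, not the procedure behind the slides. The function names `metropolis` and `langevin`, the Gaussian proposal width `step_size`, and the time step `dt` are illustrative assumptions. The Langevin sketch uses an Euler-Maruyama discretization. It reproduces the noise correlation above by adding Gaussian noise with variance $2\,dt/\beta$ to each component at every step.

```python
import math
import random


def metropolis(H, w, beta, steps, step_size=0.1, seed=0):
    """Metropolis-like updates (illustrative sketch): propose a small random
    change dw and accept it with probability min{1, exp[-beta * dH]}."""
    rng = random.Random(seed)
    w = list(w)
    h = H(w)
    for _ in range(steps):
        w_new = [wi + rng.gauss(0.0, step_size) for wi in w]
        h_new = H(w_new)
        dh = h_new - h
        # Downhill moves are always accepted; uphill moves only with
        # probability exp(-beta * dh), so beta sets the acceptance rate.
        if dh <= 0 or rng.random() < math.exp(-beta * dh):
            w, h = w_new, h_new
    return w


def langevin(grad_H, w, beta, steps, dt=1e-2, seed=0):
    """Euler-Maruyama discretization (illustrative sketch) of
    dw/dt = -grad H(w) + f(t), <f_j(t) f_k(s)> = (2/beta) delta_jk delta(t-s)."""
    rng = random.Random(seed)
    w = list(w)
    noise_std = math.sqrt(2.0 * dt / beta)  # per-step noise amplitude
    for _ in range(steps):
        g = grad_H(w)
        w = [wi - dt * gi + noise_std * rng.gauss(0.0, 1.0)
             for wi, gi in zip(w, g)]
    return w


if __name__ == "__main__":
    # Hypothetical quadratic energy H(w) = |w|^2 / 2 with gradient w.
    H = lambda w: 0.5 * sum(x * x for x in w)
    grad_H = lambda w: list(w)
    for beta in (1.0, 100.0):
        print(beta,
              H(metropolis(H, [3.0, -2.0], beta, 5000)),
              H(langevin(grad_H, [3.0, -2.0], beta, 2000)))
```

At large $\beta$ (low temperature), both samplers end close to the minimum at $w = 0$. At $\beta = 1$ they keep fluctuating, with equilibrium mean energy $N/(2\beta)$ for this quadratic $H$.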

![Slide 4: thermal equilibrium](https://image.slidesharecdn.com/stat-phys-appis-reduced-230310212502-286e77fb/85/stat-phys-appis-reduced-pdf-15-320.jpg)

Thermal equilibrium

Stationary density of configurations (Gibbs-Boltzmann density of states):

$$P(w) = \frac{1}{Z}\,\exp[-\beta H(w)],$$

with normalization given by the partition function (Zustandssumme)

$$Z = \int d^N w\, \exp[-\beta H(w)].$$

• physics: thermal equilibrium of a physical system at temperature T

• optimization: formal equilibrium situation, control parameter T

• thermal averages, e.g.

$$E = \langle H \rangle_T = \int d^N w\, H(w)\, P(w) = -\frac{\partial}{\partial \beta} \ln Z$$

Equilibrium properties are given by (derivatives of) $\ln Z$.
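The identity $E = \langle H \rangle_T = -\partial \ln Z / \partial \beta$ can be checked numerically for a one-dimensional example. The double-well energy below is a hypothetical choice for illustration. The integrals are approximated by a midpoint rule on a finite interval, which assumes that $\exp[-\beta H(w)]$ is negligible outside that interval.

```python
import math


def H(w):
    # Hypothetical 1D double-well energy, used only for illustration.
    return 0.25 * w ** 4 - 0.5 * w ** 2


def integrate(f, a=-6.0, b=6.0, n=20000):
    # Midpoint rule on [a, b]; the Boltzmann factor is negligible beyond |w| = 6.
    h = (b - a) / n
    return h * sum(f(a + (i + 0.5) * h) for i in range(n))


def log_Z(beta):
    # ln Z with Z = integral of exp[-beta H(w)] dw
    return math.log(integrate(lambda w: math.exp(-beta * H(w))))


def mean_energy(beta):
    # Thermal average <H>_T = integral of H(w) P(w) dw, P(w) = exp[-beta H(w)] / Z
    Z = integrate(lambda w: math.exp(-beta * H(w)))
    return integrate(lambda w: H(w) * math.exp(-beta * H(w))) / Z


def mean_energy_from_log_Z(beta, d=1e-4):
    # E = -d(ln Z)/d(beta), approximated by a central finite difference
    return -(log_Z(beta + d) - log_Z(beta - d)) / (2.0 * d)


if __name__ == "__main__":
    for beta in (0.5, 2.0, 10.0):
        print(beta, mean_energy(beta), mean_energy_from_log_Z(beta))
```

The two columns agree up to discretization error. Because $\partial E / \partial \beta = -(\langle H^2 \rangle - \langle H \rangle^2) \le 0$, the mean energy also decreases as $\beta$ grows (lower temperature).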

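![Slide 5: free energy](https://image.slidesharecdn.com/stat-phys-appis-reduced-230310212502-286e77fb/85/stat-phys-appis-reduced-pdf-18-320.jpg)

Free energy

$$Z = \int d^N w\, \exp[-\beta H(w)] = \int dE \int d^N w\, \delta[H(w) - E]\, e^{-\beta E},$$

where the inner integral $\int d^N w\, \delta[H(w) - E]$ measures the volume of states with energy $E$.

Assume an extensive energy, $E = N e$ for $N \to \infty$. Writing the volume of states with energy $E$ as $e^{N s(e)}$ defines the entropy $s(e)$, so that

$$Z = \int dE\, \exp\left[-N\beta\left(e - s(e)/\beta\right)\right].$$

For large $N$ the integral is dominated by the minimum of the free energy

$$f = e - s/\beta \sim -\frac{\ln Z}{\beta N}.$$

The temperature $T = 1/\beta$ controls the competition between minimization of the energy $e$ and maximization of the entropy $s(e)$.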
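The saddle-point argument can be illustrated with a toy model whose entropy is known in closed form. The model is an assumption made for this sketch and does not come from the slides. It consists of $N$ independent two-state units with $H = \sum_i n_i$ and $n_i \in \{0, 1\}$. The number of states with energy $E = Ne$ is then $\binom{N}{Ne} \approx e^{N s(e)}$ with $s(e) = -e \ln e - (1-e)\ln(1-e)$. The state space is discrete, so the integral over $w$ becomes a sum, and $Z = (1 + e^{-\beta})^N$ holds exactly.

```python
import math


def entropy(e):
    # s(e) = lim (1/N) ln binom(N, N e): binary entropy in natural log units
    if e <= 0.0 or e >= 1.0:
        return 0.0
    return -e * math.log(e) - (1.0 - e) * math.log(1.0 - e)


def free_energy_saddle(beta, n_grid=20001):
    # Minimize f(e) = e - s(e)/beta over a grid of energies per unit in [0, 1].
    return min(e - entropy(e) / beta
               for e in (i / (n_grid - 1) for i in range(n_grid)))


def free_energy_exact(beta):
    # -ln Z / (beta N) for the toy model, Z = (1 + exp(-beta))^N exactly.
    return -math.log1p(math.exp(-beta)) / beta


def free_energy_largest_term(beta, N):
    # Keep only the largest term of Z = sum_n binom(N, n) exp(-beta n).
    log_terms = [math.lgamma(N + 1) - math.lgamma(n + 1)
                 - math.lgamma(N - n + 1) - beta * n for n in range(N + 1)]
    return -max(log_terms) / (beta * N)


if __name__ == "__main__":
    beta = 1.0
    print(free_energy_saddle(beta), free_energy_exact(beta))
    for N in (10, 100, 1000):
        print(N, free_energy_largest_term(beta, N))
```

The minimum of $e - s(e)/\beta$ reproduces $-\ln Z/(\beta N)$. The single largest term of the sum approaches the same value as $N$ grows, which is the sense in which $Z$ is "dominated by the minimum of the free energy".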

![10

simplification: orthonormal teacher vectors, isotropic input density

reflects permutation symmetry, allows for hidden unit specialization

Tij =

⇢

1 for i = j

0 else,

Rij =

⇢

R for i = j

S else,

Qij =

⇢

1 for i = j

C else.

<latexit

sha1_base64="z3U/1x66FQ8CCAKv5dCLWIcbiuE=">AAADPXicpVI7b9RAEF6bQBLzukBJMyICUSDLTiSSJlIgDWVel0S6PZ3We+O7TdZra3eNOFm+X5Ffk4aCf0BHR0PBQ4guLeu7gMhDCMRIK3365vHNzE5SSGFsFL33/Gsz12/Mzs0HN2/dvnO3tXBvz+Sl5tjmucz1QcIMSqGwbYWVeFBoZFkicT852mj8+69QG5GrXTsqsJuxgRKp4Mw6qrfg7ez2KnFYwxoAlZhaWgFNcCBUxbRmoxoqKesAYngMNEvy11Wa63ENYu0QKAUIIPrlQWnwad3QAVBU/Z8VqBaDoQ2DadR4PK6D7b8S3b5SdD6AnX/XdKJb/zGpE904Jxr+QbTXWozCaGJwGcRnYHH92ZfjF8/fft/std7Rfs7LDJXlkhnTiaPCdl1ZK7jEOqClwYLxIzbAjoOKZWi61eT3a3jkmD64Zt1TFibs7xkVy4wZZYmLzJgdmou+hrzK1yltutqthCpKi4pPhdJSgs2hOSXoC43cypEDjGvhegU+ZJpx6w6uWUJ8ceTLYG8pjJfDpS23jVUytTnygDwkT0hMVsg6eUk2SZtw78T74H3yPvtv/I/+V//bNNT3znLuk3Pmn/4AGwYIbw==</latexit>

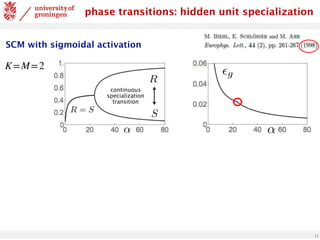

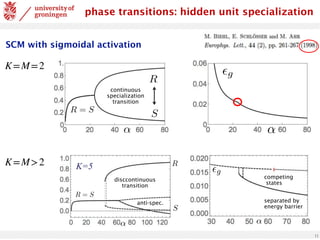

SCM with sigmoidal activation

✏g =

1

K

⇢

1

3

+

K 1

⇡

sin 1

✓

C

2

◆

2 sin 1

✓

S

2

◆

2

⇡

sin 1

✓

R

2

◆

<latexit

sha1_base64="050svsyqr1f80CEz0aVNkCq3F3g=">AAACx3icbVHLbtQwFHVSHiU8OpQlG4cKqQh1lKQSdIPUUhagbspj2krjMHI8zoyp85B9U3VkecE/8Ef8ATs2/AAbPgFPMkKlM1eyfHwesn1vVkuhIYp+ev7ajZu3bq/fCe7eu/9go/dw80RXjWJ8wCpZqbOMai5FyQcgQPKzWnFaZJKfZueHc/30gistqvITzGqeFnRSilwwCo4a9X4RXmshHZxgEr4iISa5oszE1hxZjInkORDzj9u1JHxOwqAjjki4Q0JHk1rY1jrERIvys9mJu/N2Zzy0JrFEickUnrUZnKz0fbzqa7c0wG2g05PFVauyH5ayxAaj3lbUj9rCyyBegK39F7+/vT74/ud41PtBxhVrCl4Ck1TrYRzVkBqqQDDJbUAazWvKzumEDx0sacF1ato5WPzUMWOcV8qtEnDLXk0YWmg9KzLnLChM9XVtTq7Shg3ke6kRZd0AL1l3Ud5IDBWeDxWPheIM5MwBypRwb8VsSl1XwI1+3oT4+peXwUnSj3f7yXvXjT3U1Tp6jJ6gbRSjl2gfvUXHaICY98b74mkP/Hd+5V/4l53V9xaZR+i/8r/+BbLn4Fo=</latexit>

=

⟨

1

2

(σ − τ)2

⟩

ξ

s =

1

2

ln det[ C ] (+ constant)

s =

1

2

ln

h

1+(K 1)C ((R S)+KS)

2

i

+K 1

2 ln

⇥

1 C (R S)2

⇤

<latexit

sha1_base64="R1D/dvB51gM9AtxPyLBvGiIN87s=">AAAC53icbZLLjtMwFIadcBvCrcCSjUtF1TKiSsKC2SCNNBukbgaGzoxUl8pxTlprHCfYDiKK+gJsWIAQW16JHS+DcC6gzgxHsvz7nN/57ONEueDa+P4vx71y9dr1Gzs3vVu379y917v/4FhnhWIwY5nI1GlENQguYWa4EXCaK6BpJOAkOjuo6ycfQGmeybemzGGR0pXkCWfU2NSy95tEsOKygveSKkXLpxtMBI1AVHpqpYGPRptSAPb08OUQk0RRVgWbKqx90g5IzBwHpL9L+qMp6T8j/WB80MxNbTR60yyOxo1lio+I4qu1Gb8LcasWmMhMFmkEChPiDfEQ7+Jqi9wyu2/XZIuOuc4FLTvDuYNYV8v/R7aovyyPgIy3LrvsDfyJ3wS+LIJODFAXh8veTxJnrEhBGiao1vPAz82iospwJmDjkUJDTtkZXcHcSklT0IuqeacNfmIzMU4yZYc0uMlu76hoqnWZRtaZUrPWF2t18n+1eWGSvUXFZV4YkKwFJYXAJsP1o+OYK2BGlFZQprg9K2Zrattq7K/h2SYEF698WRyHk+D5JHwdDvb3unbsoEfoMRqhAL1A++gVOkQzxJzY+eR8cb663P3sfnO/t1bX6fY8ROfC/fEHhtPgvQ==</latexit>

(+ constant)](https://image.slidesharecdn.com/stat-phys-appis-reduced-230310212502-286e77fb/85/stat-phys-appis-reduced-pdf-37-320.jpg)

![10

simplification: orthonormal teacher vectors, isotropic input density

reflects permutation symmetry, allows for hidden unit specialization

Tij =

⇢

1 for i = j

0 else,

Rij =

⇢

R for i = j

S else,

Qij =

⇢

1 for i = j

C else.

<latexit

sha1_base64="z3U/1x66FQ8CCAKv5dCLWIcbiuE=">AAADPXicpVI7b9RAEF6bQBLzukBJMyICUSDLTiSSJlIgDWVel0S6PZ3We+O7TdZra3eNOFm+X5Ffk4aCf0BHR0PBQ4guLeu7gMhDCMRIK3365vHNzE5SSGFsFL33/Gsz12/Mzs0HN2/dvnO3tXBvz+Sl5tjmucz1QcIMSqGwbYWVeFBoZFkicT852mj8+69QG5GrXTsqsJuxgRKp4Mw6qrfg7ez2KnFYwxoAlZhaWgFNcCBUxbRmoxoqKesAYngMNEvy11Wa63ENYu0QKAUIIPrlQWnwad3QAVBU/Z8VqBaDoQ2DadR4PK6D7b8S3b5SdD6AnX/XdKJb/zGpE904Jxr+QbTXWozCaGJwGcRnYHH92ZfjF8/fft/std7Rfs7LDJXlkhnTiaPCdl1ZK7jEOqClwYLxIzbAjoOKZWi61eT3a3jkmD64Zt1TFibs7xkVy4wZZYmLzJgdmou+hrzK1yltutqthCpKi4pPhdJSgs2hOSXoC43cypEDjGvhegU+ZJpx6w6uWUJ8ceTLYG8pjJfDpS23jVUytTnygDwkT0hMVsg6eUk2SZtw78T74H3yPvtv/I/+V//bNNT3znLuk3Pmn/4AGwYIbw==</latexit>

SCM with sigmoidal activation

✏g =

1

K

⇢

1

3

+

K 1

⇡

sin 1

✓

C

2

◆

2 sin 1

✓

S

2

◆

2

⇡

sin 1

✓

R

2

◆

<latexit

sha1_base64="050svsyqr1f80CEz0aVNkCq3F3g=">AAACx3icbVHLbtQwFHVSHiU8OpQlG4cKqQh1lKQSdIPUUhagbspj2krjMHI8zoyp85B9U3VkecE/8Ef8ATs2/AAbPgFPMkKlM1eyfHwesn1vVkuhIYp+ev7ajZu3bq/fCe7eu/9go/dw80RXjWJ8wCpZqbOMai5FyQcgQPKzWnFaZJKfZueHc/30gistqvITzGqeFnRSilwwCo4a9X4RXmshHZxgEr4iISa5oszE1hxZjInkORDzj9u1JHxOwqAjjki4Q0JHk1rY1jrERIvys9mJu/N2Zzy0JrFEickUnrUZnKz0fbzqa7c0wG2g05PFVauyH5ayxAaj3lbUj9rCyyBegK39F7+/vT74/ud41PtBxhVrCl4Ck1TrYRzVkBqqQDDJbUAazWvKzumEDx0sacF1ato5WPzUMWOcV8qtEnDLXk0YWmg9KzLnLChM9XVtTq7Shg3ke6kRZd0AL1l3Ud5IDBWeDxWPheIM5MwBypRwb8VsSl1XwI1+3oT4+peXwUnSj3f7yXvXjT3U1Tp6jJ6gbRSjl2gfvUXHaICY98b74mkP/Hd+5V/4l53V9xaZR+i/8r/+BbLn4Fo=</latexit>

=

⟨

1

2

(σ − τ)2

⟩

ξ

s =

1

2

ln det[ C ] (+ constant)

s =

1

2

ln

h

1+(K 1)C ((R S)+KS)

2

i

+K 1

2 ln

⇥

1 C (R S)2

⇤

<latexit

sha1_base64="R1D/dvB51gM9AtxPyLBvGiIN87s=">AAAC53icbZLLjtMwFIadcBvCrcCSjUtF1TKiSsKC2SCNNBukbgaGzoxUl8pxTlprHCfYDiKK+gJsWIAQW16JHS+DcC6gzgxHsvz7nN/57ONEueDa+P4vx71y9dr1Gzs3vVu379y917v/4FhnhWIwY5nI1GlENQguYWa4EXCaK6BpJOAkOjuo6ycfQGmeybemzGGR0pXkCWfU2NSy95tEsOKygveSKkXLpxtMBI1AVHpqpYGPRptSAPb08OUQk0RRVgWbKqx90g5IzBwHpL9L+qMp6T8j/WB80MxNbTR60yyOxo1lio+I4qu1Gb8LcasWmMhMFmkEChPiDfEQ7+Jqi9wyu2/XZIuOuc4FLTvDuYNYV8v/R7aovyyPgIy3LrvsDfyJ3wS+LIJODFAXh8veTxJnrEhBGiao1vPAz82iospwJmDjkUJDTtkZXcHcSklT0IuqeacNfmIzMU4yZYc0uMlu76hoqnWZRtaZUrPWF2t18n+1eWGSvUXFZV4YkKwFJYXAJsP1o+OYK2BGlFZQprg9K2Zrattq7K/h2SYEF698WRyHk+D5JHwdDvb3unbsoEfoMRqhAL1A++gVOkQzxJzY+eR8cb663P3sfnO/t1bX6fY8ROfC/fEHhtPgvQ==</latexit>

(+ constant)

David Saad, Sara Solla

Robert Urbanczik](https://image.slidesharecdn.com/stat-phys-appis-reduced-230310212502-286e77fb/85/stat-phys-appis-reduced-pdf-38-320.jpg)

![Slide 10: SCM with sigmoidal activation](https://image.slidesharecdn.com/stat-phys-appis-reduced-230310212502-286e77fb/85/stat-phys-appis-reduced-pdf-39-320.jpg)

SCM with sigmoidal activation

Simplification: orthonormal teacher vectors and an isotropic input density. The order parameters take the form

$$T_{ij} = \begin{cases} 1 & \text{for } i = j \\ 0 & \text{else,} \end{cases} \qquad R_{ij} = \begin{cases} R & \text{for } i = j \\ S & \text{else,} \end{cases} \qquad Q_{ij} = \begin{cases} 1 & \text{for } i = j \\ C & \text{else.} \end{cases}$$

This ansatz reflects the permutation symmetry of the hidden units and allows for hidden unit specialization.

Generalization error:

$$\epsilon_g = \left\langle \tfrac{1}{2}(\sigma - \tau)^2 \right\rangle_{\xi} = \frac{1}{K}\left\{ \frac{1}{3} + \frac{K-1}{\pi}\left[\sin^{-1}\!\left(\frac{C}{2}\right) - 2\sin^{-1}\!\left(\frac{S}{2}\right)\right] - \frac{2}{\pi}\sin^{-1}\!\left(\frac{R}{2}\right)\right\}$$

Entropy, with $\mathcal{C}$ the matrix of all order parameters $Q_{ij}$, $R_{ij}$, $T_{ij}$:

$$s = \frac{1}{2}\ln\det[\mathcal{C}] \;(+\text{constant}) = \frac{1}{2}\ln\left[1 + (K-1)C - \big((R-S) + KS\big)^2\right] + \frac{K-1}{2}\ln\left[1 - C - (R-S)^2\right] \;(+\text{constant})$$

Minimizing $(\beta f) = \alpha\,\epsilon_g(R,S,C) - s(R,S,C)$ yields $R(\alpha)$, $C(\alpha)$, $S(\alpha)$ and hence $\epsilon_g(\alpha)$: the success of learning as a function of the training set size $\alpha$.

David Saad, Sara Solla

Robert Urbanczik
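The two expressions for $\epsilon_g$ and $s$ can be transcribed directly, and the minimization of $\beta f$ can be sketched with a crude grid search. Several choices here are assumptions of this sketch, not the method behind the slides: the grid search itself, the restriction of $R$, $S$, $C$ to $[-1, 1]$, and the function names. A careful study would use a proper optimizer and track competing local minima. Points where the order-parameter matrix is not positive definite (a log argument $\le 0$) are excluded by assigning them entropy $-\infty$, and the additive constant in $s$ is dropped.

```python
import math


def eps_g(R, S, C, K):
    """Generalization error of the SCM with sigmoidal activation under the
    symmetric ansatz T_ij = delta_ij, R_ii = R, R_ij = S, Q_ii = 1, Q_ij = C."""
    return (1.0 / K) * (
        1.0 / 3.0
        + (K - 1) / math.pi * (math.asin(C / 2) - 2 * math.asin(S / 2))
        - 2 / math.pi * math.asin(R / 2)
    )


def entropy(R, S, C, K):
    """s = 1/2 ln det[C] up to a constant; -inf where the order-parameter
    matrix is not positive definite."""
    a = 1 + (K - 1) * C - ((R - S) + K * S) ** 2
    b = 1 - C - (R - S) ** 2
    if a <= 0 or b <= 0:
        return -math.inf
    return 0.5 * math.log(a) + 0.5 * (K - 1) * math.log(b)


def minimize_beta_f(alpha, K, n=41):
    """Crude grid search (hypothetical helper) for the minimum of
    beta*f = alpha * eps_g - s over (R, S, C) in [-1, 1]^3.
    Returns (beta_f, R, S, C)."""
    grid = [-1 + 2 * i / (n - 1) for i in range(n)]
    best = None
    for R in grid:
        for S in grid:
            for C in grid:
                s = entropy(R, S, C, K)
                if s == -math.inf:
                    continue  # outside the physically allowed region
                val = alpha * eps_g(R, S, C, K) - s
                if best is None or val < best[0]:
                    best = (val, R, S, C)
    return best


if __name__ == "__main__":
    K = 2
    for alpha in (0.0, 1.0, 5.0, 20.0):
        _, R, S, C = minimize_beta_f(alpha, K)
        print(alpha, R, S, C, eps_g(R, S, C, K))
```

Perfect generalization ($R = 1$, $S = C = 0$) gives $\epsilon_g = 0$ but entropy $-\infty$, so it is reached only as $\alpha \to \infty$. For any finite $\alpha$ the minimum balances the two terms. Along the global minimum, $\epsilon_g$ cannot increase with $\alpha$.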