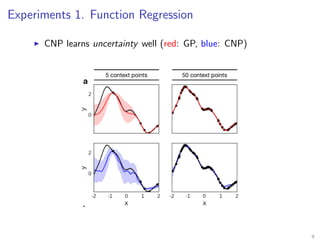

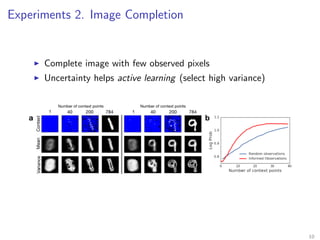

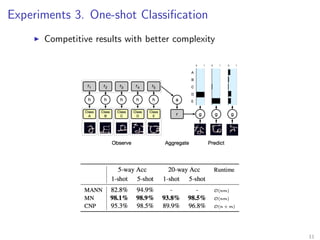

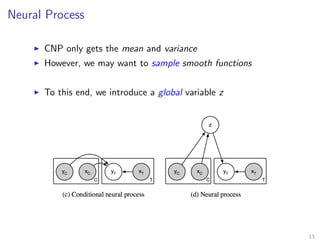

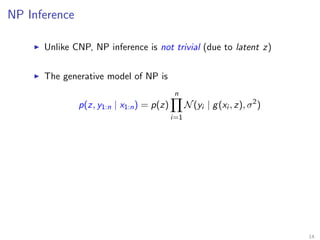

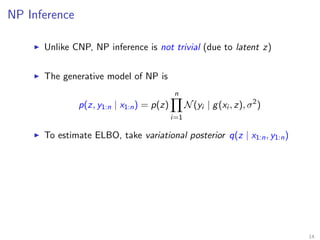

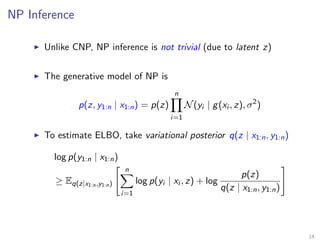

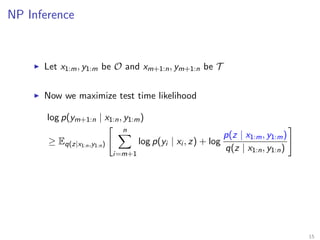

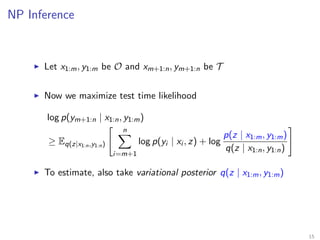

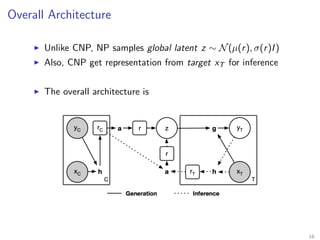

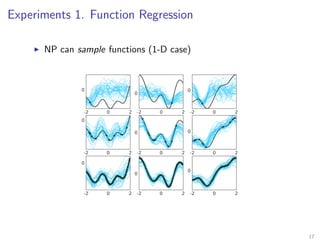

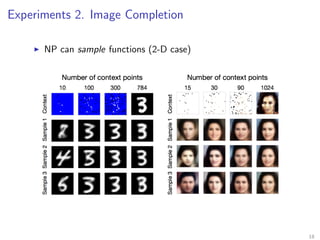

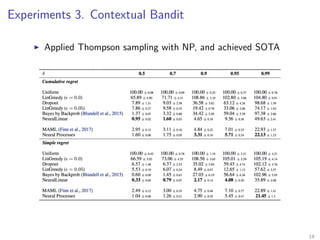

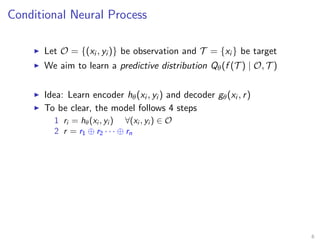

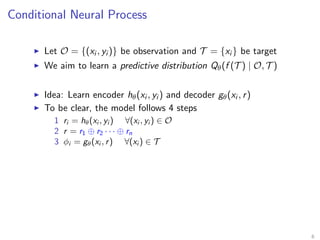

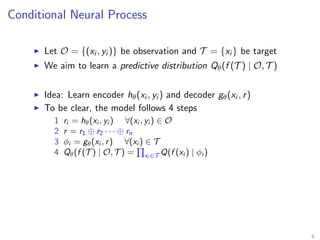

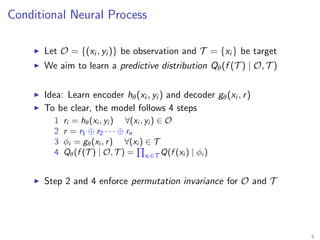

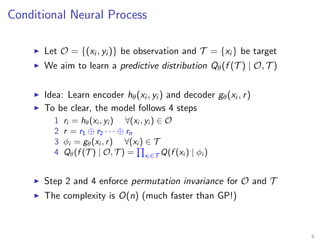

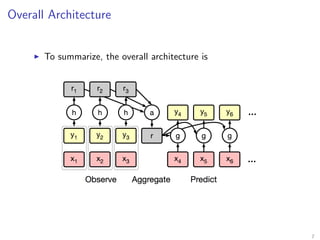

The document introduces Neural Processes (NP) and Conditional Neural Processes (CNP), models that learn distributions over functions rather than a single function, aiming to combine strengths of neural networks and Gaussian Processes. CNP models the predictive distribution given a set of observations, while NP adds a global latent variable, which lets it draw coherent function samples and improves its uncertainty estimates. The document covers architecture, complexity, training methods, and experiments on tasks such as regression and image completion.

![Training CNP

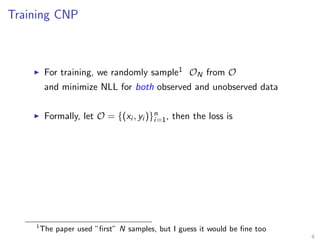

For training, we randomly sample1 ON from O

and minimize NLL for both observed and unobserved data

Formally, let O = {(xi , yi )}n

i=1, then the loss is

L(θ) = −Ef ∼P [EN [log Qθ(y1:n | ON, x1:n)]]

1

The paper used ”first” N samples, but I guess it would be fine too

8](https://image.slidesharecdn.com/180828neuralprocess-180828041301/85/Neural-Processes-25-320.jpg)
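The sampling-and-NLL procedure for training CNP can be sketched in plain Python. This is a minimal sketch under stated assumptions, not the authors' implementation: `toy_predict` is a hypothetical stand-in for the network $Q_\theta$, the predictive distribution is assumed to factorize into independent Gaussians over the targets, $N$ is assumed to be drawn uniformly from 1 to $n$, and the helper names (`gaussian_nll`, `sample_context`, `cnp_loss_estimate`) are illustrative only.

```python
import math
import random


def gaussian_nll(y, mean, std):
    """Negative log-density of y under a Gaussian N(mean, std^2)."""
    return 0.5 * math.log(2.0 * math.pi * std ** 2) + (y - mean) ** 2 / (2.0 * std ** 2)


def sample_context(observations, rng):
    """Draw N uniformly from 1..n (an assumption) and return a random subset O_N."""
    n = len(observations)
    num_context = rng.randint(1, n)  # randint is inclusive on both ends
    return rng.sample(observations, num_context)


def toy_predict(context, target_xs):
    """Hypothetical stand-in for Q_theta: predicts the context mean with unit std."""
    ys = [y for _, y in context]
    mean = sum(ys) / len(ys)
    return [(mean, 1.0) for _ in target_xs]


def cnp_loss_estimate(observations, predict, rng, num_draws=16):
    """Monte Carlo estimate of -E_N[log Q(y_{1:n} | O_N, x_{1:n})] for one function f.

    The NLL is summed over all n points, i.e. both the observed (context)
    points and the unobserved ones.
    """
    xs = [x for x, _ in observations]
    total = 0.0
    for _ in range(num_draws):
        context = sample_context(observations, rng)
        preds = predict(context, xs)  # one (mean, std) pair per target
        total += sum(
            gaussian_nll(y, mean, std)
            for (_, y), (mean, std) in zip(observations, preds)
        )
    return total / num_draws


if __name__ == "__main__":
    rng = random.Random(0)
    # One function f, observed at n = 5 points (toy data: f(x) = sin(x)).
    observations = [(0.5 * i, math.sin(0.5 * i)) for i in range(5)]
    print(cnp_loss_estimate(observations, toy_predict, rng))
```

The outer expectation over $f \sim P$ would be approximated by averaging `cnp_loss_estimate` over a batch of functions. In a real model, `predict` would be a trainable encoder-aggregator-decoder and the loss would be minimized by gradient descent on $\theta$.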