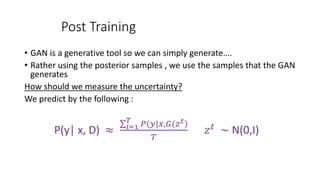

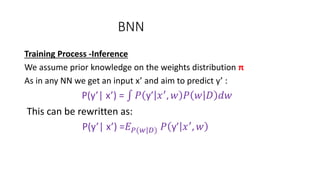

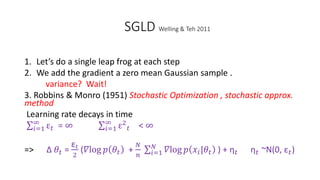

Generative adversarial networks (GANs) can be used to approximate the posterior distributions of Bayesian neural networks (BNNs). GANs are trained to generate samples from the posterior distribution learned by a BNN using stochastic gradient Langevin dynamics (SGLD). Specifically, a Wasserstein GAN with gradient penalty (WGAN-GP) is trained to match the posterior distribution by minimizing the Wasserstein distance between samples from the BNN's SGLD-approximated posterior and samples from the GAN's generator. This adversarial distillation technique allows parallel sampling from the BNN's posterior using only the GAN's parameters, providing computational and storage advantages over traditional Markov chain Monte Carlo methods for BNNs.

![GAN

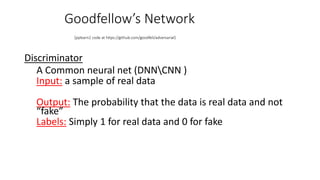

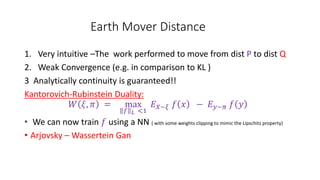

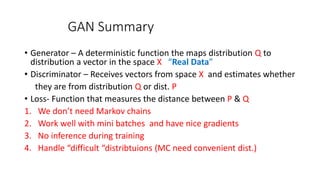

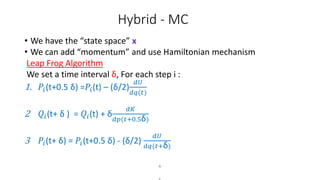

Generator

A Common neural net (DNN Goodfellow’s work)

Input: A generic distribution (GaussianUniform)

Output: Data sample from “real data” space such as fake images

Loss

𝒎𝒊𝒏

𝑮

𝒎𝒂𝒙

𝑫

(𝑽 𝑮, 𝑫 ) = 𝑬 𝒙~𝑷 𝒅𝒂𝒕𝒂(𝒙)[𝒍𝒐𝒈( 𝑫(𝒙))] + 𝑬 𝒛~𝑷(𝒛)[𝟏 − 𝒍𝒐𝒈( 𝑫(𝑮(𝒛))]](https://image.slidesharecdn.com/gbnn1autosaved-190107081957/85/GAN-for-Bayesian-Inference-objectives-10-320.jpg)

![Wasserstein Distance

A distance between prob. Measures:

𝑊𝑝(𝜉, 𝜋) = min

𝛾∊𝛤

E[𝑑(𝑥, 𝑦) 𝑝 ]

ξ and π are the marginals of X and Y respectively

We discuss only 𝑊1 the Earth Mover Distance](https://image.slidesharecdn.com/gbnn1autosaved-190107081957/85/GAN-for-Bayesian-Inference-objectives-12-320.jpg)

![WGAN - GP

• As said Lipschitz property has not fully achieved

Gulrajani, Arjovsky Improved WGAN “WGAN-GP”

Rather weights clipping we add gradient penalty

L= 𝐸 𝑋~𝜉 𝐷 𝑥 − 𝐸 𝑦~𝜋 [𝐷 𝑦 ]+λ𝐸𝑧 [( 𝛻z 𝐷(𝑧) 2−1)2]

z =𝜀𝑥 + (1 − 𝜀) 𝑦 𝜉, 𝜋 Distributions

𝜀 ~ U[0,1]](https://image.slidesharecdn.com/gbnn1autosaved-190107081957/85/GAN-for-Bayesian-Inference-objectives-14-320.jpg)

![Uncertainty Estimation Methods

1. Conditional entropy:

H(P(y|x)) = 𝑦∈𝑌 𝑃(𝑦|𝑥) log 𝑃 𝑦 𝑥

Entropy can’t differentiate between epistemic & aleatoric uncertainty

2. Inform. Gain (info gain over params values upon input prediction)

I(w,y |x,D) =H[p(y|x, D)]- 𝐸 𝑝 𝑤 𝐷 𝐻[𝑝 𝑦 𝑥, 𝑤 ]

It well measures the epistemic uncertainty because little info implies that

the parameter is well known

3. (VR) variation-ratio :

VR(x) =1- 𝒕 𝟏 𝒚 𝒕=𝒄∗

𝑻](https://image.slidesharecdn.com/gbnn1autosaved-190107081957/85/GAN-for-Bayesian-Inference-objectives-18-320.jpg)

![What is Hamiltonian?

• Operator that measures the total energy of an system

Two sets of coordinates

q -State coordinates (generalized coordinates)

p- Momentum

H(p, q) =U(q) +K(p)

U(q) = log[π 𝑞 𝐿(𝑞|𝐷)] K(P)=

𝑝 2

2𝑚

U-Potential energy, K –Kinetic

𝑑𝐻

𝑑𝑝

= 𝑞 ,

𝑑𝐻

𝑑𝑞

= - 𝑝](https://image.slidesharecdn.com/gbnn1autosaved-190107081957/85/GAN-for-Bayesian-Inference-objectives-28-320.jpg)

![HMC

Algorithm (Neal 1995, 2012, Duane 1987)

1. Draw 𝑥0 from our prior

Draw 𝑝0 from standard normal dist.

2. Perform L steps of leapfrog

3 Pick the 𝑥 𝑡 upon M.H step

min [ 1, exp(−U(q ∗ ) + U(q) − K(p ∗ ) + K(p))]](https://image.slidesharecdn.com/gbnn1autosaved-190107081957/85/GAN-for-Bayesian-Inference-objectives-31-320.jpg)

![GAN meets BNN

Adversarial Distillation of Bayesian Neural Network Posteriors

Basic Idea

• Train GAN to create posterior distribution of BNN

• We use WGAN-GP as loss function:

L = 𝑬 𝜭~𝑷 𝜭

[𝑫(θ)] -𝑬 𝝃~𝑷 𝒓

[𝑫(𝝃)] + λ 𝑬 𝜭~𝑷 𝜭

(〖 𝜵𝑫 θ 𝟐〗 − 𝟏) 𝟐

Two Steps Training

1. Create sample from the posterior using SGLD mechanism

2. Train the WGAN-GP to sample from this posterior](https://image.slidesharecdn.com/gbnn1autosaved-190107081957/85/GAN-for-Bayesian-Inference-objectives-38-320.jpg)