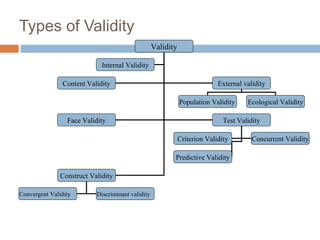

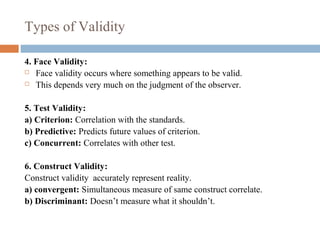

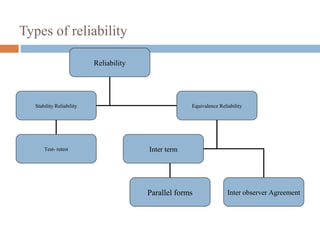

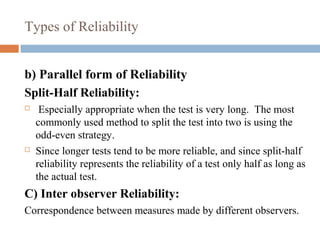

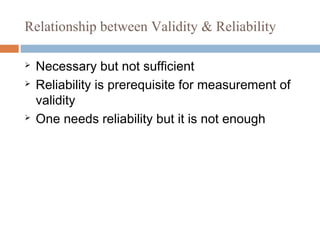

This document discusses validity and reliability in testing. Validity is the degree to which a test measures what it is intended to measure; the types covered are external, internal, content, face, criterion, construct, and predictive validity. Reliability is the consistency with which a test measures whatever it measures; the main types are stability (test-retest), equivalence (inter-item and parallel forms), and inter-observer reliability. A test cannot validly measure a construct unless it is reliable, but a reliable test can still consistently measure the wrong thing, so reliability is a necessary but not sufficient condition for validity.
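
Two of the reliability types named above are commonly quantified with simple statistics. The following is a minimal sketch, not a method taken from the document: it assumes test-retest stability is estimated with a Pearson correlation between two administrations, and inter-item consistency with Cronbach's alpha. The function names and all scores are hypothetical, chosen only for illustration.

```python
from math import sqrt
from statistics import mean, variance


def pearson_r(x, y):
    """Pearson correlation between two equally long lists of scores."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def cronbach_alpha(items):
    """Cronbach's alpha for a list of items.

    `items` holds one list per test item; each inner list has one score
    per respondent, in the same respondent order.
    """
    k = len(items)
    # Total score of each respondent across all items.
    totals = [sum(scores) for scores in zip(*items)]
    item_variance_sum = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


# Hypothetical data: the same five people take the same test twice.
first_sitting = [12, 15, 11, 18, 14]
second_sitting = [13, 14, 10, 19, 15]
print("test-retest r:", round(pearson_r(first_sitting, second_sitting), 3))

# Hypothetical data: three items answered by the same five people.
item_scores = [
    [3, 4, 2, 5, 4],
    [2, 4, 3, 5, 3],
    [3, 5, 2, 4, 4],
]
print("Cronbach's alpha:", round(cronbach_alpha(item_scores), 3))
```

High values from either function indicate only that the scores are consistent. They say nothing about whether the test measures the intended construct, which is why reliability alone does not establish validity.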