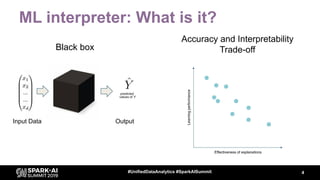

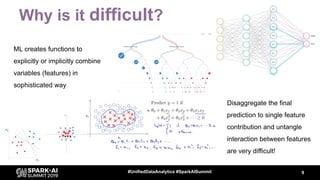

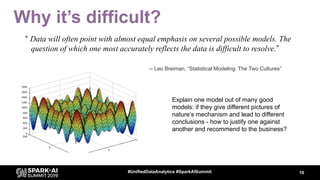

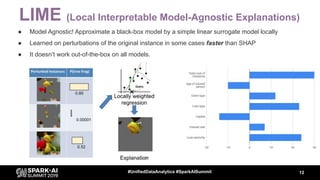

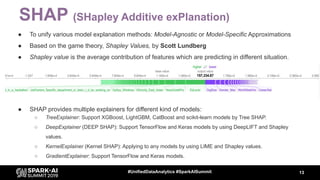

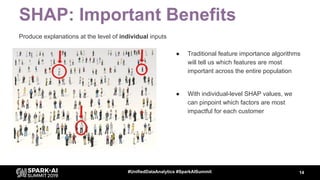

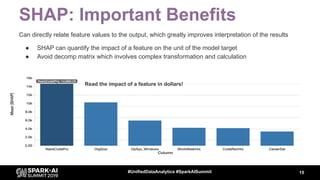

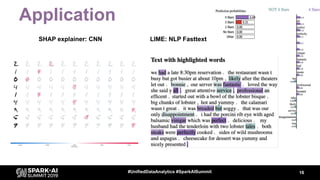

The document discusses why machine learning model interpretability matters and how it is achieved, focusing on two key techniques: SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations). It highlights the challenges of understanding model decisions, including the complexity of the functions that models learn and biases that can harm vulnerable groups. It emphasizes that transparency in AI decision-making is needed for trust, legal compliance, and ethical reasons.