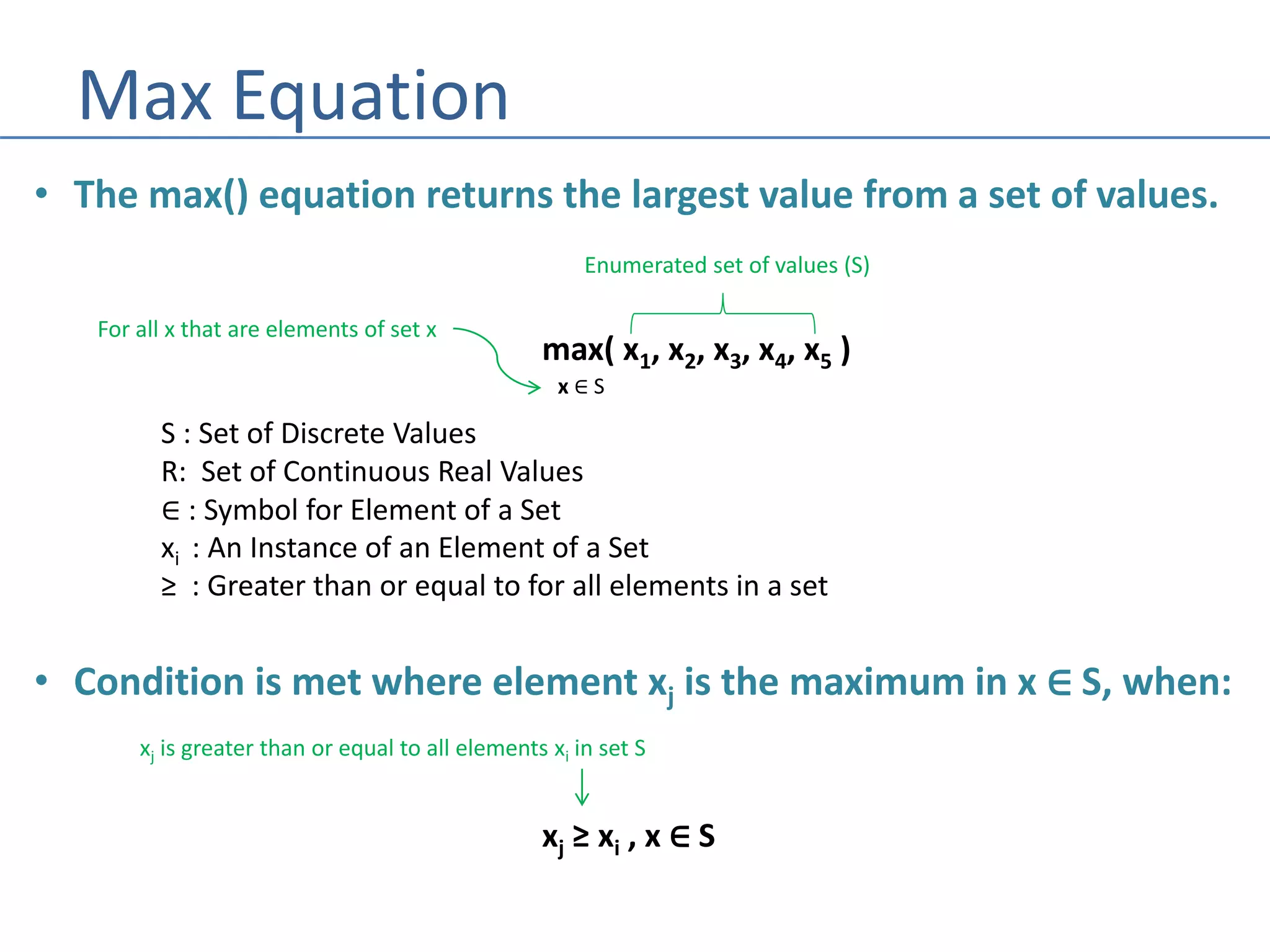

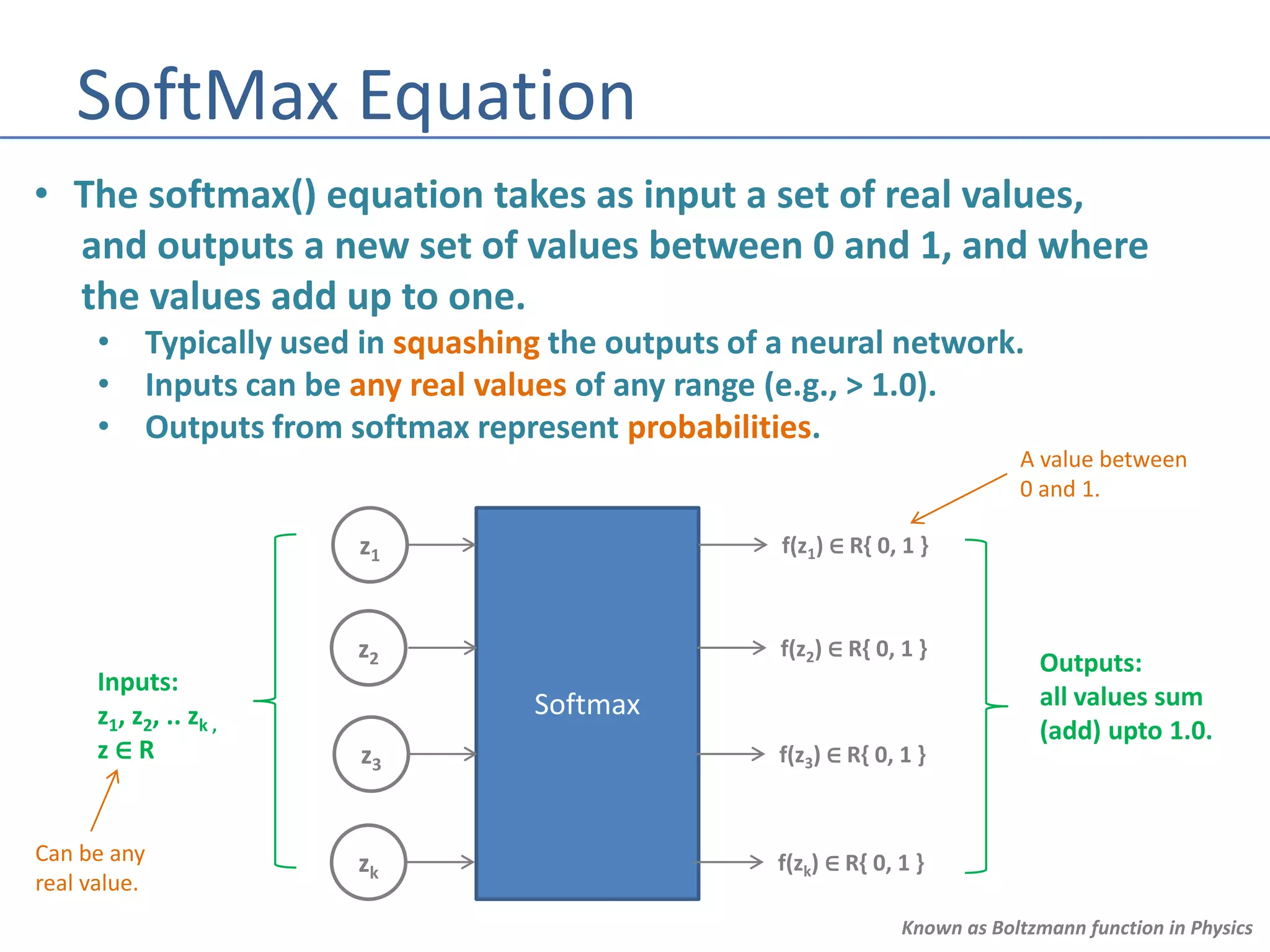

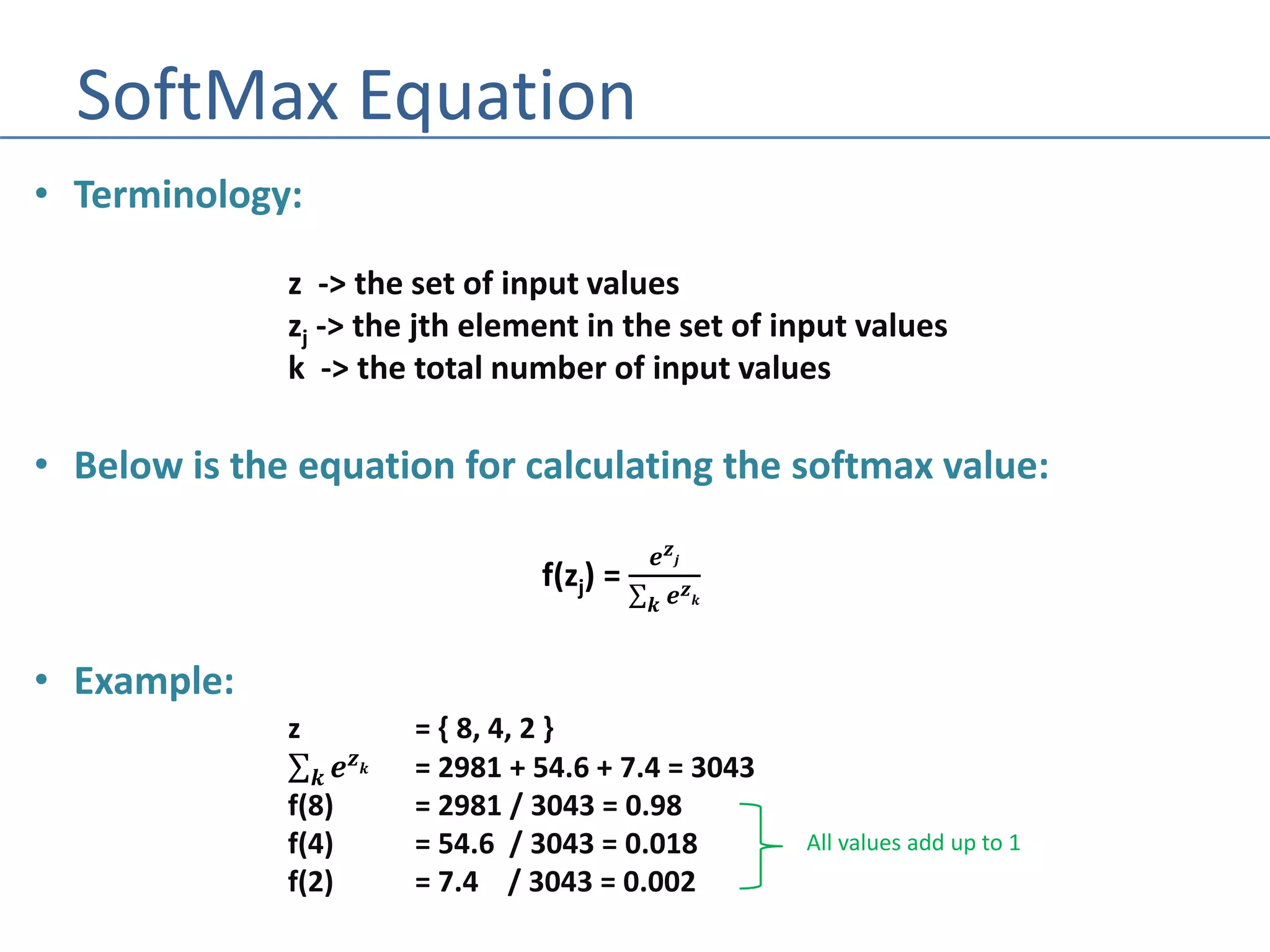

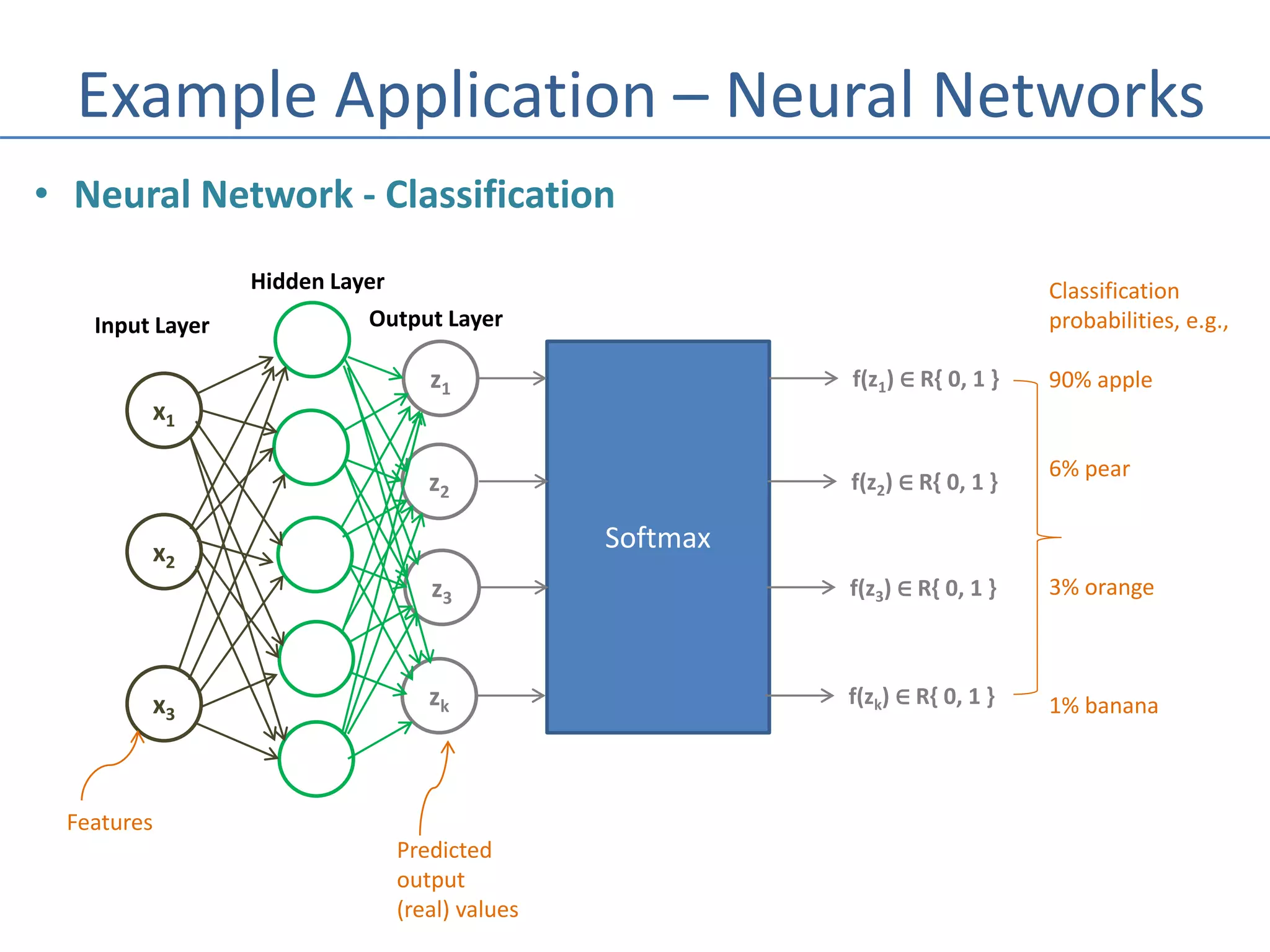

The document explains the max and softmax equations. Max identifies the largest value in a set, while softmax transforms a set of real numbers into a probability distribution. Each softmax output lies between 0 and 1, and the outputs sum to 1, which is why softmax is typically applied to the final outputs of a neural network in classification tasks. The document also shows how to apply softmax in Python with the Torch (PyTorch) library, computing output probabilities from a list of input values.
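To make the two equations concrete, here is a minimal from-scratch sketch in plain Python (standard library only). It assumes the usual definition $\mathrm{softmax}(z)_i = e^{z_i} / \sum_j e^{z_j}$ and a non-empty input list. The helper names `max_value` and `softmax` are hypothetical. Subtracting the maximum before exponentiating is a common numerical-stability technique rather than something the slides state; it leaves the result unchanged because the factor $e^{-m}$ cancels in the numerator and denominator.

```python
import math

def max_value(values):
    """Return the largest element of a non-empty list (the 'max' equation)."""
    largest = values[0]
    for v in values[1:]:
        if v > largest:
            largest = v
    return largest

def softmax(values):
    """Map real numbers to probabilities in (0, 1) that sum to 1.

    Subtracting the maximum m first avoids overflow in math.exp for large
    inputs; e^(z_i - m) / sum_j e^(z_j - m) equals e^(z_i) / sum_j e^(z_j).
    """
    m = max_value(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]

scores = [8, 4, 2]
print(max_value(scores))                       # 8
print([round(p, 3) for p in softmax(scores)])  # [0.98, 0.018, 0.002]
```

The largest input (8) receives by far the largest probability, but unlike max, softmax still assigns a small nonzero share to every other input.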

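![Torch Library](https://image.slidesharecdn.com/stats-softmax-170911230929/75/Statistics-SoftMax-Equation-6-2048.jpg)

Torch Library

`torch` (PyTorch) is a Python library for machine learning. Its neural-network support functions live in the module `torch.nn.functional`, which is conventionally imported under the alias `F`:

`import torch.nn.functional as F`

`probabilities = F.softmax(torch.tensor(values), dim=0)`

Here `values` is a list of floats. `F.softmax` takes a tensor rather than a plain Python list, and `dim` selects the axis to normalize over. The result is a tensor of probabilities adding up to 1, like the values a neural network outputs.

Example: `F.softmax(torch.tensor([8.0, 4.0, 2.0]), dim=0)` returns approximately `[0.980, 0.018, 0.002]`.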
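A runnable version of the slide's example is sketched below, assuming PyTorch is installed. It converts the input list to a floating-point tensor (softmax is not implemented for integer tensors) and passes an explicit `dim`. The batched case with `dim=1` and the variable names are illustrative additions, not part of the original slide.

```python
import torch
import torch.nn.functional as F

# F.softmax needs a floating-point tensor and an explicit axis (dim).
scores = torch.tensor([8.0, 4.0, 2.0])
probabilities = F.softmax(scores, dim=0)
print(probabilities)        # roughly [0.980, 0.018, 0.002]
print(probabilities.sum())  # close to 1

# For a batch (one row per example), normalize each row with dim=1,
# then take the most probable class per row.
batch = torch.tensor([[8.0, 4.0, 2.0],
                      [1.0, 3.0, 2.0]])
batch_probs = F.softmax(batch, dim=1)
print(batch_probs.argmax(dim=1))  # tensor([0, 1])
```

Choosing `dim=1` for batched input makes each row an independent probability distribution, which matches the usual layout of classifier outputs (examples along dimension 0, classes along dimension 1).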