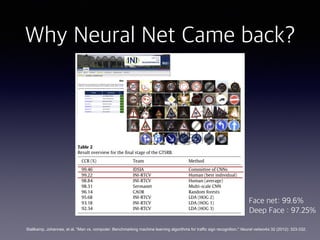

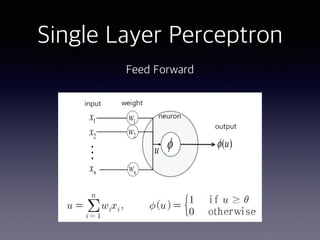

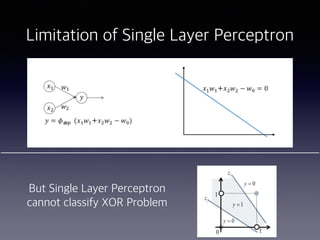

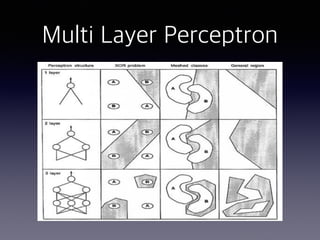

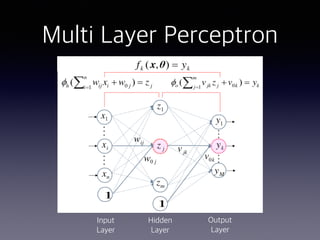

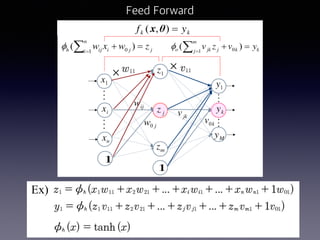

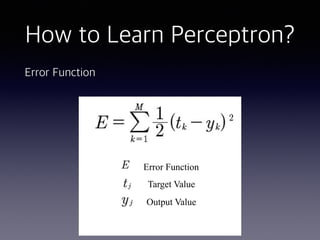

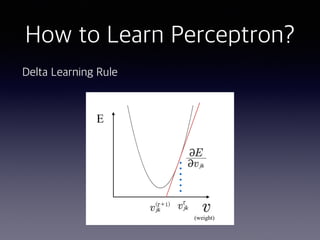

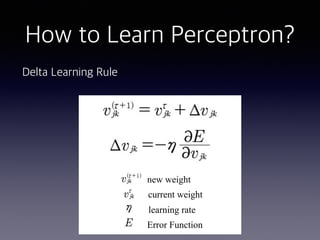

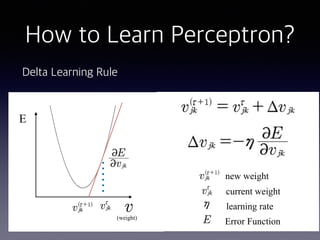

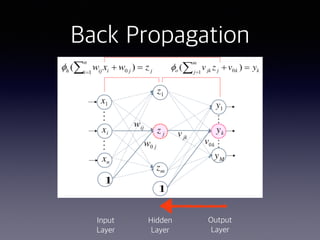

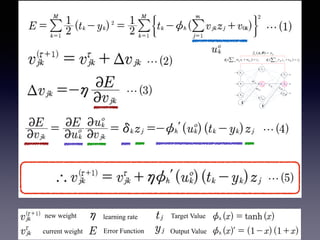

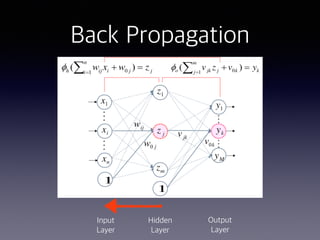

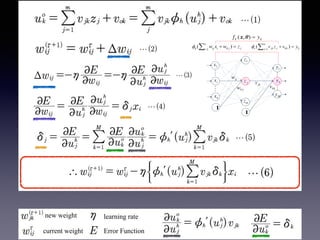

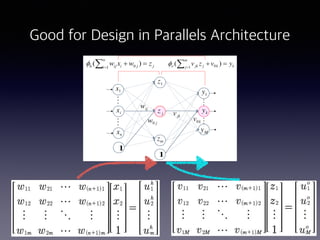

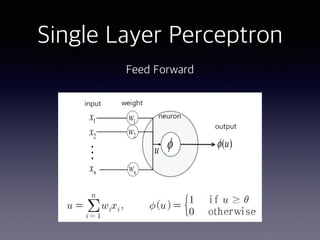

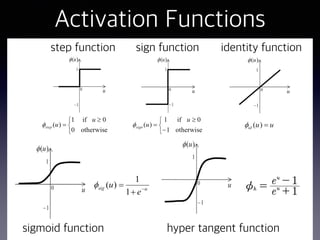

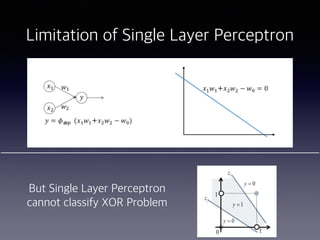

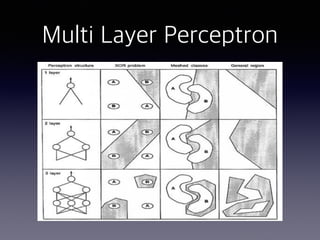

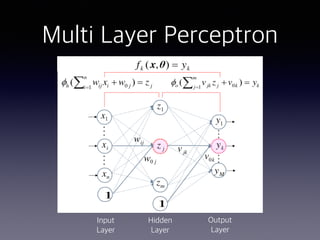

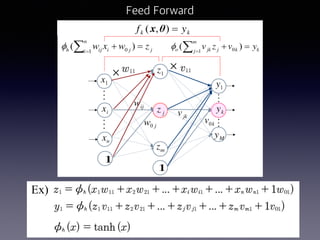

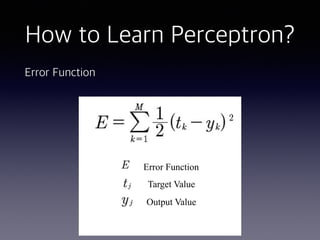

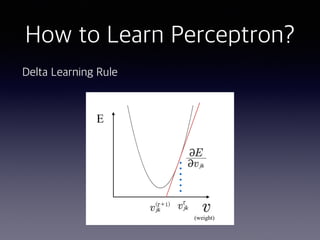

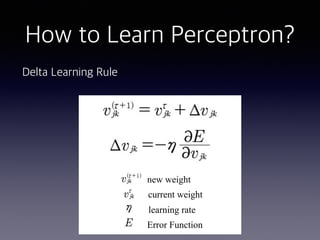

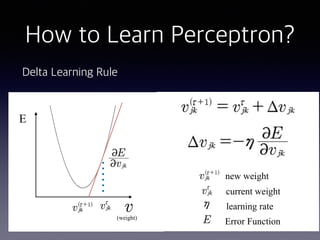

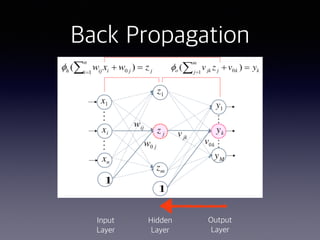

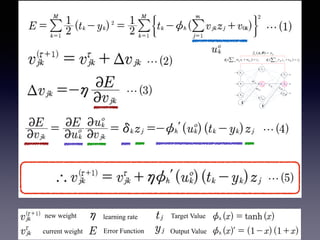

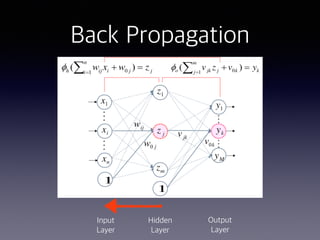

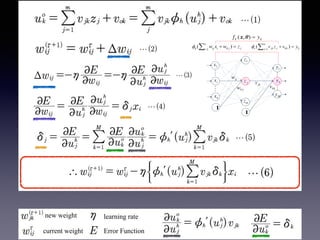

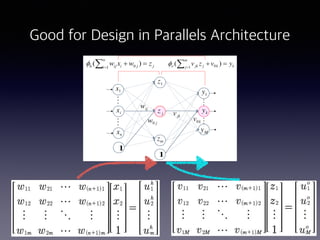

This document discusses multi-layer perceptrons (MLPs) and their advantages over single-layer perceptrons. By placing one or more hidden layers between the input and output layers, an MLP can solve classification problems that a single-layer perceptron cannot, such as those that are not linearly separable. MLPs are trained with backpropagation, an error-based learning method: the error between the target and actual output values is computed at the output layer and then propagated backwards through the network, and the weights of each layer are adjusted accordingly. MLPs are also well suited to parallel processing architectures.
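
To make the backpropagation description concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not the presentation's own code: the XOR task, the sigmoid activation, the squared-error loss, the single hidden layer of four units, and the names `forward` and `train_xor` are all choices made for this example.

```python
import math
import random


def sigmoid(z):
    """Logistic activation (assumed here; the slides may use another)."""
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, W1, b1, W2, b2):
    """Forward pass: input -> hidden layer -> single output unit."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(W1, b1)]
    y = sigmoid(sum(w * hi for w, hi in zip(W2, h)) + b2)
    return h, y


def train_xor(hidden=4, epochs=5000, lr=0.5, seed=0):
    """Train a small MLP on XOR with per-example backpropagation."""
    rng = random.Random(seed)
    # XOR is not linearly separable, so a single-layer perceptron cannot fit it.
    data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]
    W1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    W2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0
    losses = []
    for _ in range(epochs):
        total = 0.0
        for x, t in data:
            h, y = forward(x, W1, b1, W2, b2)
            total += 0.5 * (t - y) ** 2
            # Error term at the output layer (squared error, sigmoid derivative).
            delta_out = (y - t) * y * (1.0 - y)
            # Propagate the error backwards through W2 to the hidden layer.
            delta_hid = [delta_out * W2[j] * h[j] * (1.0 - h[j])
                         for j in range(hidden)]
            # Adjust weights, output layer first, then hidden layer.
            for j in range(hidden):
                W2[j] -= lr * delta_out * h[j]
                for i in range(len(x)):
                    W1[j][i] -= lr * delta_hid[j] * x[i]
                b1[j] -= lr * delta_hid[j]
            b2 -= lr * delta_out
        losses.append(total)  # summed squared error over the four examples
    return (W1, b1, W2, b2), losses


if __name__ == "__main__":
    params, losses = train_xor()
    print("first-epoch loss:", losses[0])
    print("last-epoch loss:", losses[-1])
    for x in ([0, 0], [0, 1], [1, 0], [1, 1]):
        print(x, forward(x, *params)[1])
```

The hidden-layer error terms are computed before the output weights are updated, so each example's update uses the weights that produced its forward pass. Whether training reaches a good fit depends on the seed, learning rate, and hidden-layer size, so treat the printed values as something to inspect rather than a guaranteed result.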