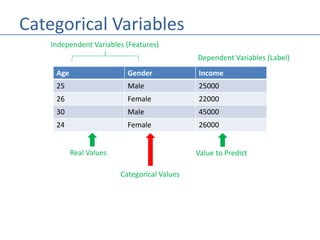

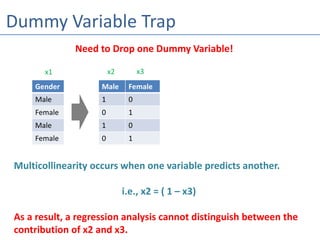

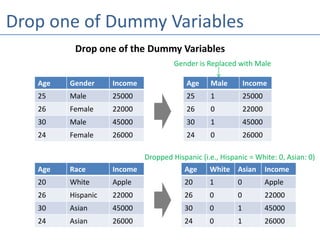

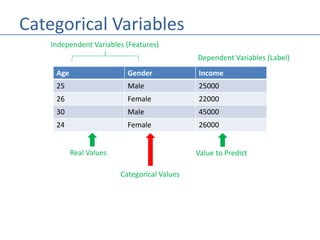

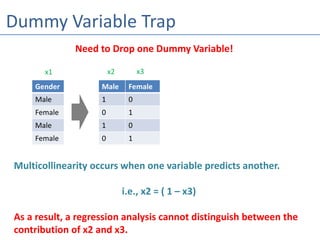

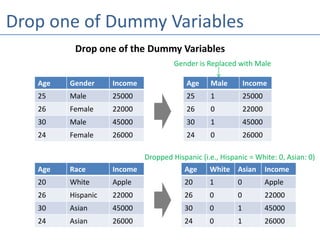

The document discusses linear regression in machine learning, specifically the need to convert categorical variables into numeric form before fitting a model. It explains how to create dummy variables and highlights the multicollinearity that arises when every category gets its own dummy variable. The example demonstrates how to implement one-hot encoding while avoiding the dummy variable trap by dropping one of the generated dummy columns.
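
As a rough illustration of the idea summarized above, the following minimal sketch one-hot encodes a categorical column in plain Python and shows why one dummy column is dropped. The `one_hot` helper and the `towns` data are hypothetical and are not taken from the slides; in practice a library routine such as pandas `get_dummies(..., drop_first=True)` would typically do the same job.

```python
def one_hot(values, drop_first=False):
    """Encode a list of category labels as 0/1 dummy columns.

    Hypothetical helper for illustration only.
    """
    categories = sorted(set(values))
    if drop_first:
        # The dropped category becomes the baseline that the
        # remaining dummies are measured against.
        categories = categories[1:]
    return {c: [1 if v == c else 0 for v in values] for c in categories}


# Made-up categorical feature (assumption, not from the slides).
towns = ["east", "north", "west", "north", "east"]

# Full encoding: one dummy column per category.
full = one_hot(towns)

# Each row's dummies sum to 1, which duplicates the intercept column
# of a linear regression. That exact linear dependence is the
# dummy variable trap (perfect multicollinearity).
row_sums = [sum(col[i] for col in full.values()) for i in range(len(towns))]

# Dropping one dummy column removes the dependence.
reduced = one_hot(towns, drop_first=True)

print(row_sums)
print(reduced)
```

With the first category dropped, a row of all zeros means "east", so no information is lost while the redundant column is removed.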