This document provides an overview of augmented reality (AR) and discusses several of its key aspects, including:

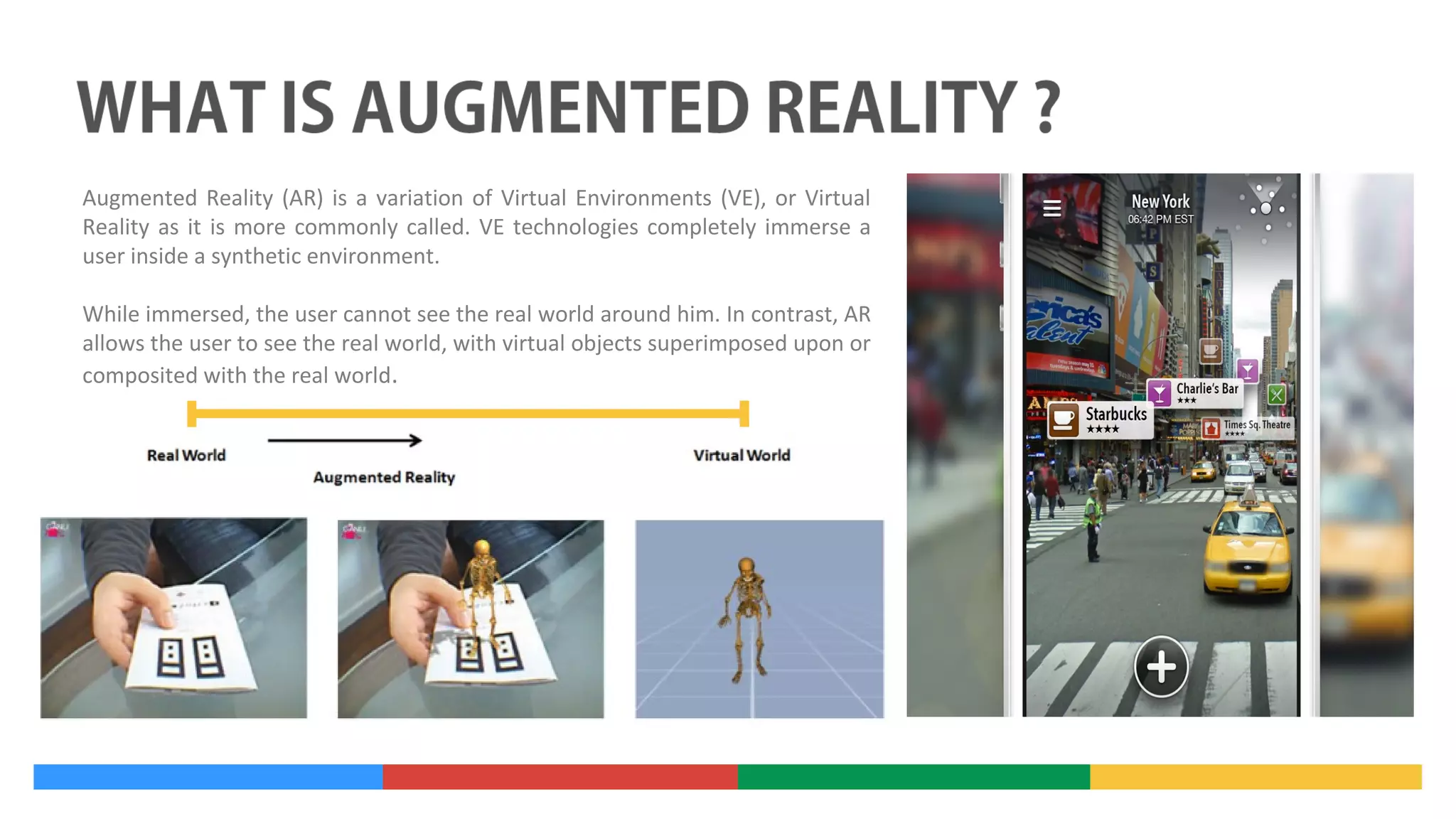

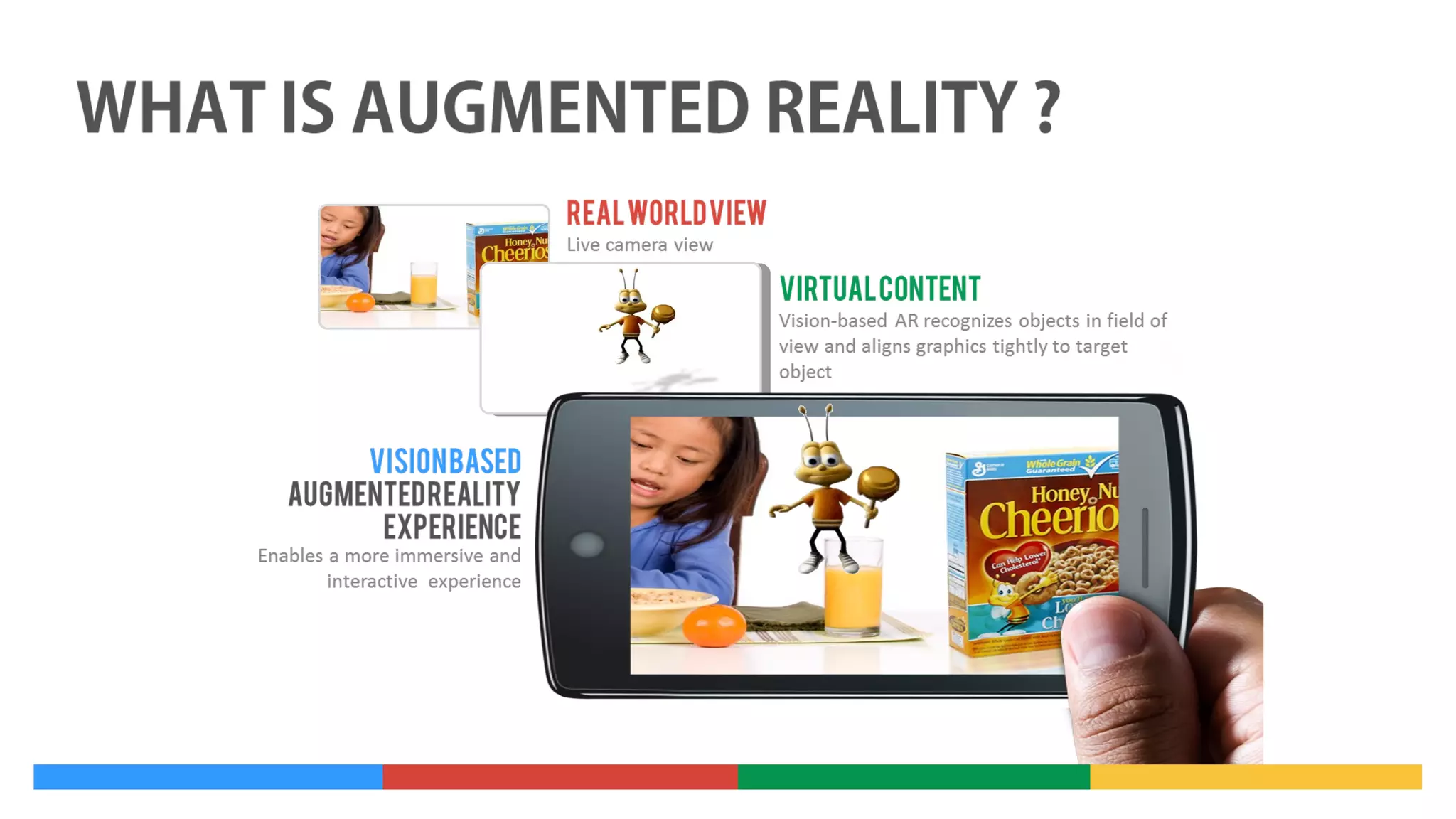

- The history and foundational concepts of AR, including how it differs from virtual reality: instead of replacing the user's surroundings, AR lets users see the real world with virtual objects overlaid on it.

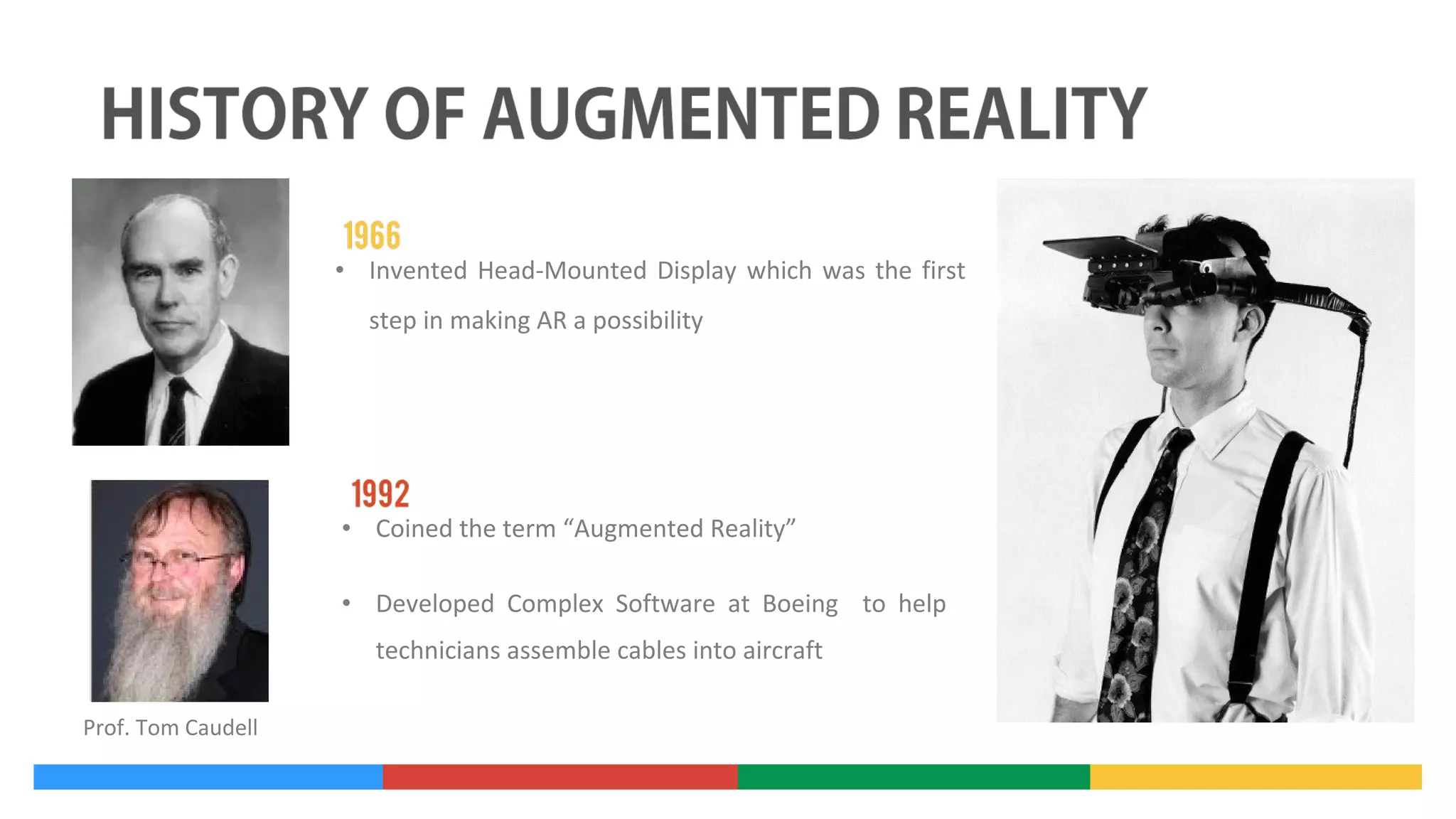

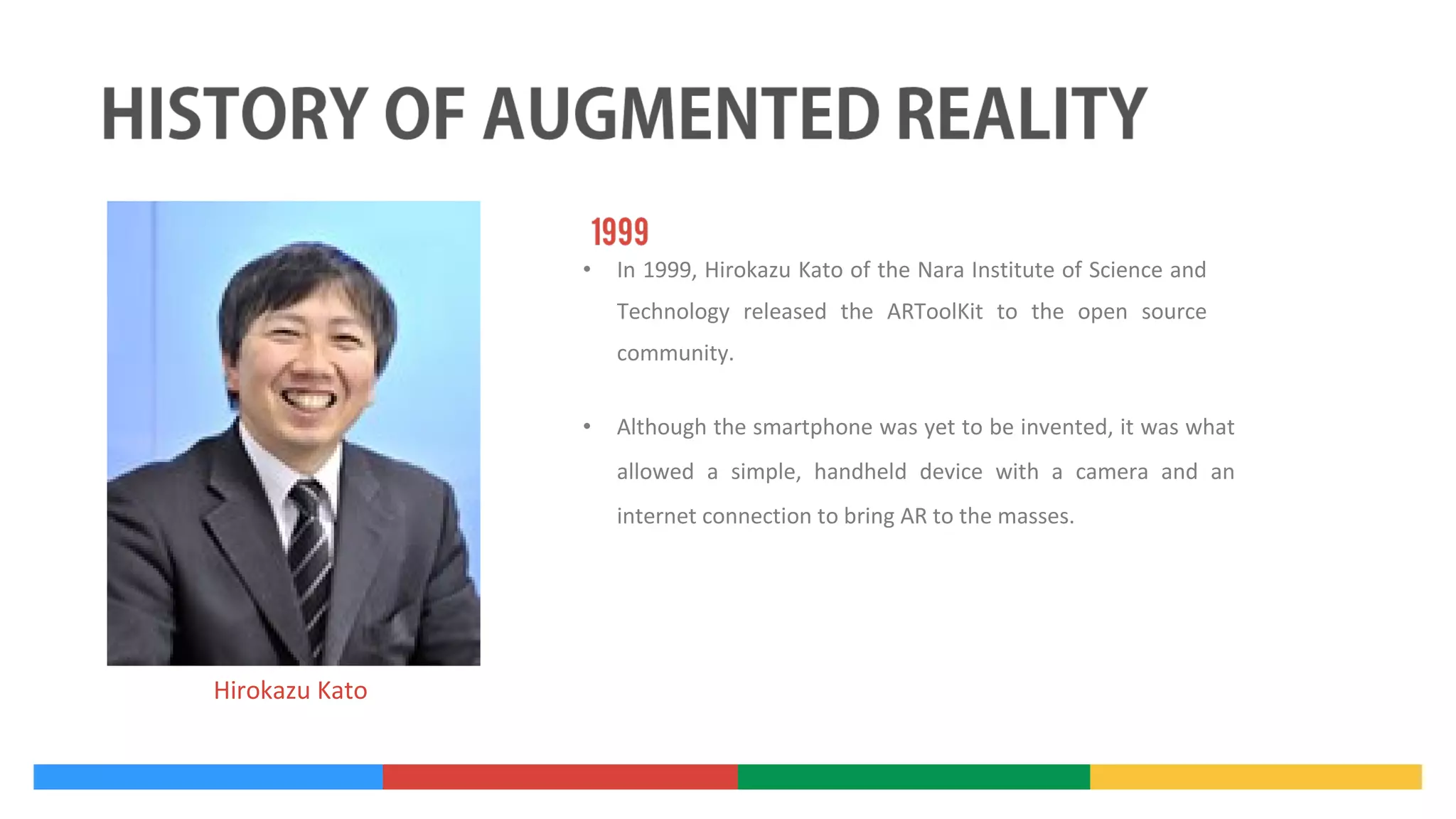

- Important figures in the development of AR technology, such as Tom Caudell, who coined the term "augmented reality", and Hirokazu Kato, who released the open-source ARToolKit.

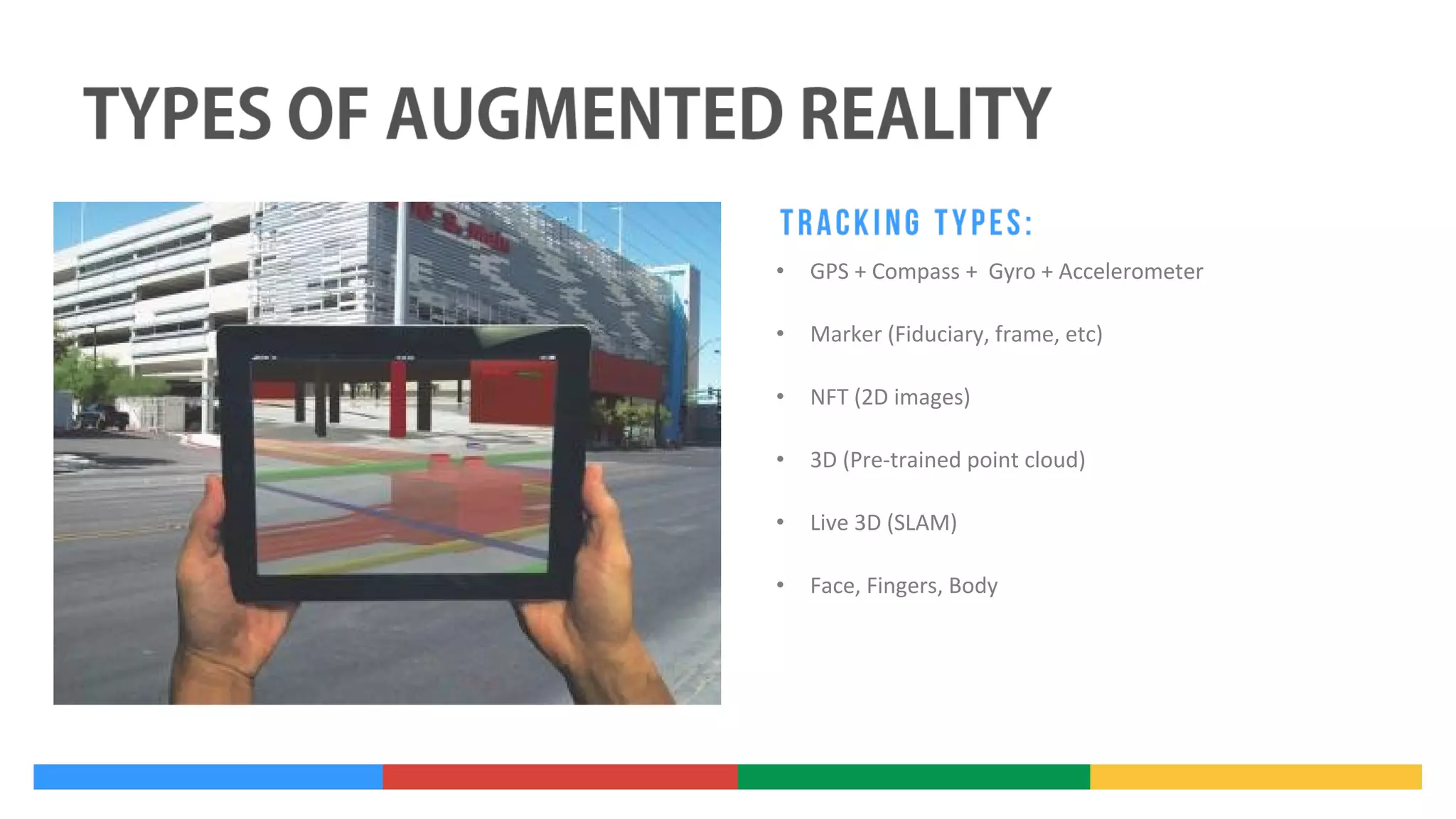

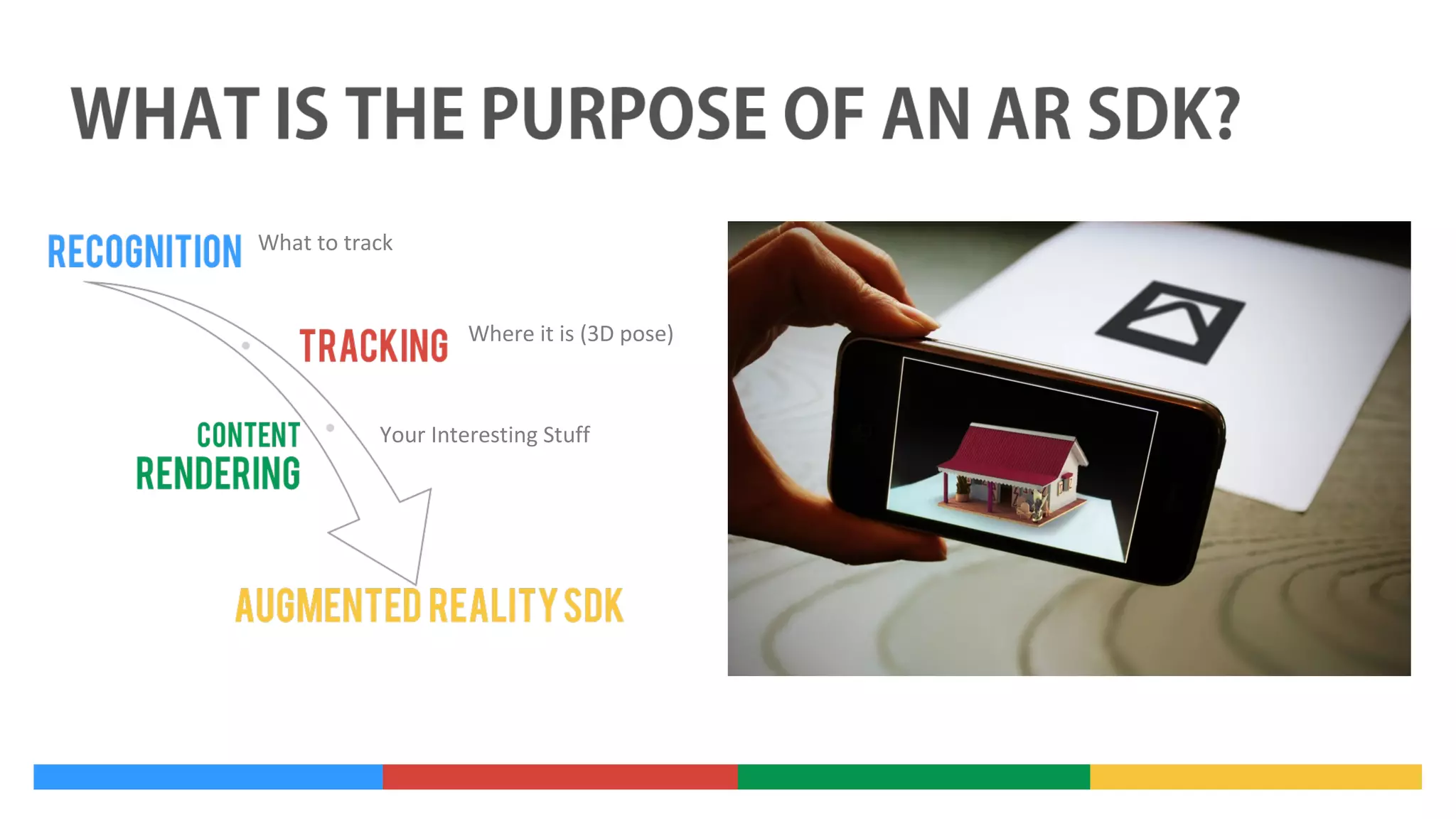

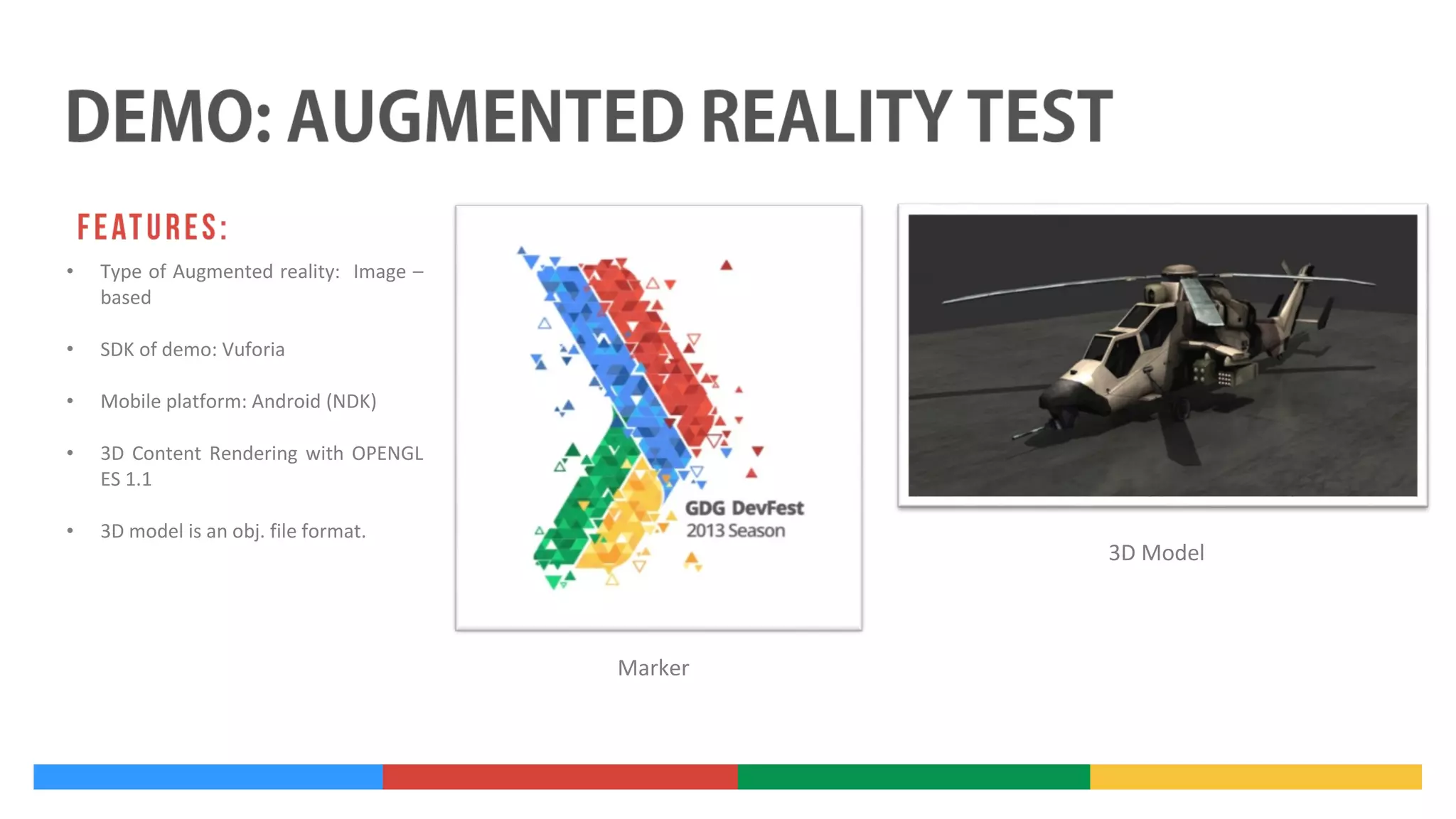

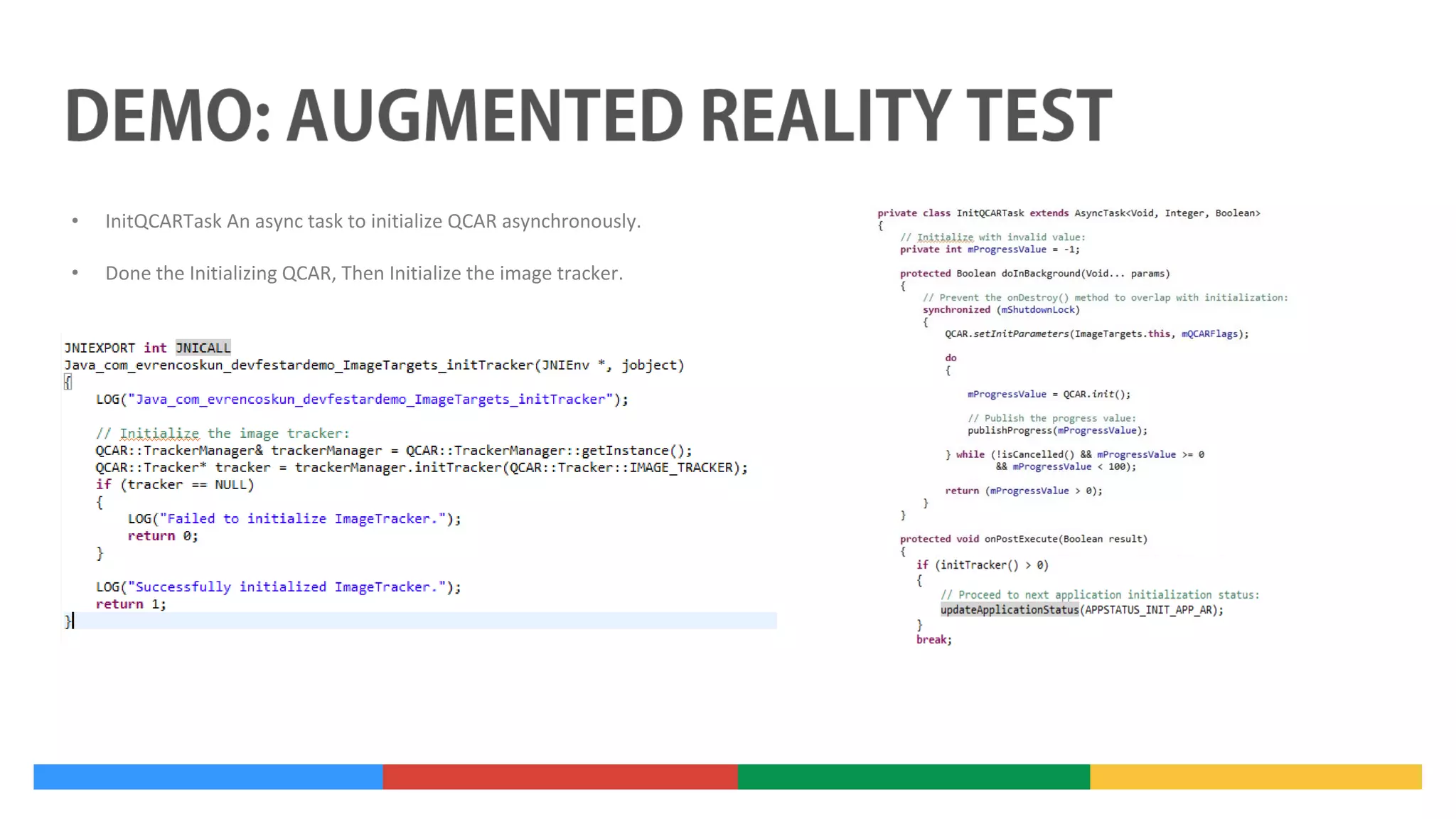

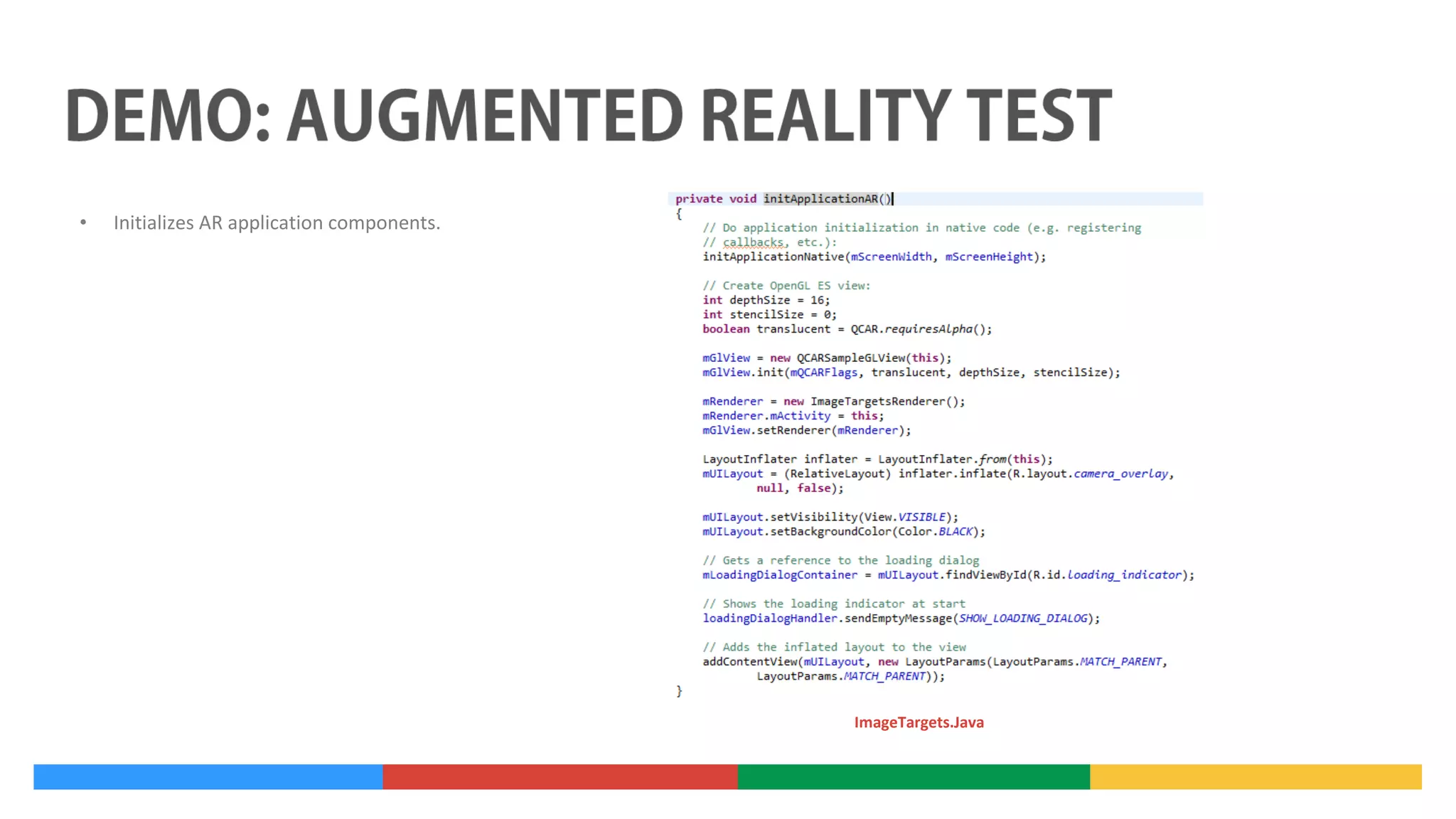

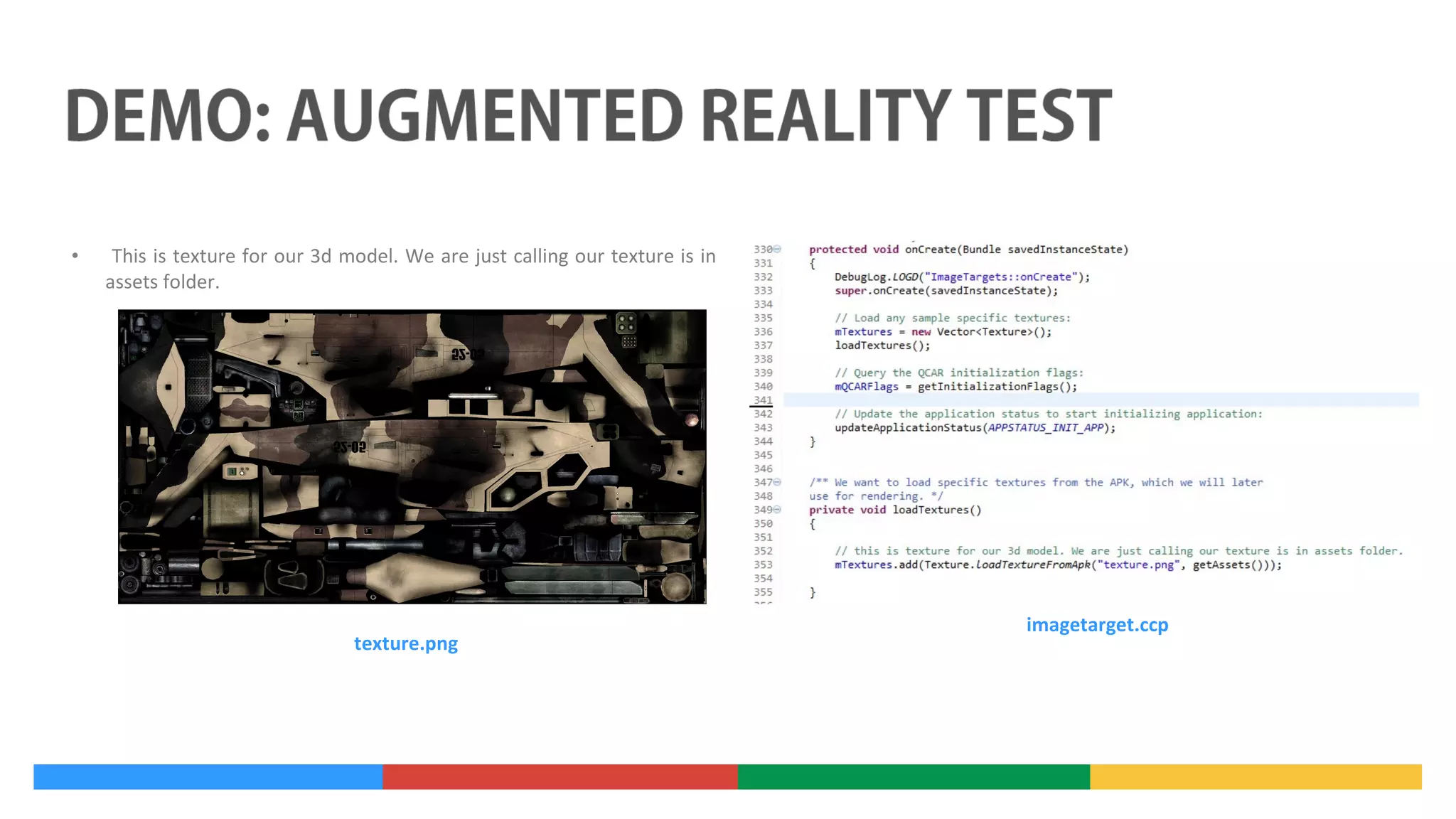

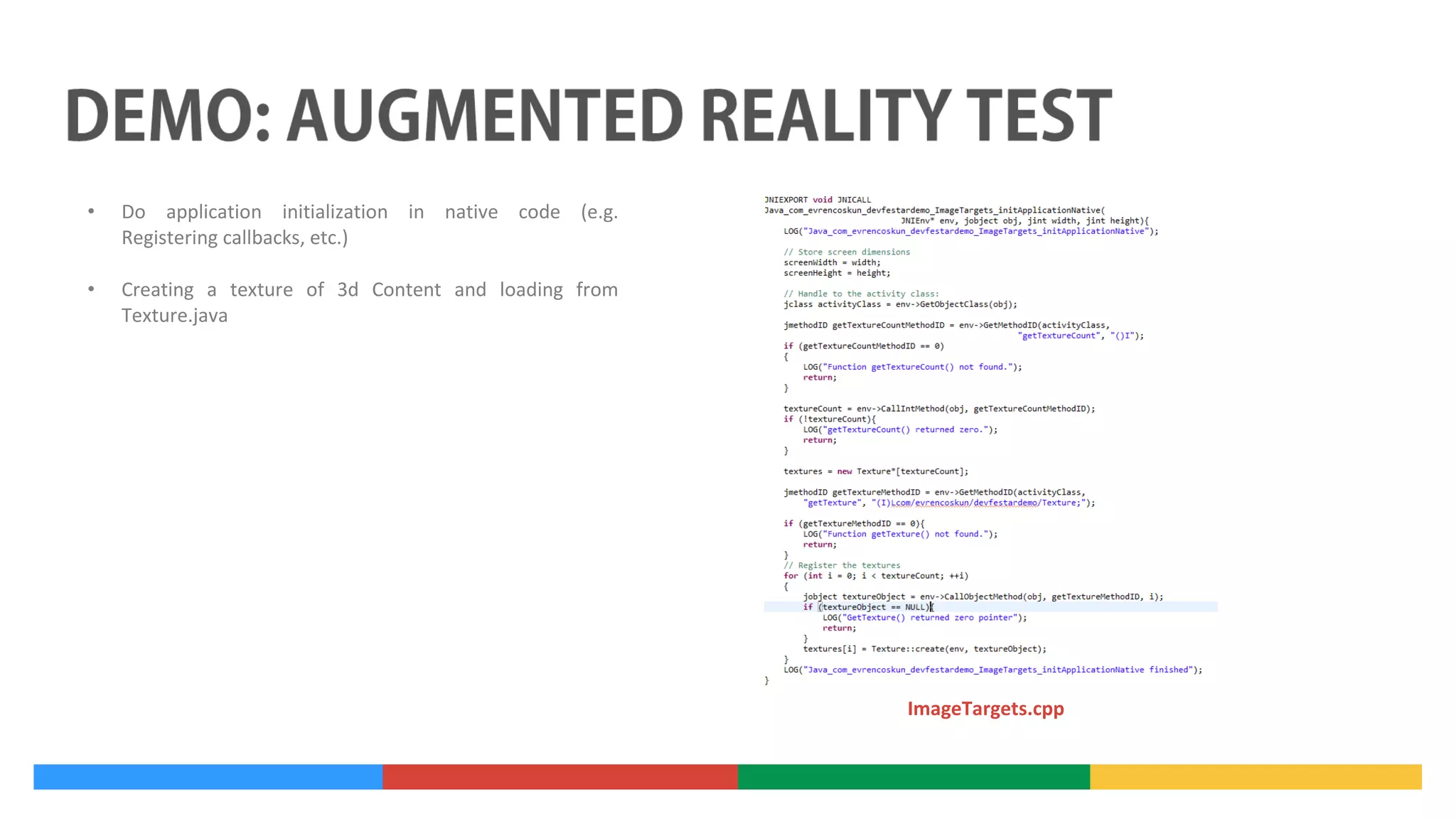

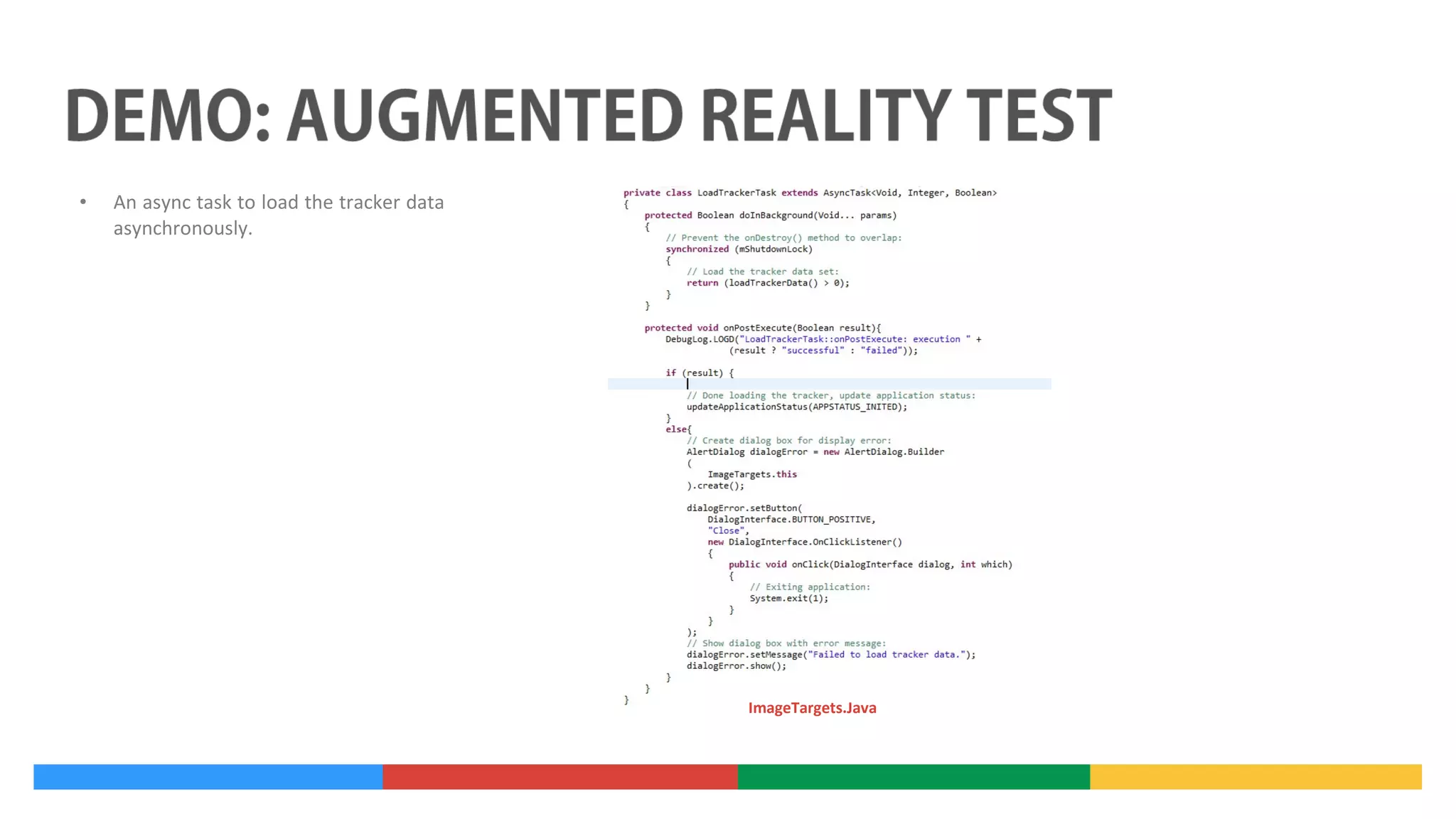

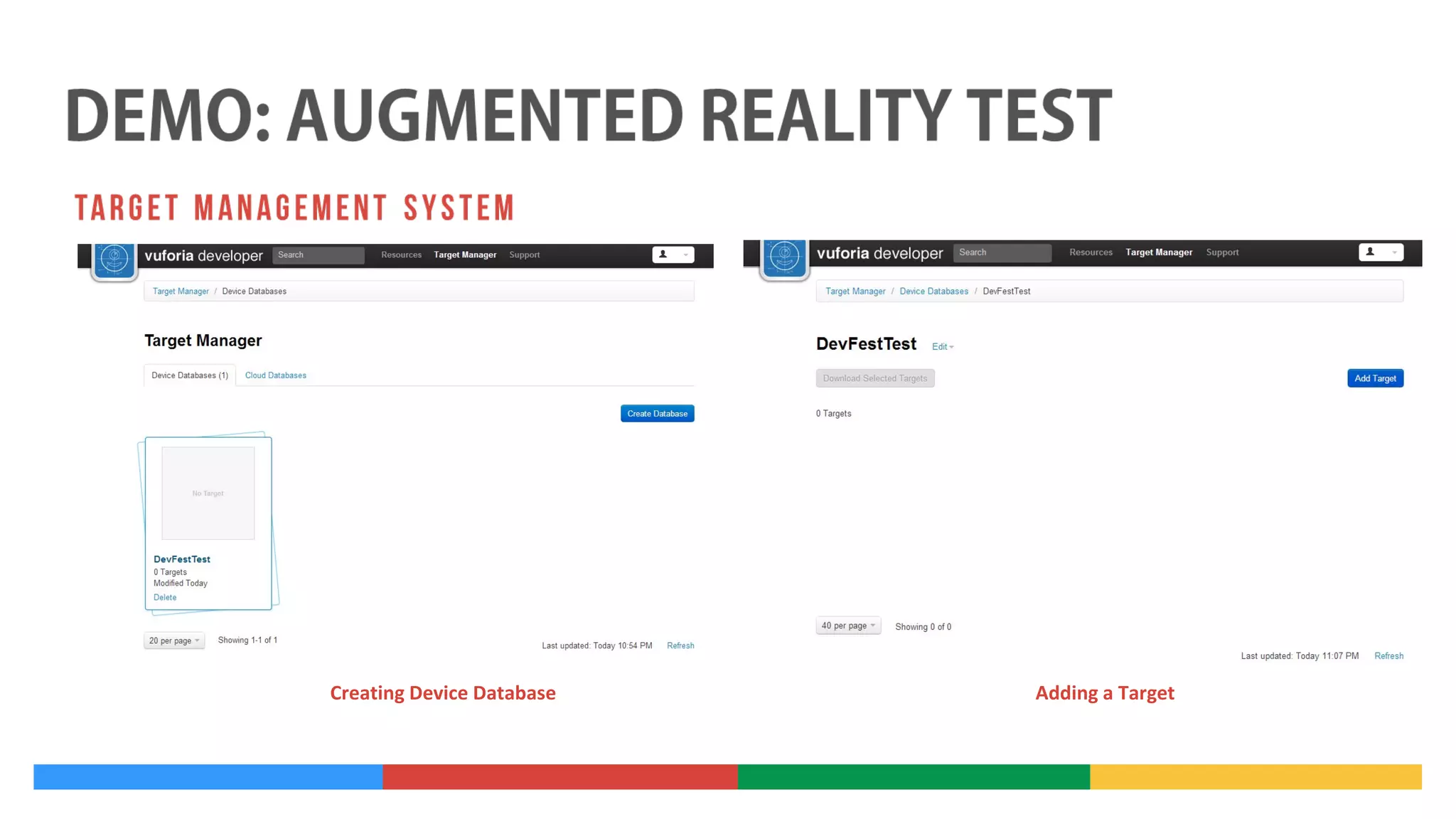

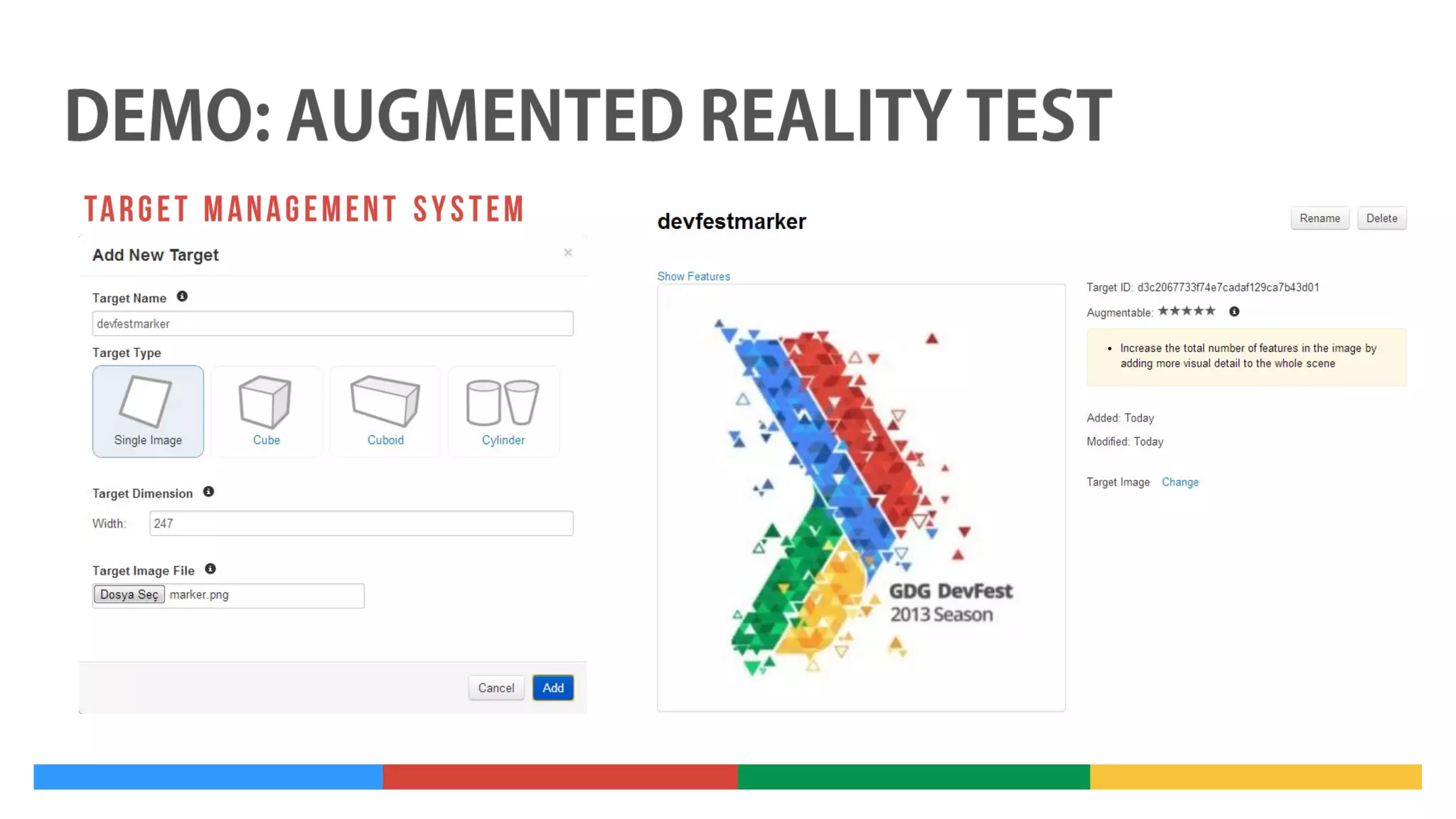

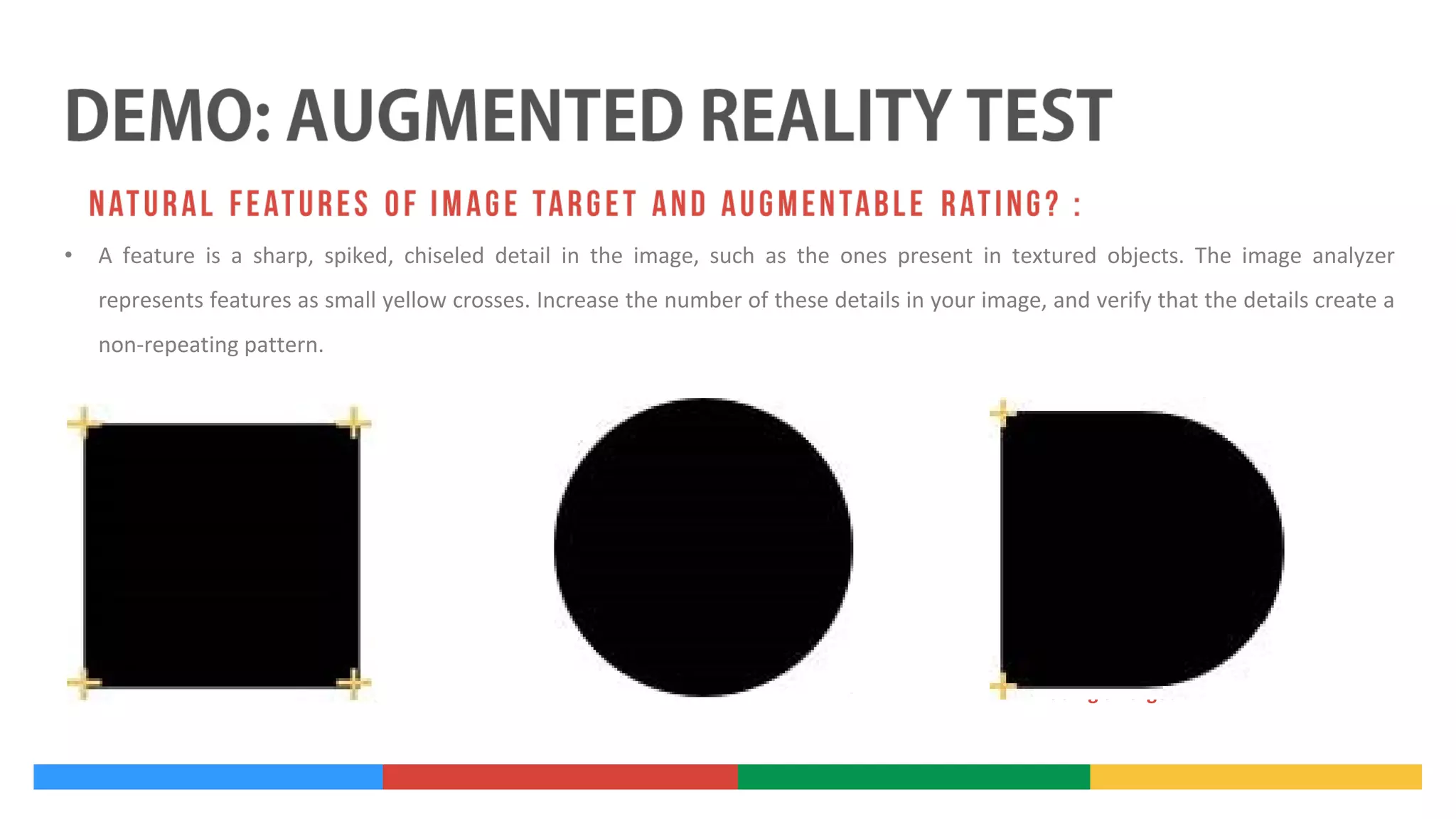

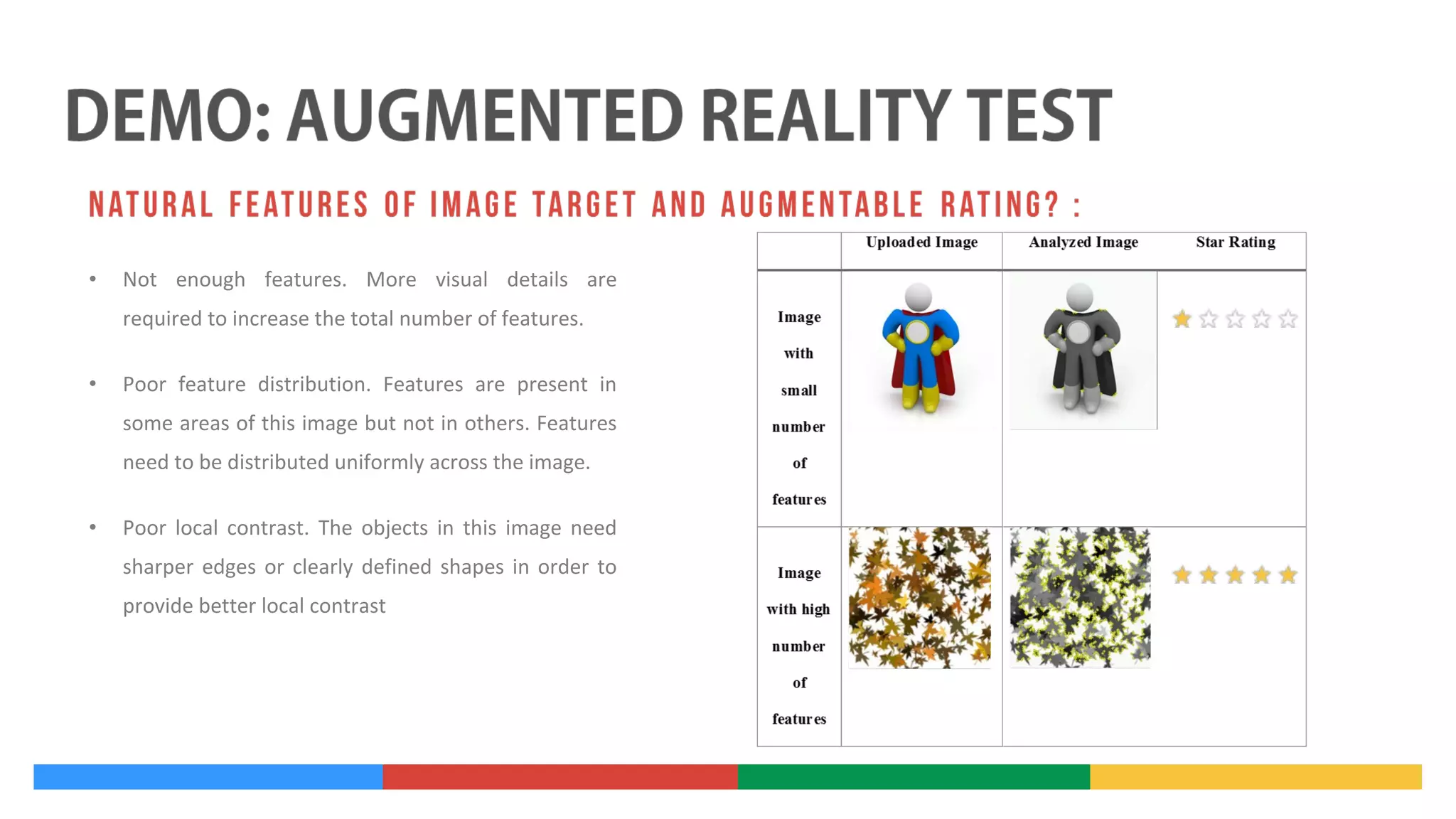

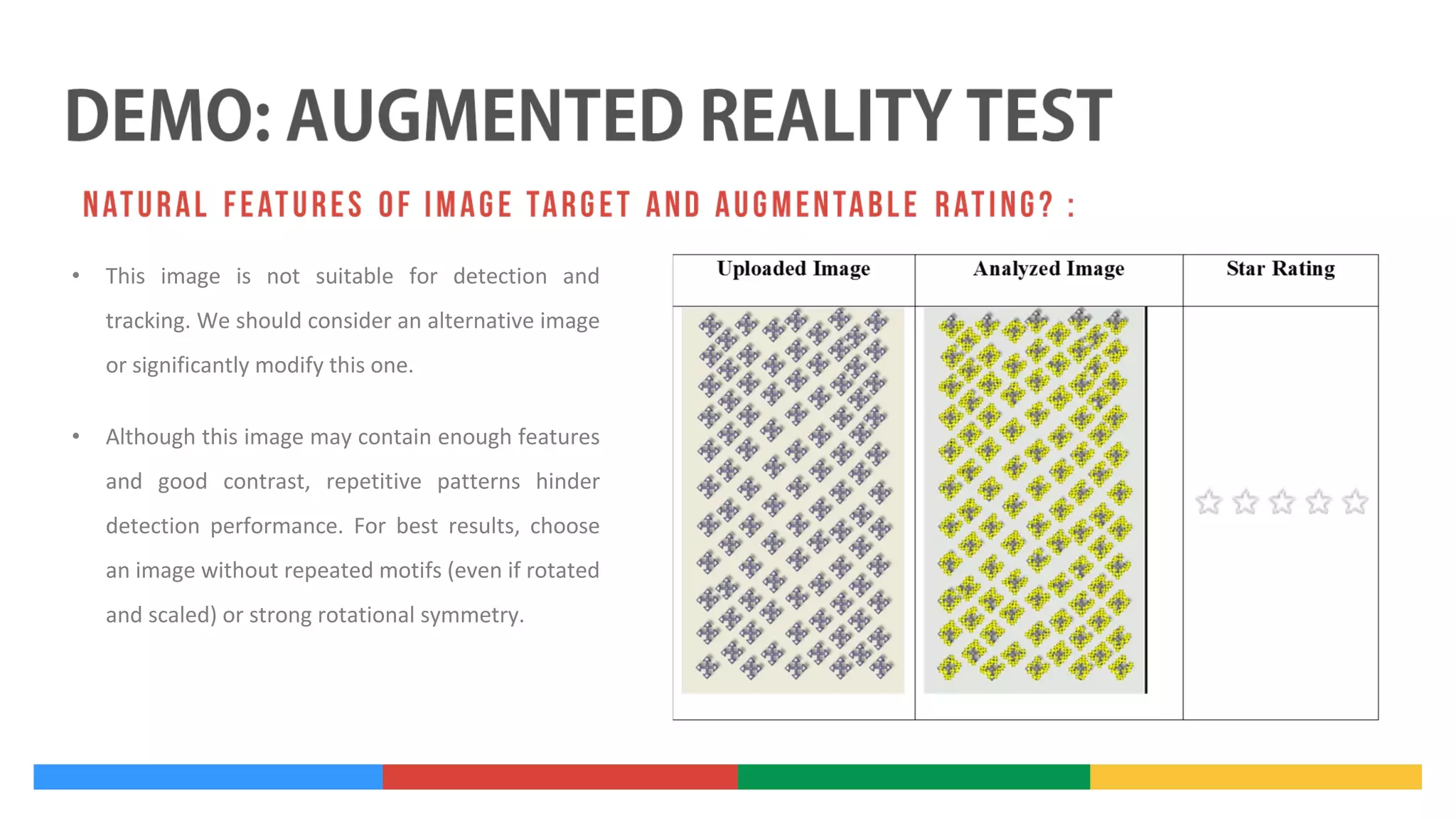

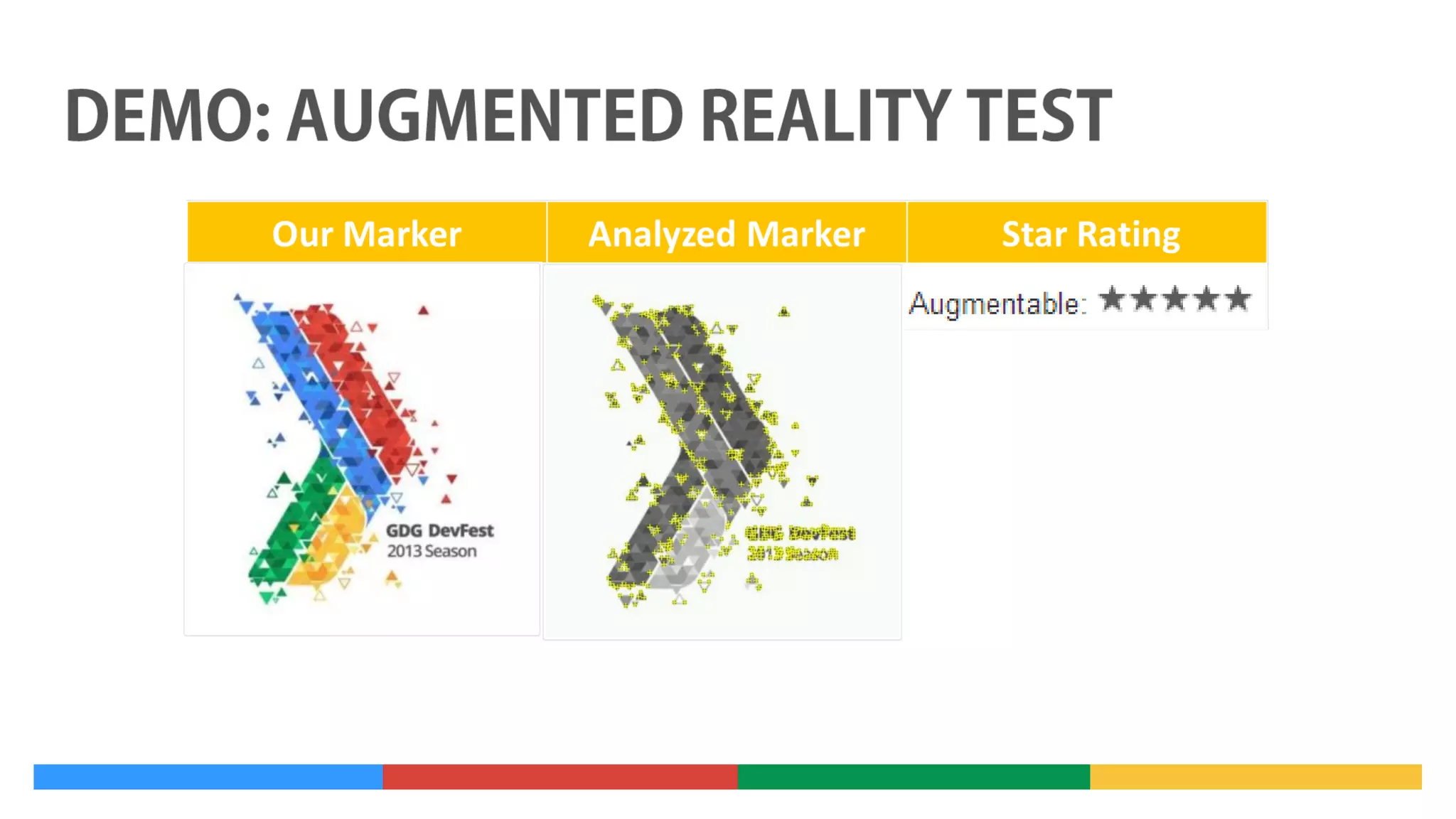

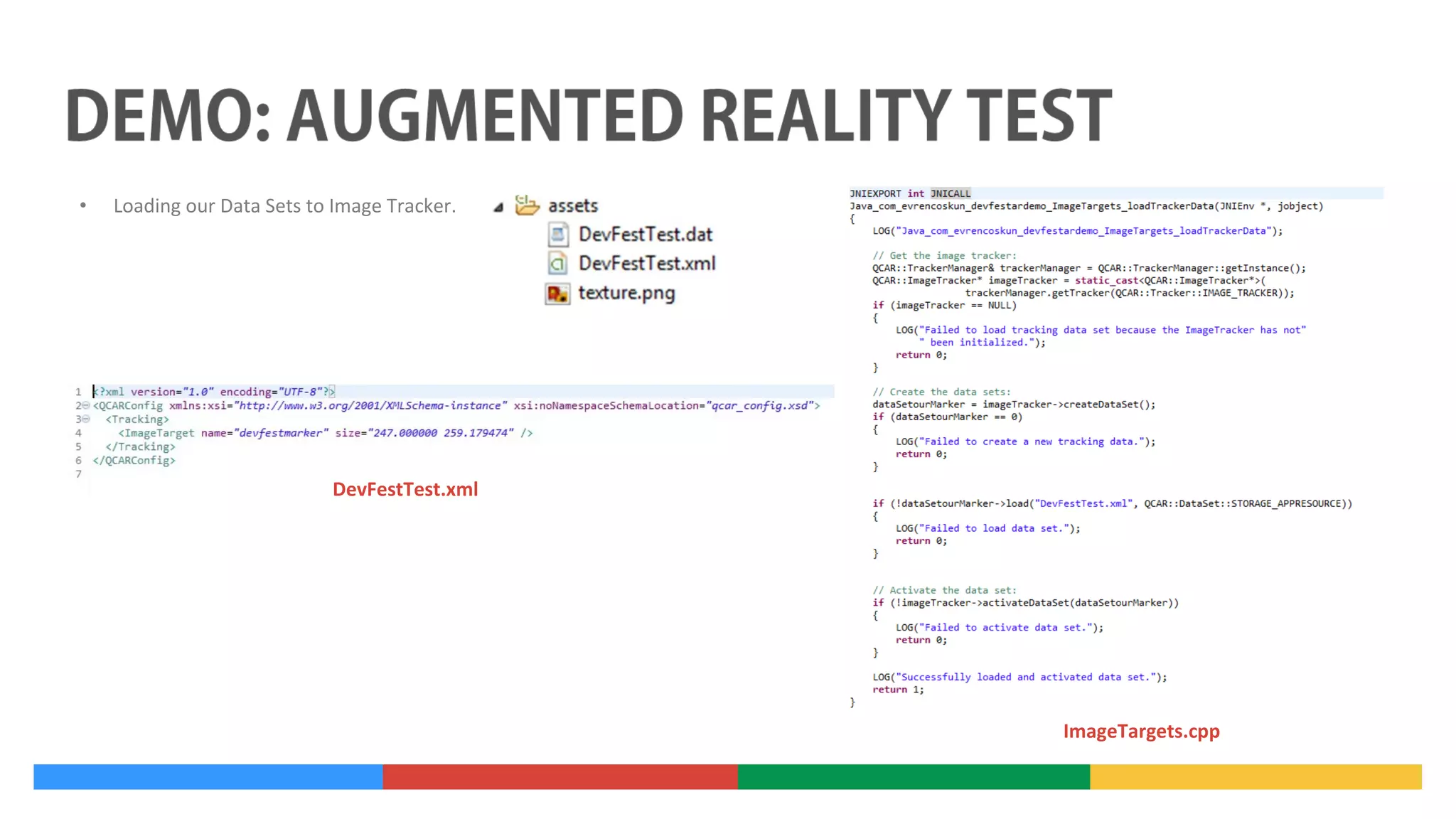

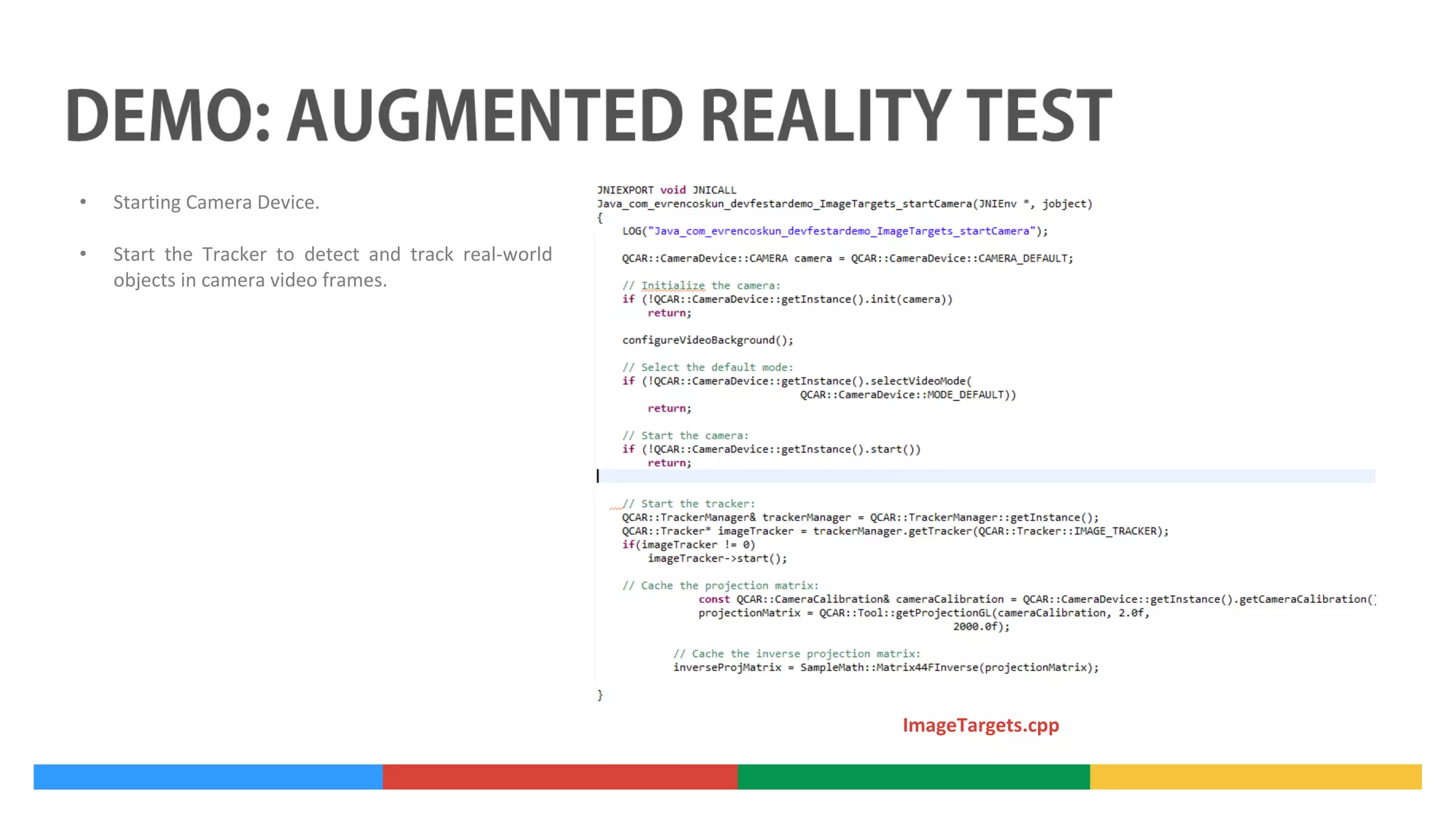

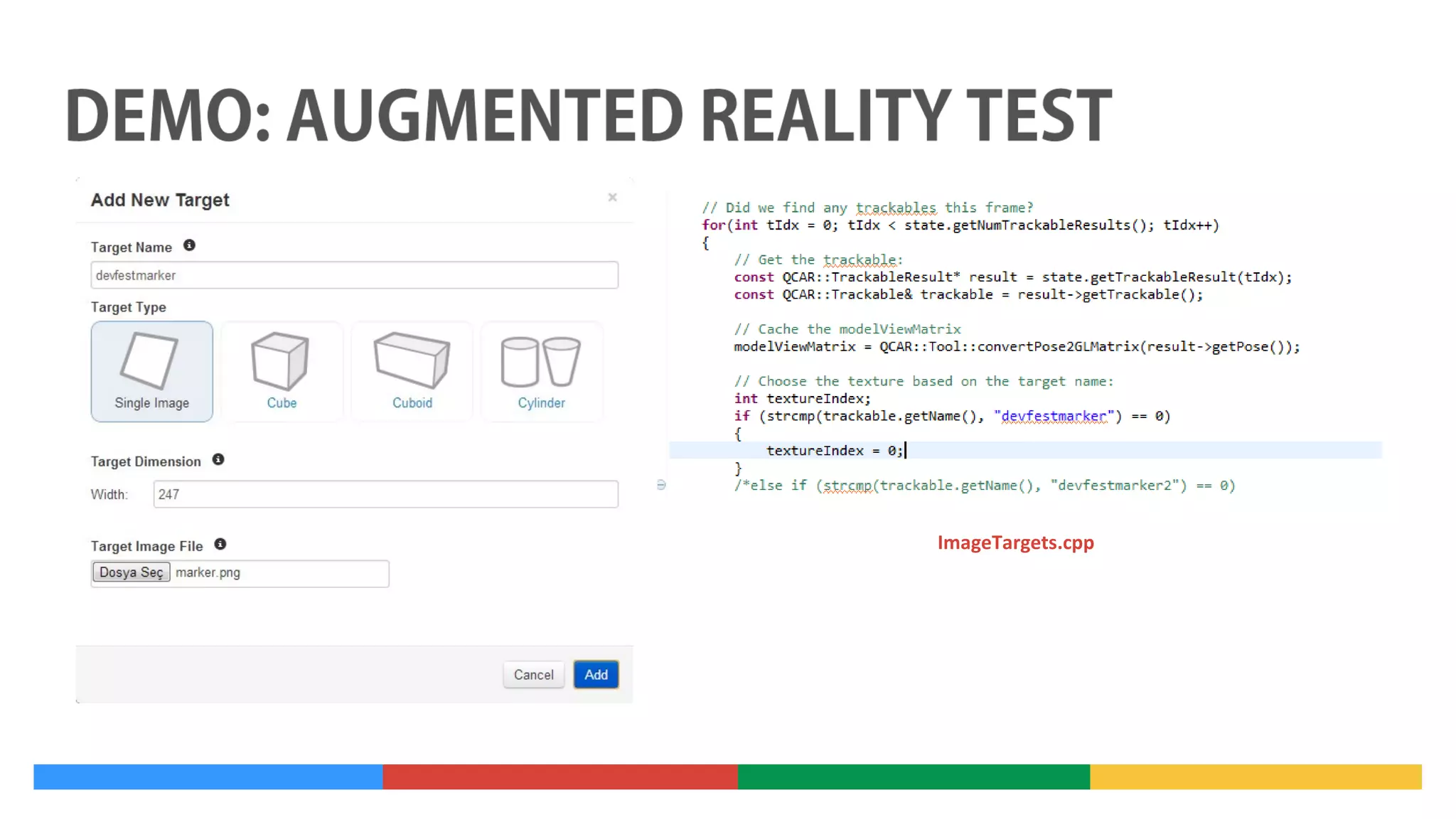

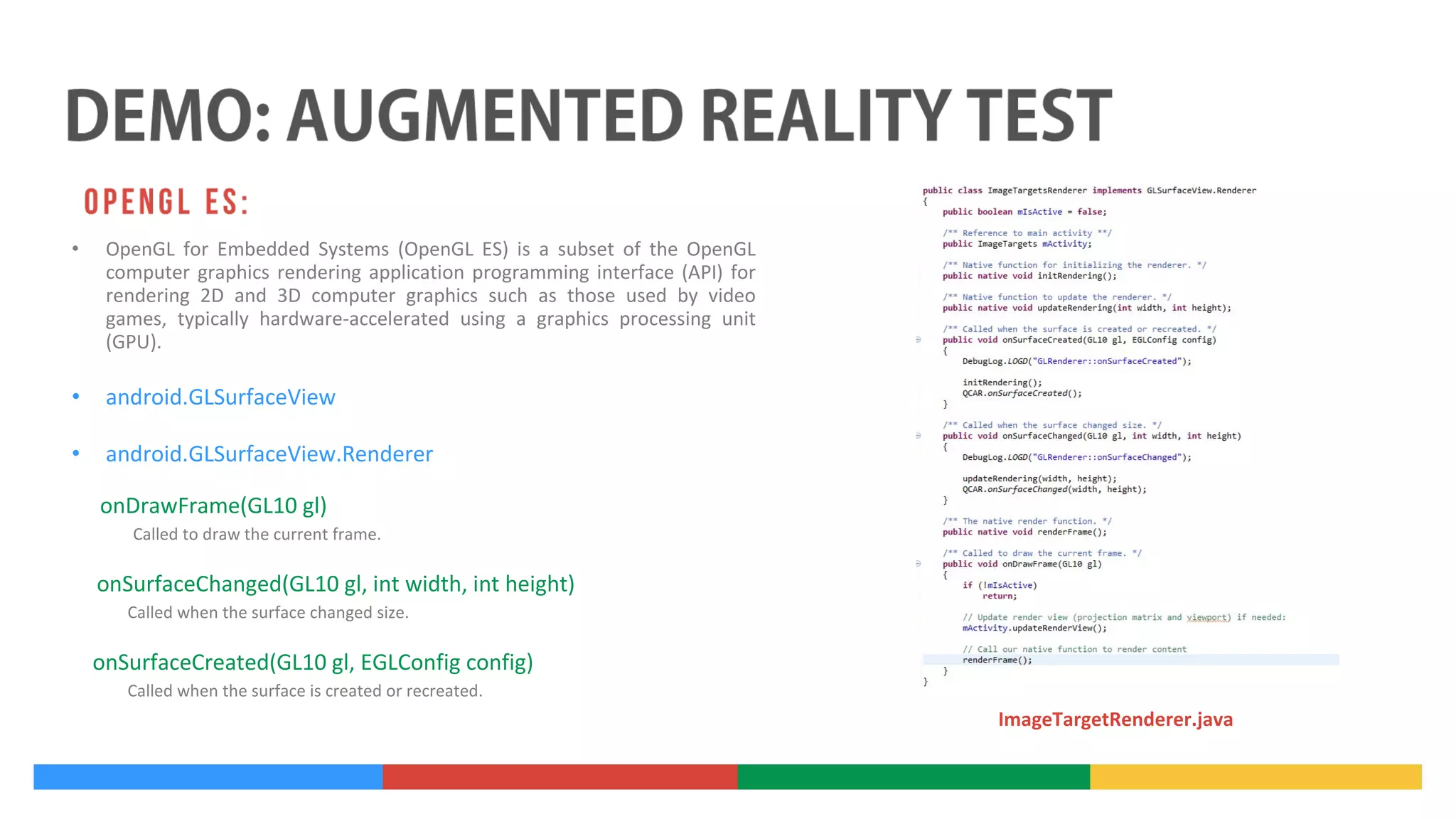

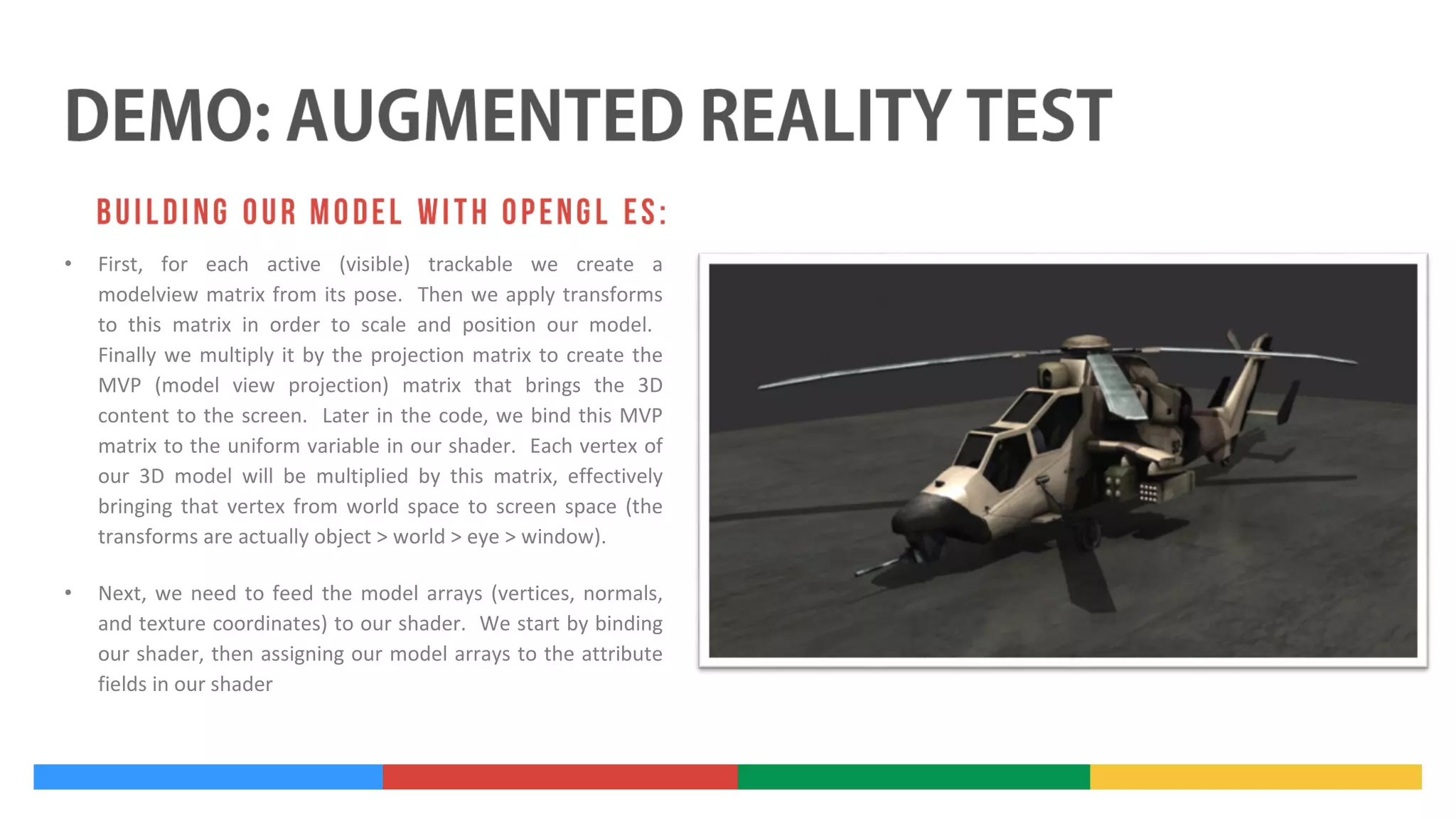

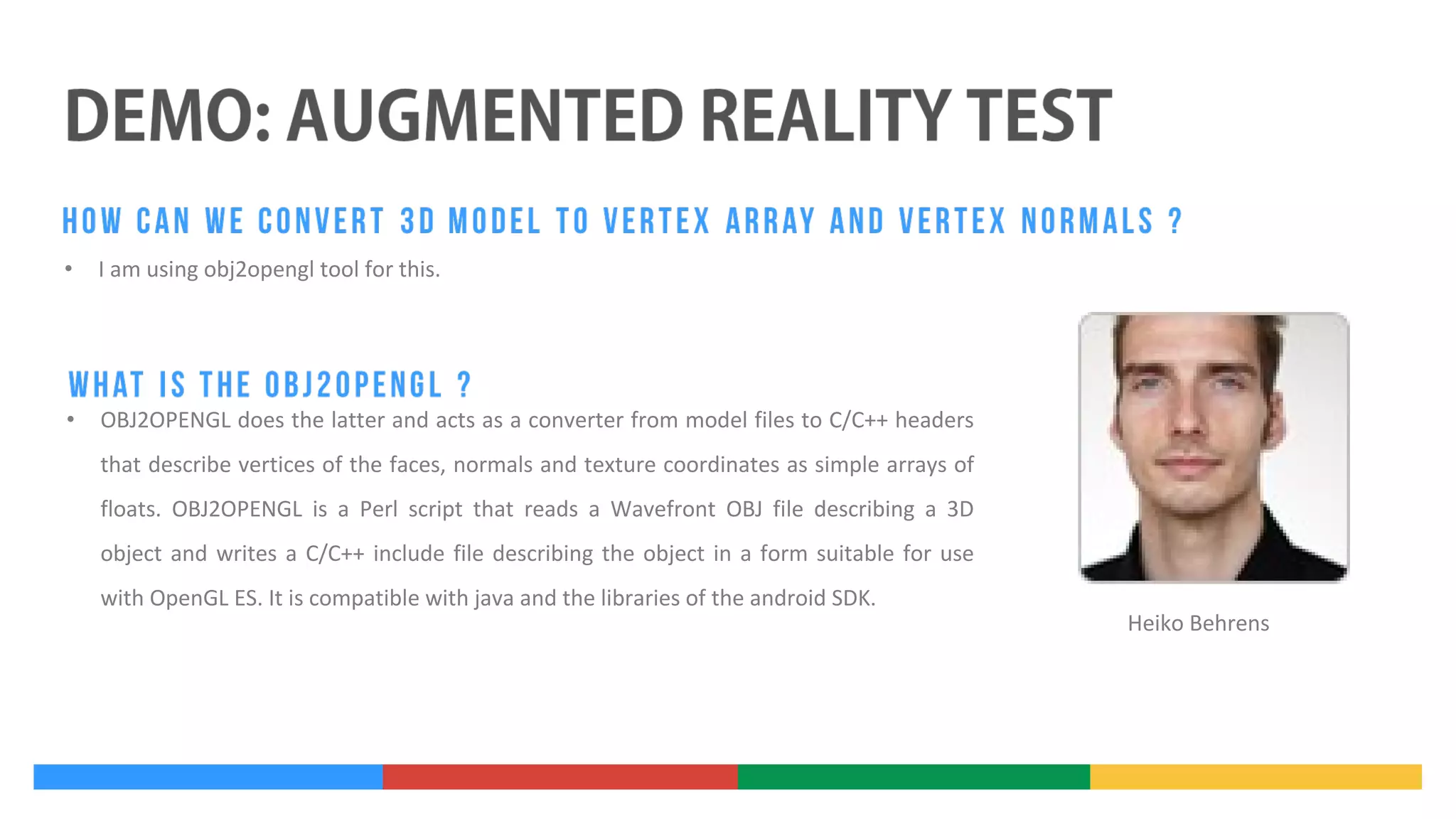

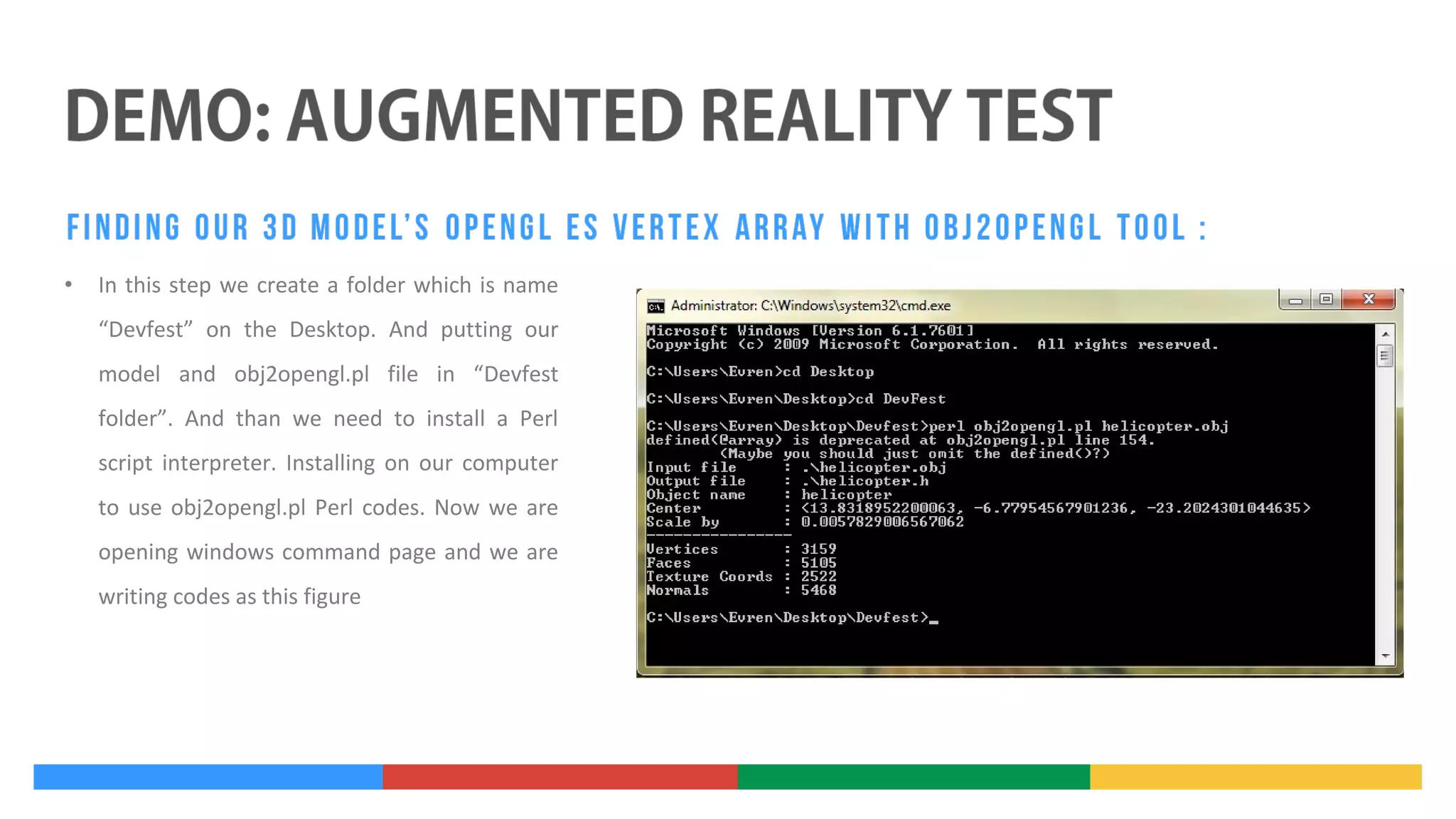

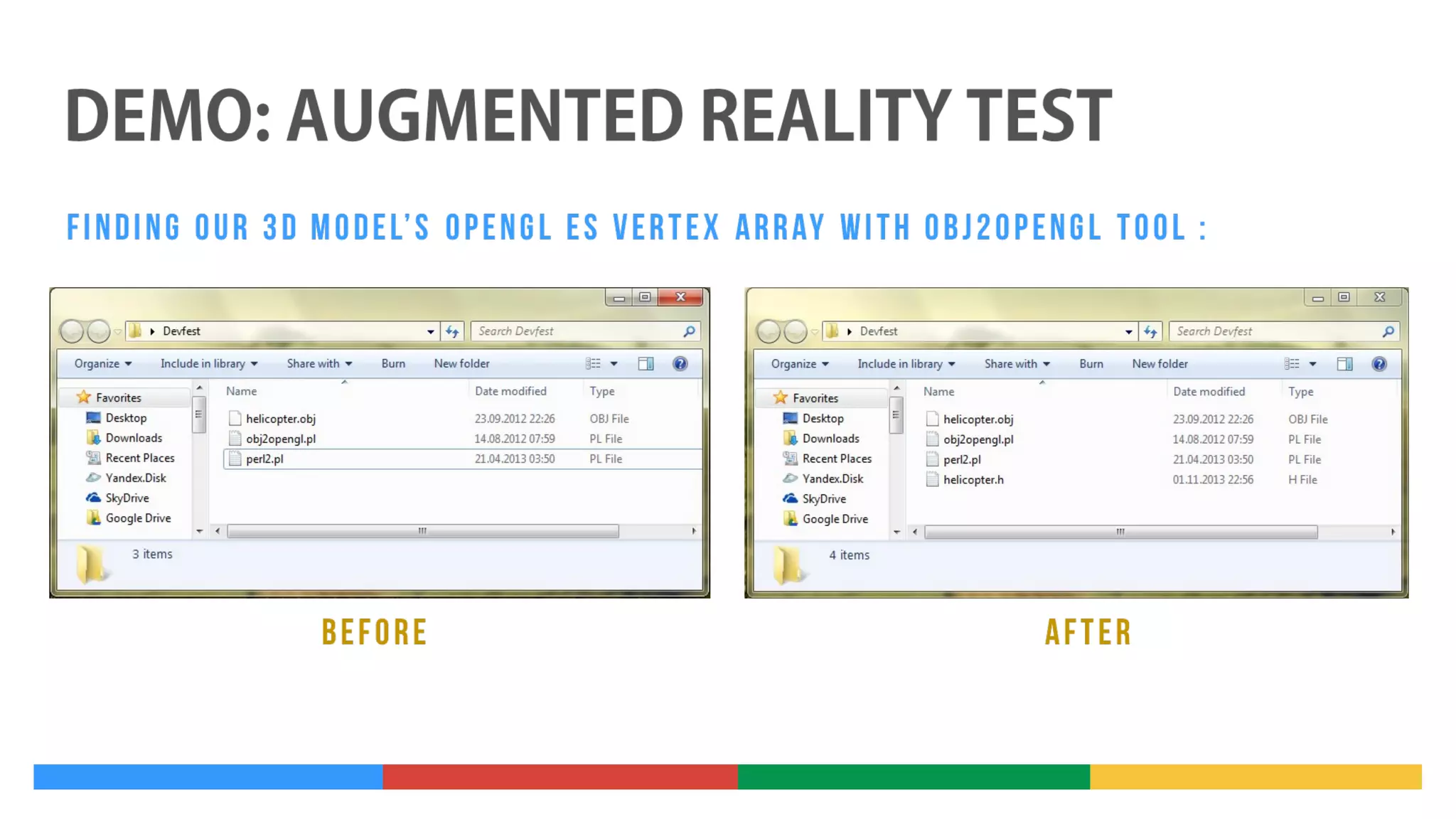

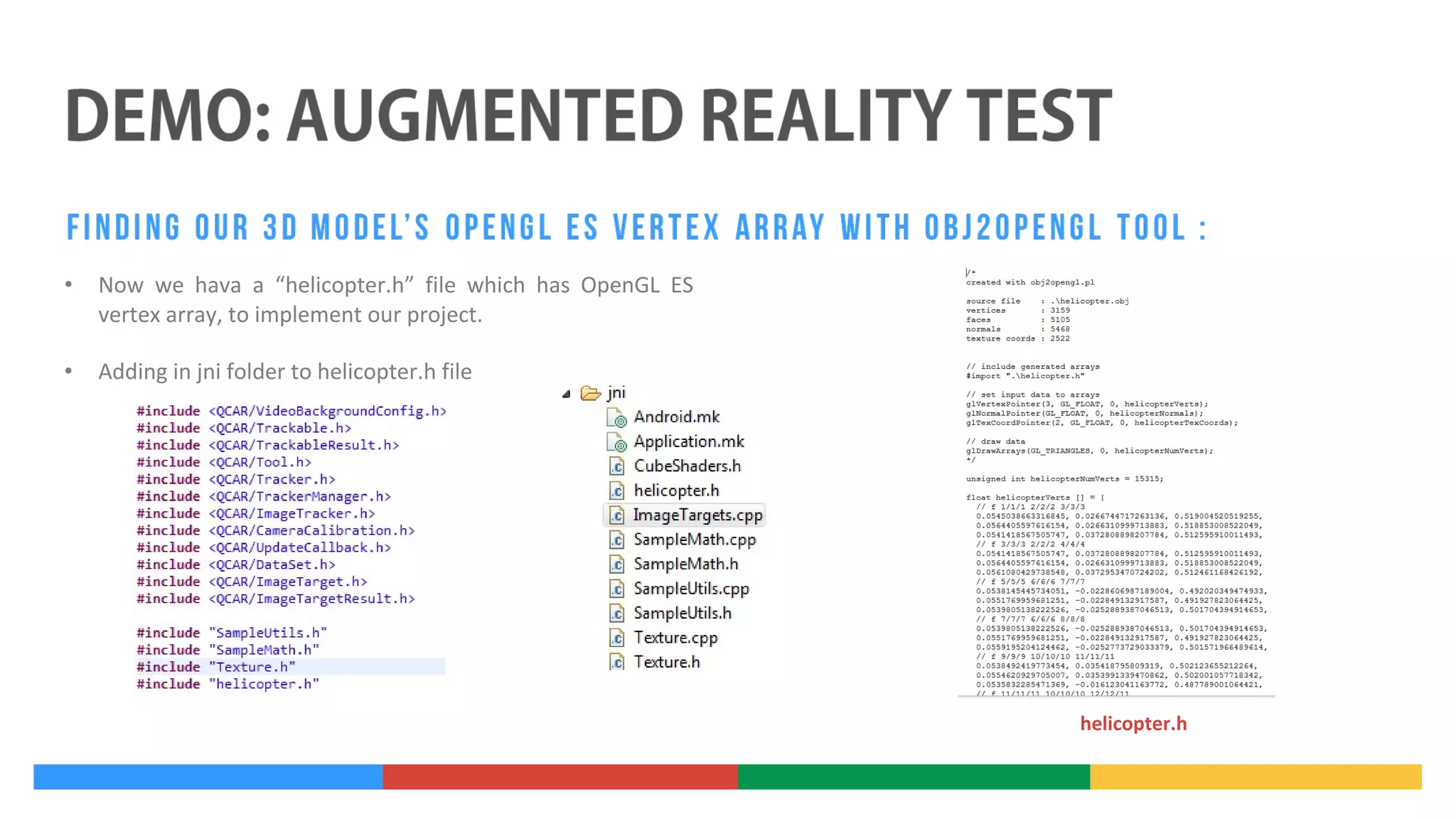

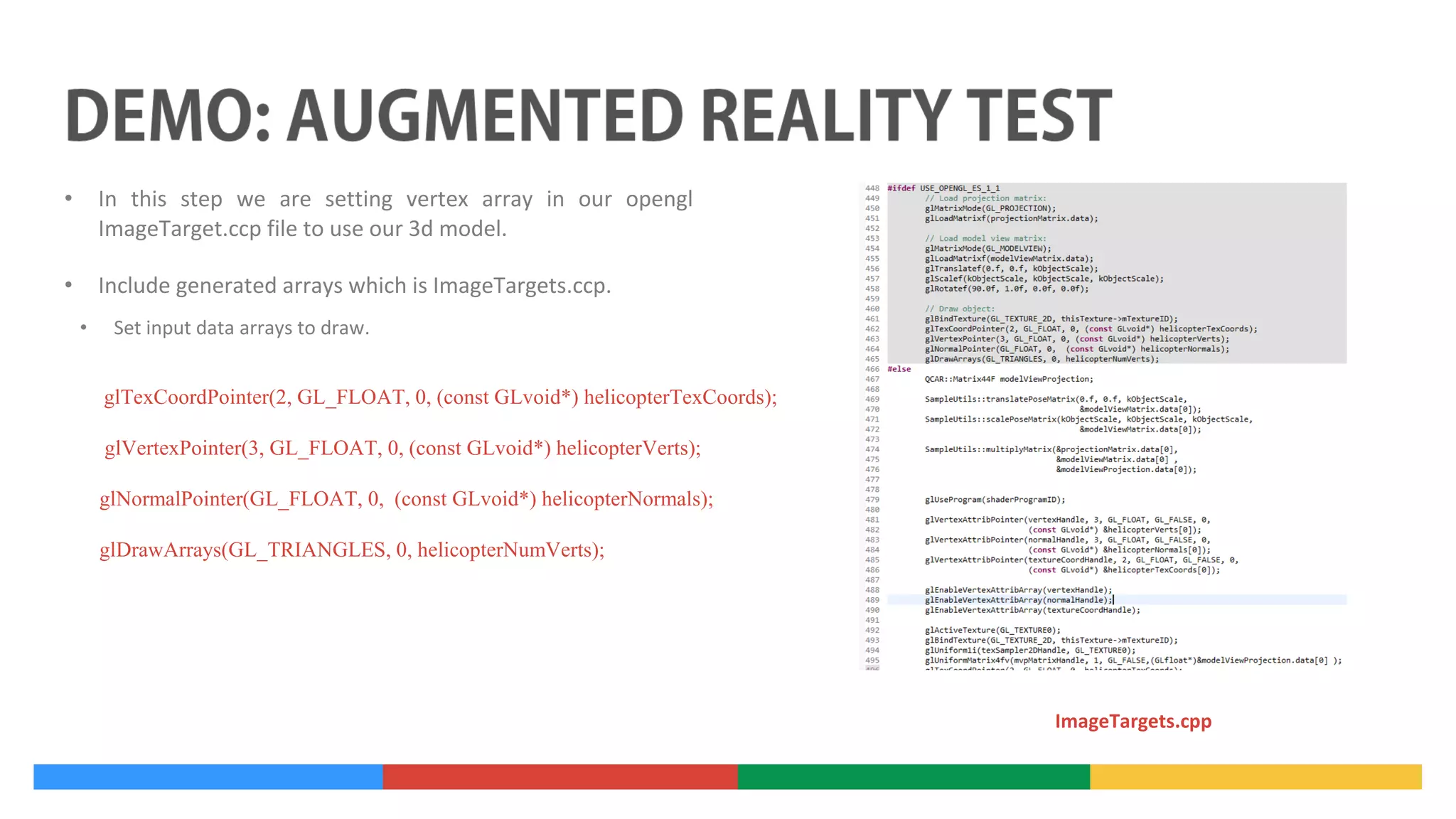

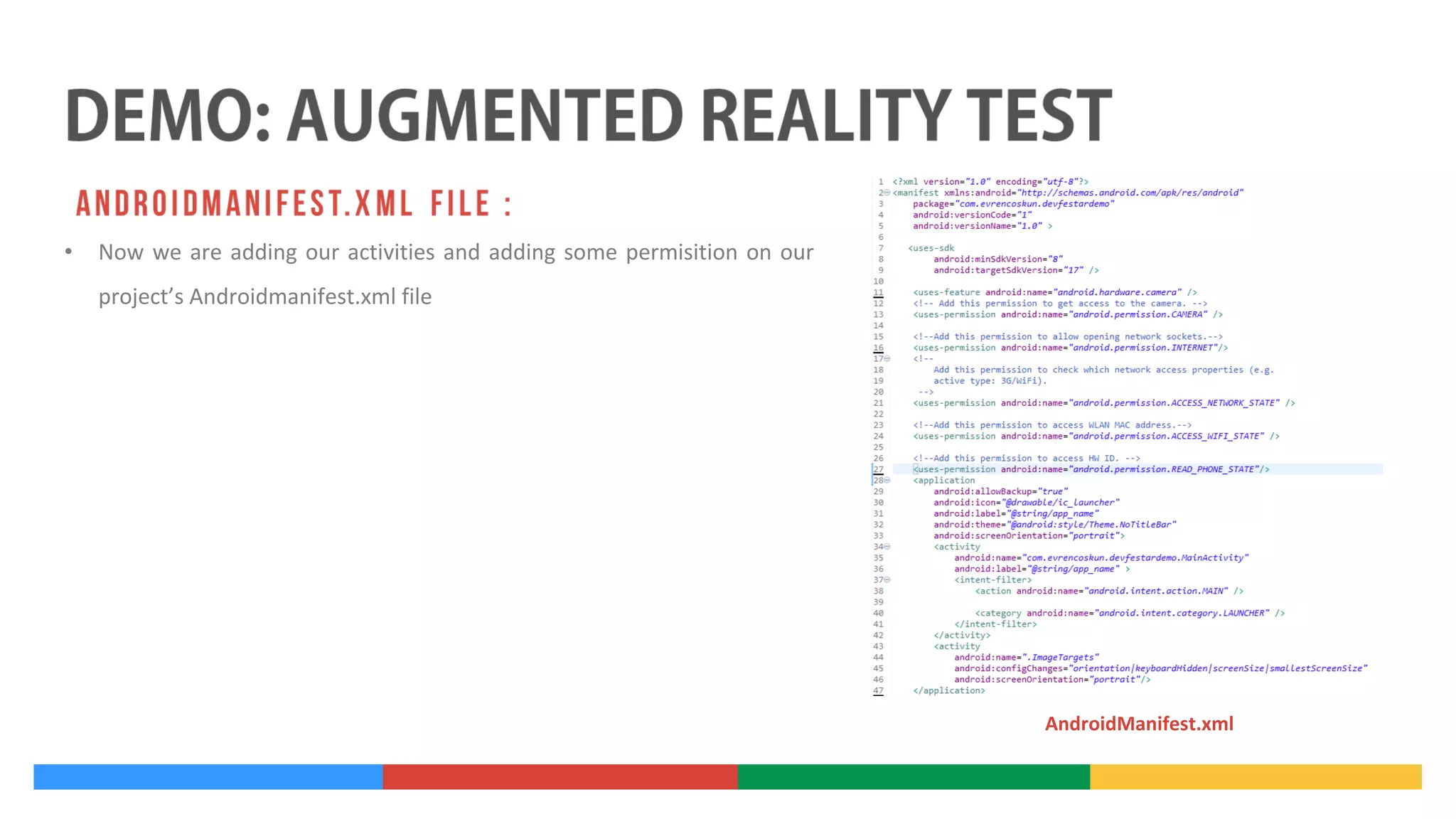

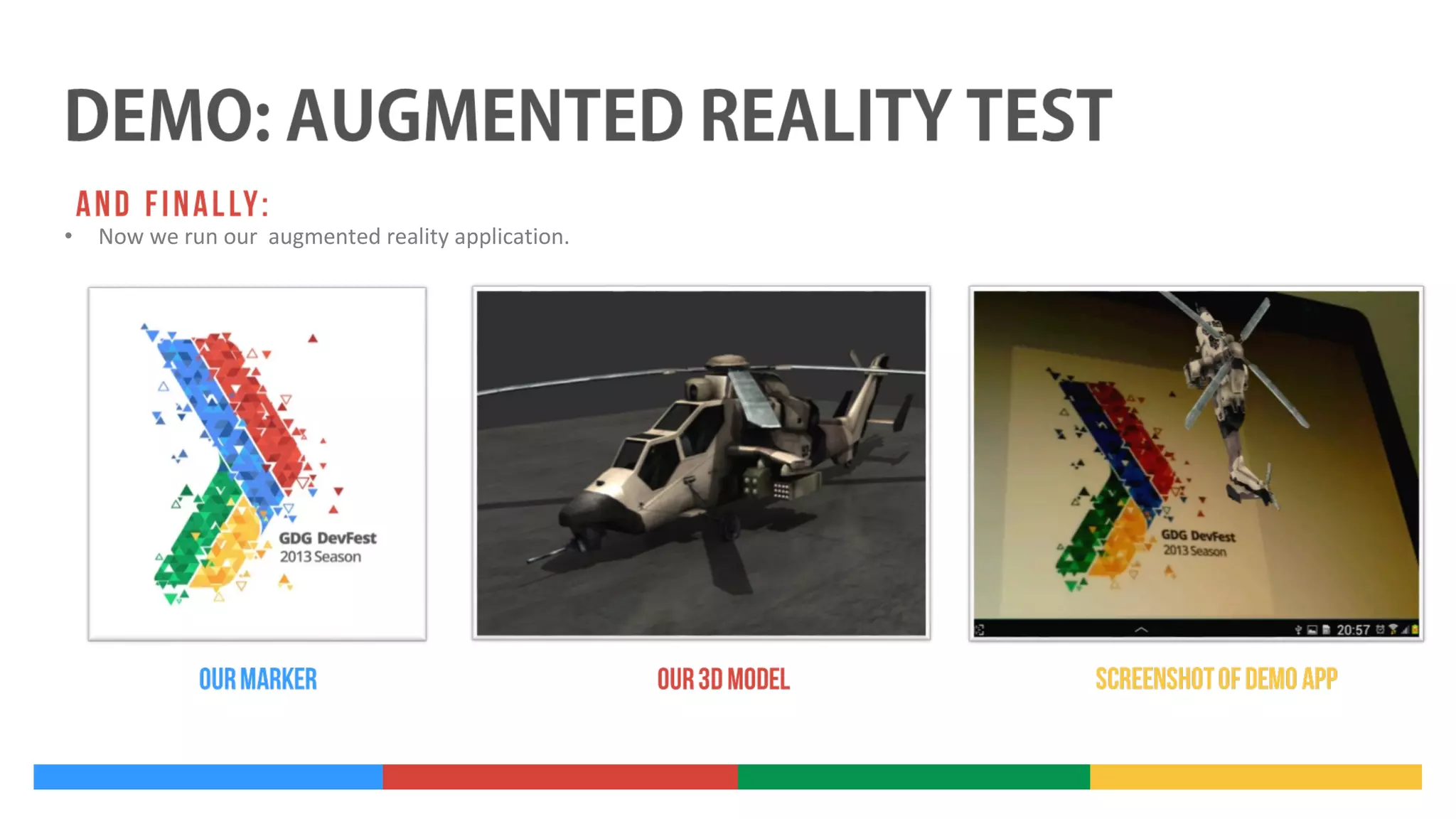

- Common methods for implementing AR, including marker-based AR, image target tracking, and location-based applications that use GPS, a compass, and other sensors.

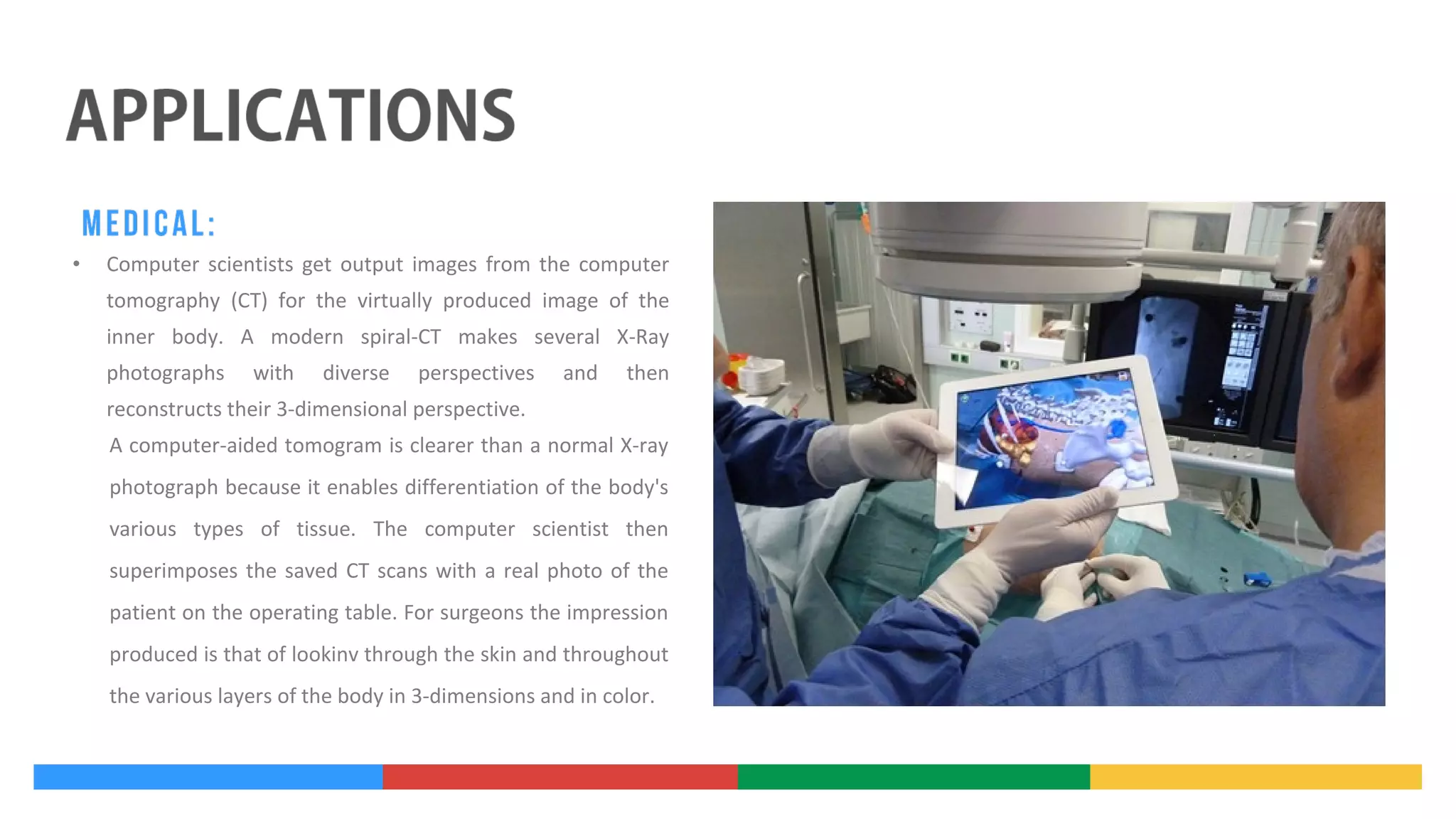

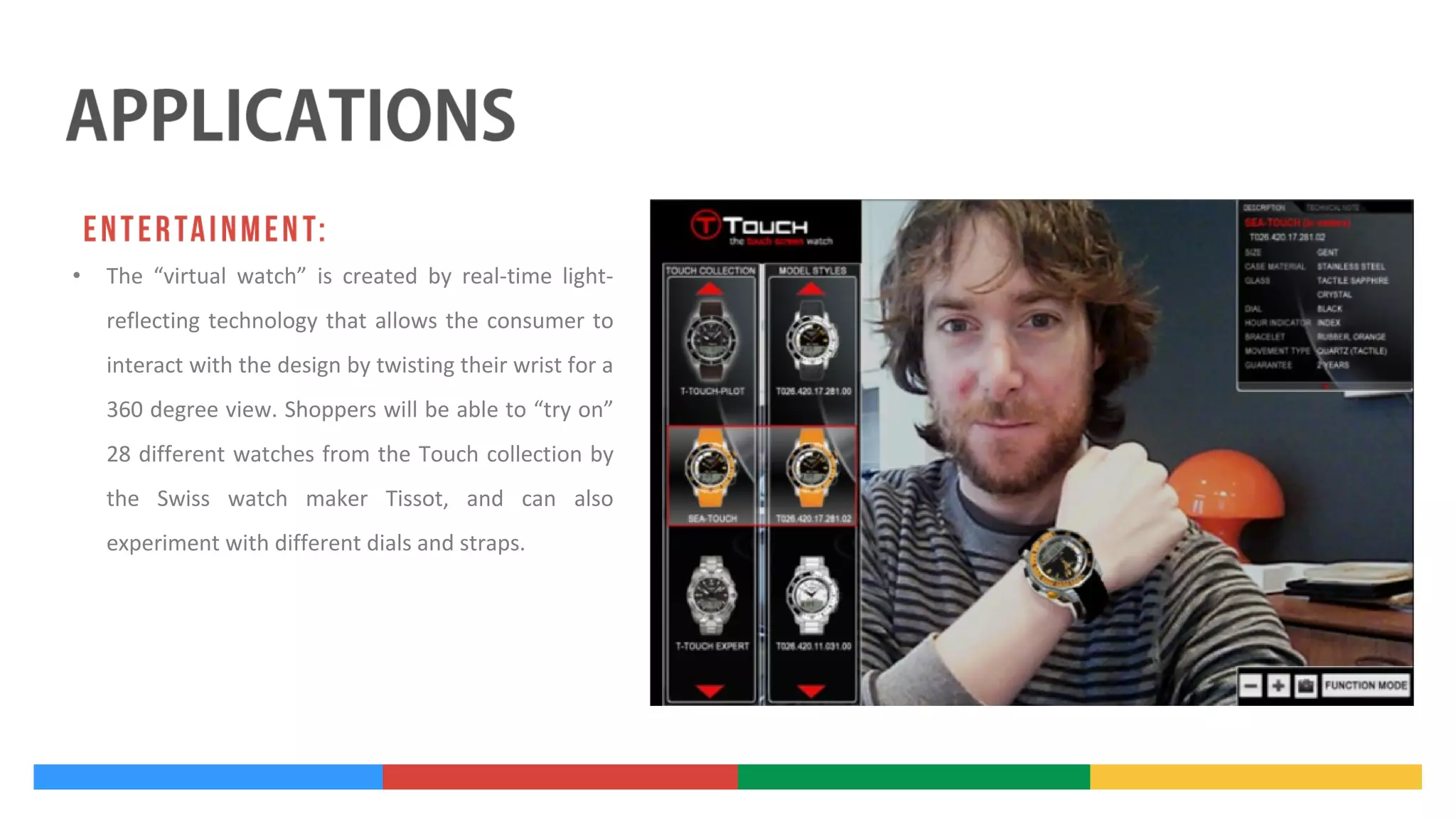

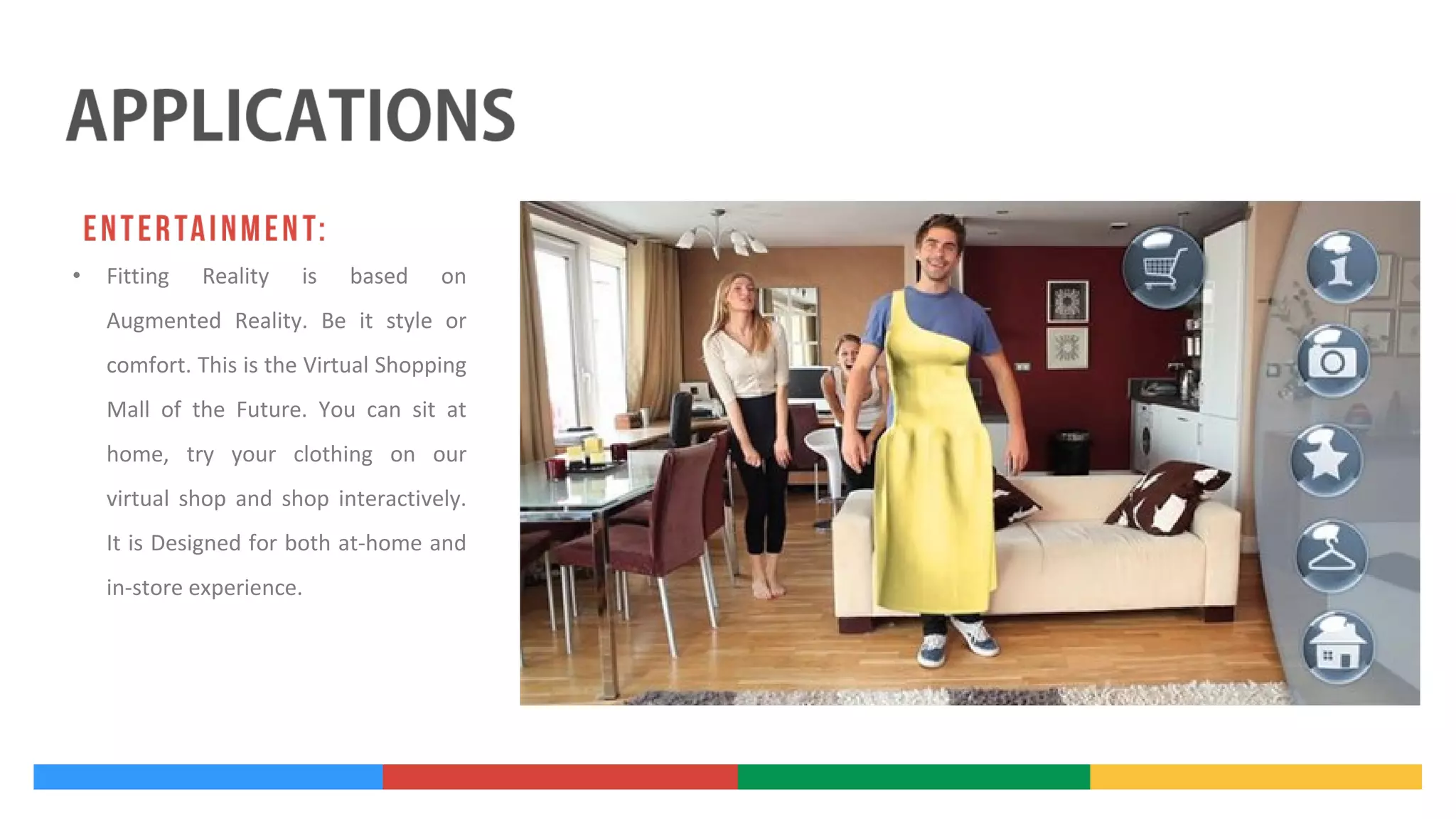

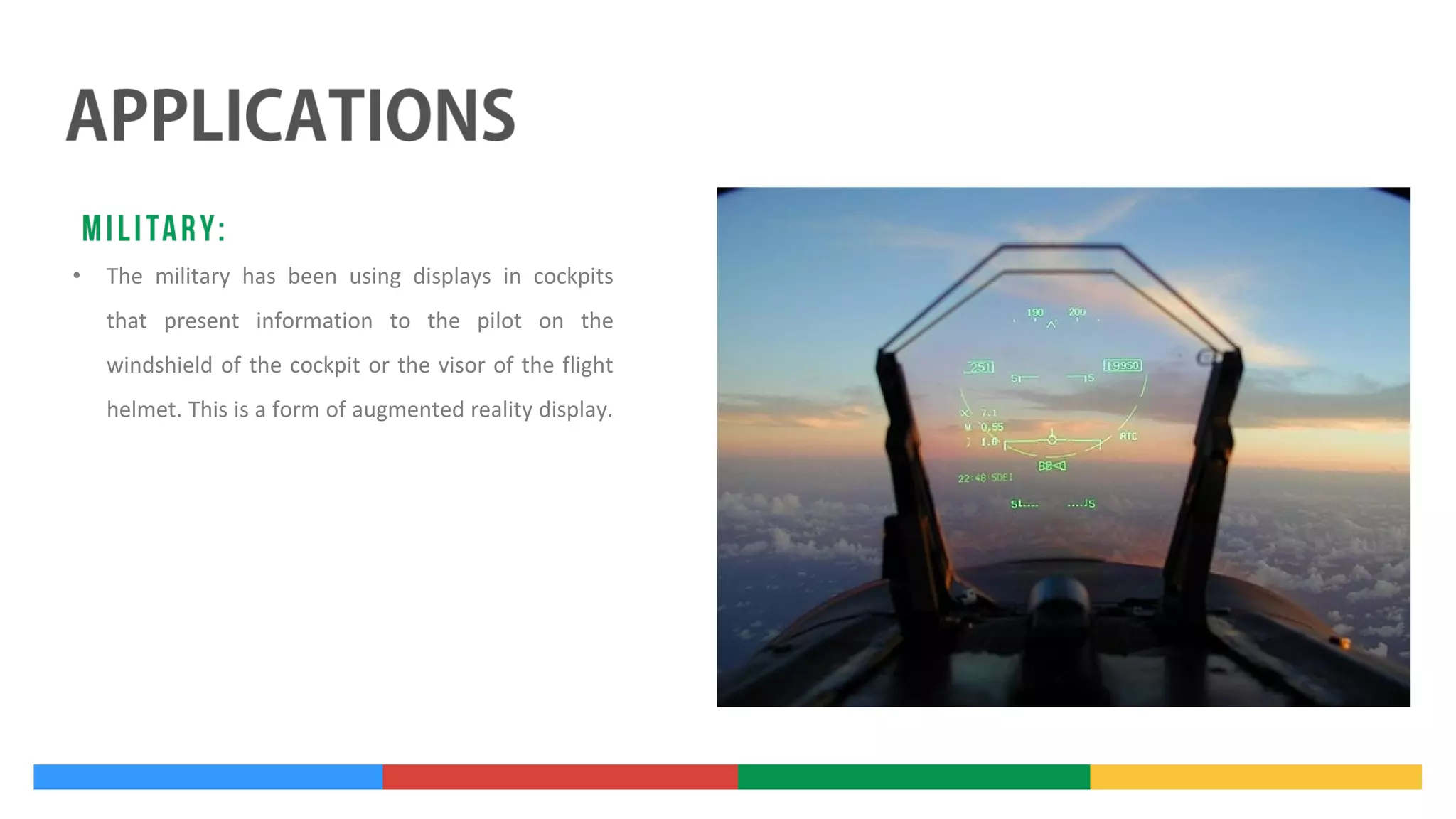

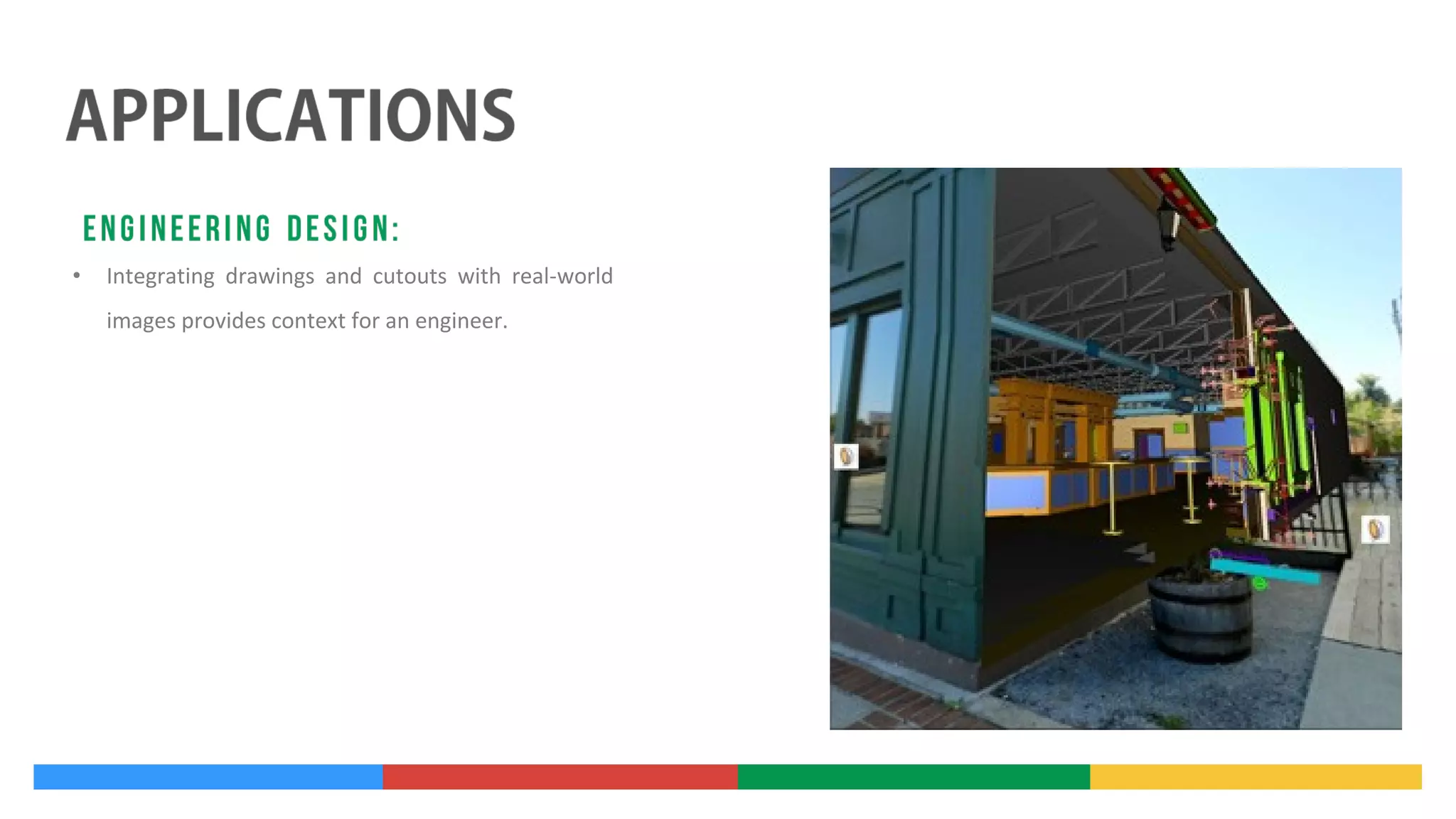

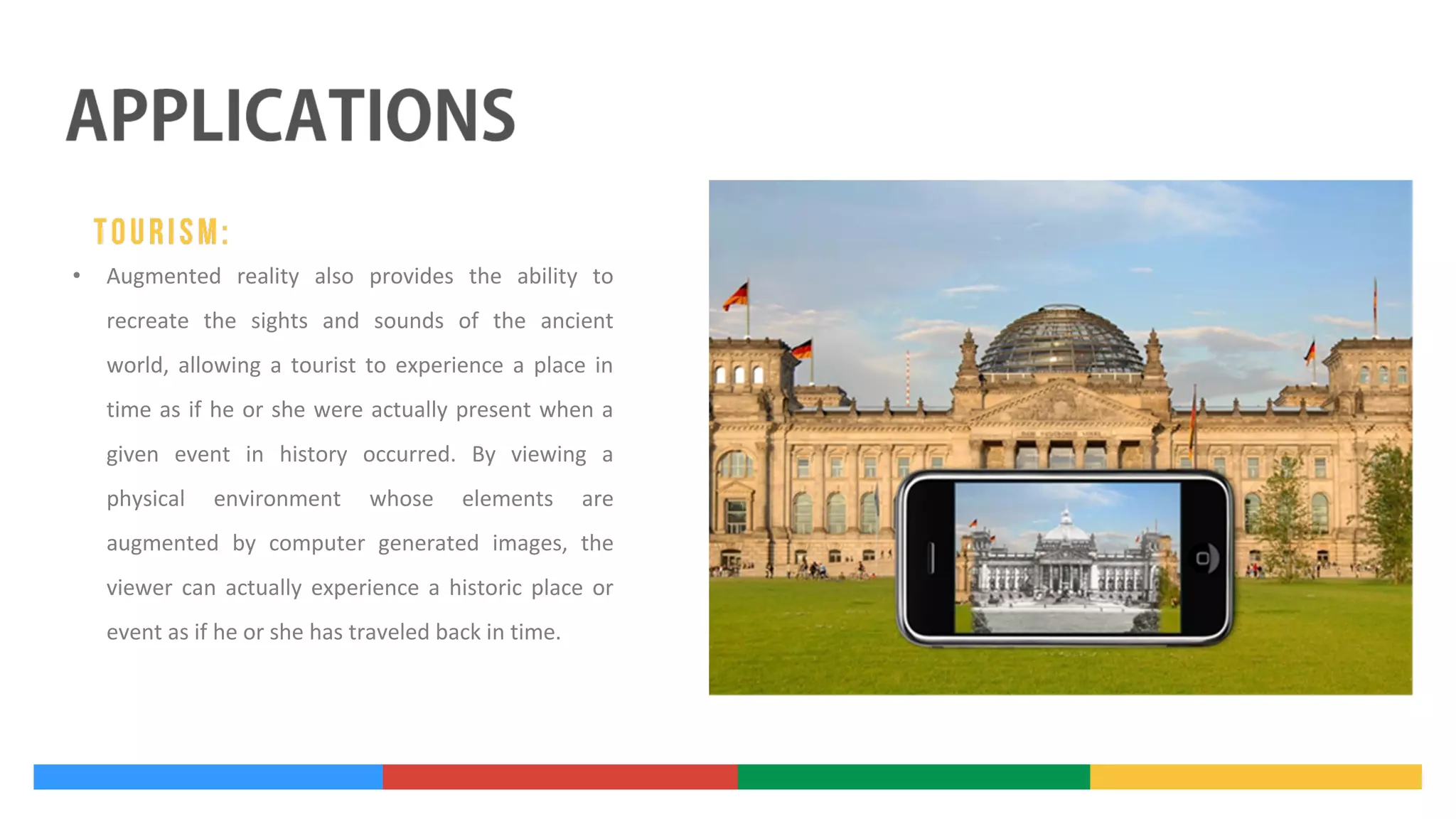

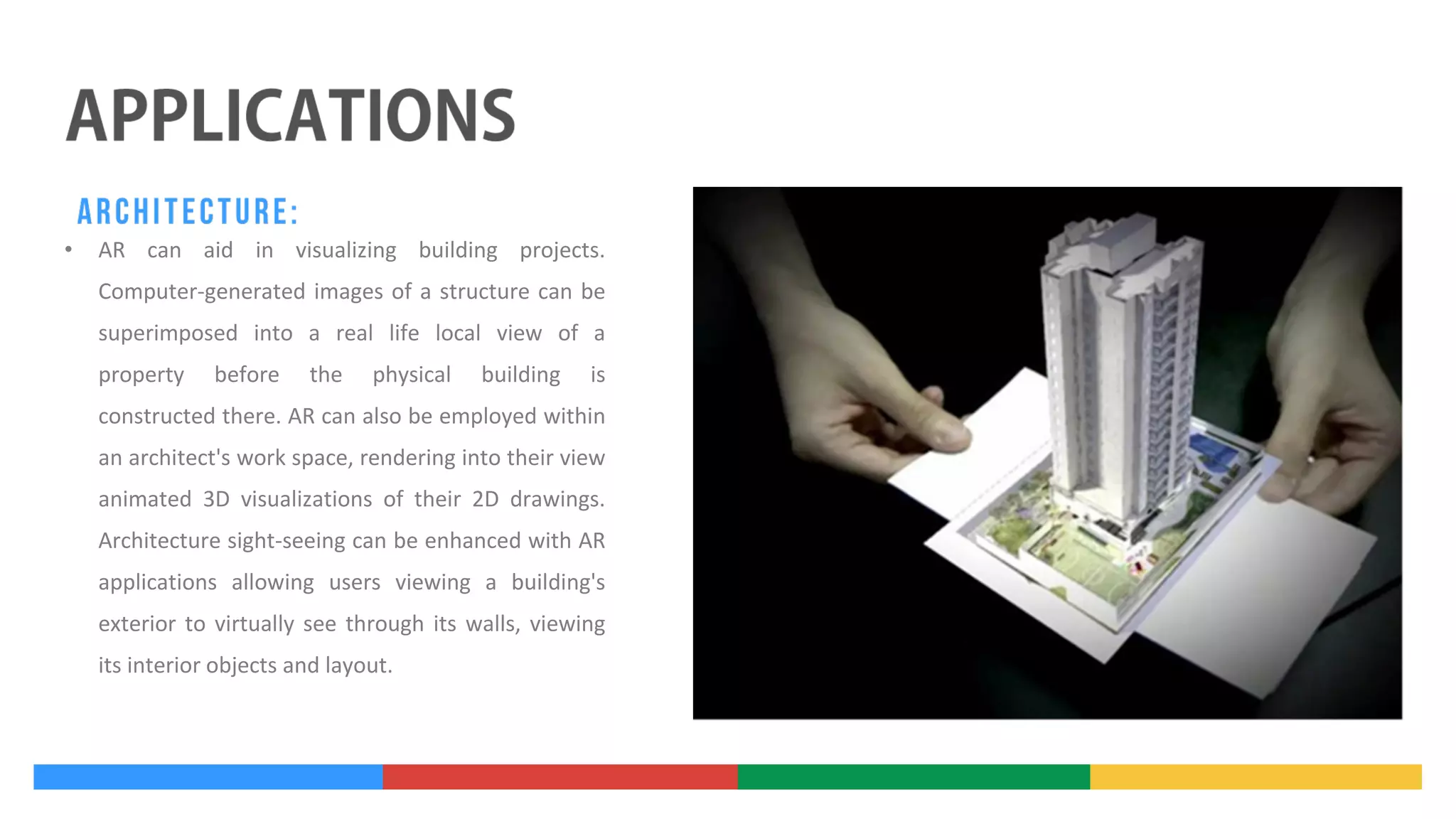

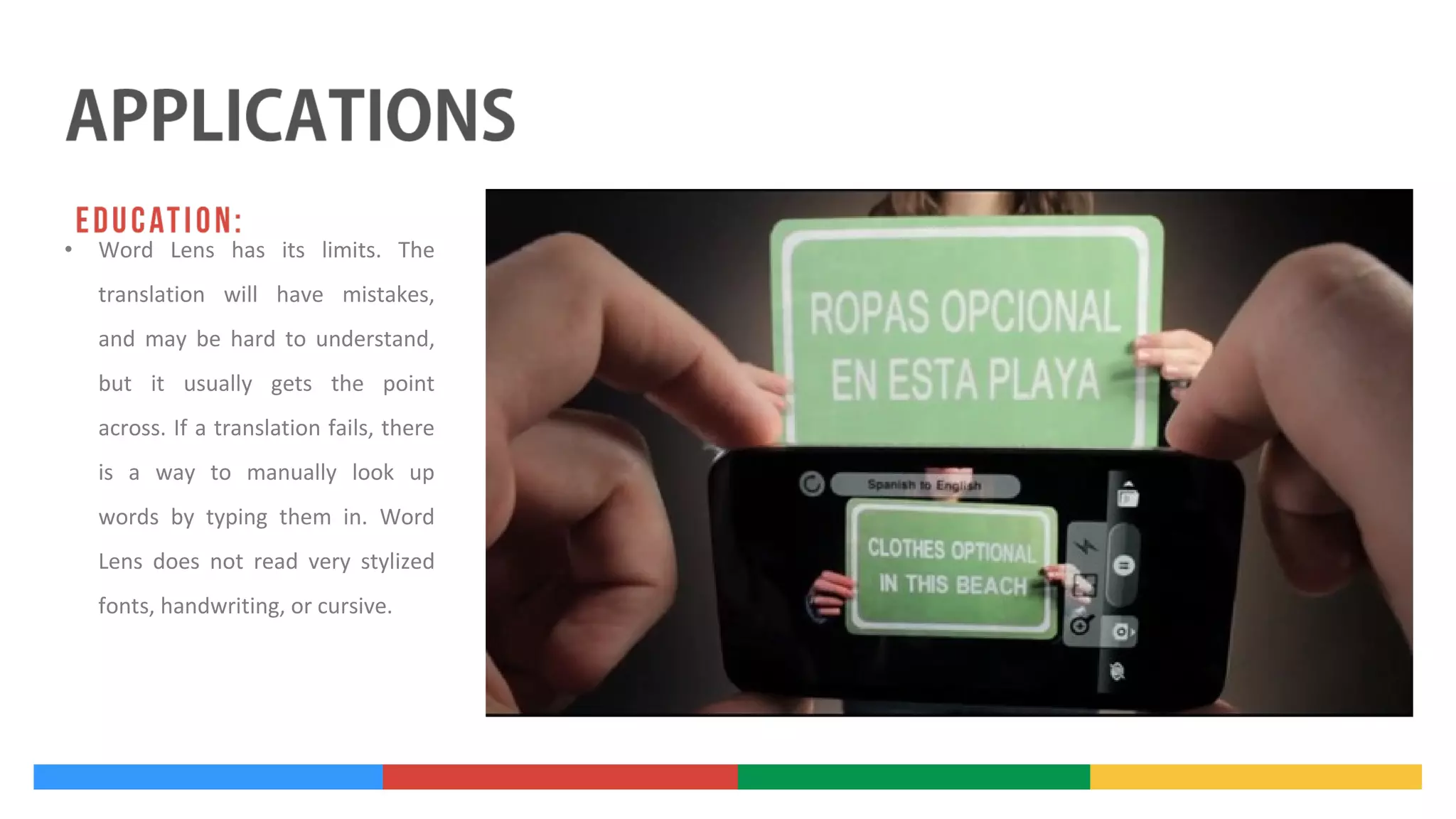

- Examples of current and potential future applications of AR in fields such as education, the military, engineering, and retail.
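The following is a minimal sketch of the location-based approach. It is illustrative and not taken from the original material. It shows how a GPS fix and a compass heading can determine whether a point of interest (POI) falls within the camera's view and where to draw its label on screen. The sketch assumes a spherical Earth, a heading that has already been corrected from magnetic to true north, a camera with a 60° horizontal field of view, and a 1080-pixel-wide screen. The function names `bearing_deg`, `haversine_m`, and `project_poi` are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius (spherical-Earth approximation)


def bearing_deg(lat1, lon1, lat2, lon2):
    """Initial great-circle bearing from point 1 to point 2, degrees clockwise from north."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    y = math.sin(dlon) * math.cos(phi2)
    x = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlon)
    return (math.degrees(math.atan2(y, x)) + 360.0) % 360.0


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance between two GPS fixes, in metres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlon = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def project_poi(user_lat, user_lon, heading_deg, poi_lat, poi_lon,
                hfov_deg=60.0, screen_width_px=1080):
    """Hypothetical helper: return (x_pixel, distance_m) if the POI lies inside the
    camera's horizontal field of view, else None.

    Assumes heading_deg is the camera's facing direction relative to TRUE north.
    """
    bearing = bearing_deg(user_lat, user_lon, poi_lat, poi_lon)
    # Signed angle from the camera's facing direction to the POI, wrapped to [-180, 180).
    rel = (bearing - heading_deg + 540.0) % 360.0 - 180.0
    half_fov = hfov_deg / 2.0
    if abs(rel) > half_fov:
        return None  # POI is outside the camera's view, so nothing is drawn
    # Pinhole-camera mapping: x = cx + f * tan(rel), with focal length f derived from the FOV.
    cx = screen_width_px / 2.0
    focal_px = cx / math.tan(math.radians(half_fov))
    x = cx + focal_px * math.tan(math.radians(rel))
    return x, haversine_m(user_lat, user_lon, poi_lat, poi_lon)


# Usage: a POI due east of the user while the phone faces east lands mid-screen.
print(project_poi(0.0, 0.0, 90.0, 0.0, 0.01))
```

The sketch maps angles to pixels with the pinhole-camera `tan` relation rather than a linear angle-to-pixel scale. This keeps labels aligned with the camera image toward the edges of the frame. A real application would also smooth the noisy GPS and compass readings and use the device's pitch to place labels vertically. Both steps are omitted here.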