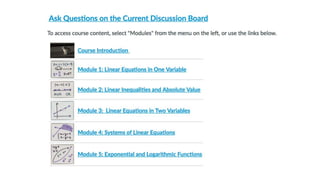

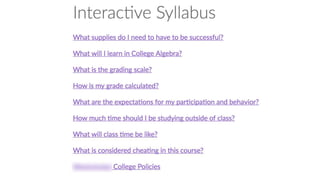

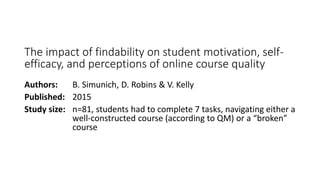

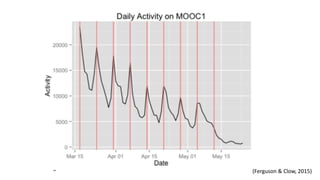

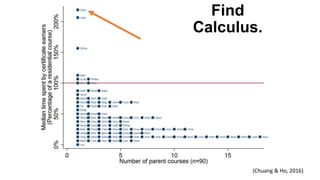

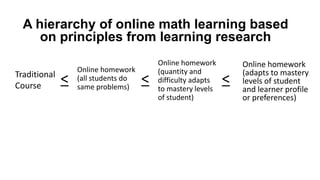

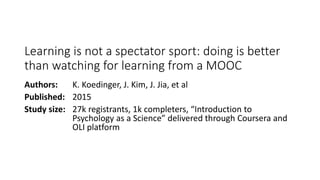

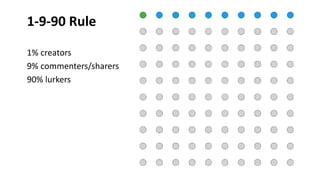

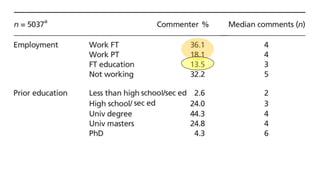

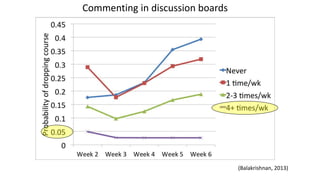

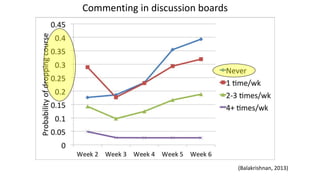

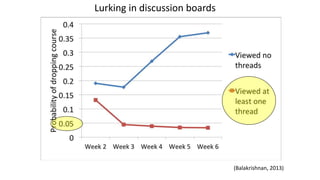

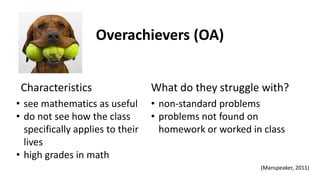

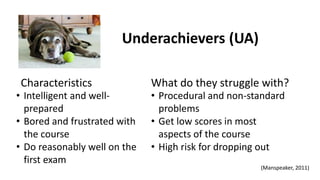

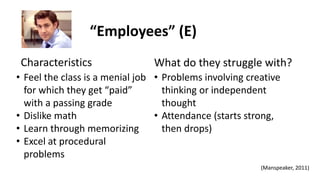

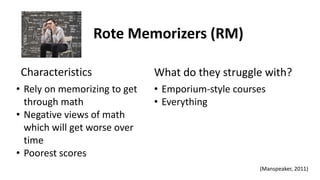

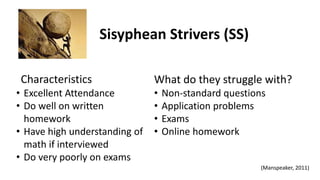

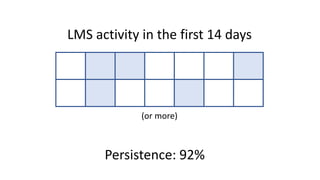

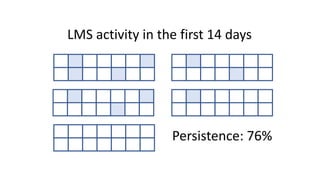

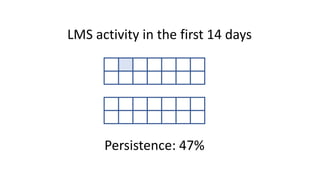

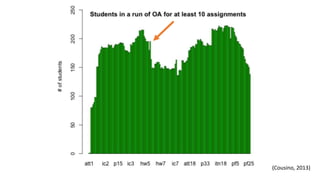

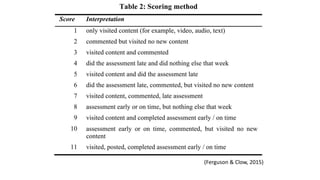

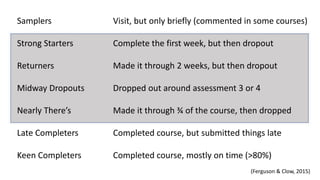

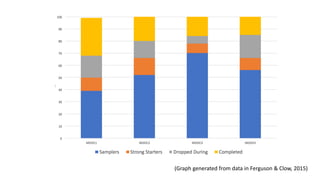

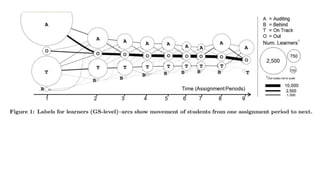

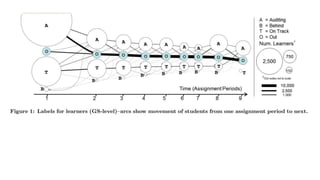

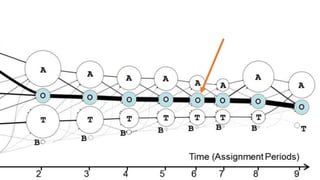

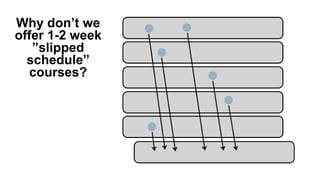

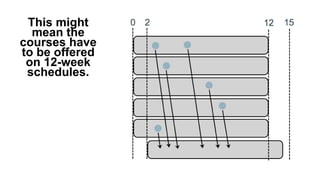

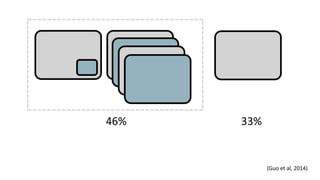

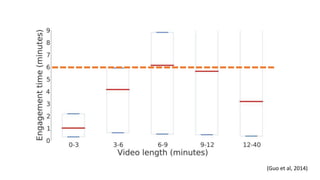

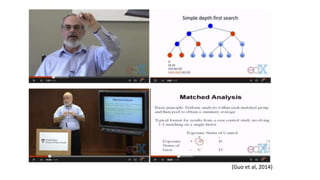

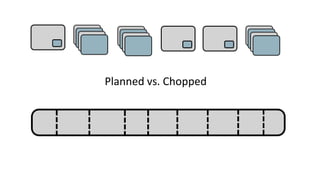

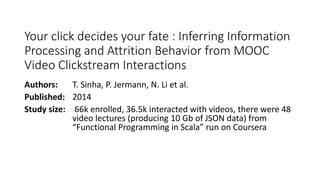

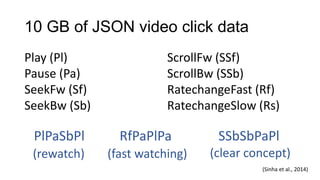

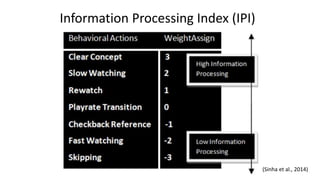

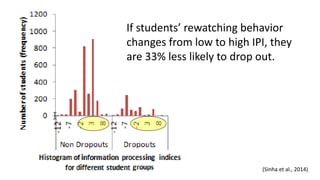

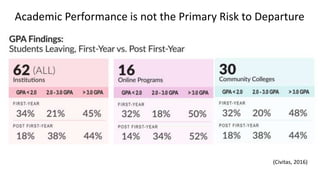

The document summarizes 10 lessons learned from large-scale online learning initiatives:

1) Improve how learners find information within courses.
2) Encourage more frequent student engagement.
3) Use adaptive online math learning systems.
4) Get students to participate in learning communities.
5) Use student profiles to strategize interventions.
6) Anticipate and support students at risk of disengagement.
7) Offer catch-up options for students who fall behind.
8) Optimize instructional video design.
9) Allow students to struggle with concepts rather than always providing solutions.
10) Ensure courses support curiosity, challenge, and creativity.