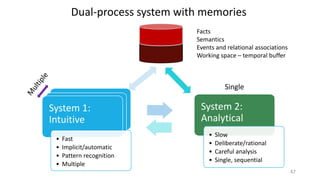

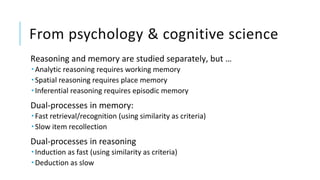

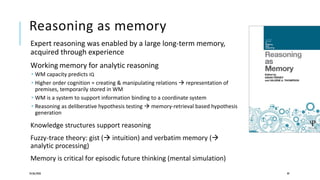

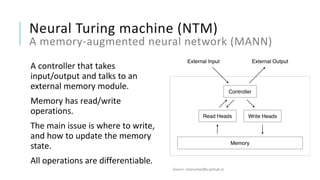

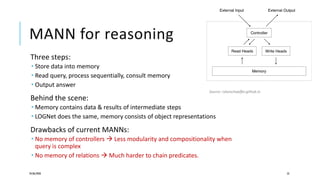

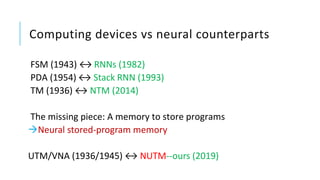

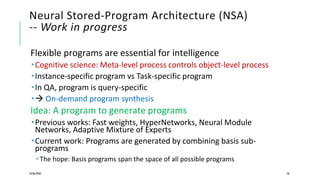

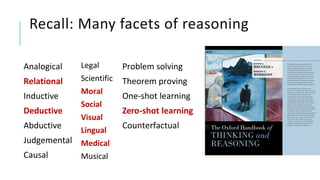

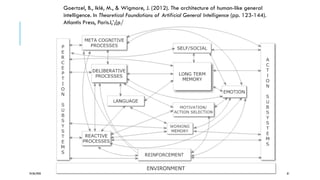

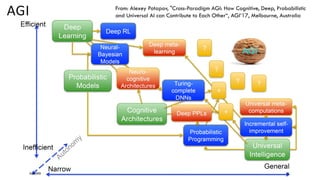

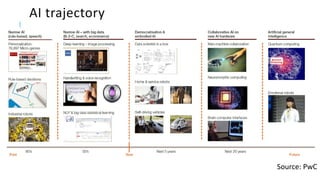

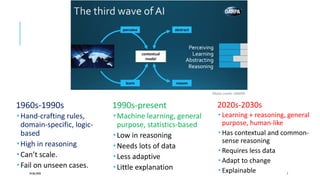

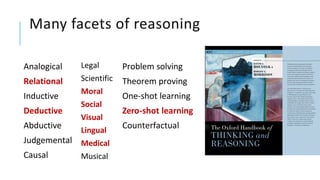

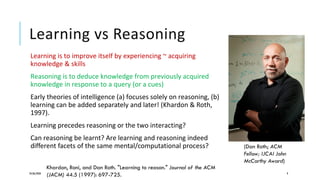

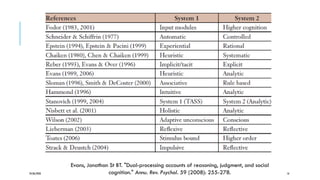

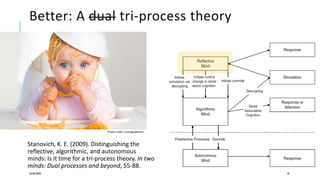

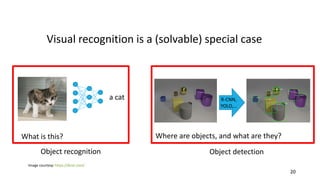

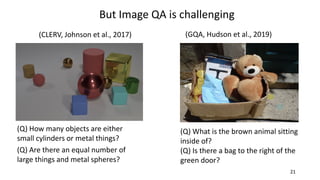

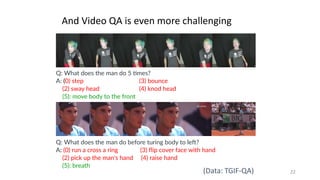

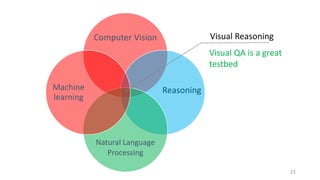

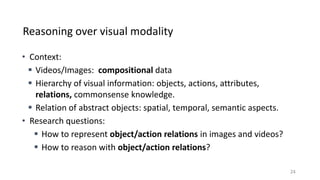

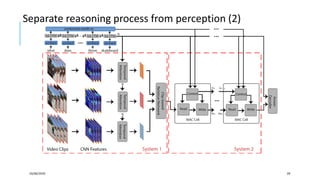

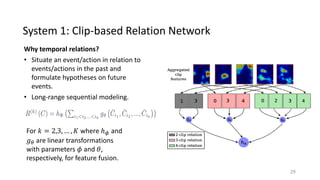

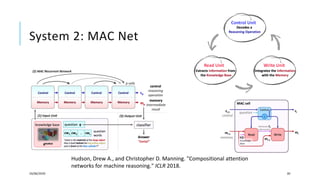

The document discusses dual-process theories of reasoning, emphasizing the distinction between intuitive and analytical reasoning systems. It explores how machine learning and reasoning can be integrated, the challenges of visual question answering, and the importance of memory in reasoning processes. The text proposes advancements in cognitive architectures and neural networks aimed at improving machine reasoning capabilities.

![“[…] one cannot hope to understand reasoning without understanding the memory processes […]” (Thompson and Feeney, 2014). Slide 46, 24/06/2020](https://image.slidesharecdn.com/machine-reasoninga2i2-full-200624062420/85/Machine-Reasoning-at-A2I2-Deakin-University-46-320.jpg)