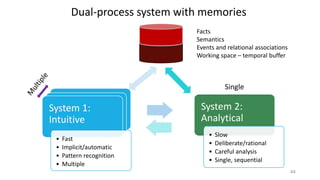

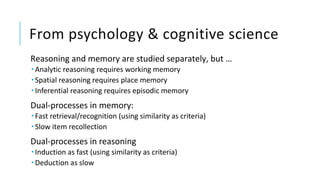

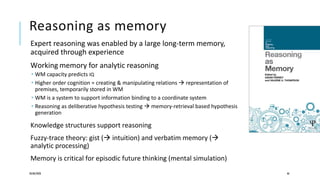

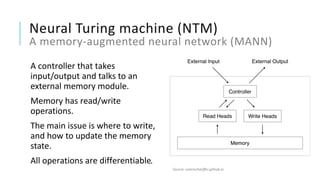

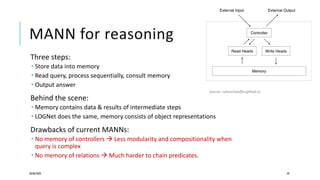

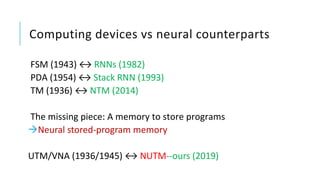

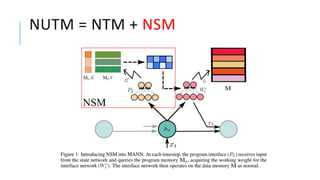

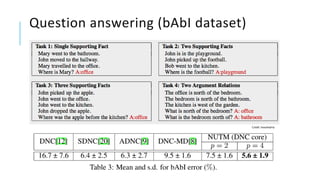

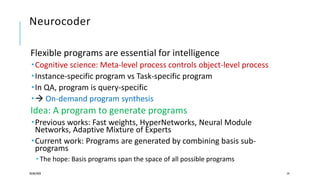

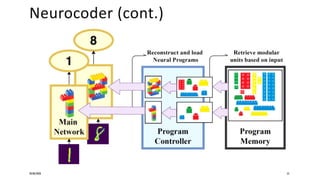

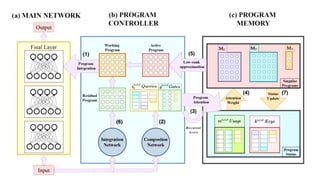

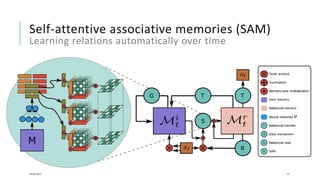

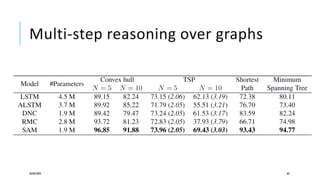

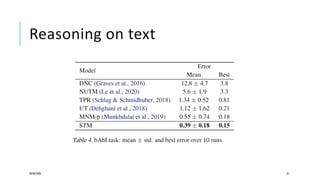

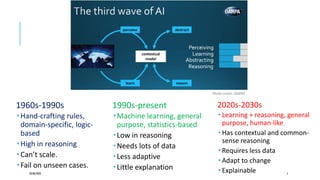

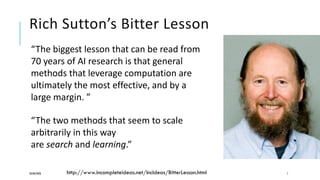

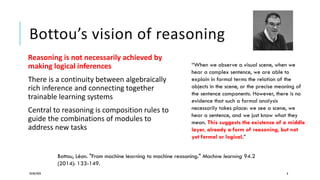

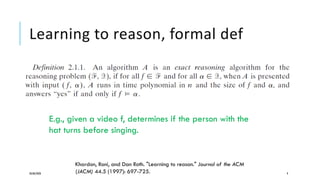

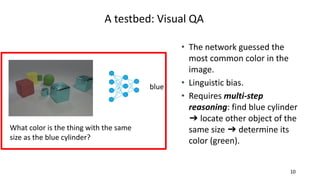

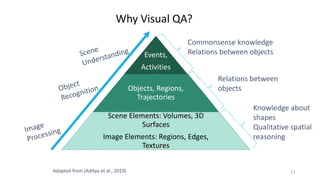

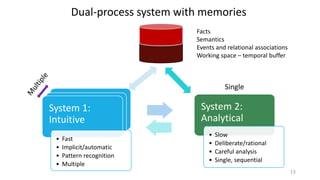

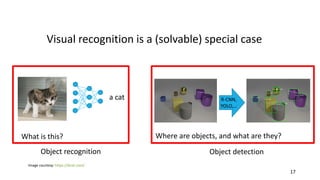

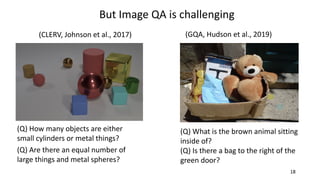

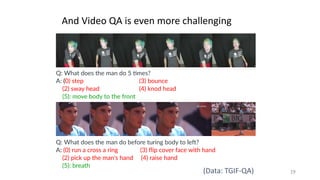

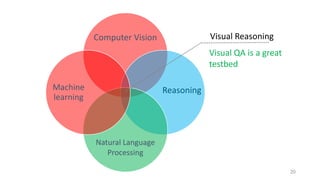

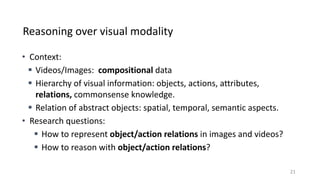

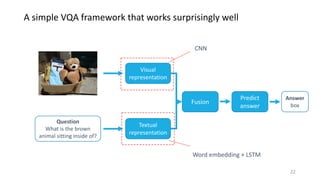

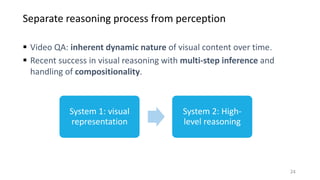

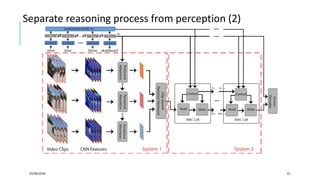

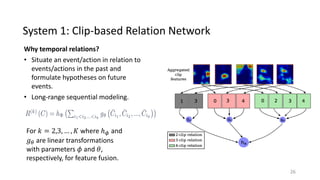

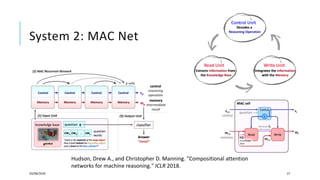

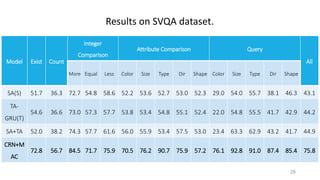

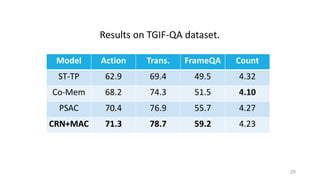

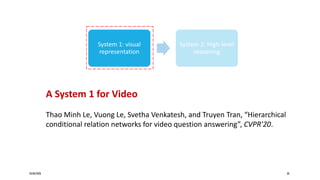

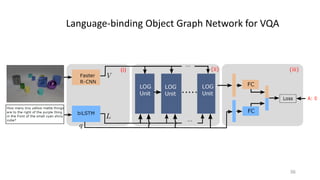

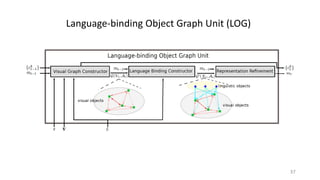

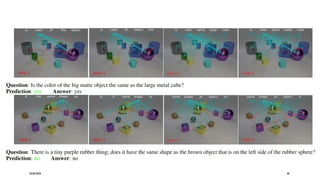

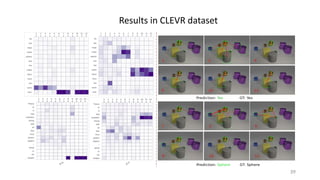

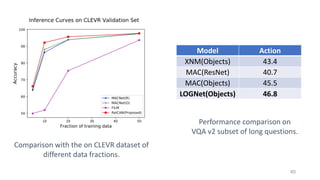

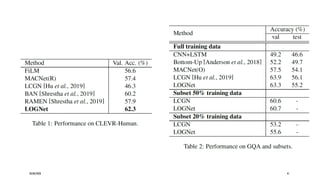

The document discusses the evolution of machine reasoning and learning, comparing various cognitive architectures and their limitations from the 1960s to the present. It explores the interplay between learning and reasoning, emphasizing the need for contextual and common-sense reasoning in AI systems, and highlights advancements in visual question answering frameworks. The insights from dual-process theories and neural Turing machines are presented as essential components for developing more effective reasoning capabilities in artificial intelligence.

![“[…] one cannot hope to understand reasoning without understanding the memory processes […]” (Thompson and Feeney, 2014). Slide 43, 20/08/2020](https://image.slidesharecdn.com/machine-reasoning-200820041317/85/Machine-reasoning-43-320.jpg)