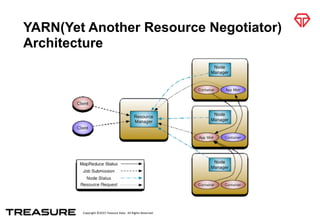

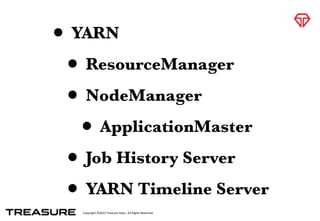

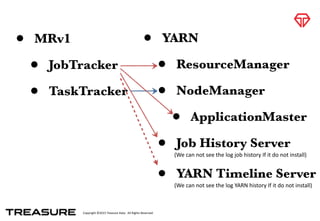

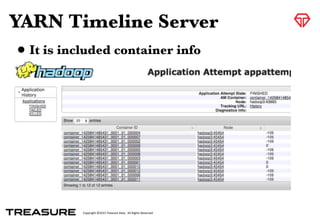

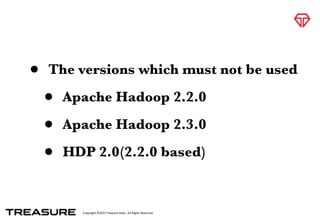

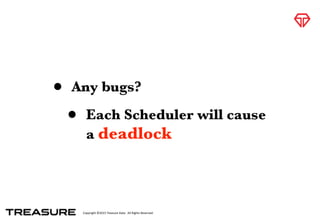

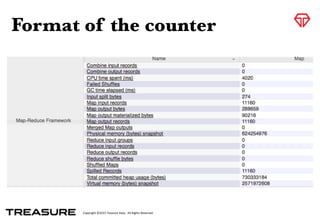

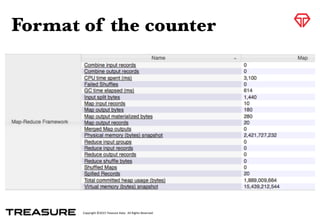

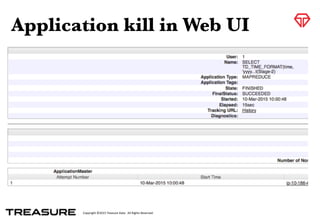

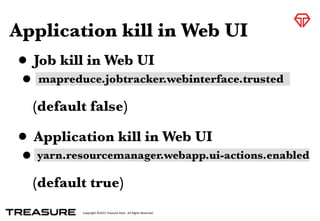

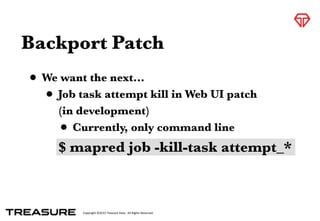

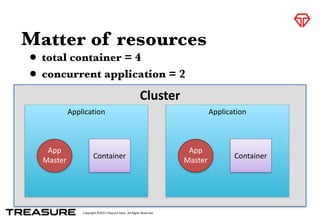

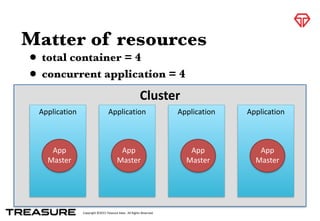

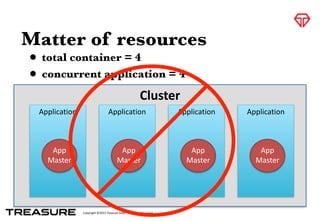

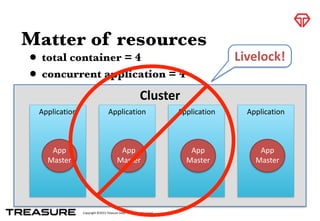

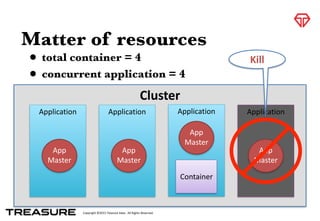

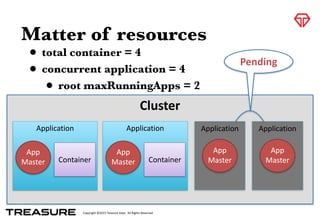

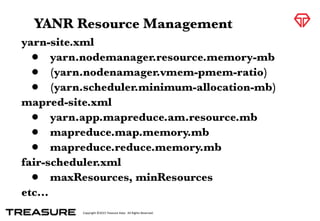

This document summarizes Ryu Kobayashi's presentation on HDP 2 (Hortonworks Data Platform 2) and operating YARN. The presentation introduced YARN, the resource management framework added in Hadoop 2.0. It described YARN's architecture and how it differs from MapReduce v1 (MRv1), the framework it replaced. It also highlighted key considerations for managing resources under YARN and pointed out potential bugs in older versions of Hadoop.
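One resource-management point that often surprises operators is how YARN sizes containers. The ResourceManager does not grant the exact memory an application asks for. It rounds the request up to a multiple of an allocation step and rejects requests above the configured maximum. The sketch below is a simplified, hypothetical model of that behavior and is not taken from the presentation. `normalize_memory` and `containers_per_node` are illustrative helpers. The defaults assume stock Hadoop 2 values for `yarn.scheduler.minimum-allocation-mb` (1024) and `yarn.scheduler.maximum-allocation-mb` (8192). It also assumes the step equals the minimum allocation, as with the Capacity Scheduler's default calculator. The Fair Scheduler uses a separate increment setting.

```python
from typing import Optional


def normalize_memory(request_mb: int,
                     min_mb: int = 1024,
                     max_mb: int = 8192,
                     step_mb: Optional[int] = None) -> int:
    """Hypothetical model of YARN container memory normalization.

    Assumes the rounding step defaults to the minimum allocation, and that
    requests above the maximum are rejected (roughly what the
    ResourceManager does with oversized requests).
    """
    if request_mb > max_mb:
        raise ValueError(f"request of {request_mb} MB exceeds maximum {max_mb} MB")
    step = step_mb or min_mb
    # Never grant less than the minimum allocation.
    mb = max(request_mb, min_mb)
    # Round up to the next multiple of the step.
    rounded = ((mb + step - 1) // step) * step
    # Clamp in case max_mb is not itself a multiple of the step.
    return min(rounded, max_mb)


def containers_per_node(node_mb: int, request_mb: int, **kwargs) -> int:
    """How many equally sized containers fit in one NodeManager's memory
    (node_mb models yarn.nodemanager.resource.memory-mb)."""
    return node_mb // normalize_memory(request_mb, **kwargs)


if __name__ == "__main__":
    print(normalize_memory(1500))           # rounded up to 2048
    print(normalize_memory(500))            # raised to the 1024 minimum
    print(containers_per_node(8192, 1500))  # 4, not the naive 8192 // 1500 == 5
```

The last line shows why this matters when planning capacity. Requests that sit just above a step boundary waste memory on every container, so a node hosts fewer containers than simple division suggests.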