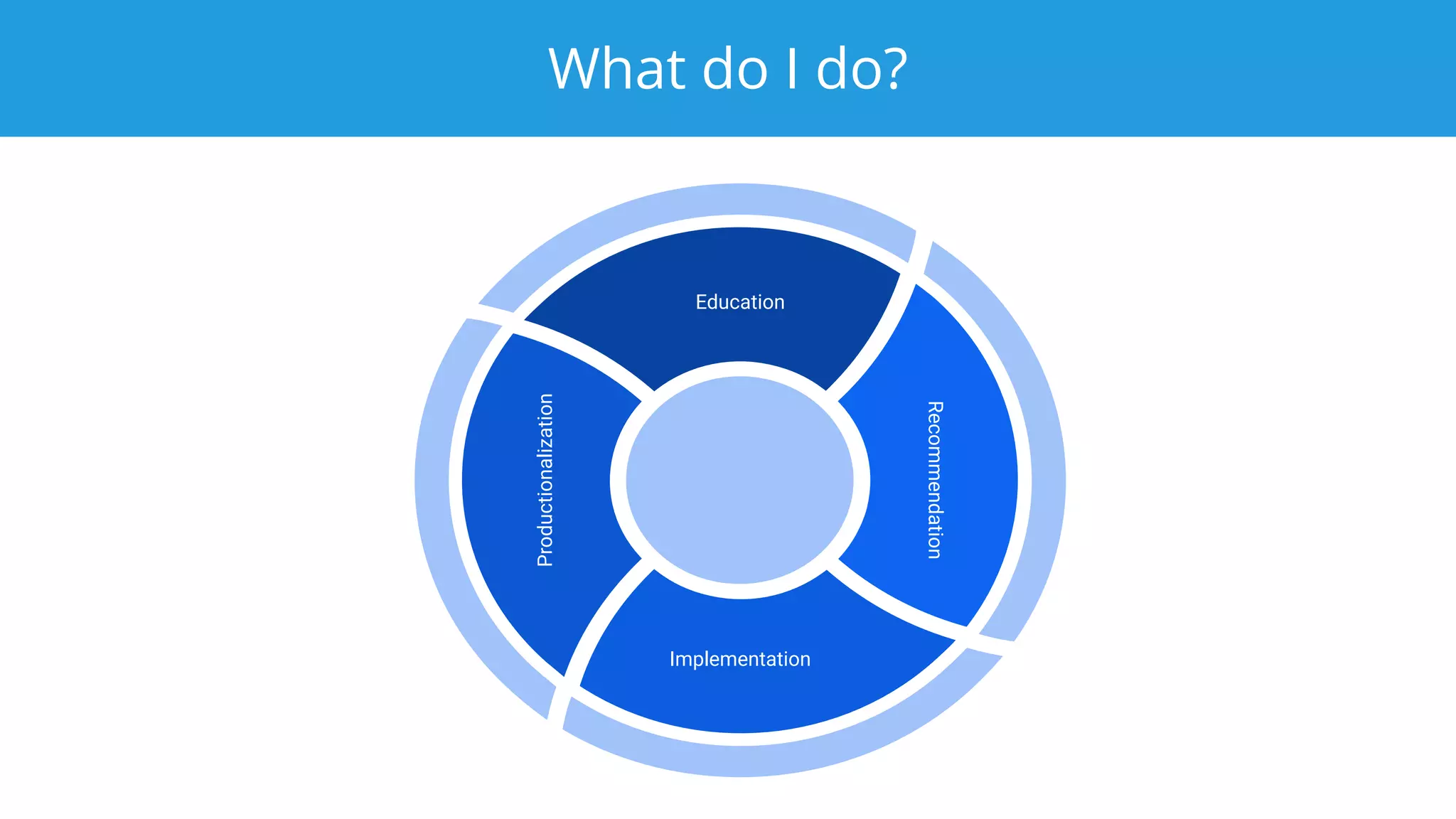

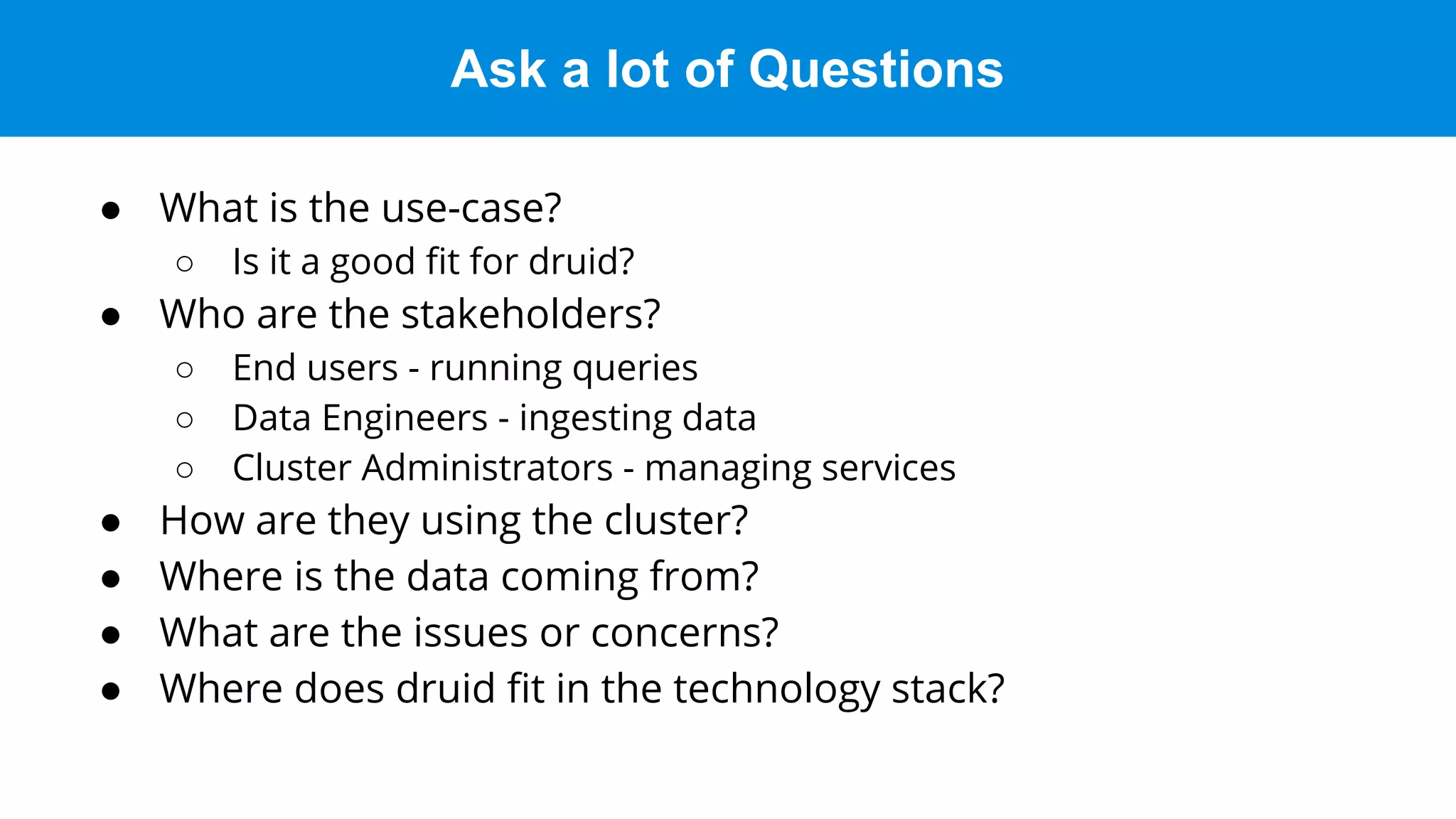

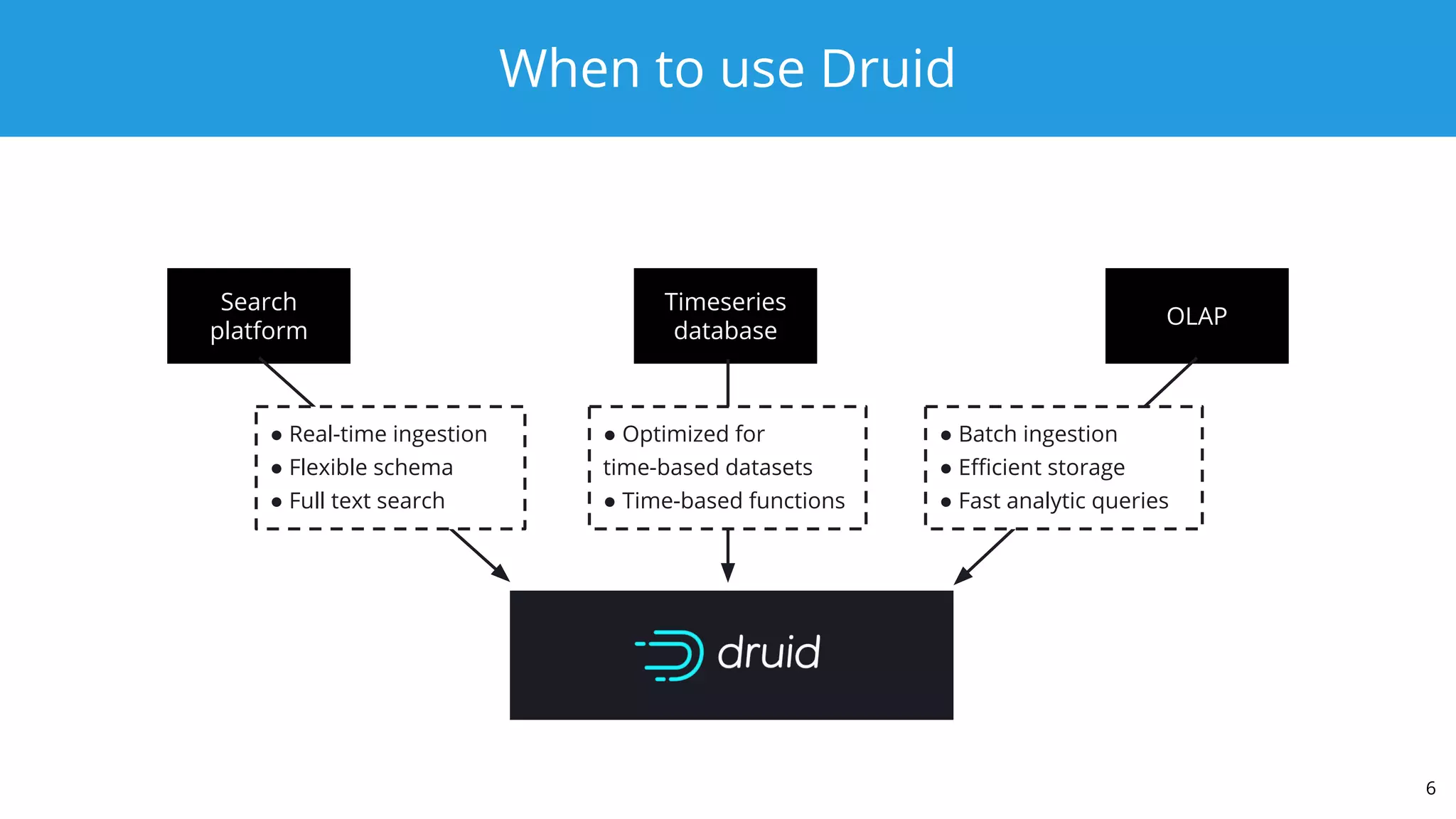

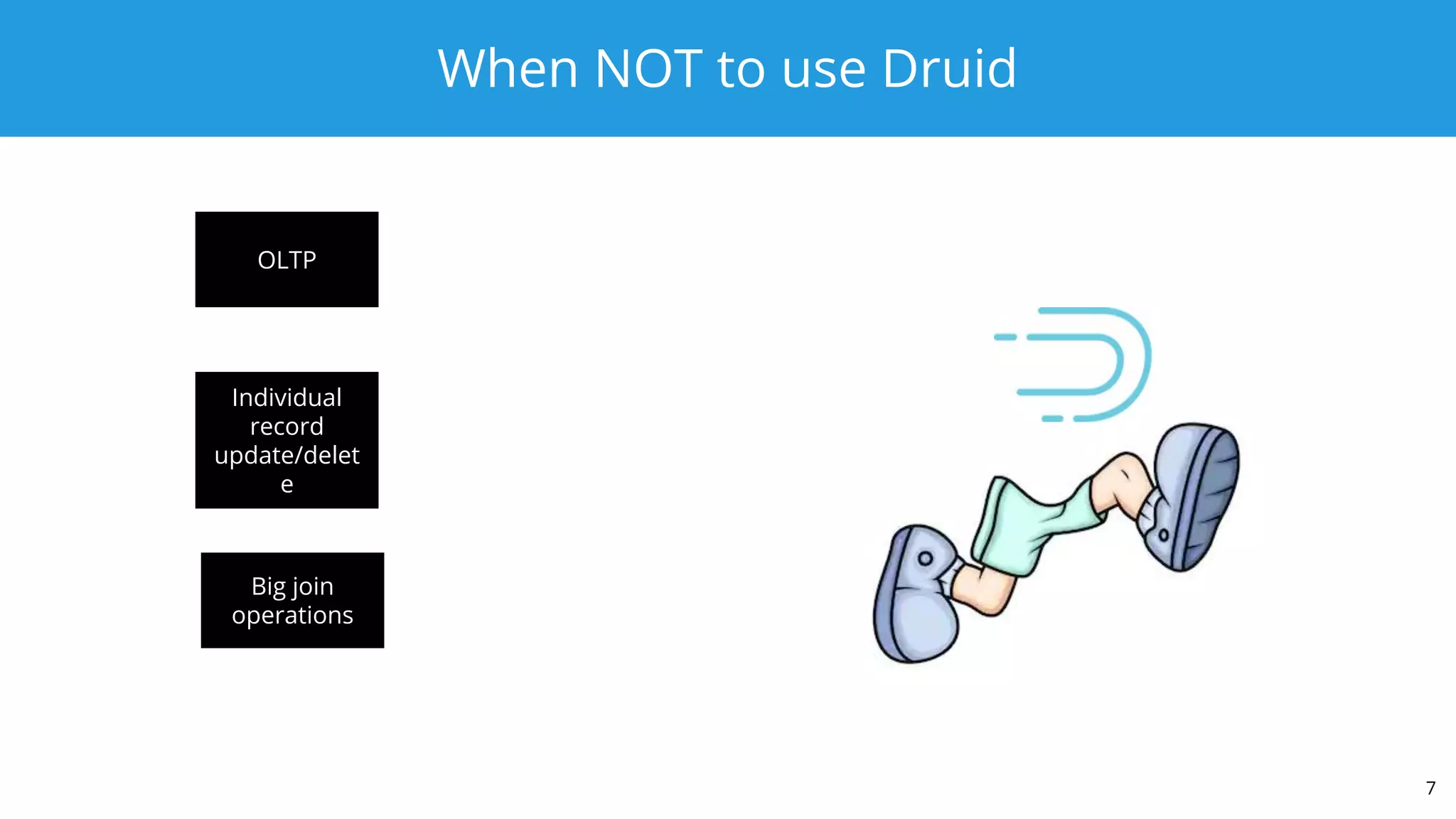

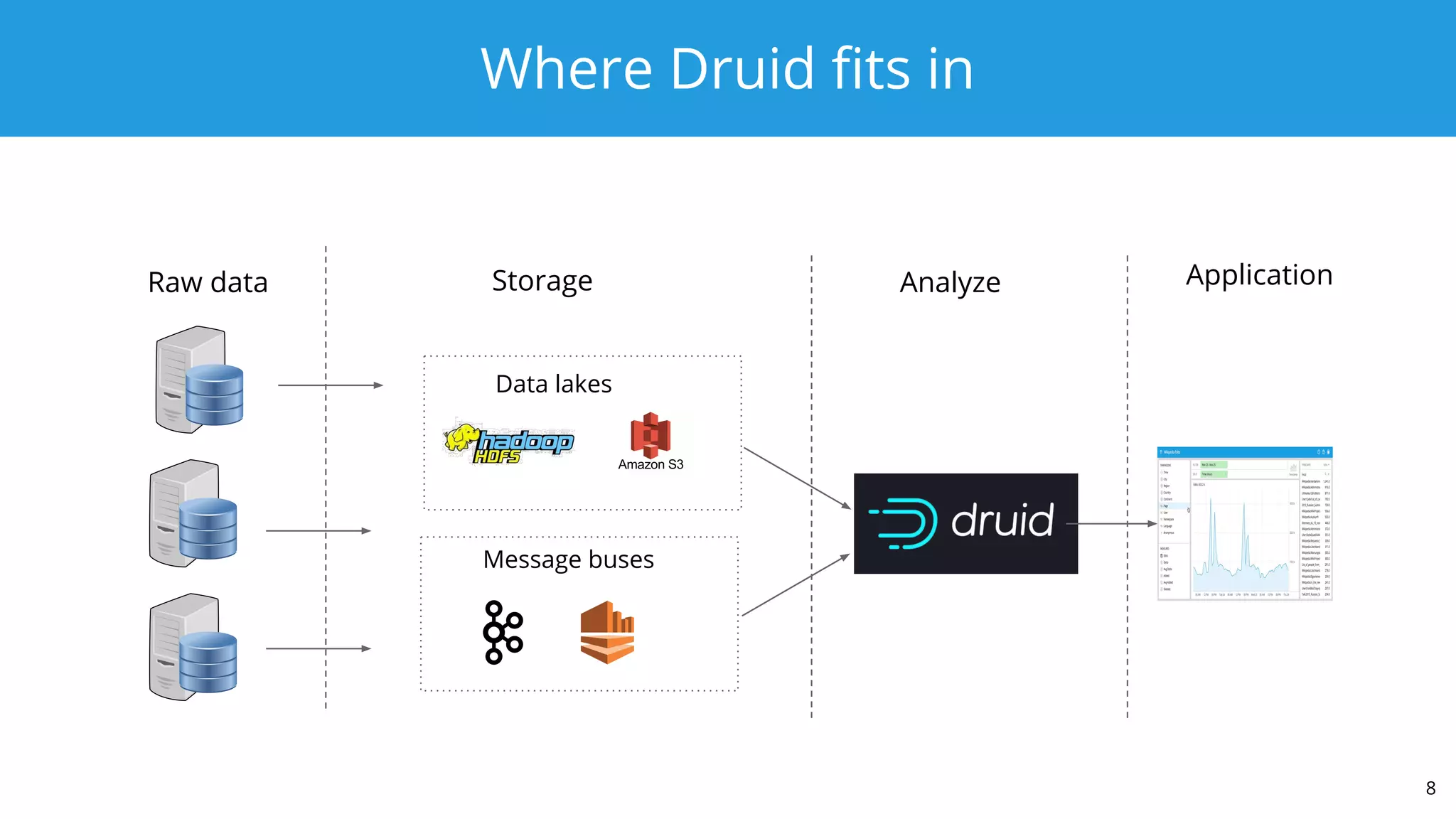

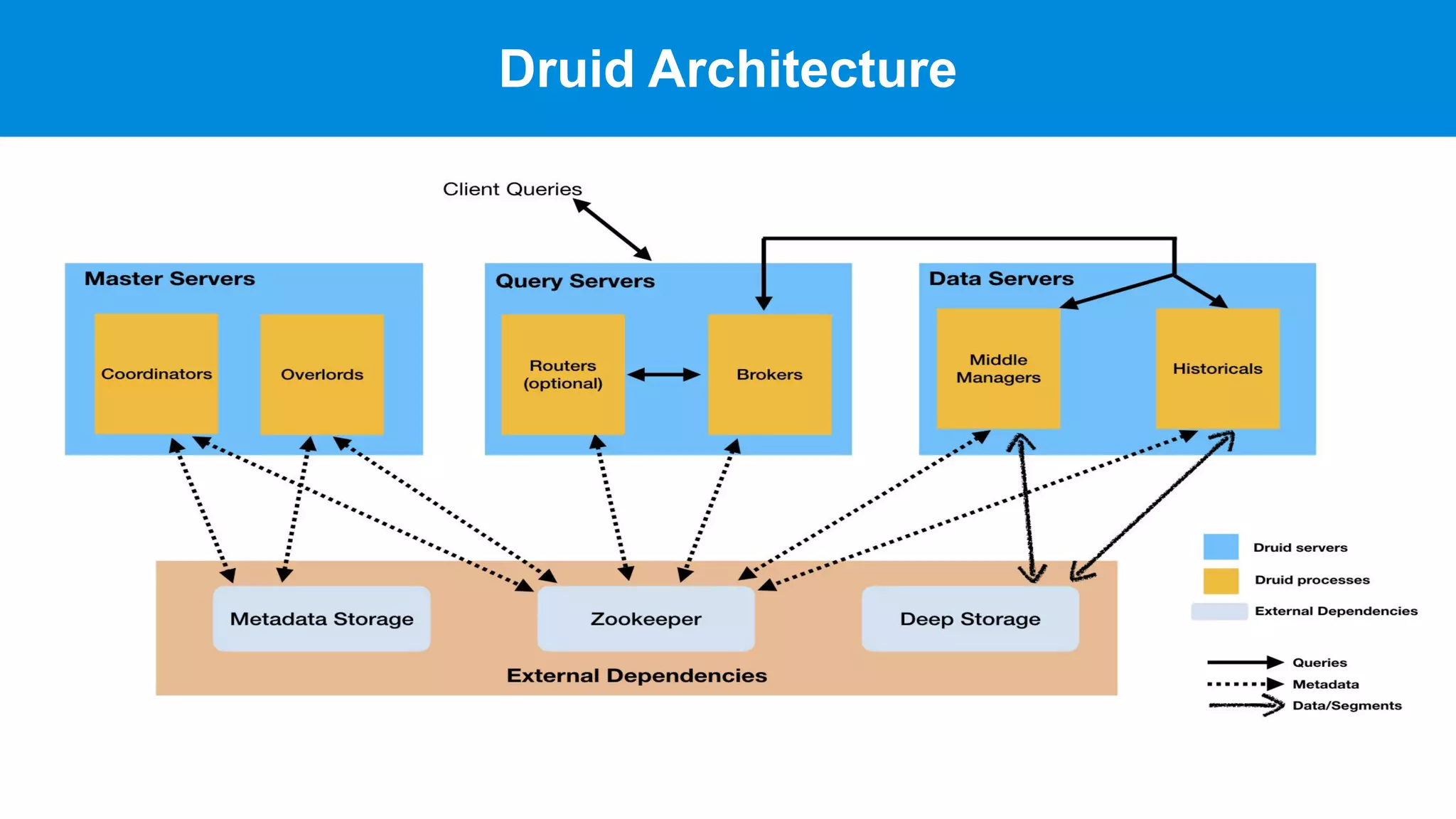

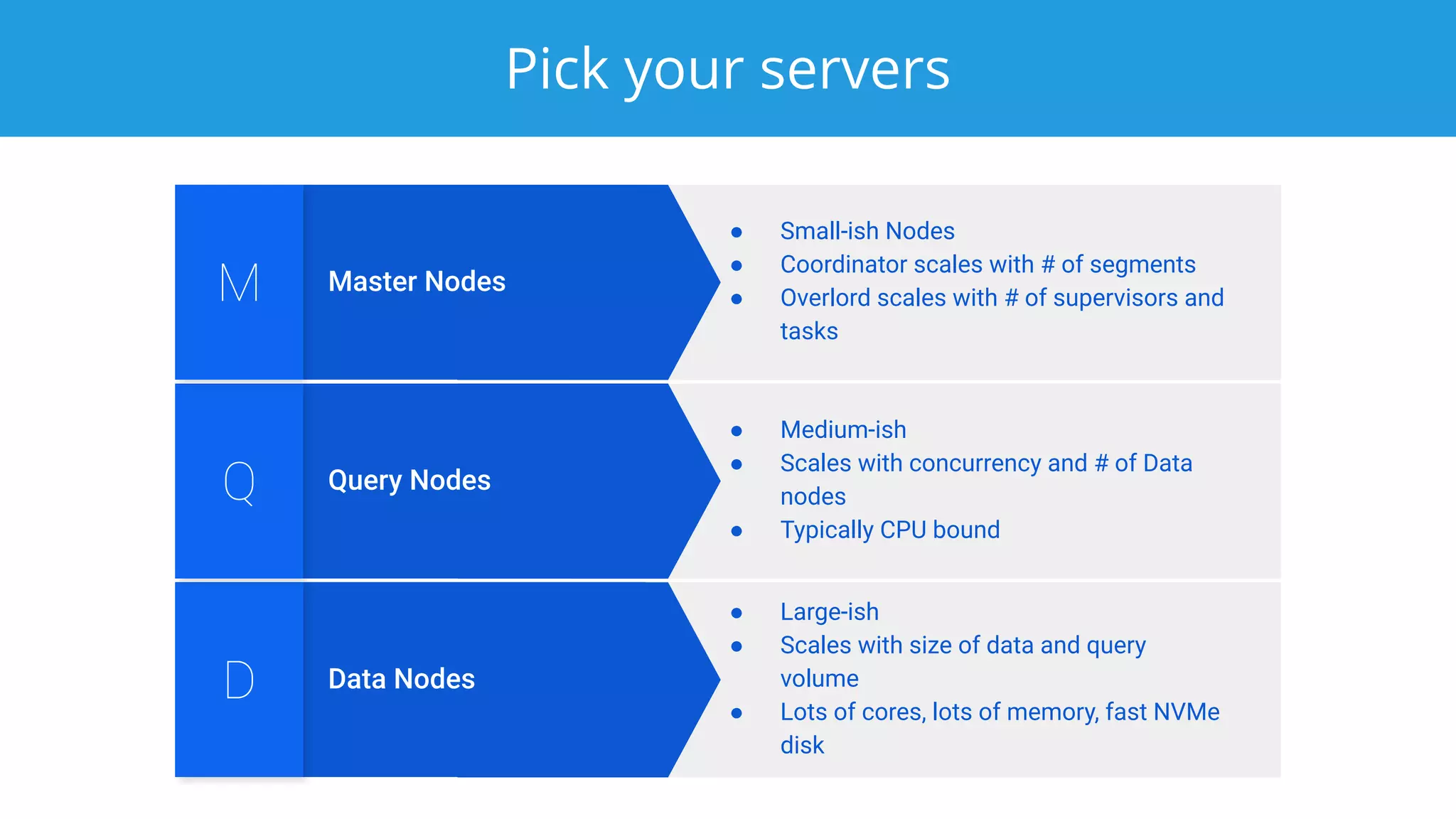

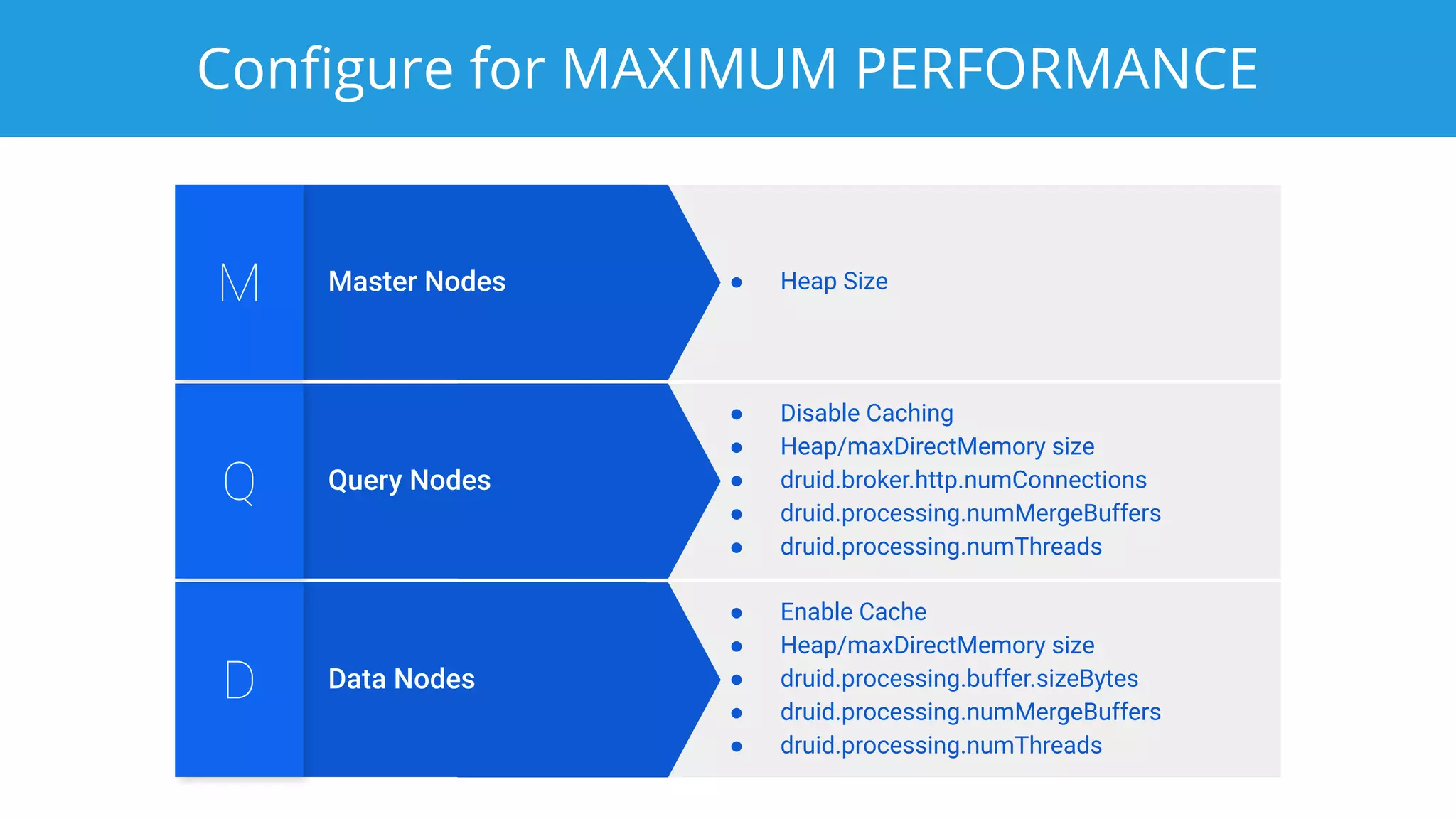

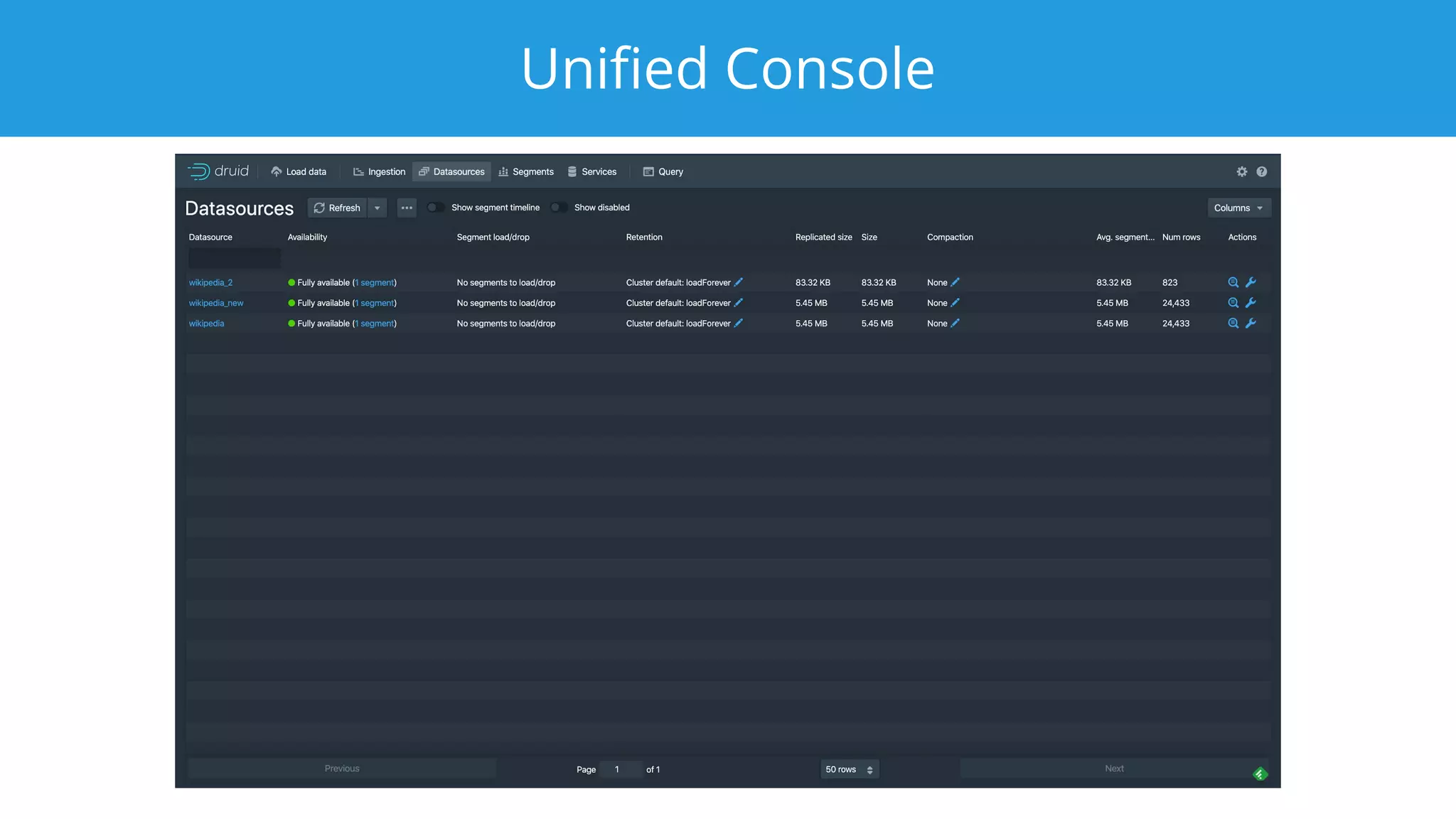

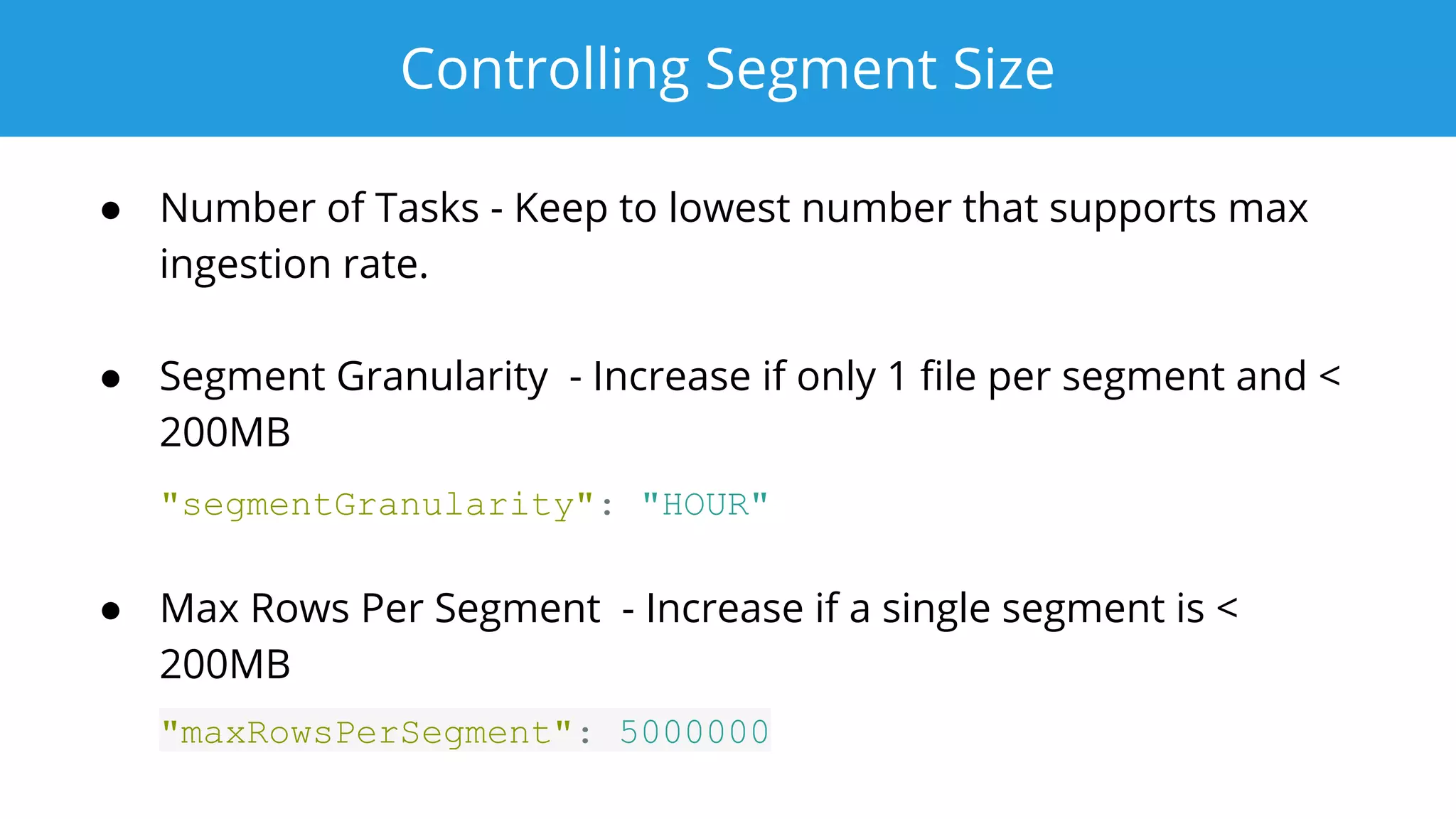

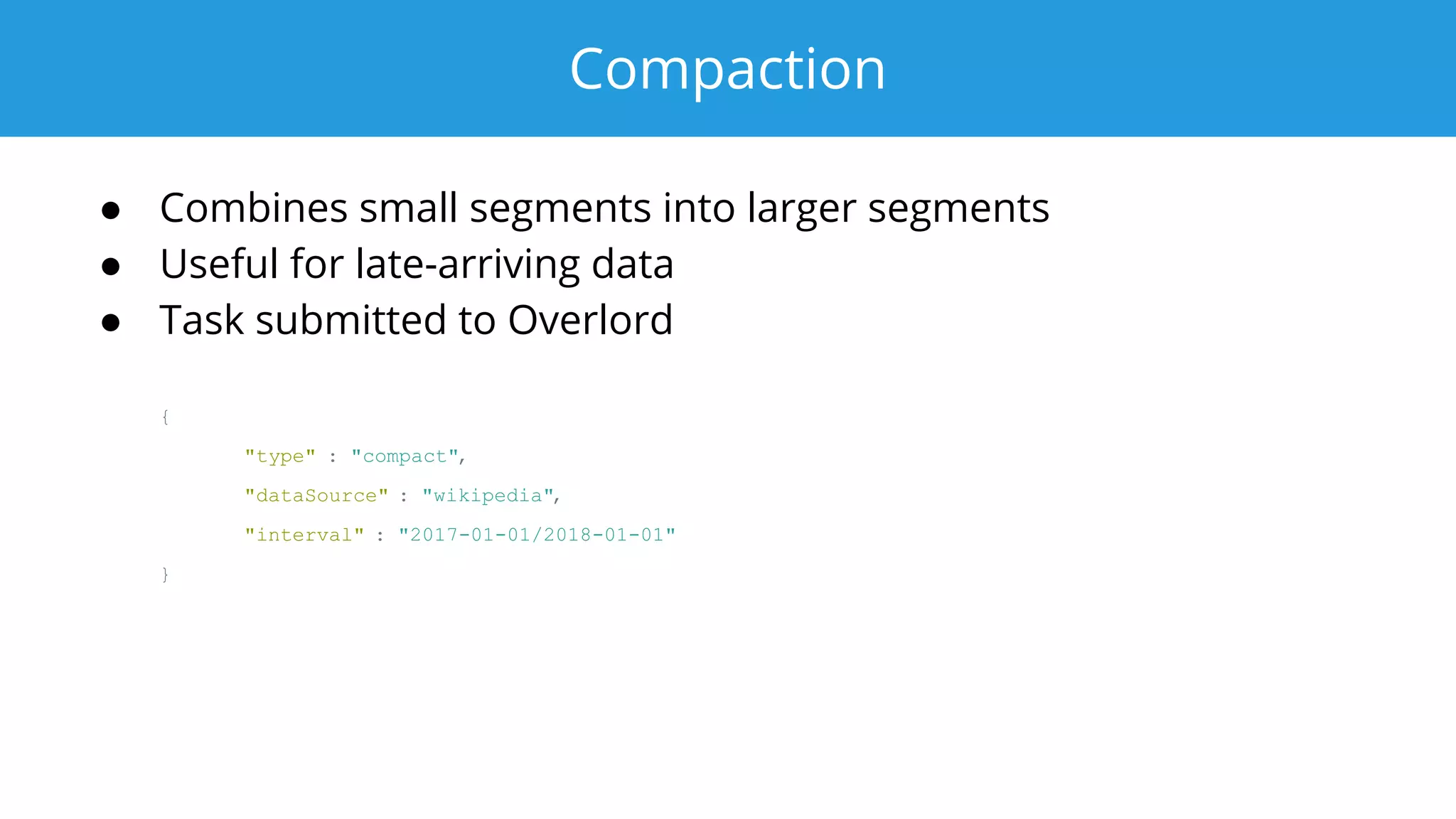

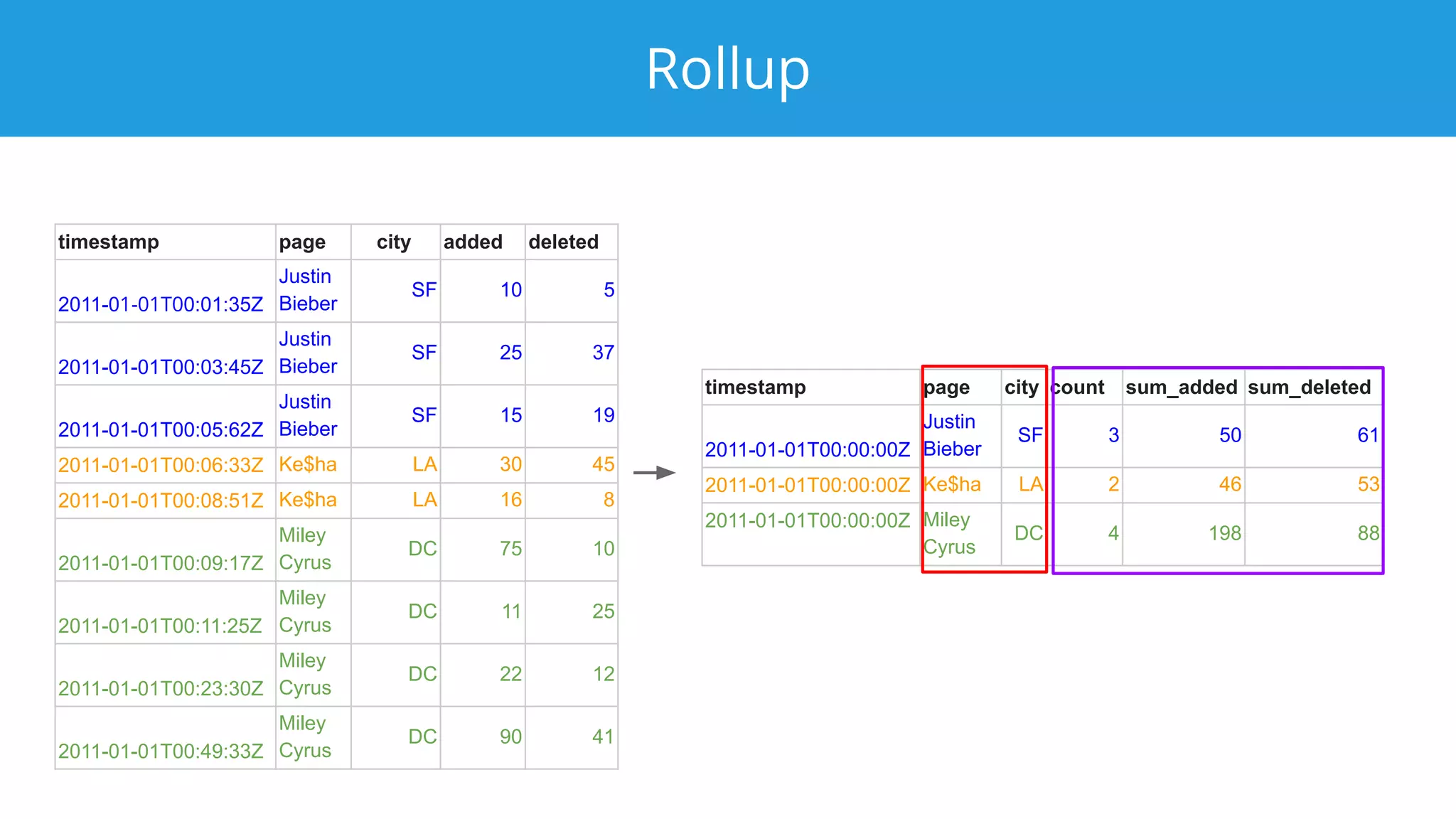

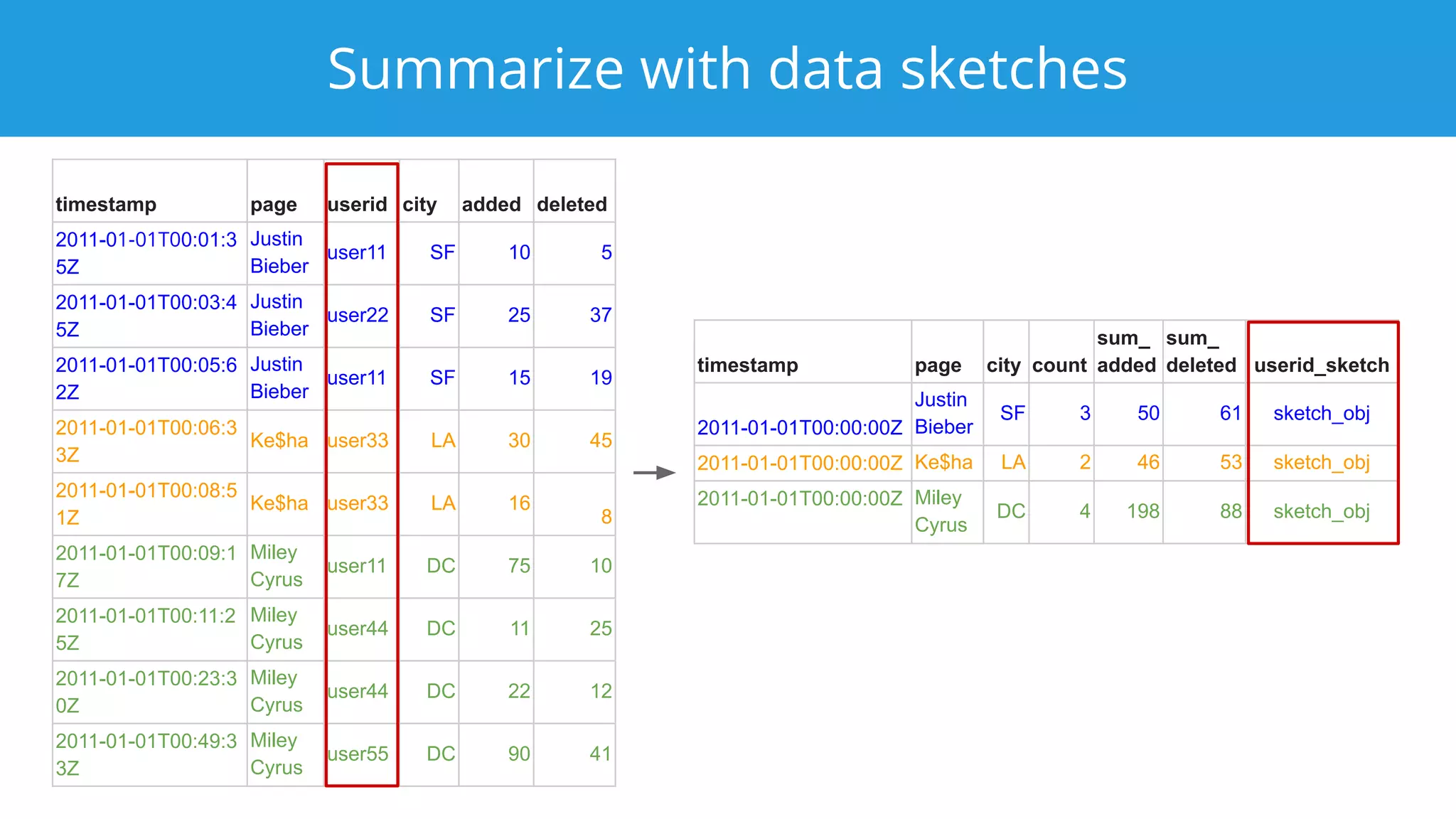

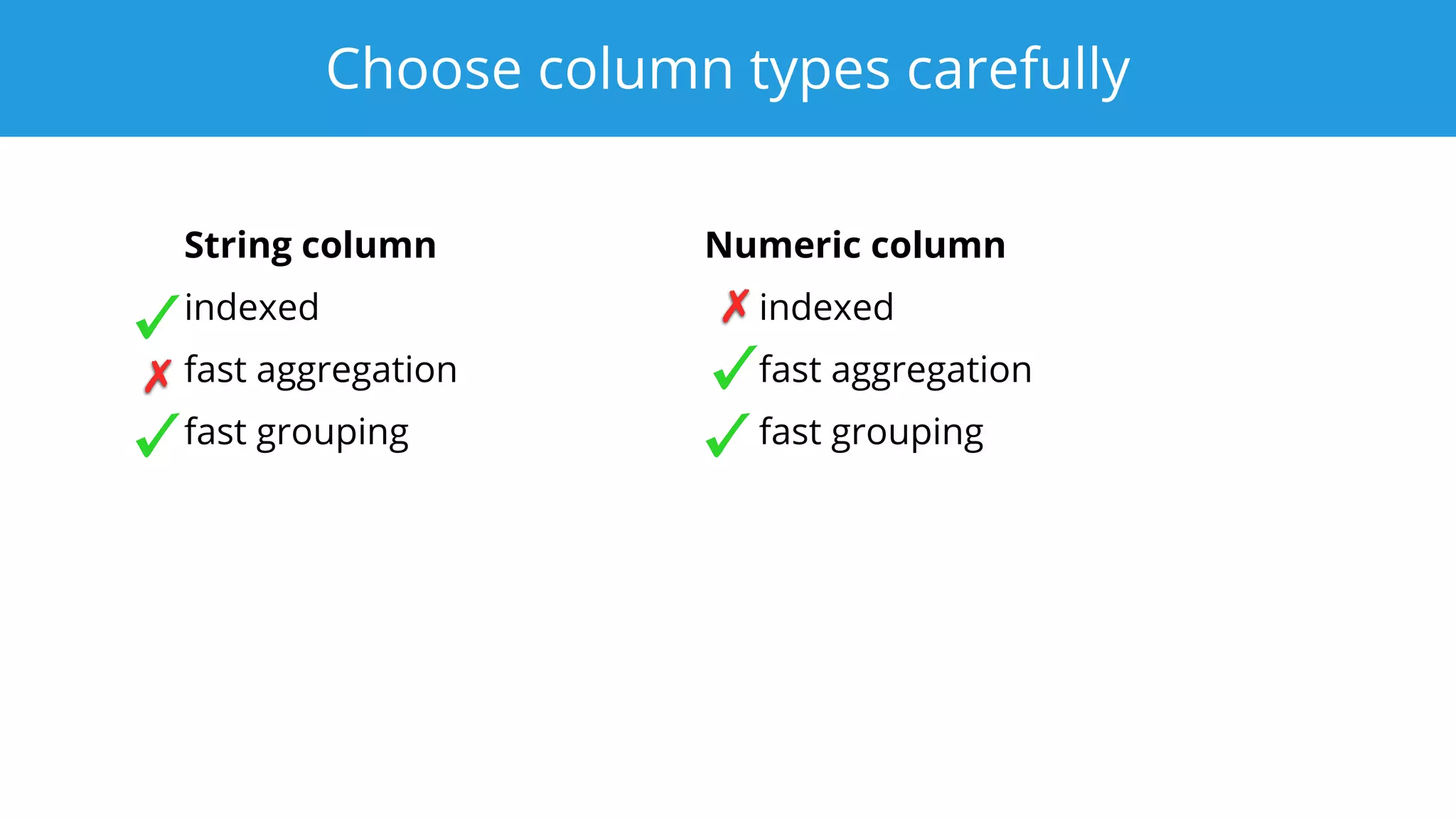

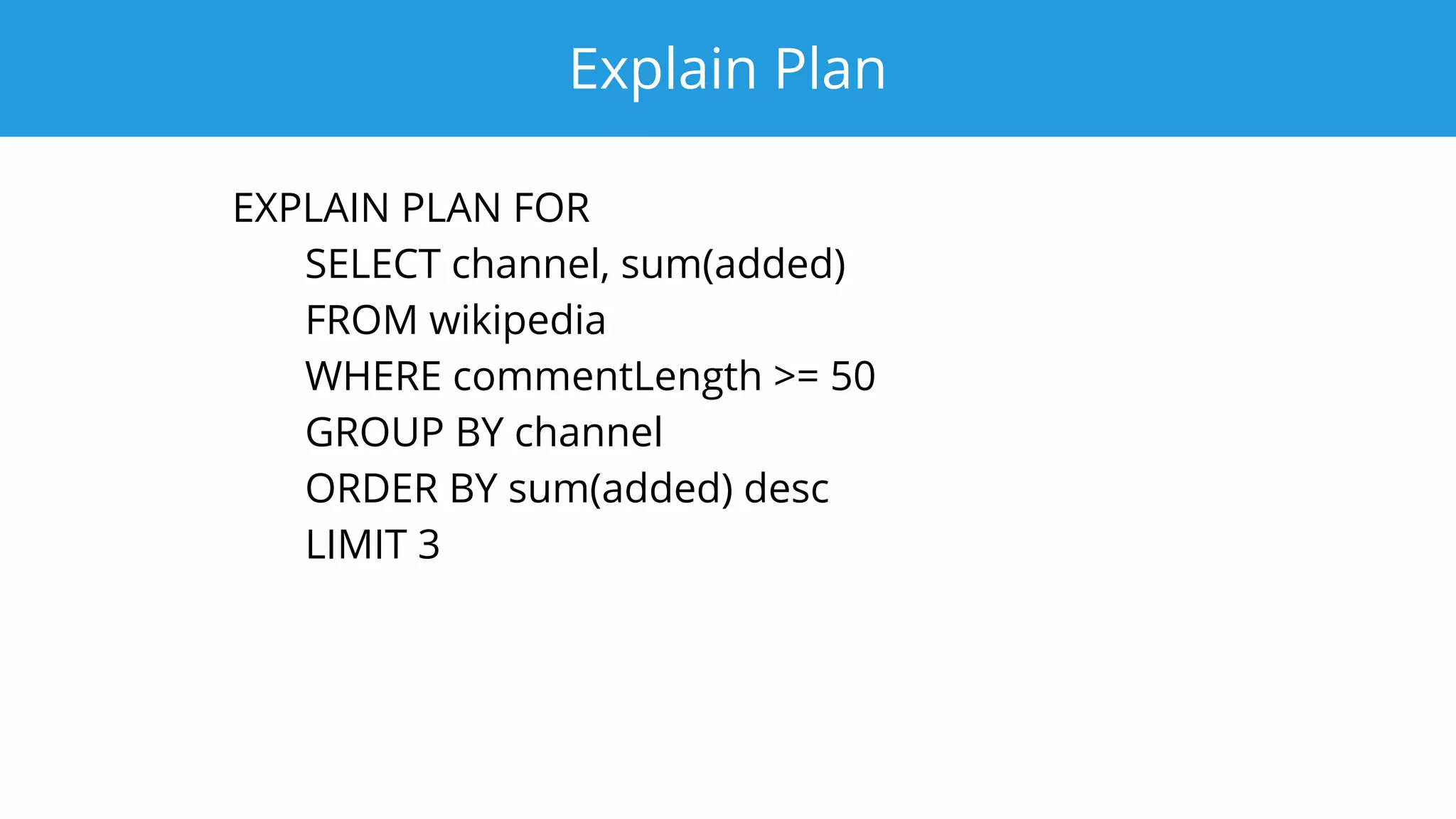

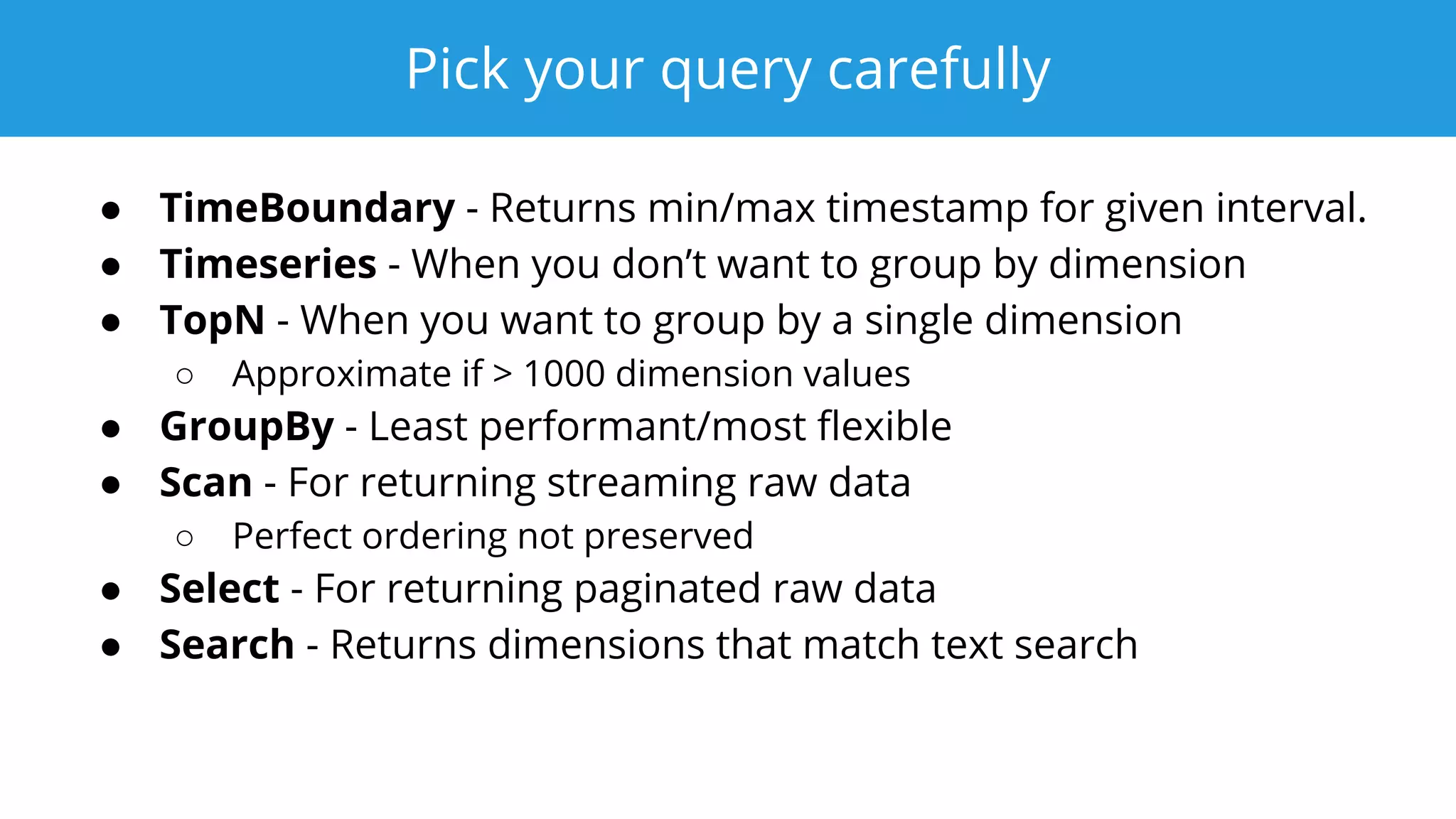

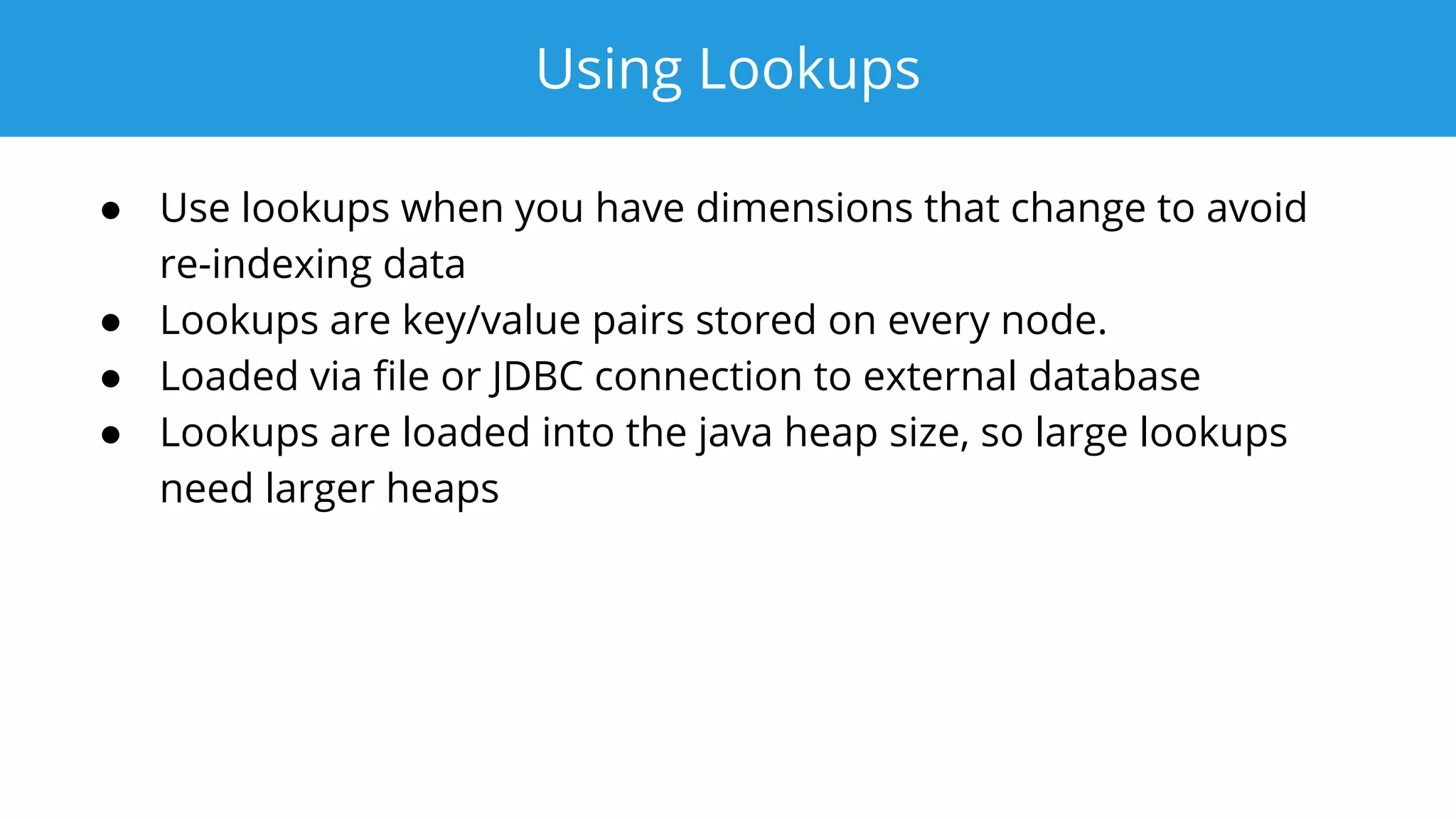

This document summarizes a typical day for a Druid architect. It describes common tasks such as evaluating production clusters, analyzing data and queries, and recommending optimizations. The architect asks stakeholders questions to understand usage and helps evaluate whether Druid is a good fit. When advising on Druid, the architect considers factors such as data sources, query types, and technology stacks. The document also provides tips on configuring clusters for performance and controlling segment size.