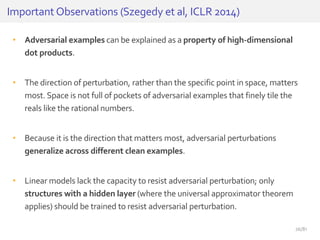

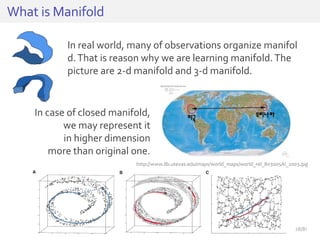

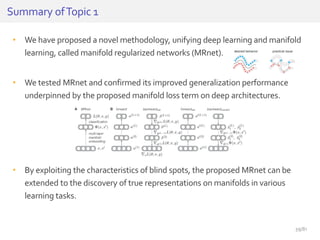

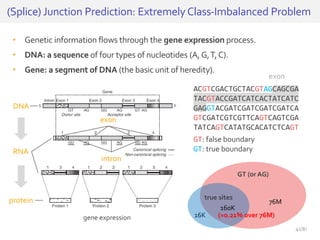

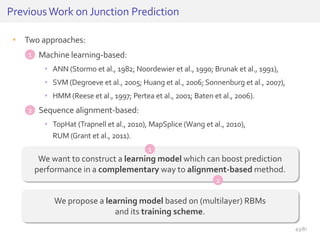

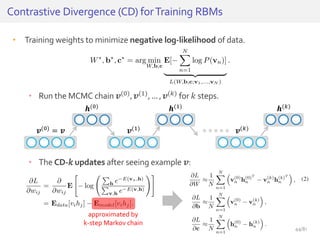

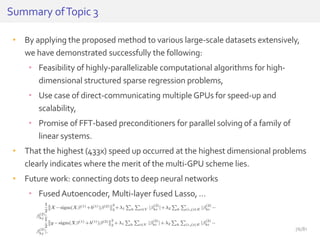

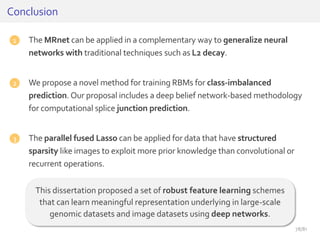

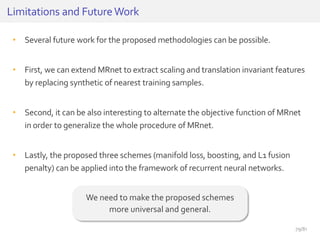

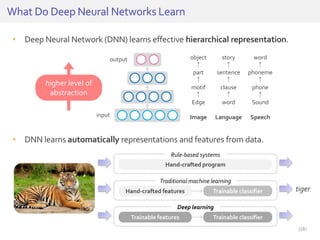

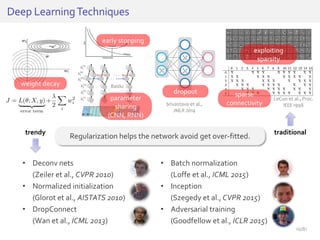

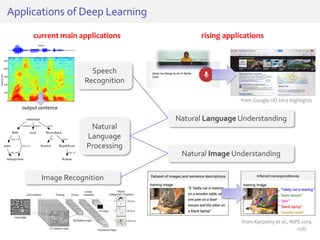

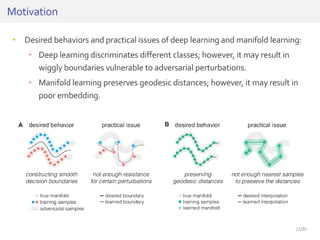

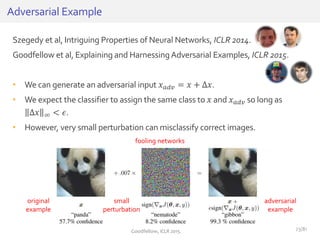

The document discusses deep neural networks (DNNs) and their applications, focusing on adversarial examples, class imbalance, and the handling of spatial dependencies. It outlines methodological contributions by Taehoon Lee, including manifold regularized DNNs and improved data representations. It also highlights the implications of DNNs for bioinformatics, image recognition, and natural language processing, and gives an overview of the author's research achievements and ongoing work.

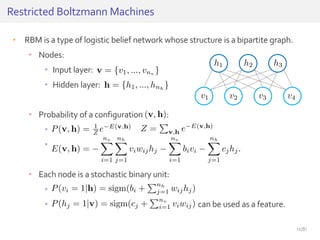

![History of Artificial Neural Networks (slide 9 of 81)](https://image.slidesharecdn.com/seminar20160104taehoonlee-170514123810/85/PhD-Defense-9-320.jpg)

Phases shown on the slide: Early Models, Basic Models, Breakthrough.

- Rosenblatt, 1958: Perceptron [R58]
- Minsky and Papert, 1969: "Perceptrons" (Limits of Perceptrons) [M69]
- Fukushima, 1975: Cognitron (Autoencoder) [F75]
- Fukushima, 1980: NeoCognitron (Convolutional NN) [F80]
- Hinton, 1983: Boltzmann machine [H83]
- Mid 1980s: Back-propagation
- Hinton, 1986: RBM, Restricted Boltzmann machine [H86]
- LeCun, 1998: Revisit of CNN [L98]
- Hinton, 2006: Deep Belief Networks [H06]
- Lee, 2009: Convolutional RBM [L09]
- Le, 2012: Training of 1 billion parameters [L12]

http://www.technologyreview.com/featuredstory/513696/deep-learning/

![How Can We Fool Neural Networks? (slide 24 of 81)](https://image.slidesharecdn.com/seminar20160104taehoonlee-170514123810/85/PhD-Defense-24-320.jpg)

- Consider the dot product between a weight vector $w$ and an adversarial example $x_{adv}$:

  $$w^\top x_{adv} = w^\top x + w^\top \Delta x$$

- The adversarial perturbation causes the activation to grow by $w^\top \Delta x$.
- We can maximize this increase subject to a max-norm constraint on $\Delta x$ by assigning $\Delta x = \mathrm{sign}(w)$.

$$x_{adv} = x - \varepsilon w \quad \text{if } x \text{ is positive}$$

$$x_{adv} = x + \varepsilon w \quad \text{if } x \text{ is negative}$$

$$w = [8.28, 10.03]$$
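
The dot-product argument on this slide can be checked numerically. The following is a minimal sketch, not the author's code: it reuses the weight vector $w = [8.28, 10.03]$ quoted on the slide, while the input `x`, the budget `eps`, and the helper name `max_norm_perturbation` are hypothetical values chosen for illustration. It also assumes the perturbation is scaled by the max-norm budget, i.e. $\Delta x = \varepsilon\,\mathrm{sign}(w)$, which is a different step from the $x \mp \varepsilon w$ updates shown on the slide.

```python
import numpy as np


def max_norm_perturbation(w, eps):
    """Perturbation with max-norm at most eps that maximizes w @ dx.

    Assumption: the slide's sign(w) direction is scaled by the budget eps.
    """
    return eps * np.sign(w)


w = np.array([8.28, 10.03])  # weight vector quoted on the slide
x = np.array([0.5, -0.2])    # hypothetical clean input
eps = 0.1                    # hypothetical max-norm budget

dx = max_norm_perturbation(w, eps)
x_adv = x + dx

clean_activation = w @ x
adv_activation = w @ x_adv

# The activation grows by w @ dx, which equals eps times the L1 norm of w.
increase = adv_activation - clean_activation
print(clean_activation, adv_activation, increase)
```

Because each coordinate of the perturbation is pushed to the edge of the budget in the direction of the matching weight, the gain is $\varepsilon \lVert w \rVert_1$, so it grows with the input dimension even when `eps` is small.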