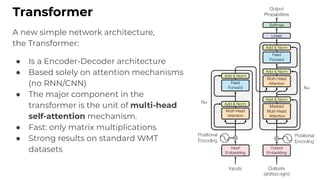

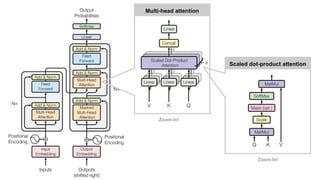

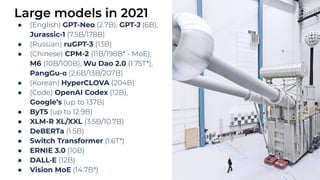

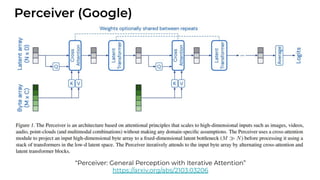

1. The document surveys developments in transformer architectures during 2021. It covers three areas: large transformers, with models exceeding 100 billion parameters; efficient transformers, which aim to reduce the quadratic cost of self-attention in sequence length; and new modalities, including image, audio, and graph transformers.
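To make the quadratic attention problem concrete, here is a minimal counting sketch. It assumes standard full self-attention, where each head scores every query position against every key position. The function name `attention_score_entries` is hypothetical and chosen only for illustration.

```python
def attention_score_entries(seq_len: int) -> int:
    """Entries in one head's attention score matrix.

    Full self-attention scores every (query, key) pair, so the
    matrix has seq_len rows and seq_len columns.
    """
    return seq_len * seq_len


# Doubling the sequence length quadruples the number of scores.
for n in (512, 1024, 2048):
    print(n, attention_score_entries(n))
```

The count is per head and per layer, so the total for a full model scales with both as well.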

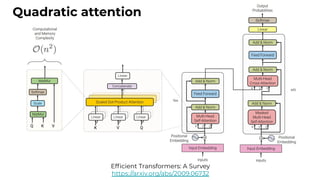

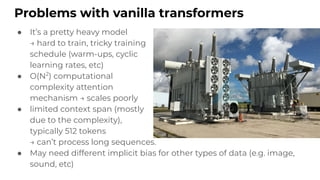

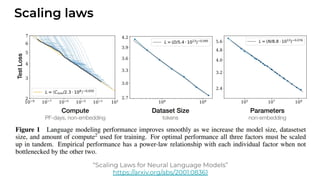

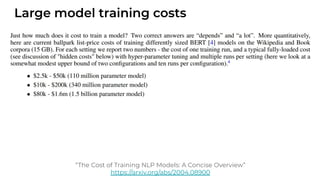

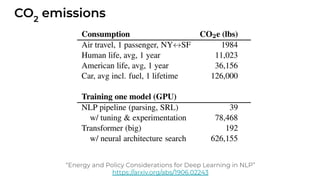

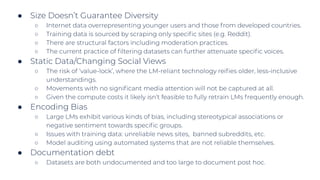

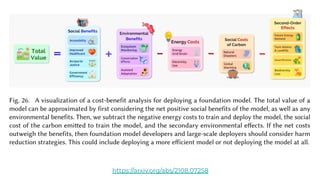

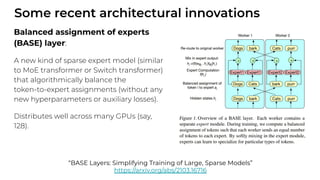

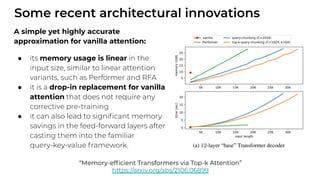

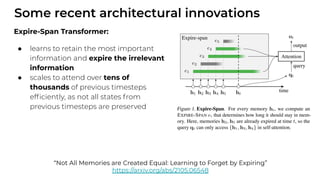

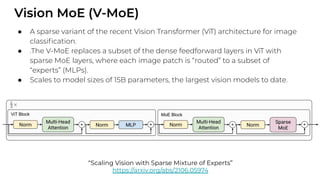

2. Issues with large models include high training costs, carbon emissions, potential biases, and static training data that does not reflect changing social views. Efficient transformers improve scalability with techniques such as mixture of experts, linear approximations of attention, and selective memory.
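One way linear attention can work is sketched below. This is an assumption about the general idea, not the specific method from the slides. It replaces the softmax similarity with a kernel similarity `phi(q) · phi(k)`, using `elu(x) + 1` as the feature map. The key-value products can then be summed once and reused for every query. The function names are hypothetical, and the quadratic version is included only to check that both orderings give the same result.

```python
import math


def phi(x):
    """Positive feature map elu(v) + 1, applied elementwise."""
    return [v + 1.0 if v > 0 else math.exp(v) for v in x]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def quadratic_kernel_attention(Q, K, V):
    """Reference form: score every (query, key) pair, O(n^2) in length."""
    d_v = len(V[0])
    out = []
    for q in Q:
        fq = phi(q)
        weights = [dot(fq, phi(k)) for k in K]
        total = sum(weights)
        out.append([sum(w * v[c] for w, v in zip(weights, V)) / total
                    for c in range(d_v)])
    return out


def linear_kernel_attention(Q, K, V):
    """Same result, but keys and values are summarized once, O(n) in length."""
    d_k, d_v = len(K[0]), len(V[0])
    S = [[0.0] * d_v for _ in range(d_k)]  # sum over j of phi(k_j) v_j^T
    z = [0.0] * d_k                        # sum over j of phi(k_j)
    for k, v in zip(K, V):
        fk = phi(k)
        for r in range(d_k):
            z[r] += fk[r]
            for c in range(d_v):
                S[r][c] += fk[r] * v[c]
    out = []
    for q in Q:
        fq = phi(q)
        denom = dot(fq, z)
        out.append([sum(fq[r] * S[r][c] for r in range(d_k)) / denom
                    for c in range(d_v)])
    return out
```

Note that this kernel form is not numerically equal to softmax attention. It trades exactness for a cost that grows linearly with sequence length.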

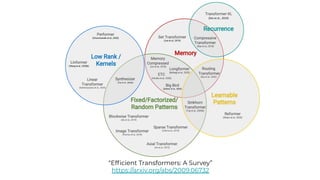

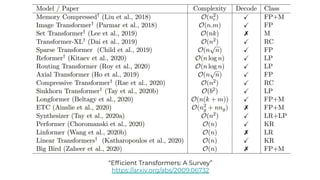

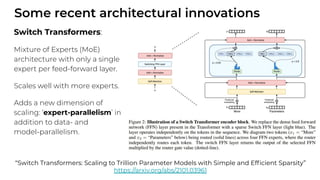

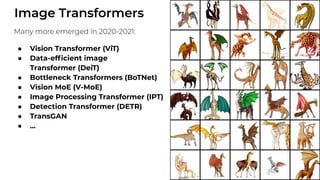

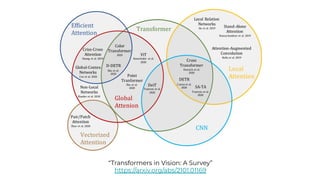

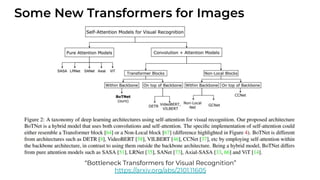

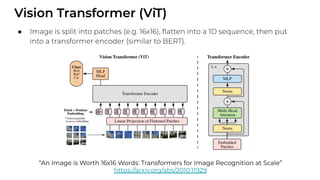

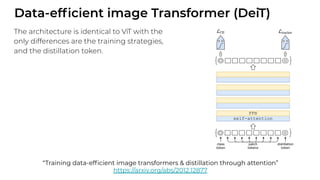

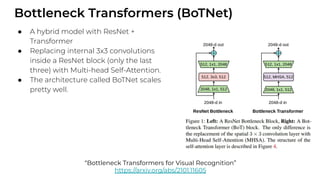

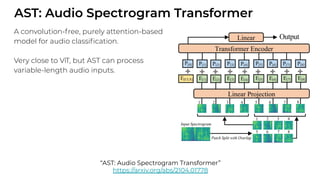

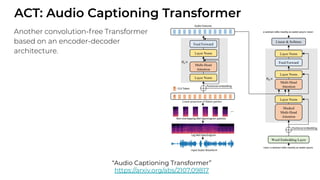

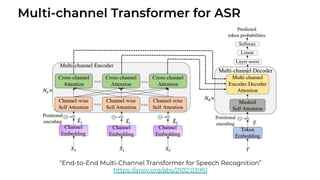

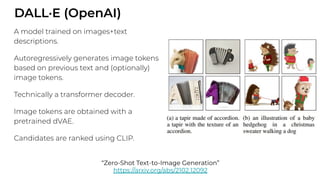

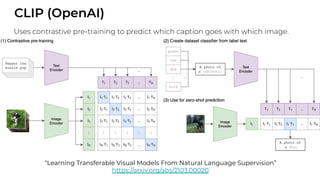

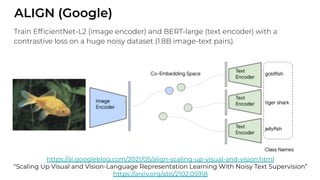

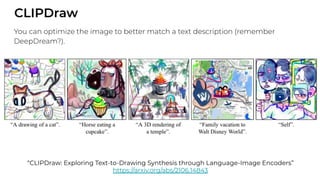

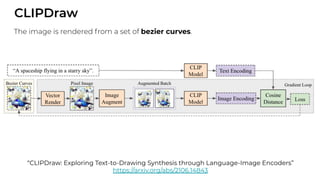

3. New transformer modalities in 2021 include vision transformers, which are applied to images, and audio transformers, which process sound. Multimodal transformers aim to combine multiple modalities.
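As a rough illustration of how an image can become a token sequence for a vision transformer, the sketch below splits a single-channel image into non-overlapping square patches and flattens each one. The helper `image_to_patches` is hypothetical. A real vision transformer would typically also apply a learned linear projection and add position embeddings, both of which are omitted here.

```python
def image_to_patches(image, patch_size):
    """Split a 2D image (list of rows) into flattened square patches.

    Patches are returned in row-major order, each as a flat list of
    patch_size * patch_size pixel values.
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patches.append([image[top + r][left + c]
                            for r in range(patch_size)
                            for c in range(patch_size)])
    return patches


# A 4x4 image with 2x2 patches yields a sequence of 4 tokens of length 4.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
patches = image_to_patches(image, 2)
print(len(patches), len(patches[0]))
```

Each flattened patch plays the role that a word token plays in a text transformer.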