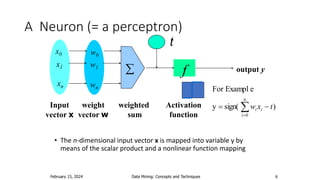

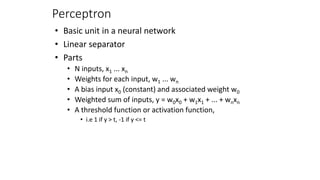

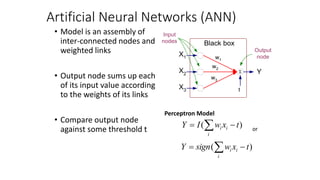

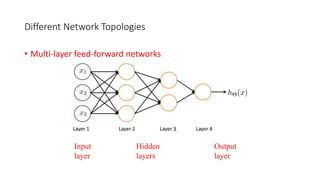

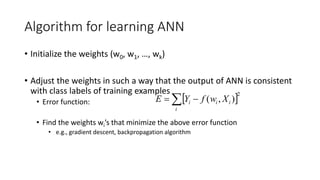

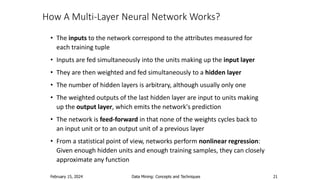

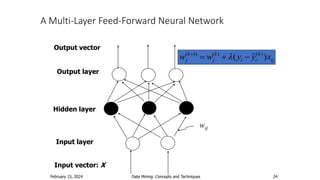

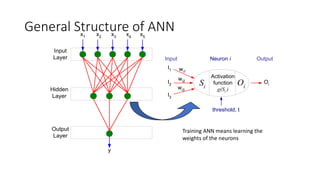

- Artificial neural networks are computational models inspired by the human brain. They are made up of simple processing units (neurons) connected in a massively parallel, distributed system. Knowledge is acquired through a learning process that adjusts the strengths of the connections between neurons (the synaptic weights).
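
To make "learning by adjusting connection strengths" concrete, here is a minimal sketch of a single artificial neuron trained on the logical AND function. The slides do not name a specific learning algorithm, so the classic perceptron update rule is used here as an assumption; the function names (`step`, `train_perceptron`, `predict`), the learning rate, and the epoch count are all illustrative choices, not taken from the source.

```python
def step(value):
    """Threshold activation: the neuron fires (1) when its net input is >= 0."""
    return 1 if value >= 0 else 0


def predict(weights, bias, inputs):
    """Weighted sum of the inputs plus a bias, passed through the threshold."""
    net_input = sum(w * x for w, x in zip(weights, inputs)) + bias
    return step(net_input)


def train_perceptron(samples, epochs=20, learning_rate=1):
    """Perceptron rule (an assumed choice): nudge each weight by error * input."""
    weights = [0, 0]  # connection strengths, one per input
    bias = 0
    for _ in range(epochs):
        for inputs, target in samples:
            error = target - predict(weights, bias, inputs)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Truth table for logical AND: ((input_1, input_2), expected_output)
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(AND_SAMPLES)
predictions = [predict(weights, bias, inputs) for inputs, _ in AND_SAMPLES]
print(predictions)  # → [0, 0, 0, 1]
```

Nothing about AND is programmed into the neuron. The correct behaviour emerges only from repeated small corrections to the weights and bias, which is the sense in which the network "acquires knowledge". A single neuron like this can only separate classes with a straight line; harder tasks require several connected layers.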

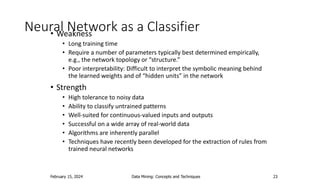

- Neural networks can be used for pattern recognition, function approximation, and associative memory in domains such as speech recognition, image classification, and financial prediction. They accept flexible inputs, are resistant to errors, and are fast to evaluate, though their results are difficult to interpret.