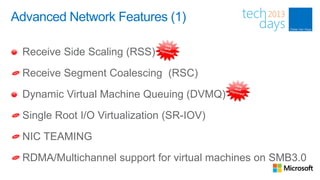

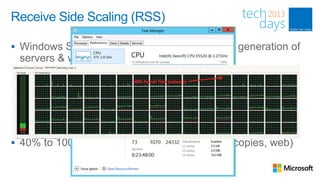

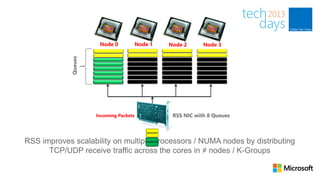

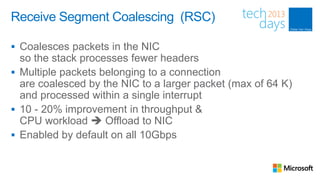

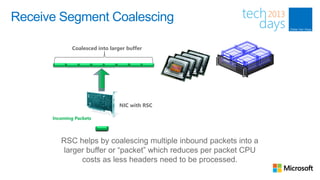

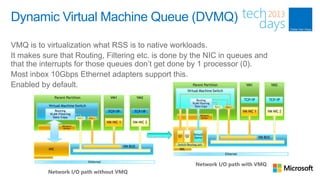

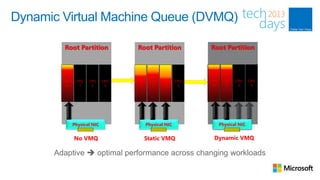

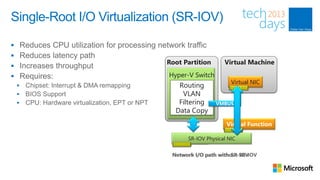

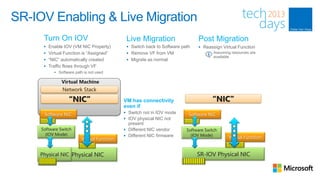

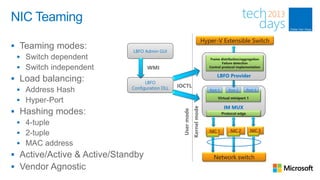

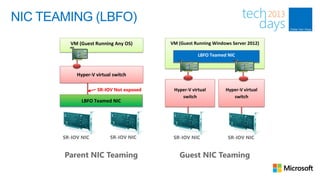

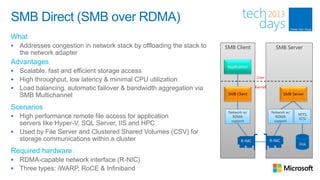

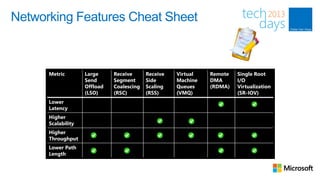

Windows Server 2012 includes several new and improved networking features for Hyper-V. Several of them improve performance and scalability by offloading more processing to the network interface card (NIC). The features include improved Receive Side Scaling (RSS), Receive Segment Coalescing (RSC), Dynamic Virtual Machine Queue (VMQ), Single Root I/O Virtualization (SR-IOV), and NIC Teaming. Together they address the availability, reliability, security, and management complexity of virtualized workloads.