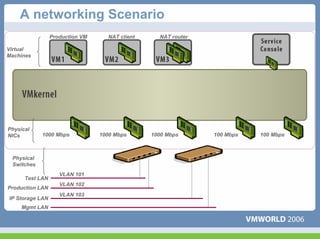

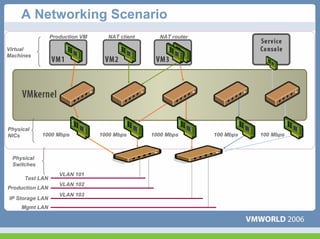

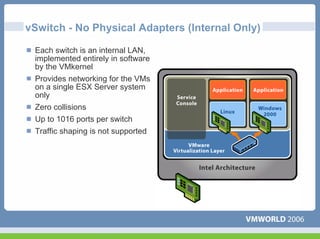

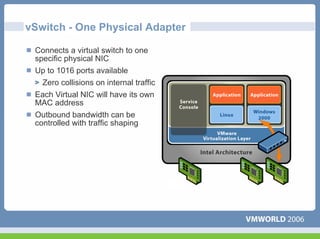

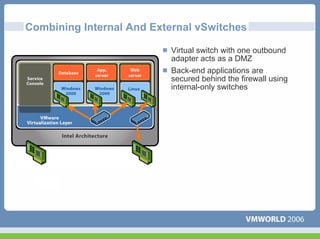

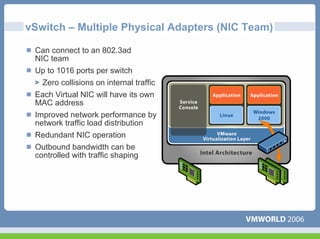

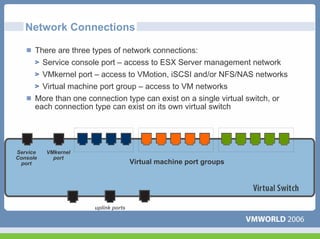

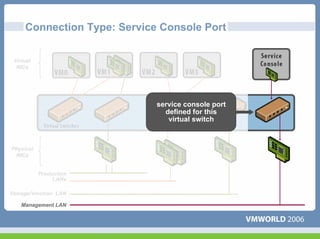

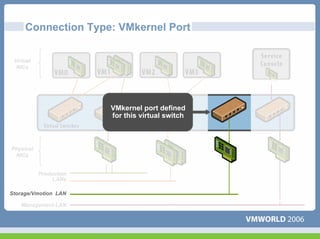

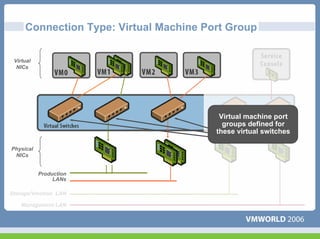

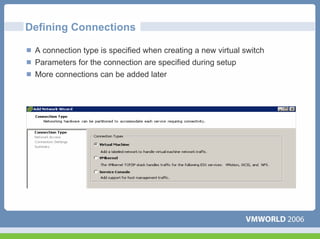

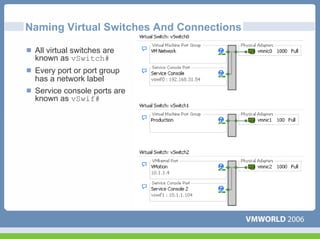

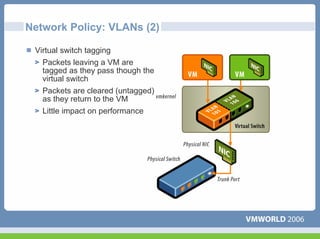

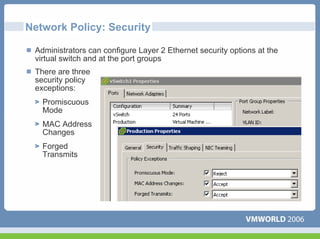

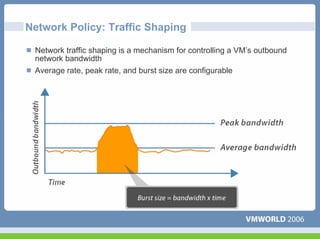

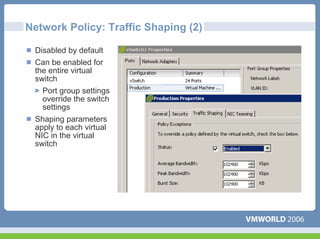

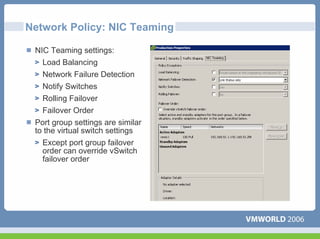

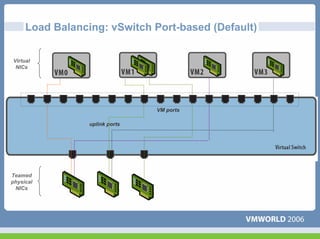

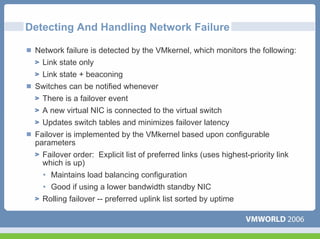

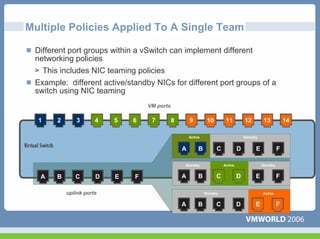

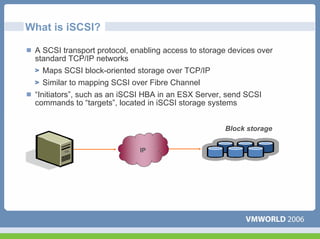

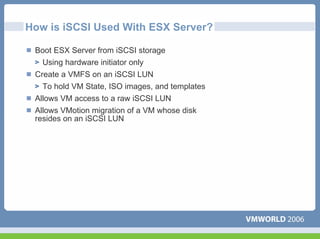

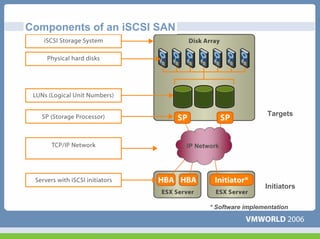

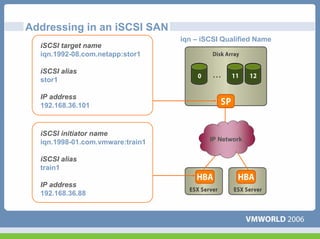

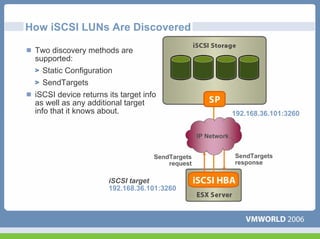

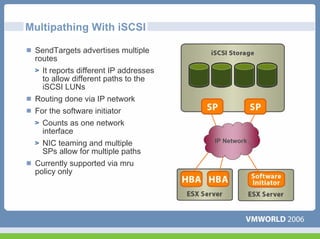

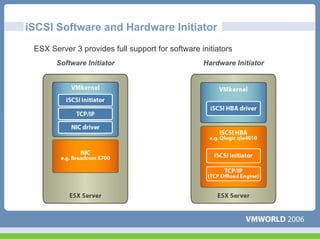

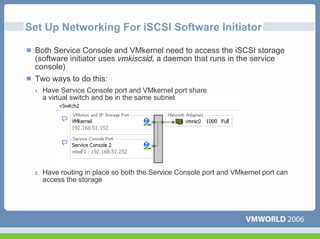

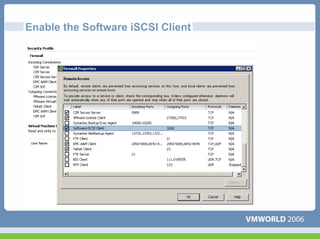

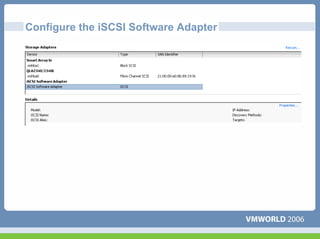

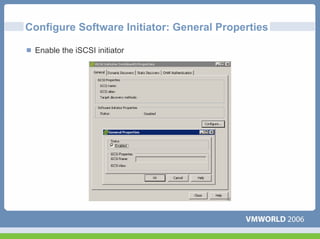

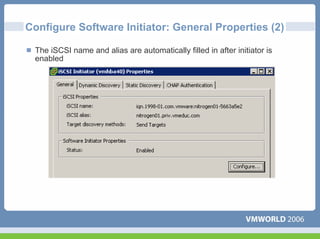

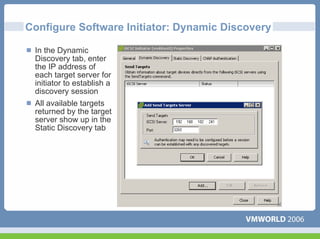

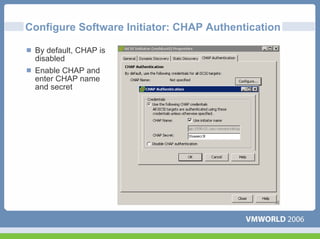

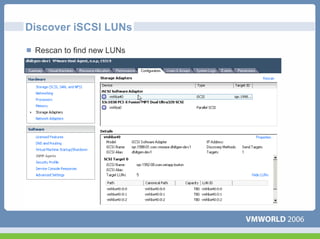

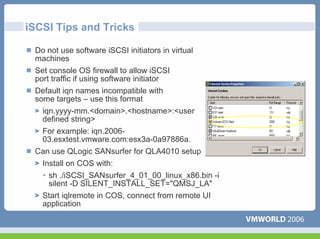

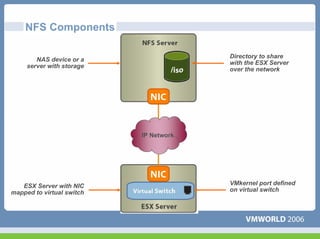

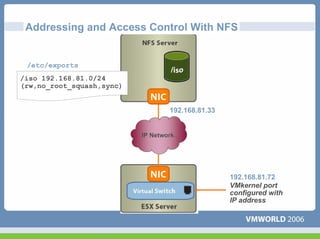

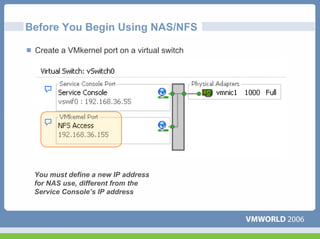

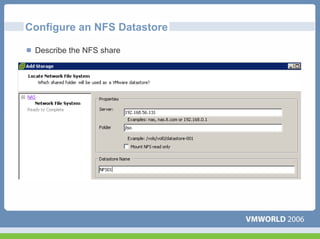

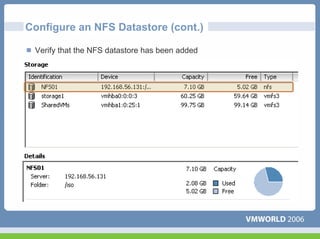

This document covers networking for virtual machines in VMware environments: virtual switch connections, port group policies, and IP storage over iSCSI and NAS. It describes how to configure virtual switches; define the connection types (service console ports, VMkernel ports, and virtual machine port groups); apply network policies such as VLANs and security; and configure IP storage access through iSCSI software initiators and NFS.