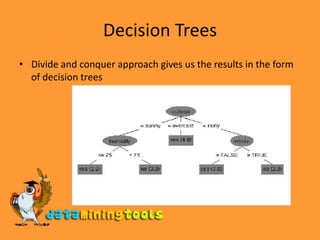

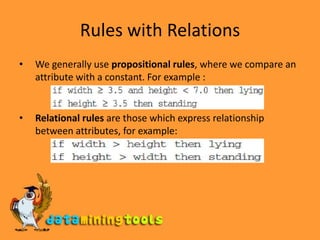

This document summarizes different methods for knowledge representation including decision tables, decision trees, classification rules, association rules, rules with exceptions, rules with relations, trees for numerical prediction, instance-based learning, and clustering. Key points covered include how each method represents knowledge, advantages and disadvantages of different methods, and different types of outputs for clustering.