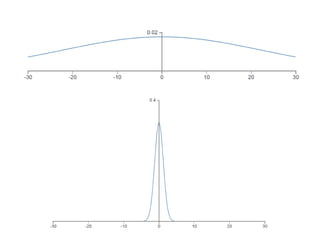

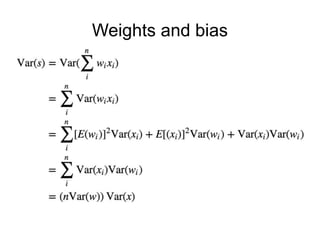

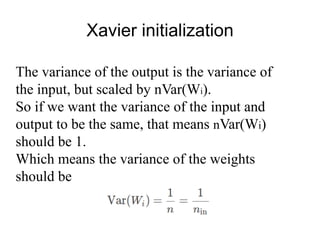

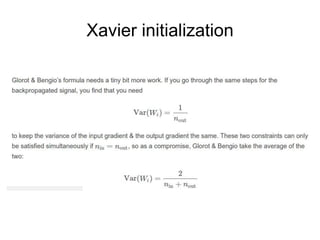

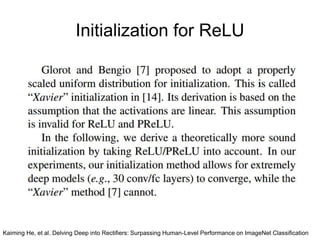

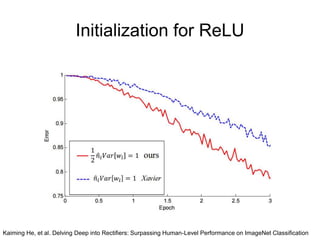

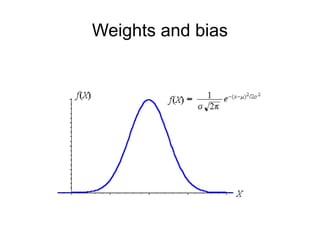

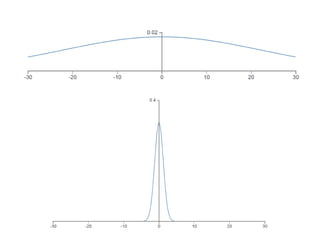

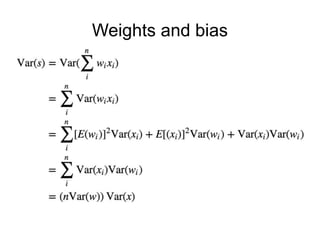

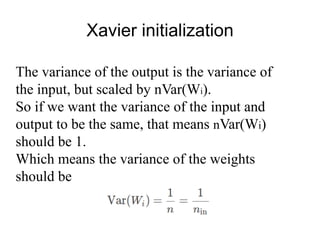

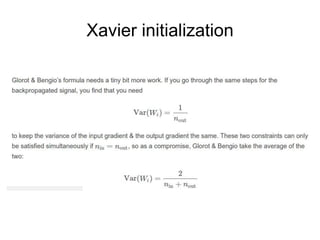

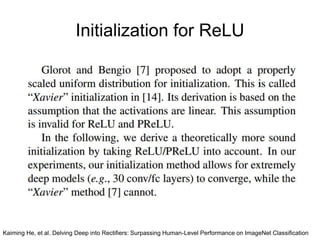

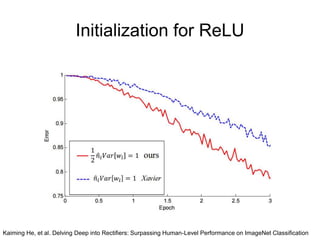

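The document discusses different methods for initializing the weights of a neural network. It first notes that all-zero initialization makes every neuron in a layer compute the same output and receive the same gradient update, so the symmetry between neurons is never broken. Xavier (Glorot) initialization scales the variance of the weights so that, to a first approximation, the variance of a layer's outputs matches the variance of its inputs. Initialization for ReLU networks is discussed in the paper by Kaiming He et al., "Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification", which sets the weight variance to 2/n_in (n_in being the number of inputs to a layer) to compensate for ReLU zeroing roughly half of its inputs.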
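The three ideas above can be compared numerically. The sketch below is plain Python with no framework and is not taken from the slides. It assumes fully connected layers without biases and normally distributed weights, using the commonly cited variances Var(W) = 2/(fan_in + fan_out) for Xavier and Var(W) = 2/fan_in for He. The helper names (`zeros_init`, `xavier_normal`, `he_normal`, `signal_ratio`) are hypothetical and chosen only for illustration.

```python
import math
import random


def zeros_init(fan_in, fan_out, rng=None):
    # All-zero weights: every row (neuron) is identical, so symmetry is never broken.
    return [[0.0] * fan_in for _ in range(fan_out)]


def xavier_normal(fan_in, fan_out, rng):
    # Glorot/Xavier normal: Var(W) = 2 / (fan_in + fan_out).
    std = math.sqrt(2.0 / (fan_in + fan_out))
    return [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]


def he_normal(fan_in, fan_out, rng):
    # He/Kaiming normal for ReLU layers: Var(W) = 2 / fan_in.
    std = math.sqrt(2.0 / fan_in)
    return [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]


def relu_layer(weights, x):
    # One fully connected layer (no bias) followed by ReLU.
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in weights]


def mean_square(v):
    return sum(a * a for a in v) / len(v)


def signal_ratio(init, x, depth, seed=0):
    # Push x through `depth` square ReLU layers built with `init`;
    # return the mean square of the output divided by that of the input.
    rng = random.Random(seed)
    width = len(x)
    h = x
    for _ in range(depth):
        h = relu_layer(init(width, width, rng), h)
    return mean_square(h) / mean_square(x)


if __name__ == "__main__":
    data_rng = random.Random(42)
    x = [data_rng.gauss(0.0, 1.0) for _ in range(256)]

    # Zero init: every neuron in the layer produces the same output.
    z_out = relu_layer(zeros_init(256, 256), x)
    print("distinct outputs with zero init:", len(set(z_out)))  # → 1

    depth = 8
    print("Xavier ratio:", signal_ratio(xavier_normal, x, depth))
    print("He ratio:    ", signal_ratio(he_normal, x, depth))
```

With 8 ReLU layers of width 256, the He ratio should stay of order 1, while the Xavier ratio shrinks by roughly a factor of 2 per layer because ReLU zeroes about half of each layer's pre-activations. Frameworks provide these schemes directly, for example PyTorch's `torch.nn.init.xavier_normal_` and `torch.nn.init.kaiming_normal_`.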