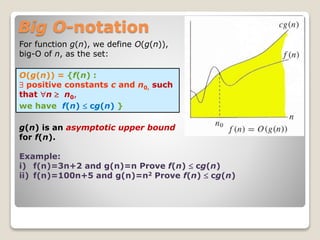

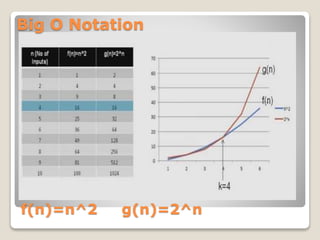

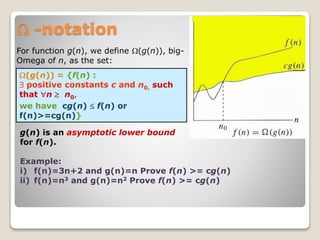

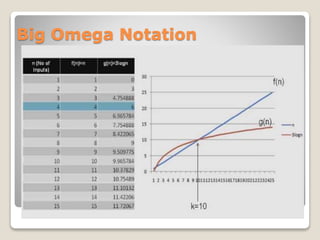

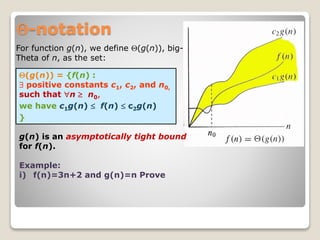

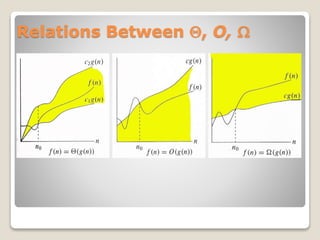

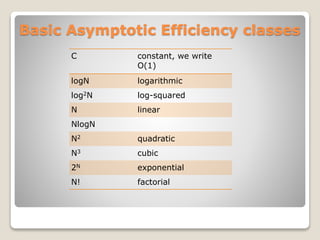

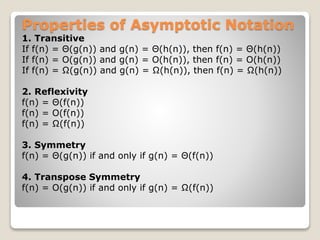

This document introduces the asymptotic notations used to describe the time complexity of algorithms: big O, big Omega, and big Theta, each of which describes how a function behaves as its input grows without bound. Big O gives an asymptotic upper bound, big Omega an asymptotic lower bound, and big Theta a tight bound (an upper and a lower bound at once). The document gives examples of asymptotic efficiency classes, including constant, logarithmic, linear, quadratic, and exponential time. It also covers properties of the notations: transitivity, reflexivity, symmetry (which holds for big Theta only), and transpose symmetry (f(n) is O(g(n)) exactly when g(n) is Omega(f(n))).
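To make the bounds concrete, recall the standard definition: f(n) is O(g(n)) if there are constants c > 0 and n0 such that f(n) <= c * g(n) for all n >= n0, and f(n) is Omega(g(n)) if f(n) >= c * g(n) under the same conditions. The sketch below spot-checks such constants numerically. The functions `f` and `g`, the helper names, and the chosen constants are illustrative assumptions, not taken from the slides, and a finite check like this is a sanity test rather than a proof.

```python
def f(n):
    # Hypothetical running-time function: 3n^2 + 10n.
    return 3 * n * n + 10 * n


def g(n):
    # Candidate growth class: n^2.
    return n * n


def holds_upper(f, g, c, n0, n_max):
    """Return True if f(n) <= c * g(n) for every integer n in [n0, n_max]."""
    return all(f(n) <= c * g(n) for n in range(n0, n_max + 1))


def holds_lower(f, g, c, n0, n_max):
    """Return True if f(n) >= c * g(n) for every integer n in [n0, n_max]."""
    return all(f(n) >= c * g(n) for n in range(n0, n_max + 1))


# Upper bound: 3n^2 + 10n <= 4n^2 is equivalent to 10n <= n^2, i.e. n >= 10.
upper_ok = holds_upper(f, g, c=4, n0=10, n_max=10_000)

# Lower bound: 3n^2 + 10n >= 3n^2 holds for every n >= 1.
lower_ok = holds_lower(f, g, c=3, n0=1, n_max=10_000)

# The same c fails if the threshold n0 is set too low (for example at n = 9).
too_early = holds_upper(f, g, c=4, n0=1, n_max=10_000)

print(upper_ok, lower_ok, too_early)
```

Since both an upper and a lower bound hold with g(n) = n^2, this f(n) is in Theta(n^2). The failing third check shows why the definition only requires the inequality from some threshold n0 onward.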