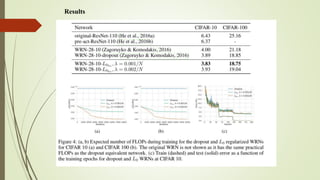

The document discusses learning sparse neural networks with L0 regularization, emphasizing its use for model compression and for sparsification as a guard against overfitting. Because the L0 norm counts the non-zero weights, it is non-differentiable and cannot be minimized directly with gradient-based optimization. To address this, the document introduces a re-parameterization that uses a continuous random variable to reformulate the loss function. The result is an objective in which the network weights can be optimized for sparsity while the loss stays differentiable through the re-parameterization trick.
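To make the re-parameterization idea concrete, here is a minimal sketch in plain Python. The summary does not say which continuous random variable is used, so the sketch assumes a "hard concrete" gate: a stretched and clipped sigmoid of logistic noise. The names `sample_gate`, `prob_gate_nonzero`, `expected_l0`, and the constants `GAMMA`, `ZETA`, `BETA` are illustrative choices and are not taken from the document. The key point is that the randomness comes from a parameter-free uniform sample, so the gate is a deterministic function of the learnable parameter `log_alpha`, and the expected number of non-zero gates has a smooth closed form.

```python
import math
import random

# Assumed hyperparameters (illustrative, not from the document):
# the gate is stretched to the interval (GAMMA, ZETA) and then clipped to [0, 1].
GAMMA, ZETA, BETA = -0.1, 1.1, 2.0 / 3.0


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def sample_gate(log_alpha, rng):
    """Draw one gate z in [0, 1] as a deterministic function of uniform noise.

    The noise u does not depend on log_alpha, which is what lets gradients
    flow to log_alpha in an autodiff framework.
    """
    u = rng.uniform(1e-6, 1.0 - 1e-6)  # parameter-free noise
    s = sigmoid((math.log(u) - math.log(1.0 - u) + log_alpha) / BETA)
    s_bar = s * (ZETA - GAMMA) + GAMMA  # stretch to (GAMMA, ZETA)
    return min(1.0, max(0.0, s_bar))    # clip, so exact 0 and 1 have nonzero probability


def prob_gate_nonzero(log_alpha):
    """Closed-form probability that the gate is non-zero under this relaxation."""
    return sigmoid(log_alpha - BETA * math.log(-GAMMA / ZETA))


def expected_l0(log_alphas):
    """Differentiable surrogate for the L0 penalty: expected count of open gates."""
    return sum(prob_gate_nonzero(a) for a in log_alphas)


if __name__ == "__main__":
    rng = random.Random(0)
    log_alphas = [-3.0, 0.0, 3.0]  # hypothetical per-weight gate parameters
    gates = [sample_gate(a, rng) for a in log_alphas]
    print("sampled gates:", gates)
    print("expected L0 penalty:", expected_l0(log_alphas))
```

In a training loop under these assumptions, each weight would be multiplied by its sampled gate in the forward pass, and `expected_l0` (scaled by a regularization strength) would be added to the task loss. Lowering `log_alpha` for a weight pushes its gate toward exactly zero, which is how sparsity would emerge.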