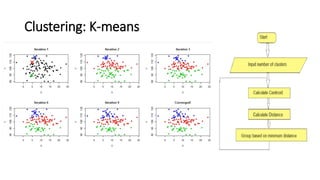

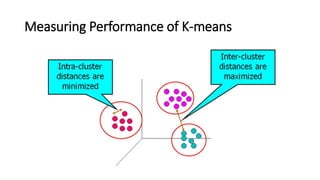

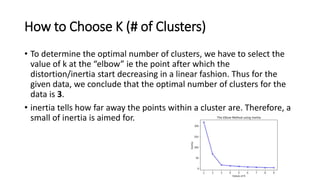

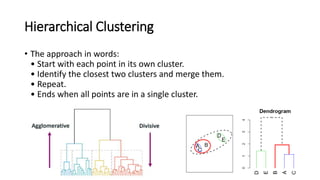

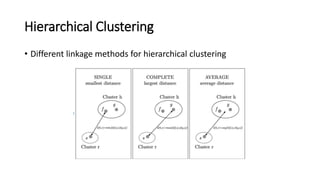

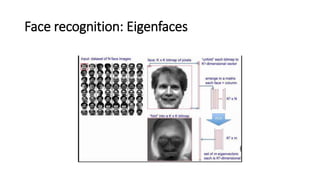

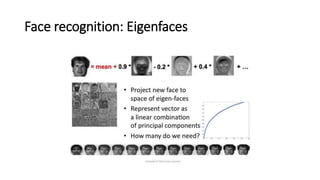

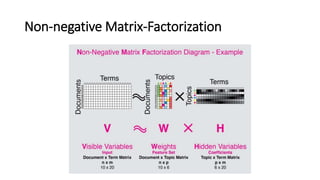

This document gives an overview of unsupervised learning techniques in scikit-learn: k-means clustering, hierarchical clustering, principal component analysis (PCA), and non-negative matrix factorization (NMF). It explains how to choose the number of clusters k for k-means with the elbow method: plot the distortion (the within-cluster sum of squared distances) against k and select the k at which the curve bends, that is, where the distortion stops dropping sharply and starts decreasing roughly linearly. It describes hierarchical clustering as successively merging the two closest clusters, and PCA as finding linear combinations of the variables with maximal variance, which yields low-dimensional representations of the data. NMF is characterized as factorizing a non-negative matrix into two non-negative matrices, where the columns of one represent topic probabilities and the rows of the other represent term frequencies in each topic.
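The elbow method summarized above can be sketched in a few lines. This is a minimal illustration, not the code from the slides: the synthetic data from `make_blobs`, the range of k values, and the "largest second difference" rule for locating the bend are all assumptions made for the example. The distortion is read from scikit-learn's `KMeans.inertia_` attribute.

```python
import numpy as np
from sklearn.cluster import KMeans
from sklearn.datasets import make_blobs

# Synthetic 2-D data with three well-separated groups (assumed for illustration).
X, _ = make_blobs(
    n_samples=300,
    centers=[[0, 0], [10, 0], [5, 9]],
    cluster_std=1.0,
    random_state=0,
)

# Fit k-means for a range of k and record the distortion (inertia_):
# the sum of squared distances from each point to its closest centroid.
ks = list(range(1, 9))
inertias = []
for k in ks:
    model = KMeans(n_clusters=k, n_init=10, random_state=0).fit(X)
    inertias.append(model.inertia_)

# Crude elbow heuristic (an assumption, not part of scikit-learn):
# pick the k where the curve bends most, i.e. the largest second difference.
# second_diff[i] corresponds to ks[i + 1].
second_diff = np.diff(inertias, n=2)
elbow_k = ks[int(np.argmax(second_diff)) + 1]

for k, inertia in zip(ks, inertias):
    print(f"k={k}: distortion={inertia:.1f}")
print("estimated elbow at k =", elbow_k)
```

On data this cleanly separated, the heuristic should land on k = 3. On real data the bend is often ambiguous, so the curve is usually plotted and judged by eye instead of relying on a single rule.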