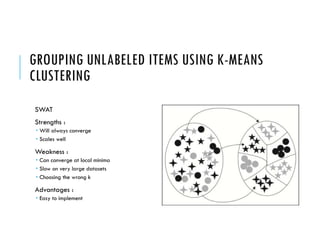

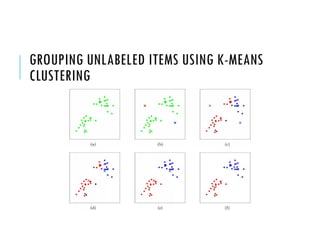

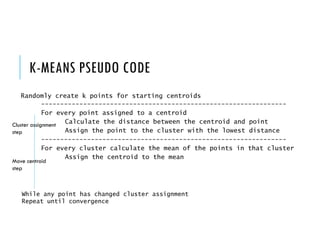

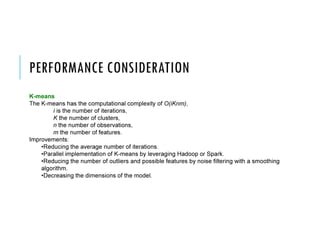

This document covers clustering techniques in machine learning. It first distinguishes supervised from unsupervised learning and places clustering in the unsupervised category. It then examines the k-means clustering algorithm in detail: how it works, the steps involved, and its strengths and weaknesses. It closes with considerations for k-means performance and examples of frameworks and platforms for machine learning.
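
To make the k-means steps concrete, here is a minimal sketch of the standard assign-then-update loop (often called Lloyd's algorithm). It is not taken from the slides: the function name `kmeans`, its parameters, the random initialisation, and the toy data are all assumptions chosen for illustration.

```python
import random


def kmeans(points, k, max_iters=100, seed=0):
    """Illustrative k-means on a list of equal-length numeric tuples.

    Hypothetical helper, not the document's own code.
    Returns (centroids, labels).
    """
    rng = random.Random(seed)
    # Initialisation: pick k distinct data points as starting centroids.
    # (One common choice; other schemes exist.)
    centroids = rng.sample(points, k)
    labels = None

    for _ in range(max_iters):
        # Assignment step: label each point with its nearest centroid,
        # using squared Euclidean distance.
        new_labels = [
            min(
                range(k),
                key=lambda j: sum((a - b) ** 2 for a, b in zip(p, centroids[j])),
            )
            for p in points
        ]
        # Stop once the assignments no longer change.
        if new_labels == labels:
            break
        labels = new_labels

        # Update step: move each centroid to the mean of its members.
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # leave an empty cluster's centroid where it is
                centroids[j] = tuple(sum(c) / len(members) for c in zip(*members))

    return centroids, labels


# Toy data (made up): two well-separated groups of three points each.
data = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
centroids, labels = kmeans(data, k=2)
print(centroids, labels)
```

The result depends on the initial centroids, which is one of the commonly cited weaknesses of k-means. On data less cleanly separated than this toy set, different seeds can yield different clusterings, so in practice the algorithm is often run several times and the best run kept.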