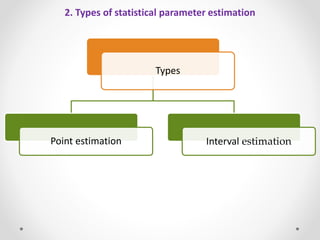

This document discusses statistical parameter estimation methods used to analyze experimental data. Point estimation computes a single value from sample data to serve as the best guess for an unknown population parameter; the methods mentioned are maximum likelihood estimation and linear least squares. Interval estimation instead specifies a lower and an upper bound for the parameter. An interval estimate is not more precise than a point estimate, but it is more informative: it reports a confidence interval constructed so that, over repeated sampling, it contains the true population parameter with a stated probability (for example 95%), which quantifies the uncertainty around the point estimate. The document provides examples of how these methods are used to analyze experimental data and make inferences about unknown population values.
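
The estimation methods named above can be illustrated with a minimal Python sketch. It is not taken from the document: the function names, the assumption of a normally distributed population, and the sample values are all hypothetical, and the interval uses a large-sample normal quantile rather than whatever construction the document itself may use.

```python
import math
from statistics import NormalDist, fmean


def mle_normal(sample):
    """Maximum likelihood estimates (mean, variance) assuming a normal population."""
    n = len(sample)
    mu_hat = fmean(sample)
    # The MLE of the variance divides by n, not by n - 1.
    var_hat = sum((x - mu_hat) ** 2 for x in sample) / n
    return mu_hat, var_hat


def least_squares_line(xs, ys):
    """Slope and intercept of the line minimizing the sum of squared residuals."""
    x_bar, y_bar = fmean(xs), fmean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar


def mean_confidence_interval(sample, level=0.95):
    """Approximate confidence interval for the mean (normal-quantile assumption)."""
    n = len(sample)
    mu_hat = fmean(sample)
    # Unbiased sample variance (divides by n - 1) for the standard error.
    s2 = sum((x - mu_hat) ** 2 for x in sample) / (n - 1)
    z = NormalDist().inv_cdf(0.5 + level / 2)
    half_width = z * math.sqrt(s2 / n)
    return mu_hat - half_width, mu_hat + half_width


if __name__ == "__main__":
    # Made-up measurements, for illustration only.
    sample = [9.8, 10.1, 10.4, 9.9, 10.3, 10.0, 9.7, 10.2]
    print("point estimate (mean, variance):", mle_normal(sample))
    print("interval estimate for the mean:", mean_confidence_interval(sample))
    print("fitted line (slope, intercept):", least_squares_line([0, 1, 2, 3], [1.1, 2.9, 5.2, 6.8]))
```

For small samples, a Student's t quantile would usually replace the normal quantile and give a somewhat wider interval; the normal version is used here only to keep the sketch within the standard library.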