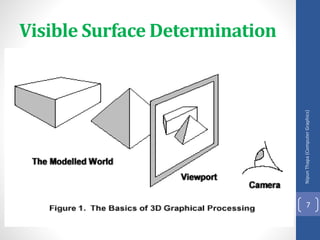

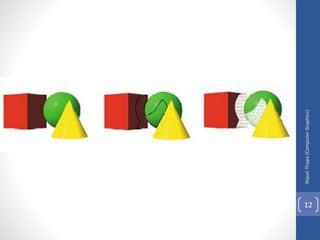

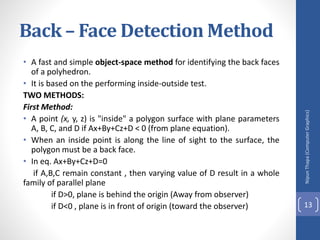

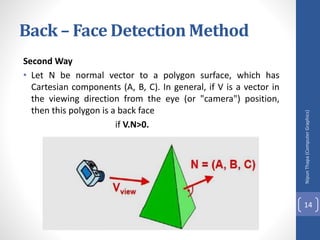

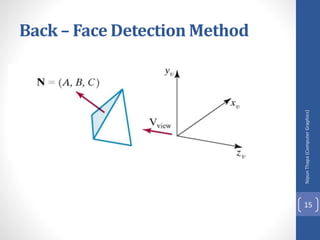

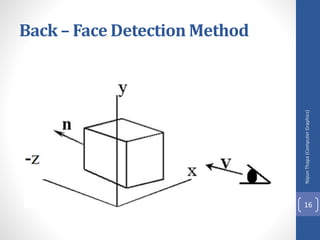

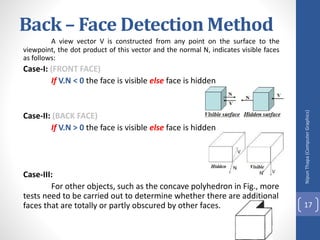

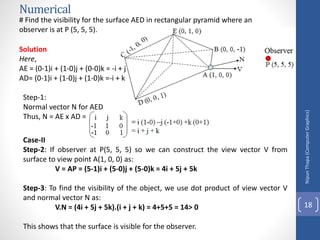

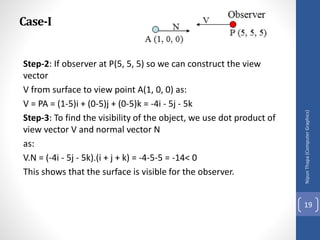

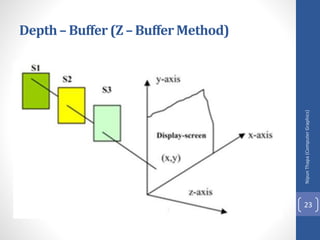

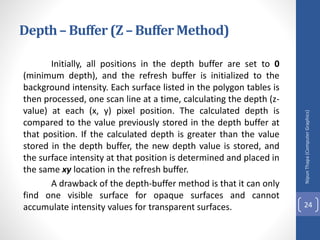

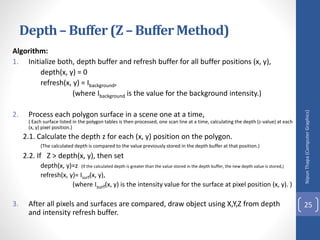

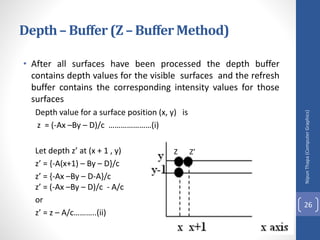

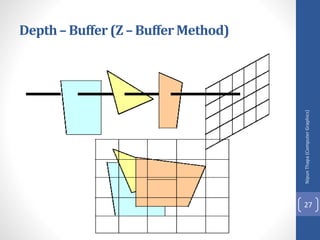

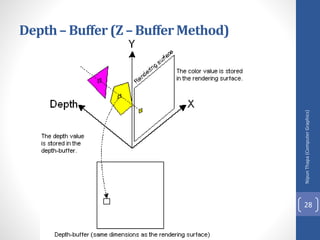

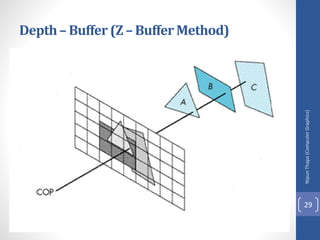

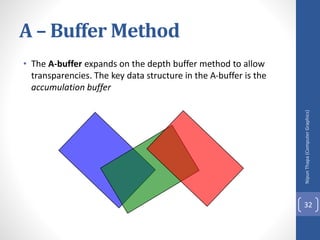

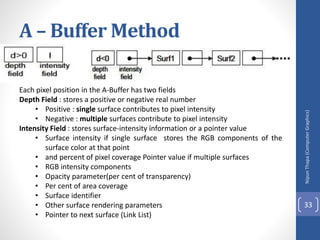

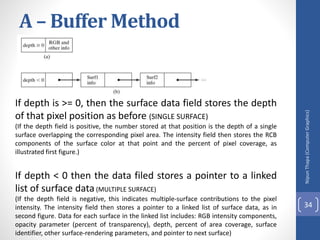

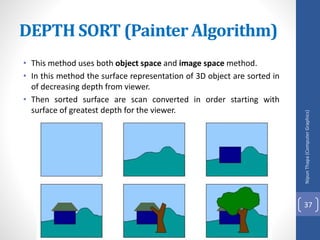

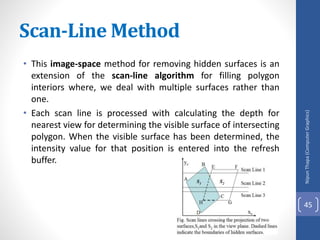

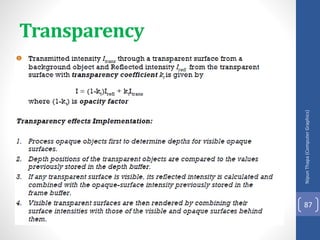

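This document discusses methods for visible-surface determination in 3D computer graphics. They fall into two groups. Object-space methods compare objects, or parts of objects, with one another within the scene to decide which surfaces are visible. Image-space methods decide visibility point by point at each pixel position on the projection plane. The specific methods covered are back-face detection, the depth-buffer (z-buffer) method, and the A-buffer method. Back-face detection discards polygons whose surface normal points away from the viewer. The depth-buffer method keeps two buffers with one entry per pixel, a depth buffer and a color (refresh) buffer; as each surface is processed, its depth at a pixel is compared with the stored depth, and both buffers are updated whenever the new surface is nearer. The A-buffer method extends the depth buffer so that each pixel can accumulate intensities from several surfaces, which allows transparent surfaces to be rendered.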
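The three tests summarized above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation from the slides: surfaces are assumed to arrive already rasterized as hypothetical `(x, y, depth, color)` fragments, smaller depth values are assumed to be nearer the viewer, and the view direction is assumed to point from the eye into the scene. The A-buffer part is a simplified per-pixel fragment list composited front to back; the full A-buffer also stores subpixel coverage masks, which are omitted here.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Fragment = Tuple[int, int, float, str]  # hypothetical (x, y, depth, color)


def is_back_face(normal: Vec3, view_dir: Vec3) -> bool:
    """Back-face test: the polygon faces away from the viewer when N . V > 0.

    Assumes view_dir points from the eye into the scene.
    """
    return sum(n * v for n, v in zip(normal, view_dir)) > 0


def depth_buffer(width: int, height: int,
                 surfaces: Sequence[Sequence[Fragment]],
                 background: str = "bg"):
    """Depth-buffer (z-buffer) visibility; assumes smaller depth = nearer."""
    # Depth buffer starts at "infinitely far"; color buffer starts at background.
    depth = [[float("inf")] * width for _ in range(height)]
    frame = [[background] * width for _ in range(height)]
    for fragments in surfaces:            # surfaces may arrive in any order
        for x, y, z, color in fragments:
            if z < depth[y][x]:           # nearer than what is stored here
                depth[y][x] = z
                frame[y][x] = color
    return frame, depth


def resolve_a_buffer(fragments: List[Tuple[float, float, float]],
                     background: float = 0.0) -> float:
    """Simplified A-buffer resolve for one pixel.

    fragments holds (depth, intensity, opacity) for every surface covering
    the pixel; they are sorted nearest first and composited front to back.
    """
    result, transmittance = 0.0, 1.0
    for _z, intensity, alpha in sorted(fragments):
        result += transmittance * alpha * intensity
        transmittance *= 1.0 - alpha
        if transmittance == 0.0:          # an opaque surface hides the rest
            break
    return result + transmittance * background
```

For example, drawing a far red surface and then a nearer blue fragment at pixel `(0, 0)` leaves blue there and red at `(1, 0)`, regardless of drawing order. In the A-buffer sketch, a half-transparent white fragment in front of an opaque black one resolves to an intensity of `0.5`.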