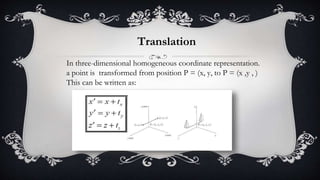

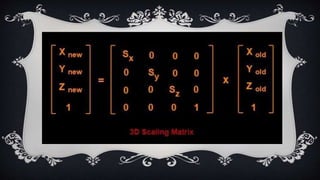

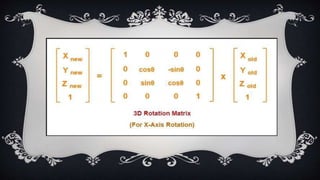

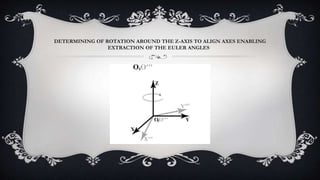

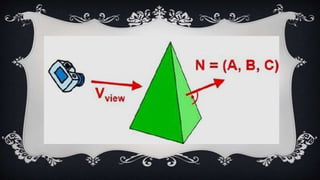

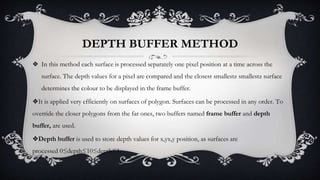

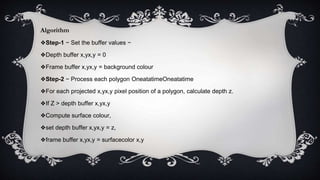

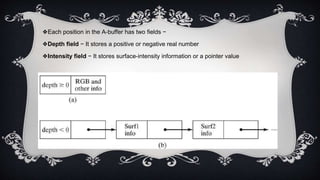

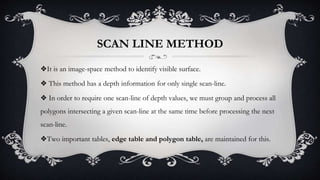

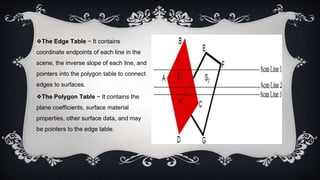

The document covers 3D geometric transformations (translation, scaling, and rotation) and the classification of visible-surface detection algorithms, including the back-face detection, depth-buffer, A-buffer, and scan-line methods. It explains how geometric transformations change an object's representation and describes how visible surfaces are determined in computer graphics. It also mentions applications in computer-aided design, animation, and scientific visualization.
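
As a rough illustration of the topics summarized above, the following minimal sketch builds the three basic transformations as 4x4 homogeneous matrices and applies a simple back-face test. It is not taken from the slides: the function names (`translation`, `scaling`, `rotation_z`, `apply`, `is_back_face`) are hypothetical, rotation is shown about the z-axis only, and the back-face test assumes the common convention that a polygon is a back face when the dot product of the viewing direction and the outward surface normal is positive.

```python
import math

def translation(tx, ty, tz):
    # Homogeneous matrix that moves a point by (tx, ty, tz).
    return [[1, 0, 0, tx],
            [0, 1, 0, ty],
            [0, 0, 1, tz],
            [0, 0, 0, 1]]

def scaling(sx, sy, sz):
    # Homogeneous matrix that scales a point relative to the origin.
    return [[sx, 0, 0, 0],
            [0, sy, 0, 0],
            [0, 0, sz, 0],
            [0, 0, 0, 1]]

def rotation_z(theta):
    # Homogeneous matrix for a counterclockwise rotation of theta radians
    # about the z-axis (assumed axis; other axes are analogous).
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0, 0],
            [s,  c, 0, 0],
            [0,  0, 1, 0],
            [0,  0, 0, 1]]

def apply(matrix, point):
    # Multiply a 4x4 matrix by the column vector (x, y, z, 1)
    # and return the transformed (x, y, z).
    x, y, z = point
    column = (x, y, z, 1)
    result = [sum(row[i] * column[i] for i in range(4)) for row in matrix]
    return tuple(result[:3])

def is_back_face(normal, view_direction):
    # Assumed convention: the polygon is a back face when V . N > 0,
    # where V points from the viewer into the scene and N is the
    # outward surface normal.
    dot = sum(n * v for n, v in zip(normal, view_direction))
    return dot > 0
```

For example, `apply(translation(1, 2, 3), (0, 0, 0))` moves the origin to `(1, 2, 3)`, and with a viewer looking along the negative z-axis, a polygon whose normal is `(0, 0, -1)` is classified as a back face.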