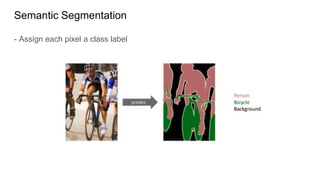

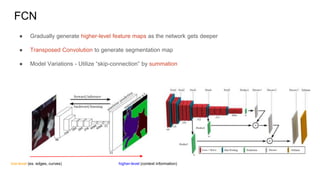

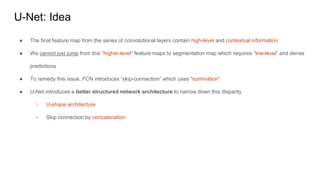

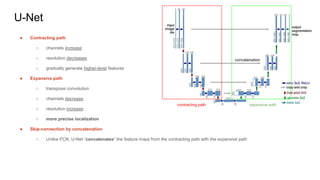

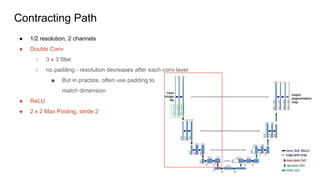

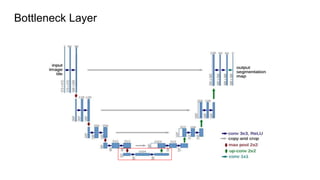

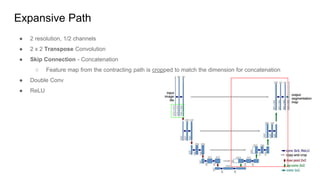

This document summarizes the U-Net convolutional network architecture for biomedical image segmentation. U-Net builds on Fully Convolutional Networks (FCNs) by adding a symmetric, U-shaped layout: a contracting path that captures context and an expansive path that restores resolution, linked by skip connections. The skip connections pass high-resolution feature maps from the contracting path to the matching level of the expansive path, so fine localization detail is combined with the contextual information carried up from the coarser layers. This improves segmentation of biomedical images, which often contain objects at multiple scales. U-Net has also been shown to perform well with limited training data, helped by its use of context and, in the original paper, by heavy data augmentation.
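To make the U shape and the skip connections concrete, the following is a minimal sketch that traces feature-map side lengths through the network. It is not an implementation of U-Net itself. The function name `unet_shape_trace` and its parameters are hypothetical, and the defaults (572-pixel input, four levels, two unpadded 3x3 convolutions per level, 2x2 pooling, 2x up-convolution) are assumptions taken from the configuration described in the original U-Net paper, not from the summary above.

```python
def unet_shape_trace(input_size=572, depth=4, convs_per_level=2, kernel=3):
    """Trace square feature-map side lengths through a U-Net-style network.

    Assumes unpadded ("valid") convolutions, 2x2 max pooling with stride 2,
    and up-convolutions that double the side length.
    """
    # Each valid convolution removes (kernel - 1) pixels from the side length.
    shrink = convs_per_level * (kernel - 1)
    size = input_size
    skips = []  # side lengths saved on the contracting path

    # Contracting path: convolve, remember the feature map, then downsample.
    for _ in range(depth):
        size -= shrink
        skips.append(size)
        if size % 2:
            raise ValueError(f"cannot 2x2-pool an odd side length: {size}")
        size //= 2

    # Convolutions at the lowest resolution (bottom of the U).
    size -= shrink

    # Expansive path: upsample, crop the matching skip map, concatenate, convolve.
    crops = []
    for skip in reversed(skips):
        size *= 2
        crops.append((skip, size))  # skip map is center-cropped from skip to size
        size -= shrink

    return size, crops


output_size, crops = unet_shape_trace()
print(output_size)
for skip, cropped in crops:
    print(f"skip {skip} -> cropped to {cropped}")
```

With these assumed defaults, each skip map is larger than the upsampled map it joins, which is why the unpadded variant has to crop before concatenating. A padded variant would keep the sizes equal and skip the cropping step.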

References

[1] Fully Convolutional Networks for Semantic Segmentation. https://arxiv.org/pdf/1411.4038.pdf

[2] U-Net: Convolutional Networks for Biomedical Image Segmentation. https://arxiv.org/abs/1505.04597