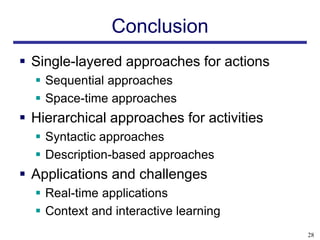

This document discusses applications and challenges in human activity analysis using computer vision techniques. It begins by describing current applications, such as object recognition in images and videos for tasks including pedestrian detection. It then discusses challenges: analyzing longer and more complex activities that involve interactions between humans, objects, and environments, processing continuous video streams in real time, and handling large, noisy video databases. The document concludes by discussing future directions, including 3D modeling of activities, incorporating context such as objects and poses, interactive learning approaches, and using active learning techniques to generate training videos.

![Real-time using GP-GPUs](https://image.slidesharecdn.com/tutorialcvpr2011applicationspdf3062/85/cvpr2011-human-activity-recognition-part-6-applications-17-320.jpg)

General purpose graphics processing units (GP-GPUs)

Multiple cores running thousands of threads

Parallel processing

20 times speed-up of the video comparison method in Shechtman and Irani 2005

[Rofouei, M., Moazeni, M., and Sarrafzadeh, M., Fast GPU-based space-time correlation for activity recognition in video sequences, 2008]
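To see why this kind of video comparison suits a GP-GPU, it may help to look at a deliberately simple stand-in. The sketch below slides a small space-time template over a video volume and scores every position with plain normalized cross-correlation. This is an assumption made for illustration only: it is not the behavior-based measure of Shechtman and Irani, nor the GPU implementation of Rofouei et al., and the names `ncc`, `patch`, and `correlate` are hypothetical. The point is that each `(t0, y0, x0)` score depends only on its own block of the video, so the three nested loops could in principle be handed to thousands of independent threads.

```python
import random
from math import sqrt


def ncc(a, b):
    """Normalized cross-correlation of two equal-length flat lists."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    denom = sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if denom == 0.0:
        return 0.0  # a constant block carries no pattern to match
    return sum(x * y for x, y in zip(da, db)) / denom


def patch(video, t0, y0, x0, T, H, W):
    """Flatten the T x H x W block of `video` that starts at (t0, y0, x0)."""
    return [video[t][y][x]
            for t in range(t0, t0 + T)
            for y in range(y0, y0 + H)
            for x in range(x0, x0 + W)]


def correlate(video, template):
    """Score every placement of `template` inside `video`.

    Both arguments are nested lists indexed as [frame][row][column].
    Each iteration is independent of all others, which is the property
    a parallel implementation would exploit (one thread per position).
    """
    T, H, W = len(template), len(template[0]), len(template[0][0])
    VT, VH, VW = len(video), len(video[0]), len(video[0][0])
    flat_template = patch(template, 0, 0, 0, T, H, W)
    scores = {}
    for t0 in range(VT - T + 1):
        for y0 in range(VH - H + 1):
            for x0 in range(VW - W + 1):
                block = patch(video, t0, y0, x0, T, H, W)
                scores[(t0, y0, x0)] = ncc(block, flat_template)
    return scores


# Toy data (made up): a 5-frame, 6x6 random video and a 2x3x3 template
# cut from it at frame 2, row 1, column 3.
rng = random.Random(0)
video = [[[rng.random() for _ in range(6)] for _ in range(6)] for _ in range(5)]
template = [[row[3:6] for row in frame[1:4]] for frame in video[2:4]]

scores = correlate(video, template)
best = max(scores, key=scores.get)
print(best, round(scores[best], 6))
```

On the toy data, the best-scoring position is the one the template was cut from. A real system would work on far larger volumes, which is where the per-position independence starts to matter.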

![Challenges – activity context](https://image.slidesharecdn.com/tutorialcvpr2011applicationspdf3062/85/cvpr2011-human-activity-recognition-part-6-applications-18-320.jpg)

An activity involves interactions among humans, objects, and scenes

Running? Kicking?

Activity recognition must consider motion-object context!

[Gupta, A., Kembhavi, A., and Davis, L., Observing Human-Object Interactions: Using Spatial and Functional Compatibility for Recognition, IEEE T PAMI 2009]
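The "Running? Kicking?" ambiguity on the slide above can be made concrete with a toy calculation. The sketch below fuses a motion cue with an object cue under a naive conditional-independence assumption. It is not the model of Gupta et al.; the function name `fuse_context` and all likelihood values are hypothetical, chosen only to show how an object observation can separate two activities whose motion looks alike.

```python
def fuse_context(motion_likelihood, object_likelihood, prior=None):
    """Combine two cues into a posterior over activities.

    Assumes (for illustration) that the motion and object observations
    are conditionally independent given the activity, so that
    posterior(a) is proportional to
    prior(a) * P(motion | a) * P(object | a).
    """
    activities = list(motion_likelihood)
    if prior is None:
        # Uniform prior when nothing else is known
        prior = {a: 1.0 / len(activities) for a in activities}
    unnormalized = {
        a: prior[a] * motion_likelihood[a] * object_likelihood[a]
        for a in activities
    }
    total = sum(unnormalized.values())
    if total == 0.0:
        raise ValueError("all activities have zero joint likelihood")
    return {a: v / total for a, v in unnormalized.items()}


# Made-up numbers: the leg motion alone cannot separate the two activities...
motion = {"running": 0.5, "kicking": 0.5}
# ...but a ball detected near the foot is far more likely under "kicking".
ball_near_foot = {"running": 0.1, "kicking": 0.8}

posterior = fuse_context(motion, ball_near_foot)
print(posterior)
```

With motion alone the two activities are tied. Once the object cue is multiplied in, most of the probability mass moves to "kicking", which is the sense in which recognition needs motion-object context.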

![Activity context – pose](https://image.slidesharecdn.com/tutorialcvpr2011applicationspdf3062/85/cvpr2011-human-activity-recognition-part-6-applications-19-320.jpg)

Object-pose-motion

[Yao, B. and Fei-Fei, L., Modeling Mutual Context of Object and Human Pose in Human-Object Interaction Activities, CVPR 2010]