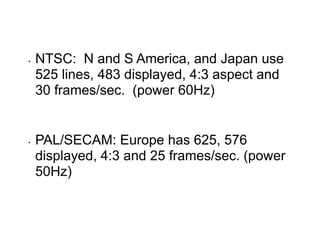

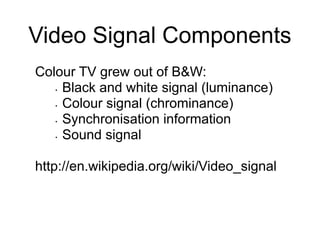

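Video and television systems work by presenting a sequence of still images (frames) quickly enough that the human visual system perceives them as continuous motion. Different regions use different television standards, which fix parameters such as the number of scan lines, the frame rate, and the color encoding system. For example, NTSC (used mainly in North America and Japan) has 525 lines at about 30 frames per second, while PAL and SECAM have 625 lines at 25 frames per second. Video compression standards such as MPEG greatly reduce file sizes for storage and transmission by removing spatial redundancy (similar pixel values within a single frame) and temporal redundancy (content that changes little from one frame to the next), while maintaining adequate quality.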
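To make temporal redundancy concrete, here is a minimal Python sketch. It is not how MPEG works internally. Real MPEG codecs combine motion-compensated prediction between frames with transform coding (typically a DCT) within frames. This sketch instead assumes the simplest possible scheme: store the first frame whole, then store each later frame only as the pixels that changed from the previous one. The names `encode`, `decode`, `make_frames`, and `stored_size` are hypothetical, and the test clip is synthetic.

```python
from typing import List

Frame = List[List[int]]  # grayscale pixel values, one list per row


def encode(frames: List[Frame]) -> List[dict]:
    """Store the first frame whole (a "key" frame), then each later frame as
    a sparse list of (row, col, delta) entries for pixels that changed."""
    encoded = [{"type": "key", "pixels": [row[:] for row in frames[0]]}]
    for prev, cur in zip(frames, frames[1:]):
        changes = [
            (r, c, cur[r][c] - prev[r][c])
            for r in range(len(cur))
            for c in range(len(cur[r]))
            if cur[r][c] != prev[r][c]
        ]
        encoded.append({"type": "delta", "changes": changes})
    return encoded


def decode(encoded: List[dict]) -> List[Frame]:
    """Rebuild every frame by applying each delta to the previous frame."""
    frames = [[row[:] for row in encoded[0]["pixels"]]]
    for item in encoded[1:]:
        frame = [row[:] for row in frames[-1]]
        for r, c, delta in item["changes"]:
            frame[r][c] += delta
        frames.append(frame)
    return frames


def stored_size(encoded: List[dict]) -> int:
    """Count the integers stored: every key-frame pixel, plus three integers
    (row, col, delta) for each changed pixel in the delta frames."""
    key = sum(len(row) for row in encoded[0]["pixels"])
    deltas = sum(3 * len(item["changes"]) for item in encoded[1:])
    return key + deltas


def make_frames(n: int, width: int = 16, height: int = 16) -> List[Frame]:
    """Synthetic clip: a flat grey background with a bright 2x2 square that
    moves one pixel to the right in each frame."""
    frames = []
    for t in range(n):
        frame = [[50] * width for _ in range(height)]
        for r in range(7, 9):
            for c in range(t, t + 2):
                frame[r][c] = 200
        frames.append(frame)
    return frames


if __name__ == "__main__":
    clip = make_frames(10)
    enc = encode(clip)
    raw = sum(len(row) for f in clip for row in f)
    print(f"raw: {raw} integers, encoded: {stored_size(enc)} integers")
    assert decode(enc) == clip  # the round trip is lossless
```

Running it prints `raw: 2560 integers, encoded: 364 integers`. Only four pixels at the edges of the moving square change between consecutive frames, so each delta frame costs 12 integers instead of 256. This toy scheme is lossless. MPEG additionally quantizes its transform coefficients, a lossy step that trades some quality for much smaller output.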