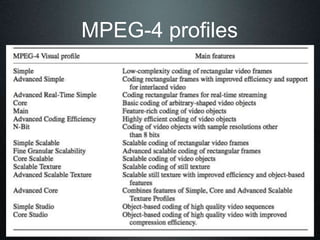

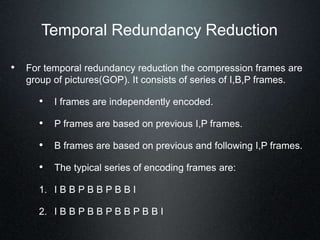

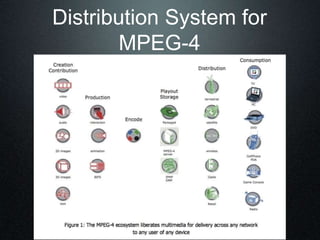

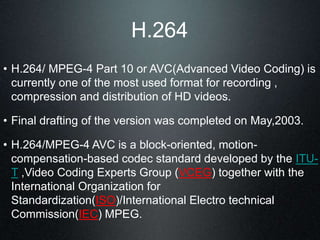

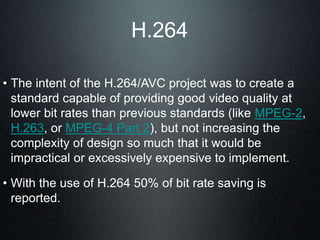

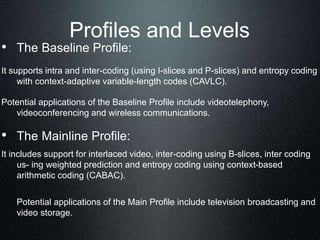

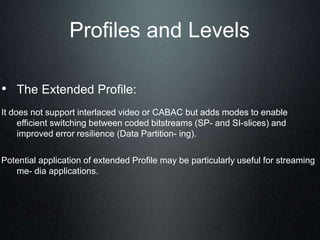

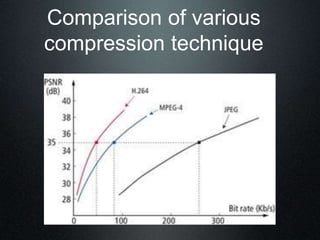

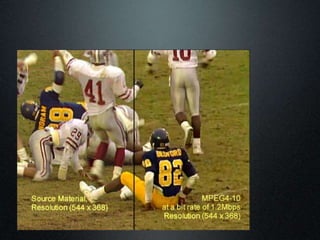

This document compares the video compression standards MPEG-4 and H.264. It discusses key factors in video compression, such as spatial and temporal sampling, and gives an overview of MPEG-4, including object-based coding, profiles, and levels. H.264 is introduced as a standard that offers roughly 50% bit-rate savings over MPEG-2 at comparable video quality. Profiles and levels are explained for both standards, and common uses of each are listed, along with options for future development.

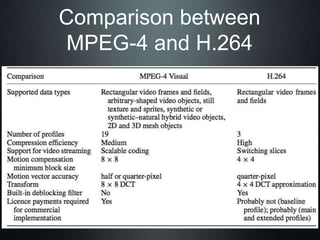

![Size of uncompressed video and bandwidth of carriers](https://image.slidesharecdn.com/mpeg4copy-120428133000-phpapp01-231020075415-c8d73b86/85/mpeg4copy-120428133000-phpapp01-ppt-7-320.jpg)

Size of uncompressed video and bandwidth of carriers:

| Video source | Output data rate (kbit/s) |
|---|---|
| Quarter VGA (320×240) @ 20 frames/s | 36,864 |
| CIF camera (352×288) @ 30 frames/s | 72,990 |
| VGA (640×480) @ 30 frames/s | 221,184 |

| Transmission medium | Data rate (kbit/s) |
|---|---|
| Wireline modem | 56 |
| GPRS (estimated average rate) | 30 |
| 3G/WCDMA (theoretical maximum) | 384 |
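The uncompressed rates in the table follow from width × height × bits per pixel × frame rate. The slide does not state its assumptions, but 24 bits per pixel (8 bits for each of three colour components) and 1 kbit = 1000 bits reproduce every figure. The minimal sketch below recomputes the rates under those assumptions and estimates the compression ratio each carrier would need. The names `raw_kbps` and `required_ratio` are hypothetical helpers for illustration only.

```python
# Raw (uncompressed) video bit rate = width * height * bits_per_pixel * fps.
# ASSUMPTIONS (not stated on the slide): 24 bits per pixel and 1 kbit = 1000 bits.
# These assumptions reproduce the table's figures.

BITS_PER_PIXEL = 24  # assumed: 8 bits for each of three colour components


def raw_kbps(width: int, height: int, fps: int, bpp: int = BITS_PER_PIXEL) -> float:
    """Uncompressed video data rate in kbit/s (hypothetical helper)."""
    return width * height * bpp * fps / 1000


def required_ratio(source_kbps: float, carrier_kbps: float) -> float:
    """Compression ratio needed to fit the source into the carrier's rate."""
    return source_kbps / carrier_kbps


# Video sources from the table: (width, height, frames per second)
SOURCES = {
    "Quarter VGA 320x240 @ 20 fps": (320, 240, 20),
    "CIF 352x288 @ 30 fps": (352, 288, 30),
    "VGA 640x480 @ 30 fps": (640, 480, 30),
}

# Transmission media from the table, in kbit/s
CARRIERS = {
    "Wireline modem": 56,
    "GPRS (estimated average)": 30,
    "3G/WCDMA (theoretical maximum)": 384,
}

if __name__ == "__main__":
    for name, (w, h, fps) in SOURCES.items():
        rate = raw_kbps(w, h, fps)
        print(f"{name}: {rate:,.0f} kbit/s uncompressed")
        for carrier, capacity in CARRIERS.items():
            ratio = required_ratio(rate, capacity)
            print(f"  about {ratio:,.0f}:1 compression needed for {carrier}")
```

For example, Quarter VGA at 20 frames/s needs about 96:1 compression to fit the theoretical 384 kbit/s of 3G/WCDMA and about 1,229:1 to fit an average GPRS link, which illustrates why efficient codecs such as MPEG-4 and H.264 are needed for these carriers.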