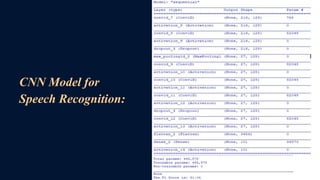

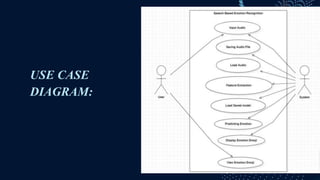

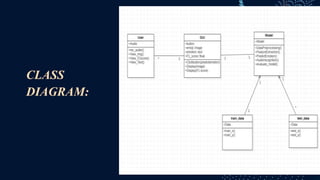

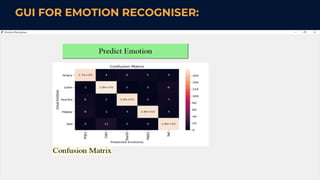

This document describes a student project on speech-based emotion recognition. The project extracts mel-frequency cepstral coefficients (MFCCs) from speech and feeds them to a convolutional neural network (CNN) that classifies the emotion into categories such as happy, sad, fearful, calm, and angry. According to the document, the proposed system improves on existing systems in three ways: it accepts audio inputs of variable length, it processes them faster, and it classifies more emotion categories in real time. The reported test accuracy is 91.04%.
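As a rough illustration of the feature-extraction step described above, the following sketch computes MFCCs with NumPy alone and then pads or truncates them to a fixed number of frames. It is not the project's code: the sample rate, frame size, hop size, filter count, and the `fix_length` helper are all assumptions chosen for the example, and the summary does not say how the project actually handles variable-length input. The CNN itself is left out; the fixed-size matrix produced here is simply the kind of input such a classifier could consume.

```python
import numpy as np


def hz_to_mel(hz):
    return 2595.0 * np.log10(1.0 + hz / 700.0)


def mel_to_hz(mel):
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_filterbank(n_mels, n_fft, sample_rate):
    """Triangular filters evenly spaced on the mel scale: (n_mels, n_fft//2 + 1)."""
    mel_points = np.linspace(hz_to_mel(0.0), hz_to_mel(sample_rate / 2.0), n_mels + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mel_points) / sample_rate).astype(int)
    fbank = np.zeros((n_mels, n_fft // 2 + 1))
    for m in range(1, n_mels + 1):
        left, center, right = bins[m - 1], bins[m], bins[m + 1]
        for k in range(left, center):      # rising edge of the triangle
            fbank[m - 1, k] = (k - left) / max(center - left, 1)
        for k in range(center, right):     # falling edge of the triangle
            fbank[m - 1, k] = (right - k) / max(right - center, 1)
    return fbank


def mfcc(signal, sample_rate=16000, n_fft=512, hop=160, n_mels=26, n_mfcc=13):
    """Return an (n_frames, n_mfcc) MFCC matrix. All defaults are assumed values."""
    signal = np.asarray(signal, dtype=float)
    if len(signal) < n_fft:                # make sure there is at least one frame
        signal = np.pad(signal, (0, n_fft - len(signal)))
    n_frames = 1 + (len(signal) - n_fft) // hop
    idx = np.arange(n_fft)[None, :] + hop * np.arange(n_frames)[:, None]
    frames = signal[idx] * np.hamming(n_fft)
    power = np.abs(np.fft.rfft(frames, n=n_fft, axis=1)) ** 2 / n_fft
    mel_energy = power @ mel_filterbank(n_mels, n_fft, sample_rate).T
    log_mel = np.log(mel_energy + 1e-10)   # small offset avoids log(0)
    # Unnormalized DCT-II over the mel axis, keeping the first n_mfcc coefficients.
    n = np.arange(n_mels)
    basis = np.cos(np.pi * np.arange(n_mfcc)[:, None] * (2 * n[None, :] + 1) / (2 * n_mels))
    return log_mel @ basis.T


def fix_length(features, target_frames):
    """Hypothetical helper: zero-pad or truncate along time to a fixed frame count."""
    n_frames = features.shape[0]
    if n_frames >= target_frames:
        return features[:target_frames]
    pad = np.zeros((target_frames - n_frames, features.shape[1]))
    return np.vstack([features, pad])


# One second of a synthetic 440 Hz tone stands in for a speech clip.
tone = np.sin(2 * np.pi * 440 * np.arange(16000) / 16000)
features = mfcc(tone)                      # shape (97, 13) with the defaults above
cnn_input = fix_length(features, 100)      # shape (100, 13), regardless of clip length
```

Zero-padding to a fixed frame count is only one way to handle clips of different lengths; global pooling inside the network is another common choice, and the summary does not indicate which, if either, the project uses.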