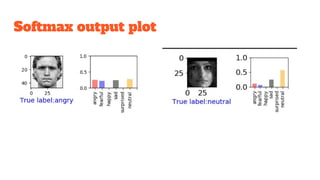

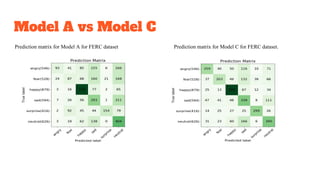

This document describes a project to build a convolutional neural network (CNN) model that recognizes six basic human emotions (angry, fear, happy, sad, surprise, neutral) from facial expressions. The CNN architecture consists of convolutional, max-pooling, and fully connected layers. Models are trained on two datasets: FERC and RaFD. Experimental results show that Model C achieves the best testing accuracy, 71.15% on FERC and 63.34% on RaFD. Visualizations of activation maps and a prediction matrix are provided to analyze the model's performance and the emotions it confuses with one another. A live demo application built with OpenCV demonstrates real-time emotion recognition from video frames.
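A "prediction matrix" of this kind is usually a confusion matrix: row i counts test samples whose true emotion is i, column j counts those predicted as j, and the diagonal holds the correct predictions, so testing accuracy is the diagonal total divided by the number of samples. The following is a minimal pure-Python sketch under that assumption. The function names, the label-to-index order, and the toy labels are hypothetical and are not the project's code or data.

```python
# Hypothetical sketch: confusion ("prediction") matrix and accuracy for the
# six emotion classes. Index order below is an assumption for illustration.
EMOTIONS = ["angry", "fear", "happy", "sad", "surprise", "neutral"]


def prediction_matrix(y_true, y_pred, n_classes=len(EMOTIONS)):
    """Return an n x n list of lists: matrix[t][p] counts samples with
    true class t that the model predicted as class p."""
    matrix = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        matrix[t][p] += 1
    return matrix


def accuracy(matrix):
    """Fraction of samples on the diagonal (correct predictions)."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total if total else 0.0


def row_normalize(matrix):
    """Turn counts into per-true-class rates; each non-empty row sums to 1."""
    rates = []
    for row in matrix:
        s = sum(row)
        rates.append([c / s if s else 0.0 for c in row])
    return rates


if __name__ == "__main__":
    # Toy labels made up for illustration, not results from this project.
    y_true = [0, 0, 1, 2, 2, 3, 4, 5, 5, 1]
    y_pred = [0, 3, 1, 2, 2, 3, 4, 5, 3, 4]
    m = prediction_matrix(y_true, y_pred)
    print(f"accuracy = {accuracy(m):.2f}")  # prints: accuracy = 0.70
    for name, row in zip(EMOTIONS, row_normalize(m)):
        print(f"{name:>8}: " + " ".join(f"{r:.2f}" for r in row))
```

Reading off-diagonal cells (here, "angry" and "neutral" samples both predicted as "sad") is what shows which emotions a model confuses.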

![TFLearn

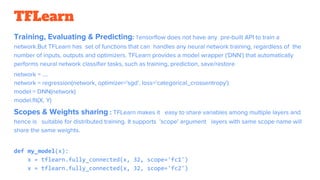

TFlearn is a modular and transparent deep learning library built on top of Tensorflow.TFLearn is a

high-level API for fast neural network building and training.

Layers:Defining a model using Tensorflow completely is time consuming and repetitive, TFLearn has

"layers" that represent an abstract set of operations , which make building neural networks more

convenient.

Tensoflow:

with tf.name_scope('conv1'):

W = tf.Variable(tf.random_normal([5, 5, 1, 32]), dtype=tf.float32, name='Weights')

b = tf.Variable(tf.random_normal([32]), dtype=tf.float32, name='biases')

x = tf.nn.conv2d(x, W, strides=[1, 1, 1, 1], padding='SAME')

x = tf.add_bias(W, b)

x = tf.nn.relu(x)

TfLearn:

tflearn.conv_2d(x, 32, 5, activation='relu', name='conv1')](https://image.slidesharecdn.com/humanemotionrecognition-170922175633/85/Human-Emotion-Recognition-9-320.jpg)
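To make concrete what the one-line TFLearn layer bundles, here is a minimal pure-Python sketch (no TensorFlow) of the same three steps for a single-channel input: stride-1 cross-correlation with 'SAME' zero padding, bias add, and ReLU. The function names are hypothetical and nested lists stand in for tensors. It illustrates the arithmetic only; it is not TFLearn's implementation, which additionally creates and initializes the weight and bias variables for you.

```python
# Hypothetical sketch of one "conv -> bias add -> ReLU" layer, single input
# channel, stride 1, 'SAME' padding. Lists of lists stand in for tensors.


def conv2d_same(image, kernel):
    """Cross-correlate a 2-D image with a 2-D kernel (stride 1, 'SAME').

    Output height/width equal the input's. As in tf.nn.conv2d, the kernel
    is not flipped.
    """
    h, w = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    # TensorFlow-style SAME padding: any extra padding goes bottom/right.
    pad_top, pad_left = (kh - 1) // 2, (kw - 1) // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in range(kh):
                for dj in range(kw):
                    r, c = i + di - pad_top, j + dj - pad_left
                    if 0 <= r < h and 0 <= c < w:  # zeros outside the image
                        acc += image[r][c] * kernel[di][dj]
            out[i][j] = acc
    return out


def relu(m):
    """Element-wise max(0, v)."""
    return [[max(0.0, v) for v in row] for row in m]


def conv_layer(image, kernels, biases):
    """One feature map per (kernel, bias) pair: conv, then bias add, then ReLU,
    mirroring the three separate TensorFlow calls shown above."""
    maps = []
    for kernel, b in zip(kernels, biases):
        z = conv2d_same(image, kernel)
        z = [[v + b for v in row] for row in z]
        maps.append(relu(z))
    return maps


if __name__ == "__main__":
    image = [[1, 2, 0], [0, 1, 3], [4, 0, 1]]
    # Toy 3x3 kernel: each pixel minus its left neighbour.
    edge = [[0, 0, 0], [-1, 1, 0], [0, 0, 0]]
    print(conv_layer(image, [edge], [0.0])[0])
    # → [[1.0, 1.0, 0.0], [0.0, 1.0, 2.0], [4.0, 0.0, 1.0]]
```

A real layer vectorizes these loops and stacks many filters (32 in the example above); the explicit loops here only make the sliding-window sum and the padding rule visible.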