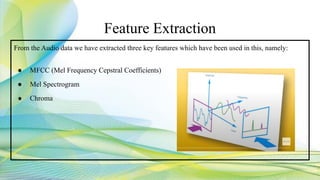

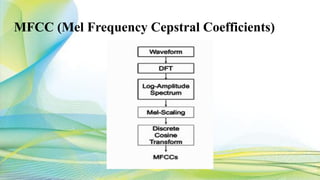

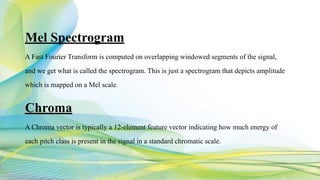

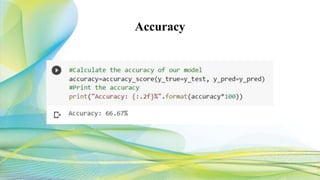

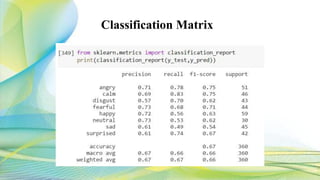

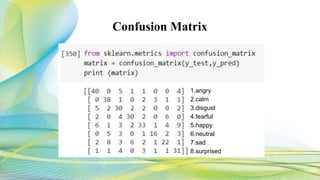

This presentation covers speech emotion recognition with machine learning. It builds a model that recognizes emotion from speech using the librosa and sklearn libraries and the RAVDESS dataset. MFCC, mel spectrogram, and chroma features are extracted from the recordings, and an MLP classifier assigns each recording to one of 8 emotion categories, reaching an accuracy of 66.67%. The model is best at identifying the calm emotion and tends to confuse emotions that sound similar. Future work could explore larger datasets covering different speakers and accents, together with CNN or RNN models.
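The pipeline summarized above can be sketched roughly as follows. This is a minimal illustration, not the presenters' code: the function names `extract_features` and `train_and_evaluate`, the choice to average each feature over time, the 40 MFCC coefficients, the 75/25 split, and the MLP hyperparameters are all assumptions made for the example.

```python
import numpy as np
from sklearn.metrics import accuracy_score, confusion_matrix
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier


def extract_features(path):
    """Return one feature vector (MFCC + chroma + mel means) for an audio file."""
    import librosa  # imported lazily so the training code runs without it

    signal, sample_rate = librosa.load(path, sr=None)
    # Average each feature over time so every file yields a fixed-length vector.
    mfcc = np.mean(librosa.feature.mfcc(y=signal, sr=sample_rate, n_mfcc=40), axis=1)
    chroma = np.mean(librosa.feature.chroma_stft(y=signal, sr=sample_rate), axis=1)
    mel = np.mean(librosa.feature.melspectrogram(y=signal, sr=sample_rate), axis=1)
    return np.hstack([mfcc, chroma, mel])


def train_and_evaluate(features, labels, seed=0):
    """Fit an MLP on a train split; return (model, accuracy, confusion matrix)."""
    x_train, x_test, y_train, y_test = train_test_split(
        features, labels, test_size=0.25, random_state=seed, stratify=labels
    )
    # Hyperparameters are illustrative assumptions, not the presenters' values.
    model = MLPClassifier(hidden_layer_sizes=(300,), max_iter=500, random_state=seed)
    model.fit(x_train, y_train)
    predicted = model.predict(x_test)
    return model, accuracy_score(y_test, predicted), confusion_matrix(y_test, predicted)
```

Stacking `extract_features(path)` for every recording gives the `features` matrix, with the emotion labels as `labels`. The off-diagonal entries of the returned confusion matrix are one way to see which emotions the classifier mixes up.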

![[1] Speech Emotion Recognition using Neural Network and MLP Classifier (Jerry

Joy, Aparna Kannan, Shreya Ram, S. Rama)

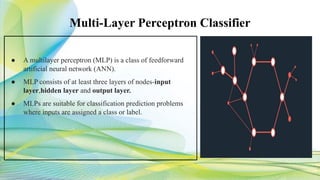

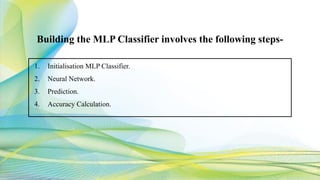

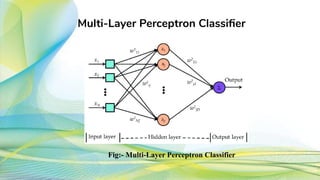

● MLP Classifier

● 5 features extracted- MFCC, Contrast, Mel Spectrograph Frequency, Chroma

and Tonnetz

● Accuracy 70.28%

[2]Voice Emotion Recognition using CNN and Decision Tree (Navya Damodar,

Vani H Y, Anusuya M A.)

● Decision Tree , CNN

● MFCCs extracted

● Accuracy 72% CNN, 63% Decision Tree

Literature Review](https://image.slidesharecdn.com/speechprocessing-201102052717/85/Speech-emotion-recognition-3-320.jpg)

![Dataset](https://image.slidesharecdn.com/speechprocessing-201102052717/85/Speech-emotion-recognition-12-320.jpg)

Dataset

Ryerson Audio-Visual Database of Emotional Speech and Song (RAVDESS) dataset.

● The RAVDESS dataset [3] has recordings of 24 actors (12 male, 12 female), numbered 01 to 24, speaking in a North American accent.

● All emotional expressions are uttered at two levels of intensity, normal and strong, except for 'neutral', which is produced only at normal intensity. The portion of RAVDESS that we use contains 60 trials for each of the 24 actors, 1440 files in total.
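The 60-trials-per-actor figure can be checked with a short sketch. It relies on the file-naming scheme from the RAVDESS documentation rather than anything stated in the slides: seven two-digit fields for modality, vocal channel, emotion, intensity, statement, repetition, and actor, with `03-01` marking audio-only speech and two statements and two repetitions per condition. The helper names `parse_ravdess_name` and `speech_file_names` are hypothetical.

```python
from itertools import product

# Emotion codes as documented for RAVDESS (third field of the file name).
EMOTIONS = {
    "01": "neutral", "02": "calm", "03": "happy", "04": "sad",
    "05": "angry", "06": "fearful", "07": "disgust", "08": "surprised",
}
INTENSITIES = {"01": "normal", "02": "strong"}


def parse_ravdess_name(file_name):
    """Split a name like '03-01-06-01-02-01-12.wav' into labelled fields."""
    stem = file_name.rsplit(".", 1)[0]
    _modality, _channel, emotion, intensity, _statement, _repetition, actor = stem.split("-")
    return {
        "emotion": EMOTIONS[emotion],
        "intensity": INTENSITIES[intensity],
        "actor": int(actor),
        # Odd-numbered actors are male, even-numbered are female.
        "sex": "male" if int(actor) % 2 else "female",
    }


def speech_file_names(actors=24):
    """Enumerate the expected audio-only speech file names."""
    names = []
    for actor, emotion, intensity, statement, repetition in product(
        range(1, actors + 1), EMOTIONS, INTENSITIES, ("01", "02"), ("01", "02")
    ):
        if emotion == "01" and intensity == "02":
            continue  # 'neutral' has no strong-intensity recordings
        names.append(f"03-01-{emotion}-{intensity}-{statement}-{repetition}-{actor:02d}.wav")
    return names
```

Per actor this gives 7 emotions × 2 intensities × 2 statements × 2 repetitions = 56 files, plus 4 neutral files, for 60 in total; across 24 actors `len(speech_file_names())` is 1440, matching the count above.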

![[1] Training process workflow](https://image.slidesharecdn.com/speechprocessing-201102052717/85/Speech-emotion-recognition-13-320.jpg)

![[1] Testing process workflow](https://image.slidesharecdn.com/speechprocessing-201102052717/85/Speech-emotion-recognition-14-320.jpg)

![References](https://image.slidesharecdn.com/speechprocessing-201102052717/85/Speech-emotion-recognition-28-320.jpg)

References

[1] Jerry Joy, Aparna Kannan, Shreya Ram, S. Rama. Speech Emotion Recognition using Neural Network and MLP Classifier. IJESC, April 2020.

[2] Navya Damodar, Vani H Y, Anusuya M A. Voice Emotion Recognition using CNN and Decision Tree. International Journal of Innovative Technology and Exploring Engineering (IJITEE), October 2019.

[3] RAVDESS Dataset: https://zenodo.org/record/1188976#.X5r20ogzZPZ

[4] MLP/CNN/RNN Classification: https://machinelearningmastery.com/when-to-use-mlp-cnn-and-rnn-neural-networks/

[5] MFCC: https://medium.com/prathena/the-dummys-guide-to-mfcc-aceab2450fd