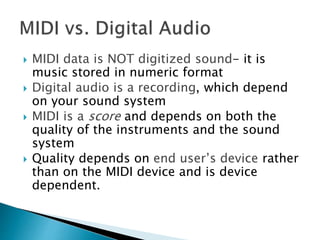

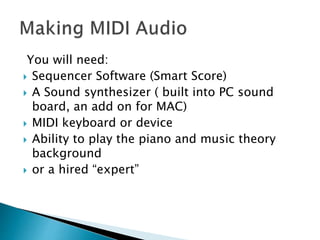

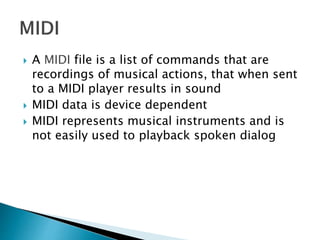

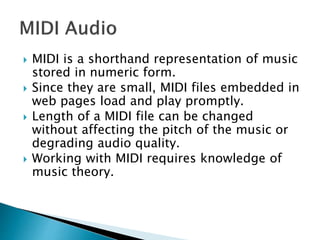

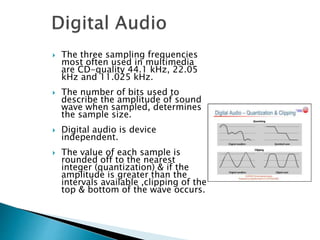

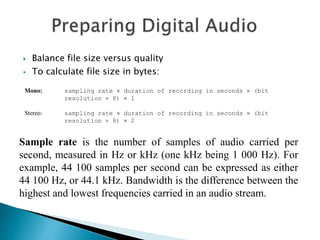

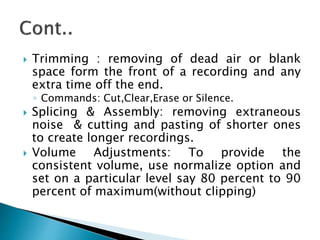

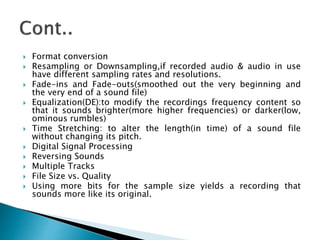

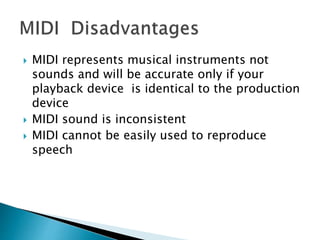

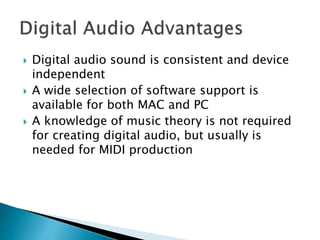

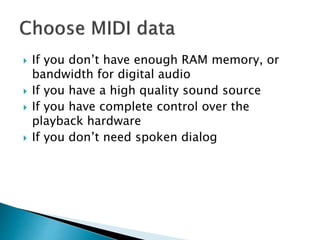

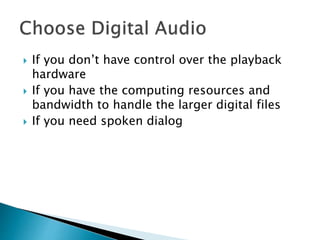

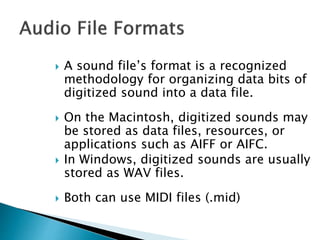

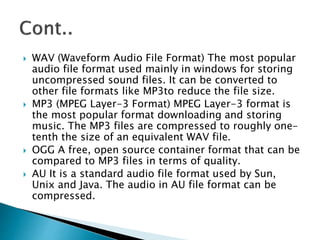

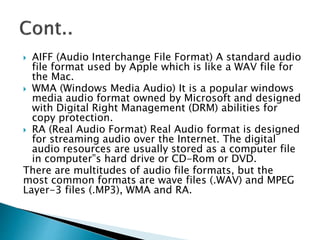

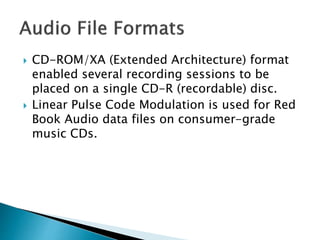

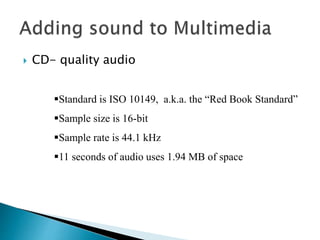

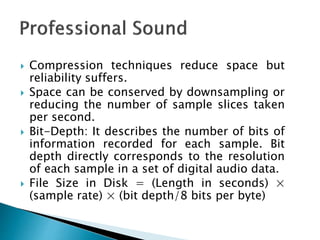

The document discusses digital audio and MIDI audio technologies. Digital audio is produced by sampling an analog sound wave at regular intervals and storing the samples as numbers, while MIDI represents music as numeric codes (note and controller messages) that are played back on a sound-generating device. The document compares the two formats on file size, sound quality, and device dependence. It also provides guidance on editing sounds, incorporating them into multimedia projects, and optimizing them.
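
The file-size comparison can be made concrete with a little arithmetic. The sketch below is illustrative only and is not taken from the document. It assumes uncompressed PCM audio at CD-quality settings (44.1 kHz, 16-bit, stereo) and, for MIDI, a hypothetical passage of 600 notes in which each note costs one 3-byte Note On and one 3-byte Note Off message. Timing data and file headers are ignored, so the MIDI figure is a rough lower bound. The function names are made up for this example.

```python
def pcm_size_bytes(sample_rate_hz, bit_depth, channels, seconds):
    """Size of uncompressed PCM sample data, excluding any file header."""
    bytes_per_sample = bit_depth // 8
    return sample_rate_hz * bytes_per_sample * channels * seconds


def midi_note_bytes(note_count):
    """Rough size of note_count notes as raw MIDI channel messages.

    Assumes a 3-byte Note On plus a 3-byte Note Off per note;
    delta times and file headers are deliberately left out.
    """
    return note_count * 2 * 3


# One minute of CD-quality stereo audio (assumed settings).
audio_bytes = pcm_size_bytes(44_100, 16, 2, 60)

# One minute of music containing a hypothetical 600 notes.
midi_bytes = midi_note_bytes(600)

print(f"Digital audio: {audio_bytes:,} bytes")
print(f"MIDI (approx.): {midi_bytes:,} bytes")
```

Under these assumptions the sampled audio is thousands of times larger than the MIDI data, which is the trade-off the document weighs against MIDI's dependence on the playback device.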