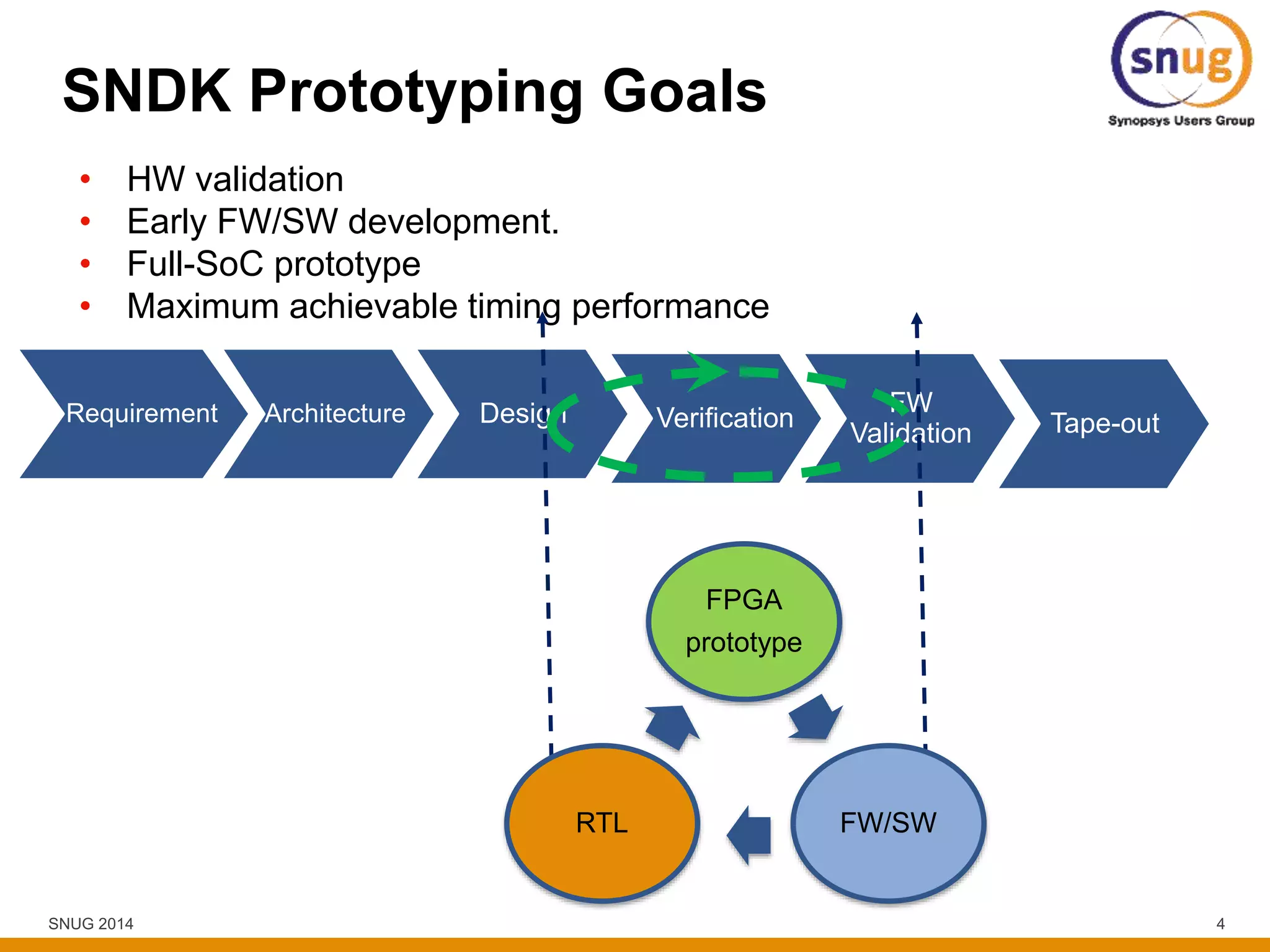

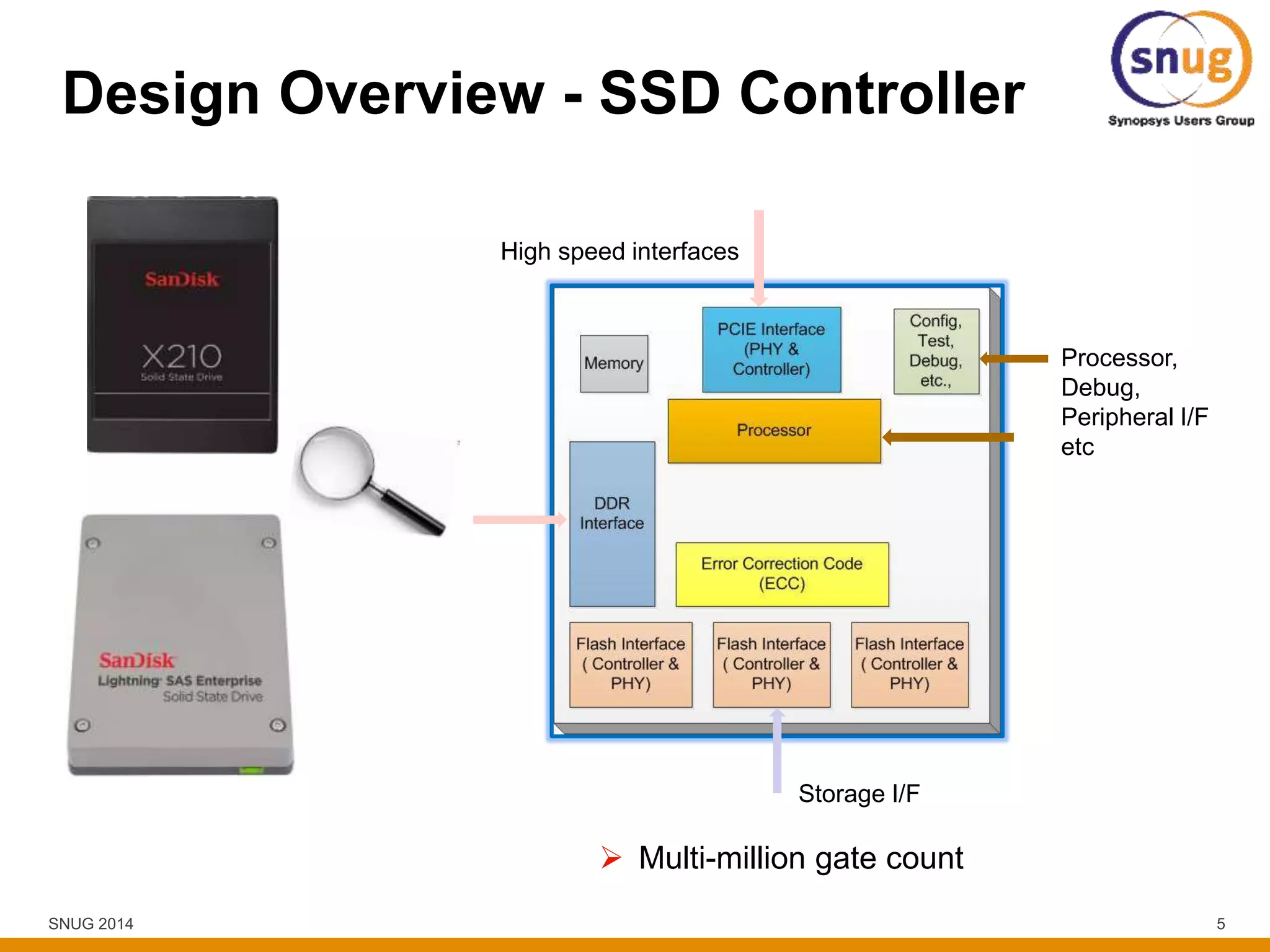

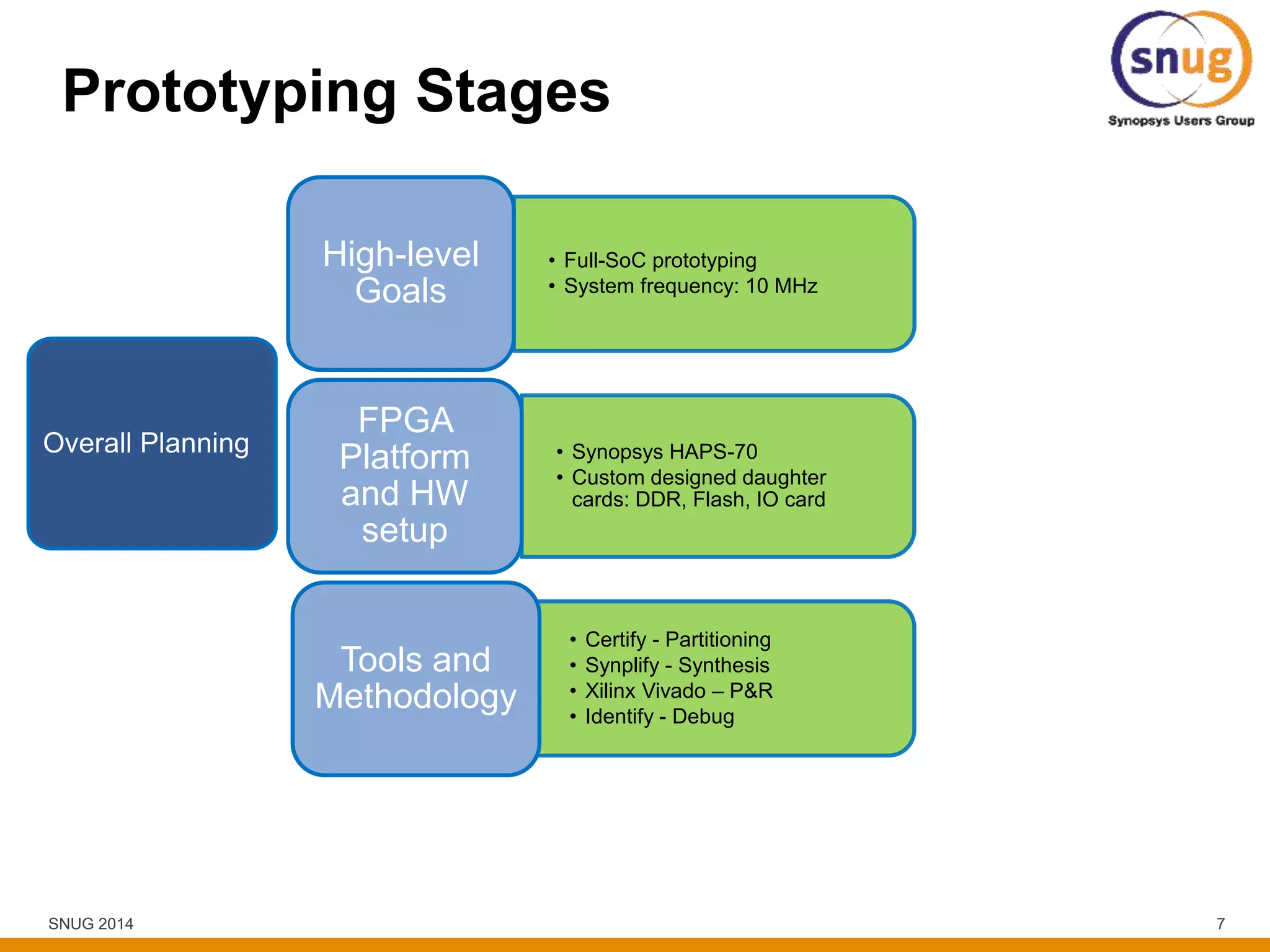

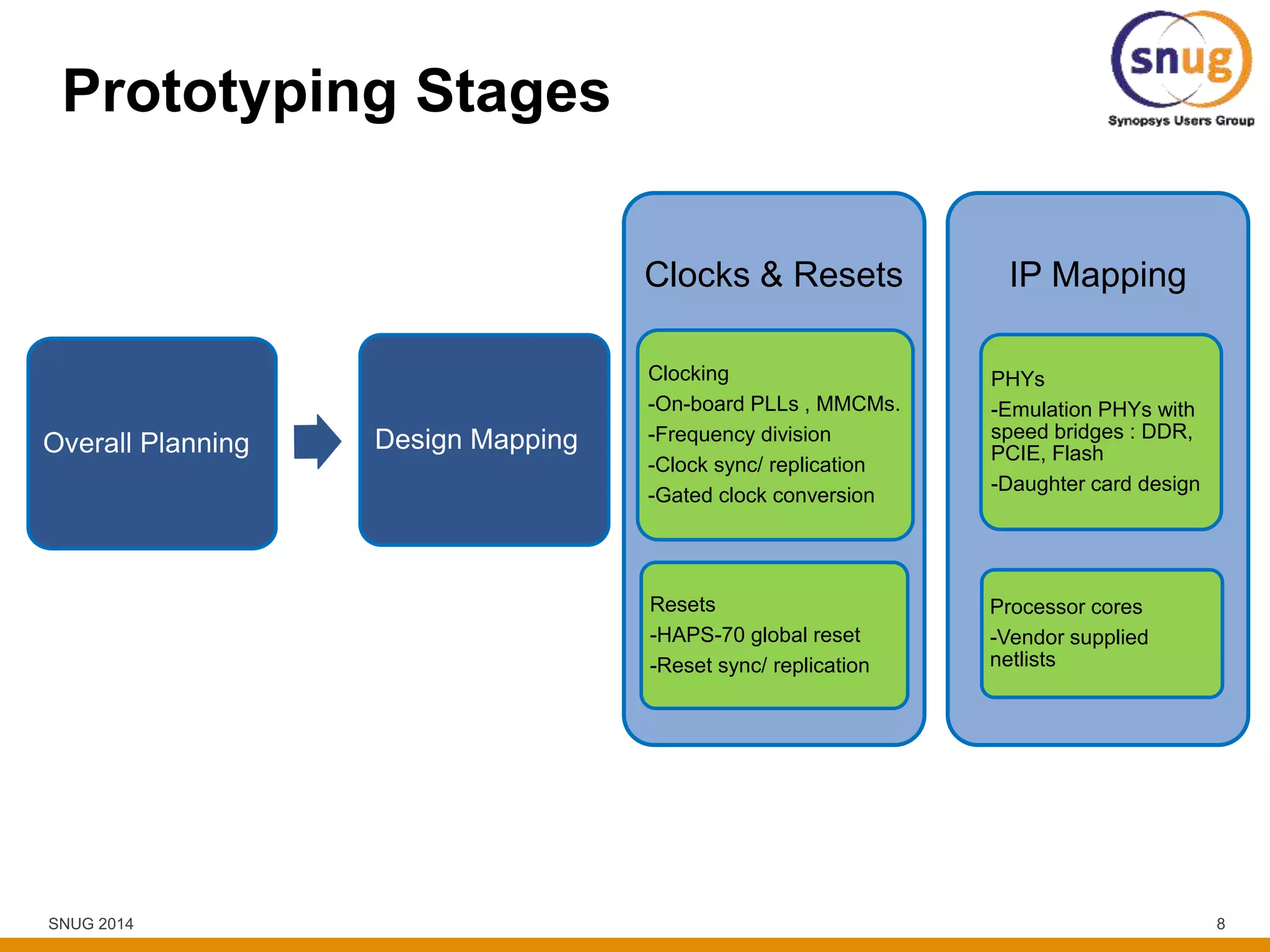

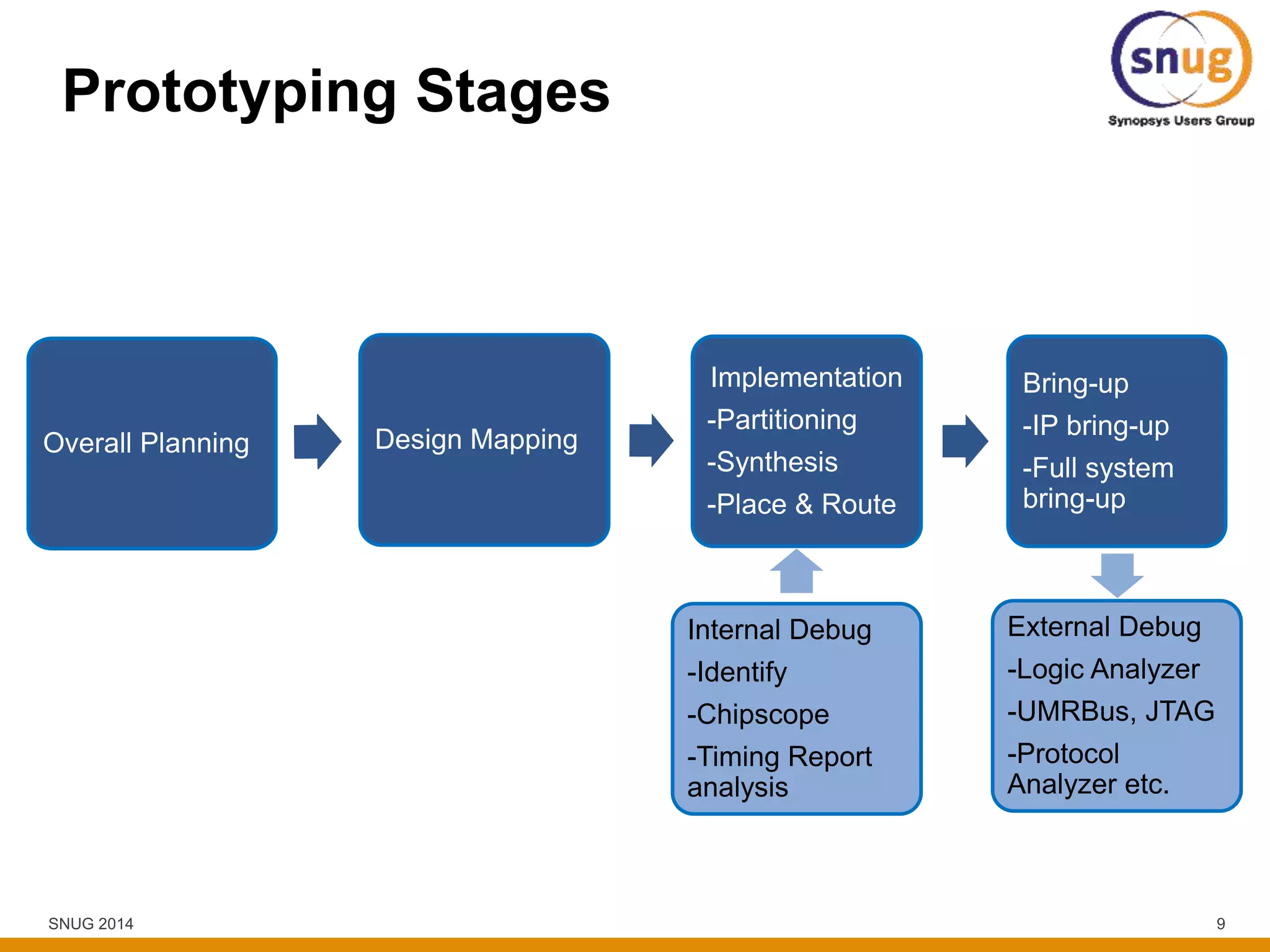

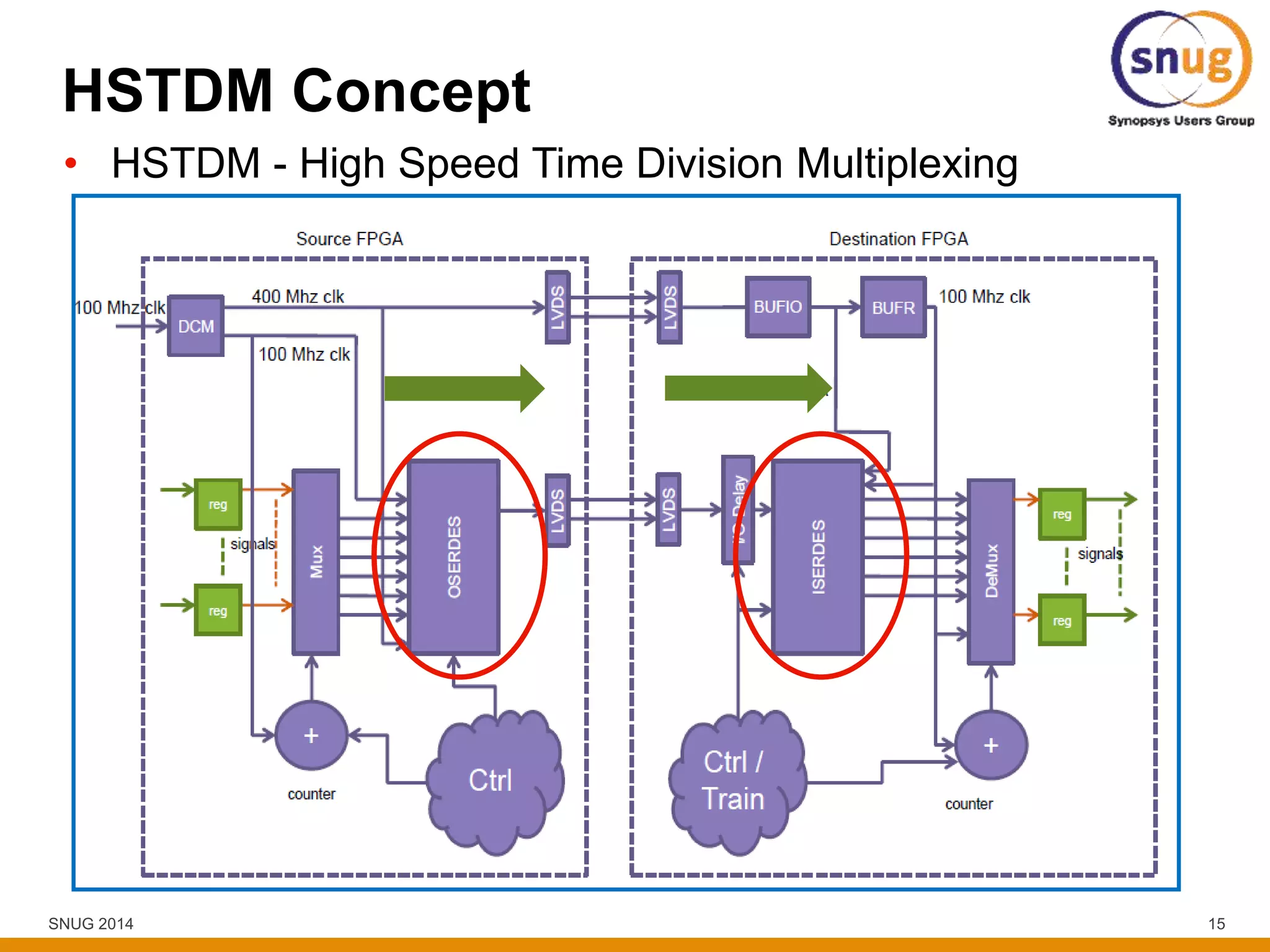

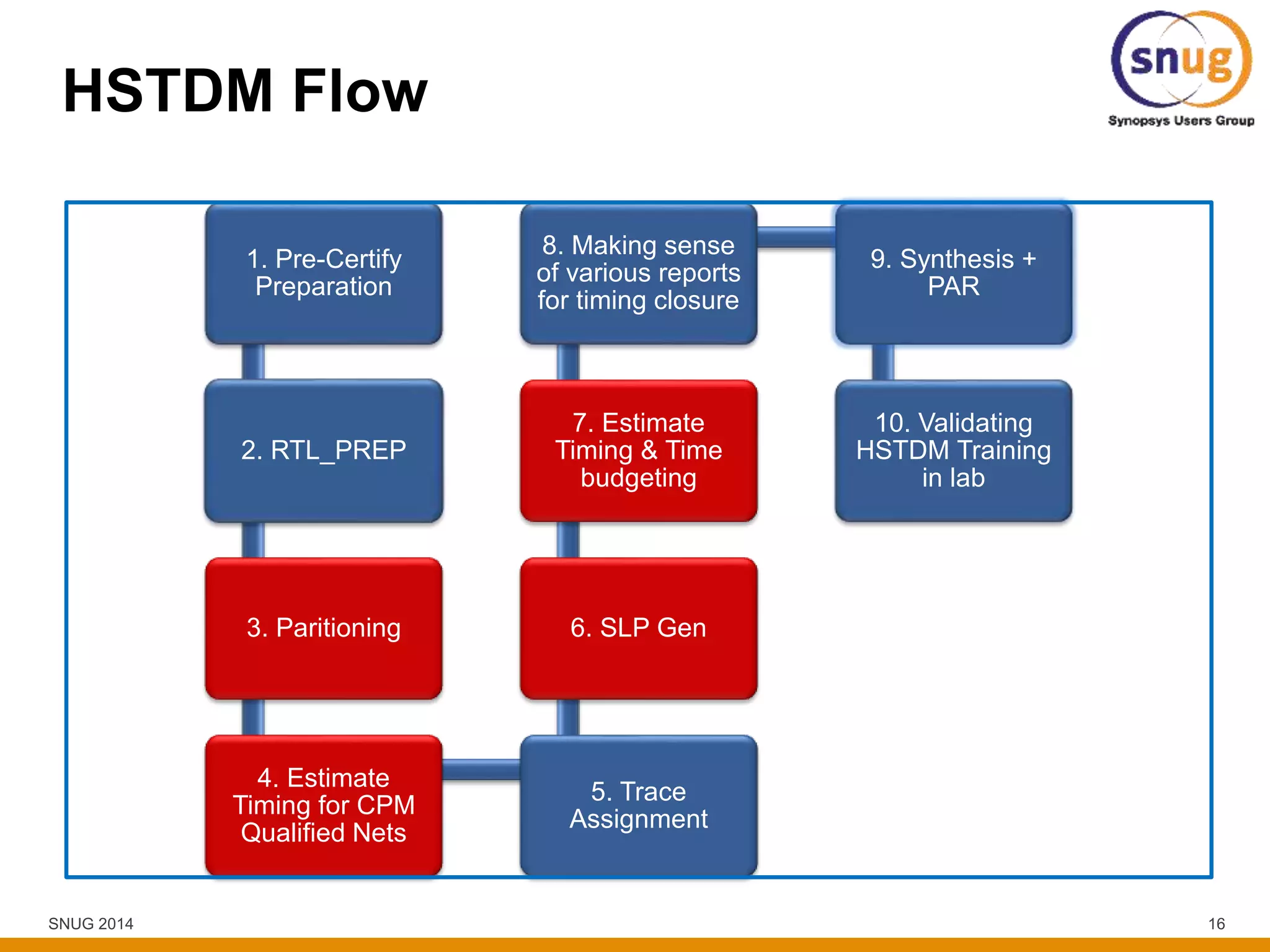

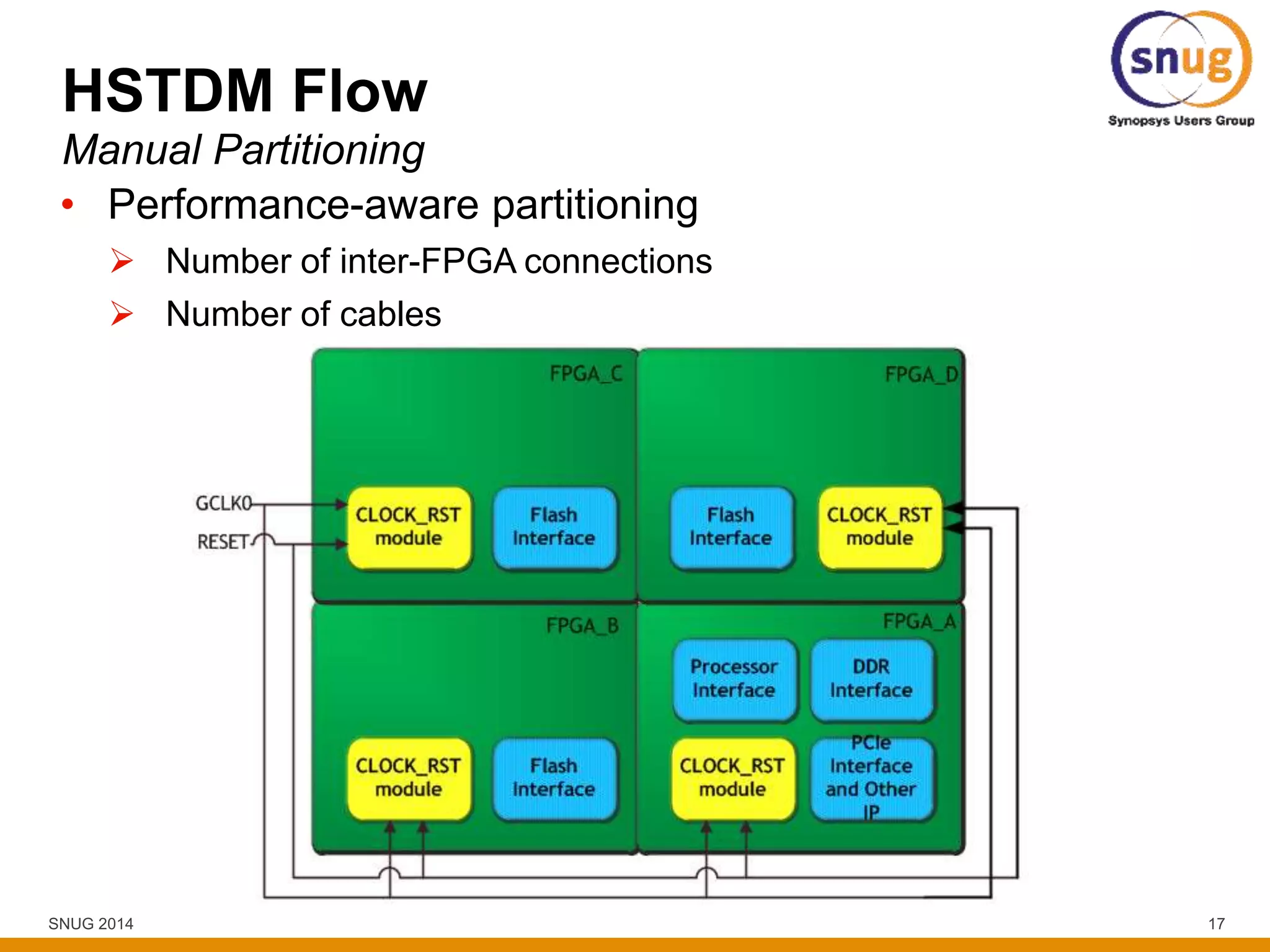

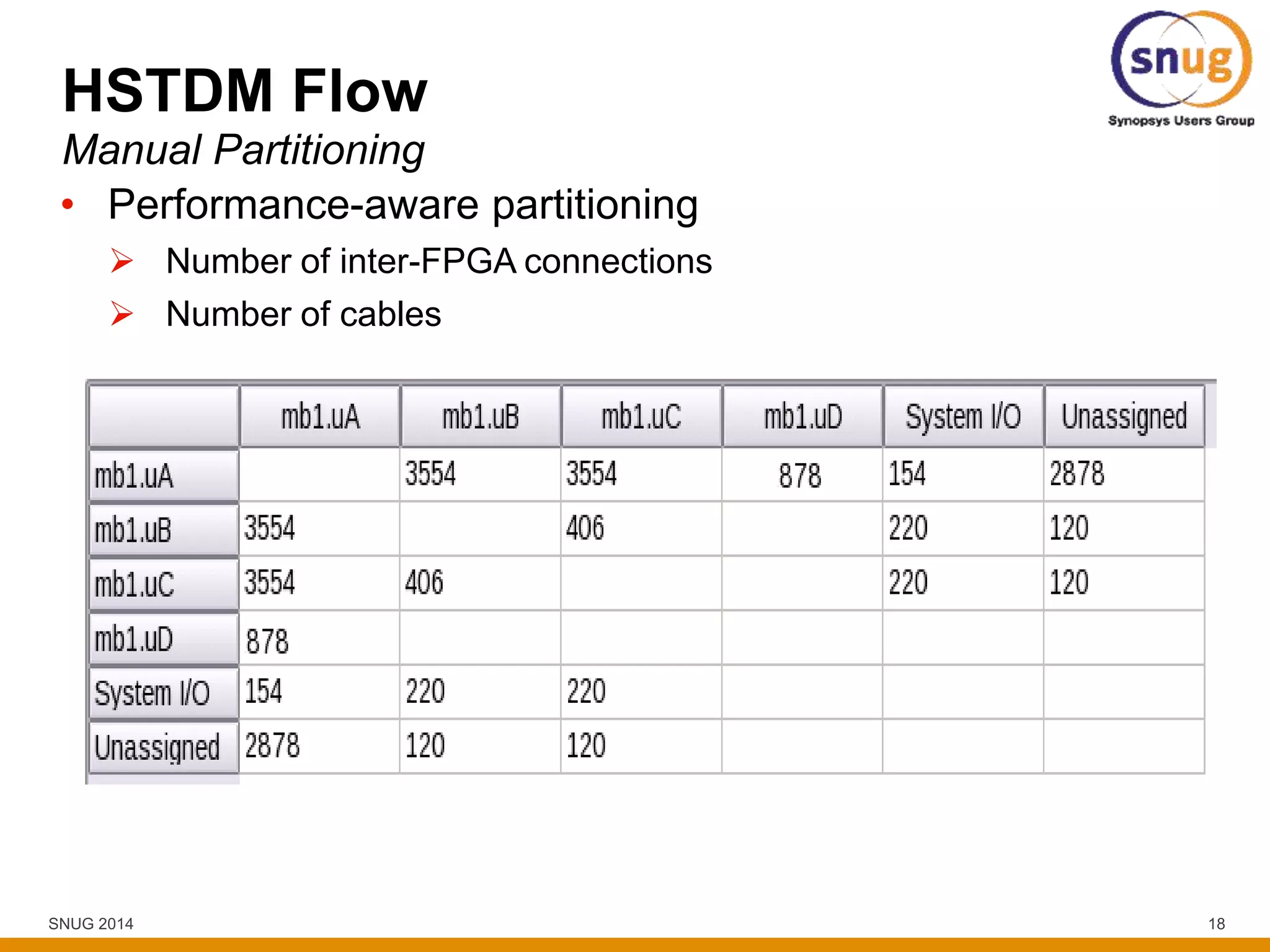

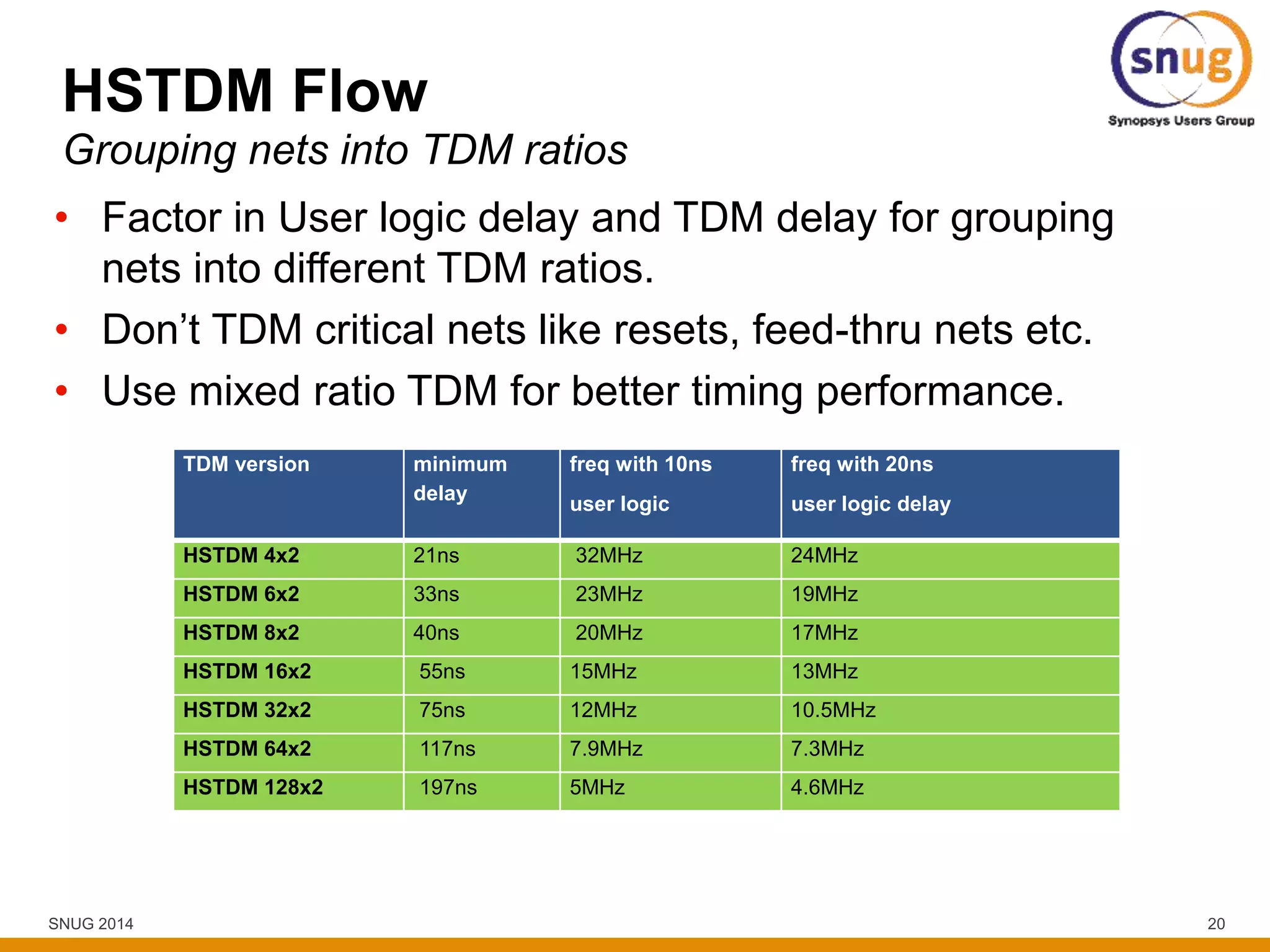

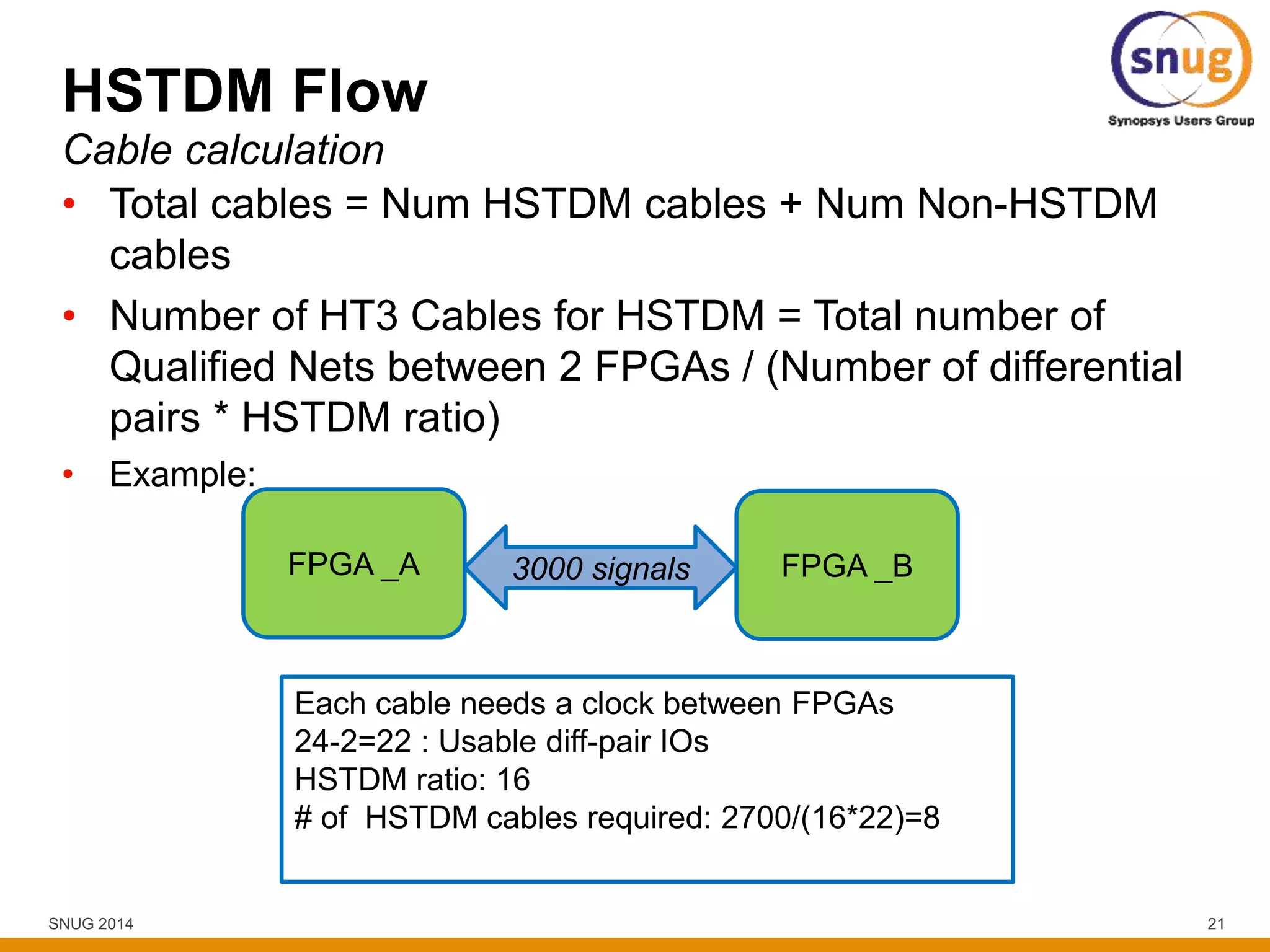

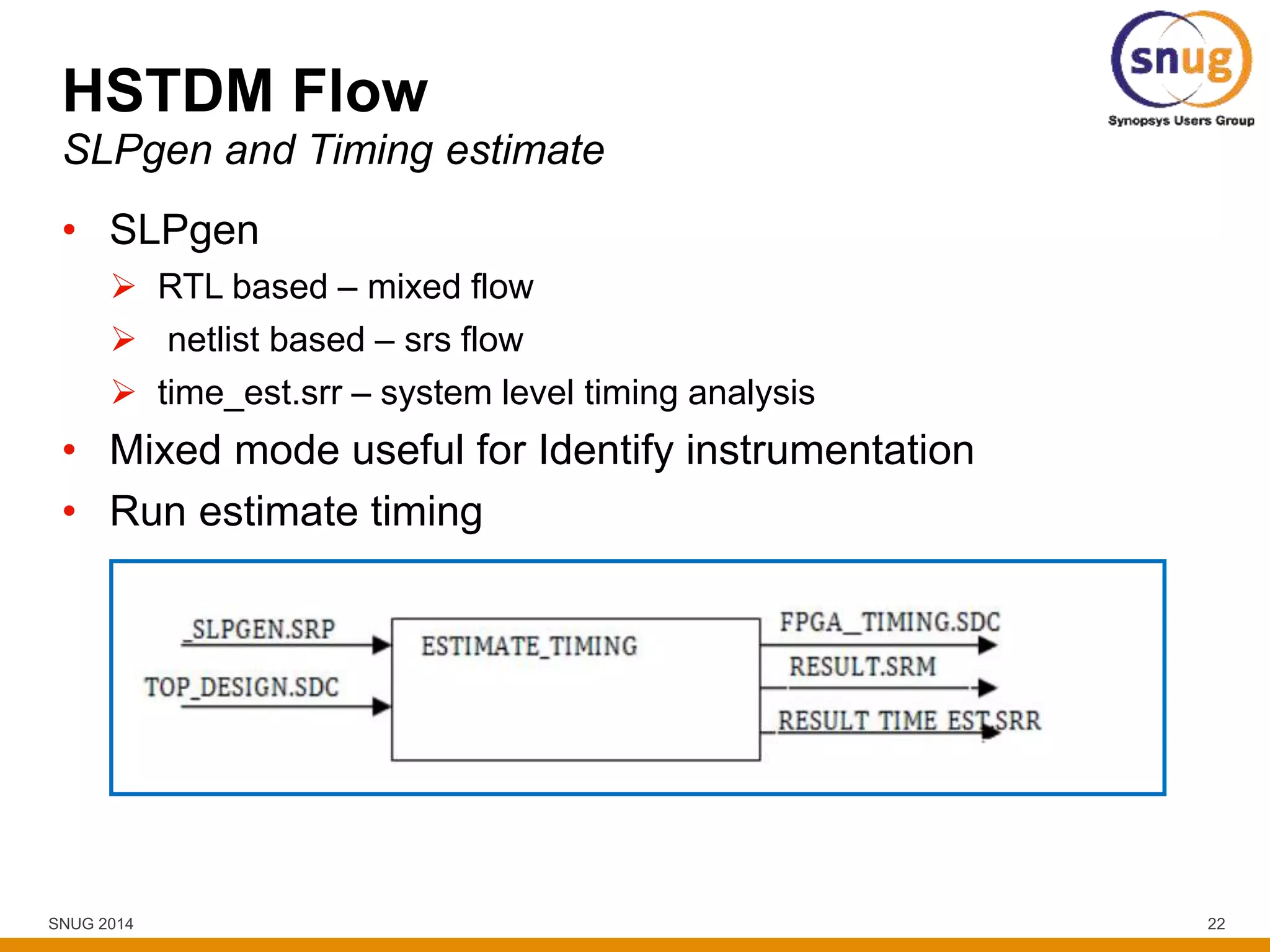

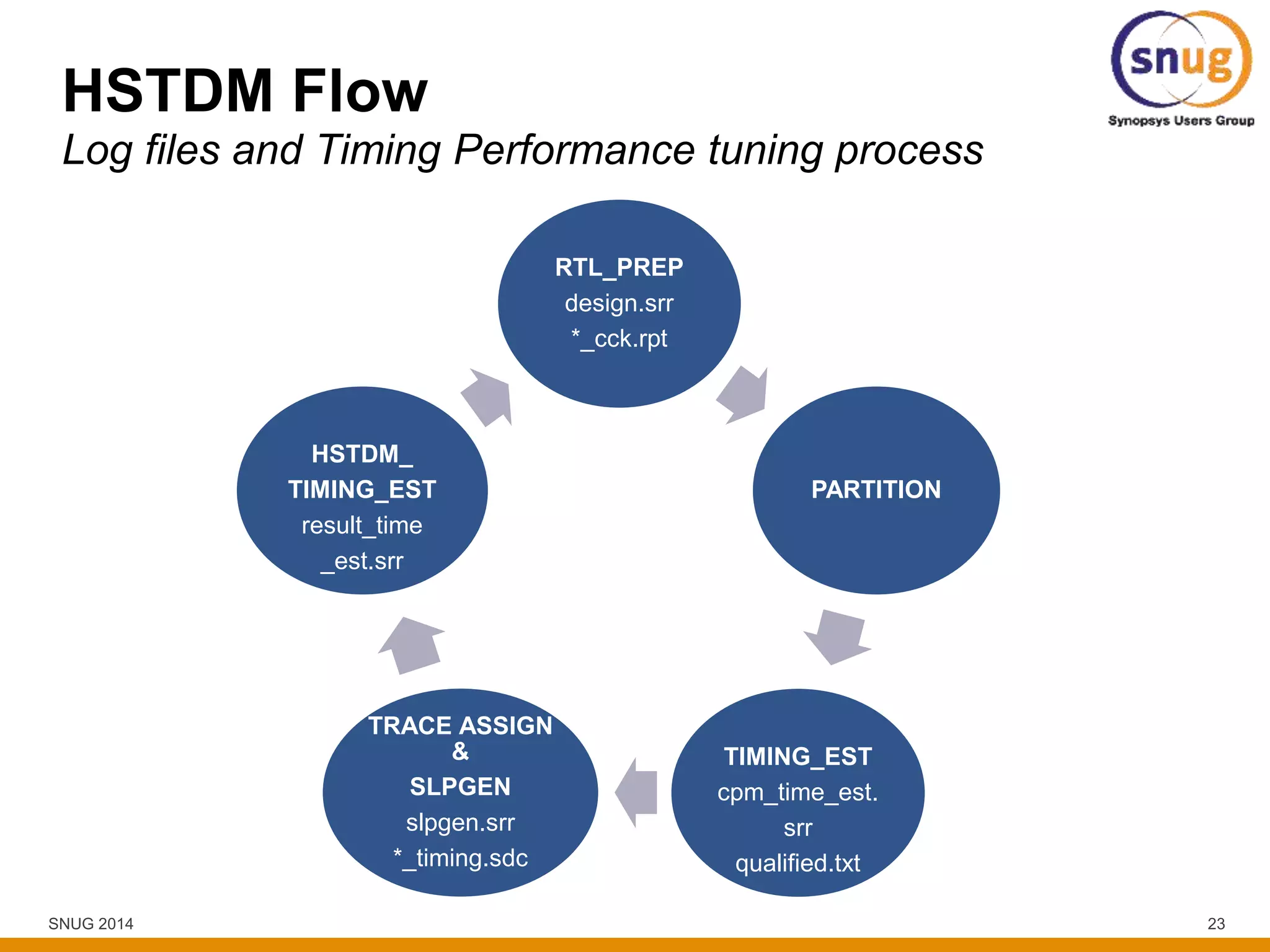

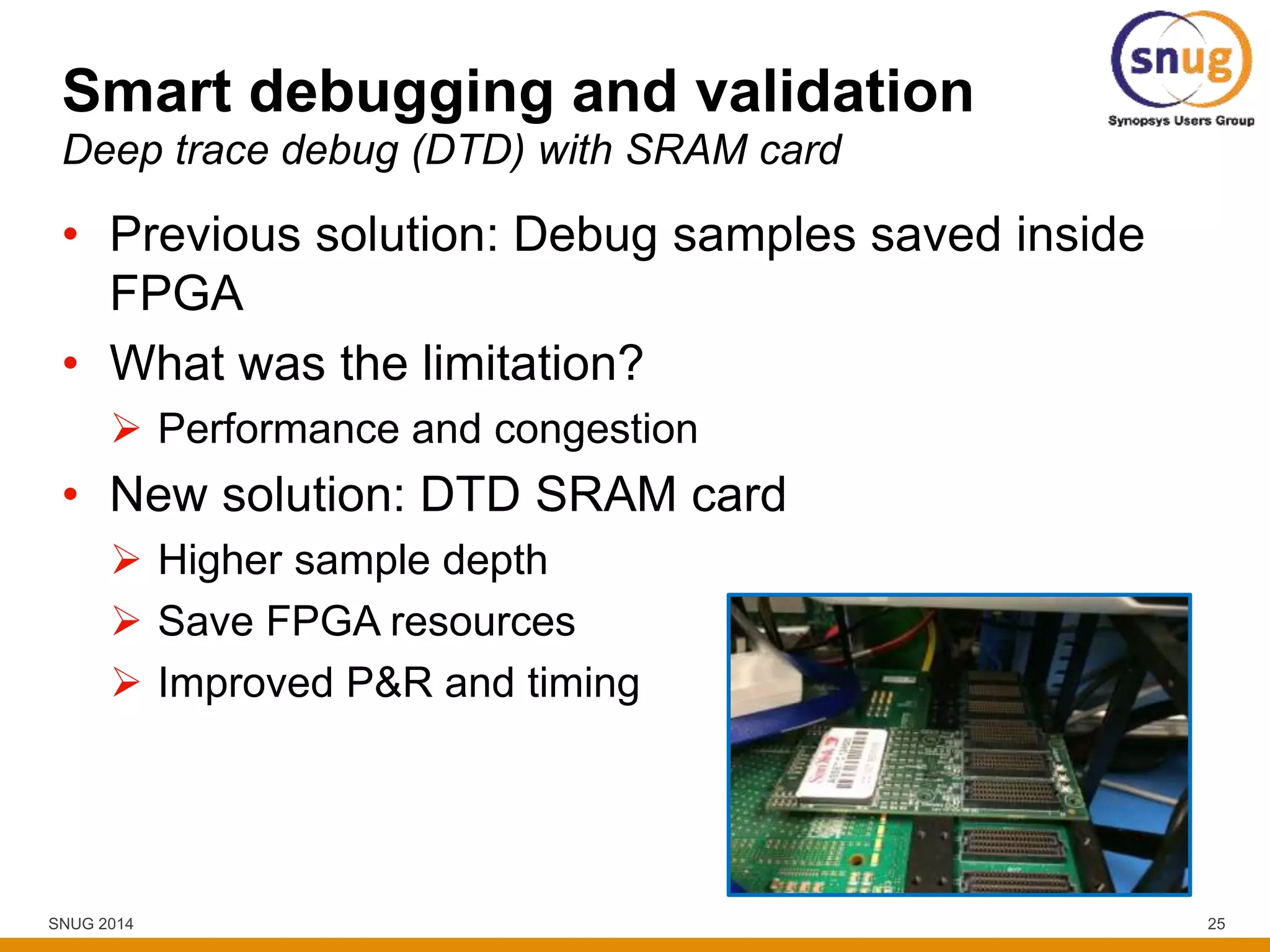

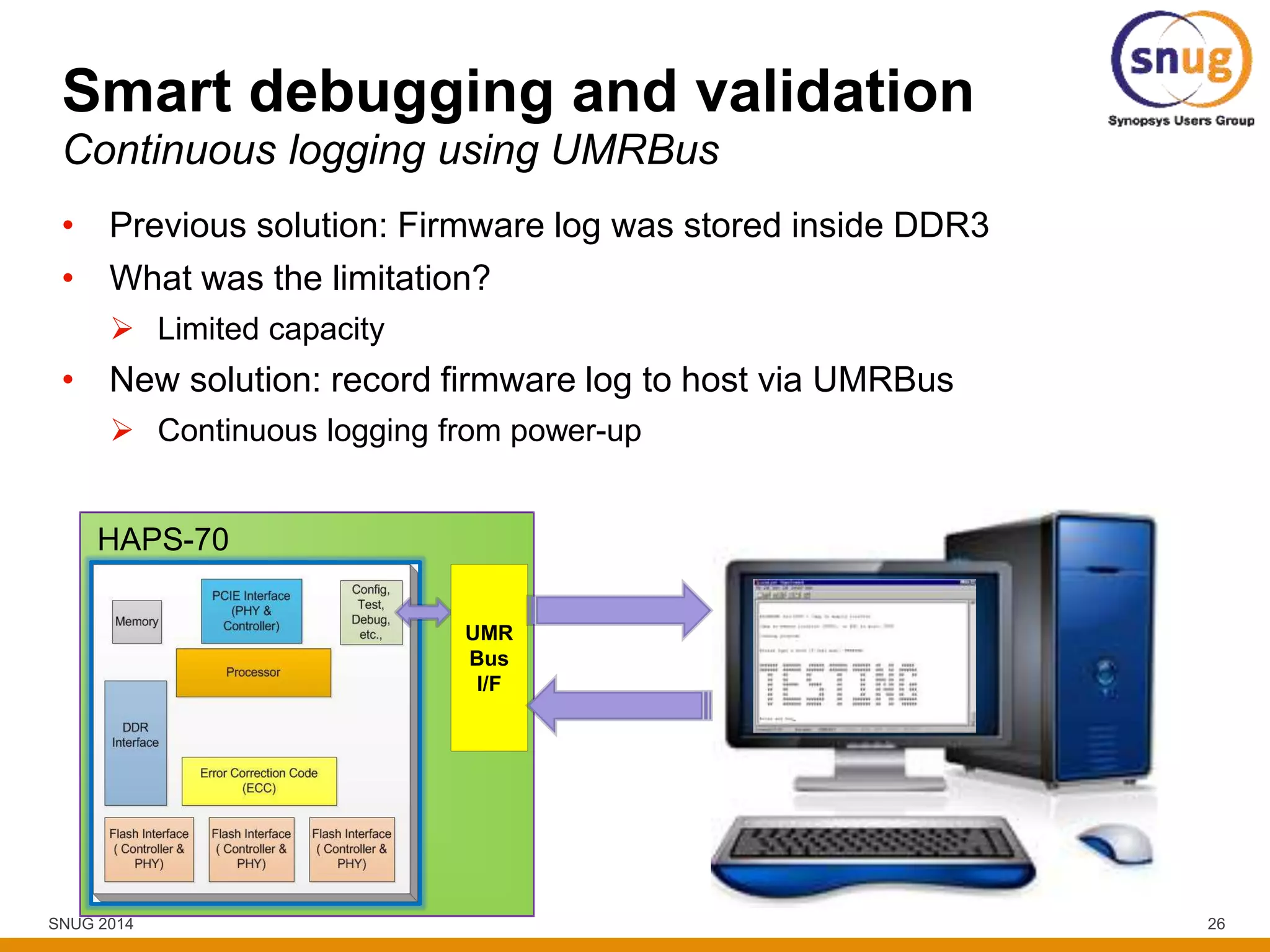

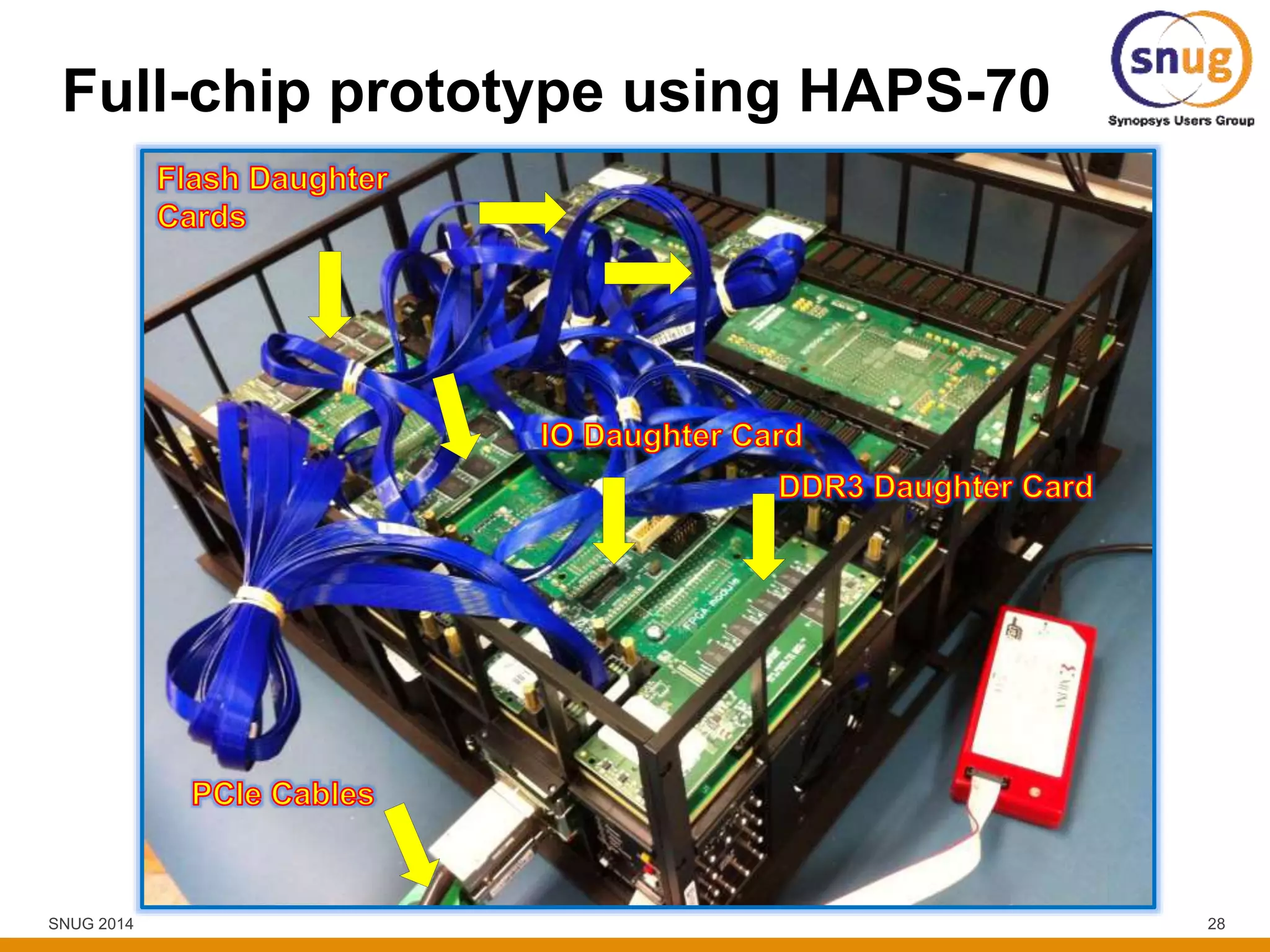

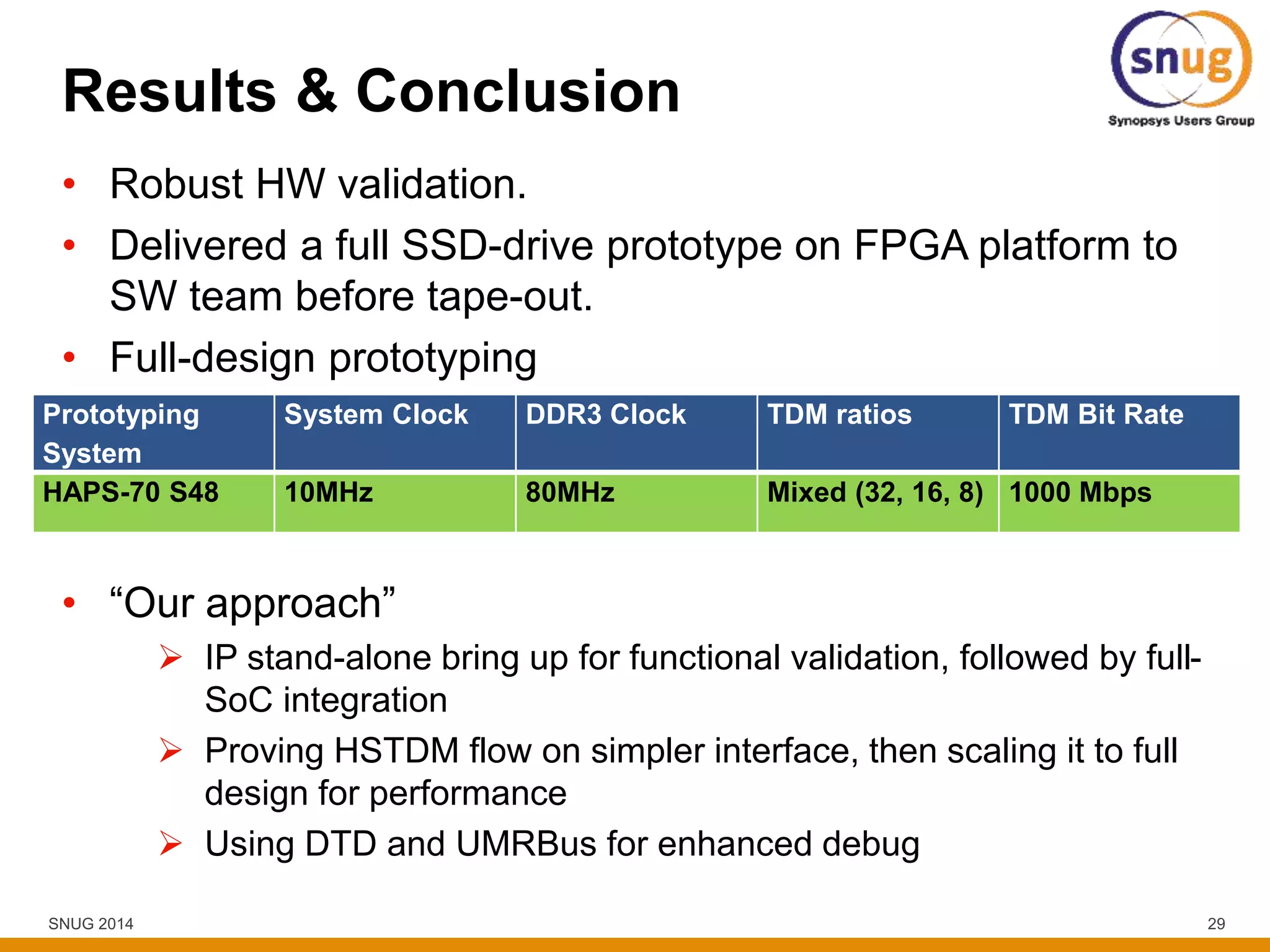

This document summarizes a presentation on achieving maximum system performance for a multi-FPGA design on the HAPS-70 FPGA prototyping system. The presentation covers four topics: mapping the design to the HAPS-70, using HSTDM (high-speed time-domain multiplexing) technology to maximize performance, applying smart debugging techniques, and reaching a prototype system clock of 10 MHz. The key techniques are performance-aware partitioning, timing estimation on the nets that qualify for TDM, calculation of optimal TDM ratios, and deep trace debugging with continuous logging over UMRBus. With this approach, the team delivered a full SSD controller prototype to the software teams ahead of tape-out.
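
The TDM ratio calculation mentioned above can be illustrated with a minimal sketch. It is not the presentation's method or any Synopsys tool API; it only shows the basic arithmetic that a TDM ratio must be at least the number of inter-FPGA signals divided by the number of physical traces available to carry them. The function names, the example signal and trace counts, and the list of supported ratios are all hypothetical.

```python
def min_tdm_ratio(num_signals: int, num_traces: int) -> int:
    """Smallest multiplexing ratio that fits num_signals onto num_traces.

    Both arguments are illustrative inputs, e.g. the number of nets
    crossing a partition boundary and the physical traces between
    two FPGAs.
    """
    if num_signals < 0 or num_traces <= 0:
        raise ValueError("need num_signals >= 0 and num_traces > 0")
    # Integer ceiling division; a ratio below 1 is meaningless.
    return max(1, -(-num_signals // num_traces))


def pick_supported_ratio(required: int, supported: list[int]) -> int:
    """Round the required ratio up to the nearest ratio the hardware offers.

    `supported` is a hypothetical list; the real set of ratios depends
    on the TDM implementation in use.
    """
    candidates = [r for r in sorted(supported) if r >= required]
    if not candidates:
        raise ValueError("no supported ratio is large enough")
    return candidates[0]


if __name__ == "__main__":
    required = min_tdm_ratio(num_signals=1001, num_traces=100)
    chosen = pick_supported_ratio(required, supported=[8, 16, 32, 64])
    print(required, chosen)
```

A lower ratio generally allows a faster system clock, which is why the ratio is chosen per connection rather than set uniformly: only the connections with the most crossing signals need the high ratios.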