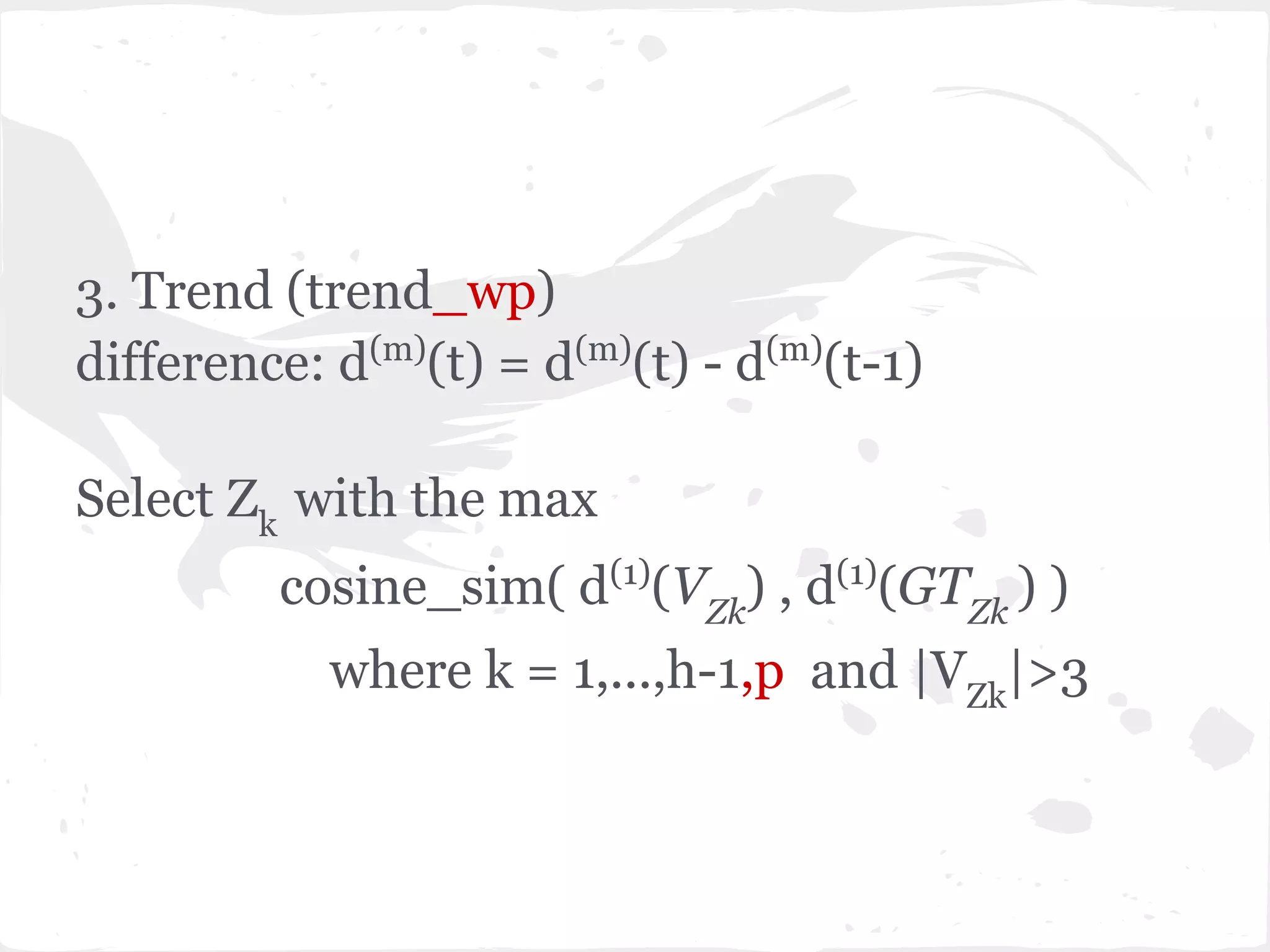

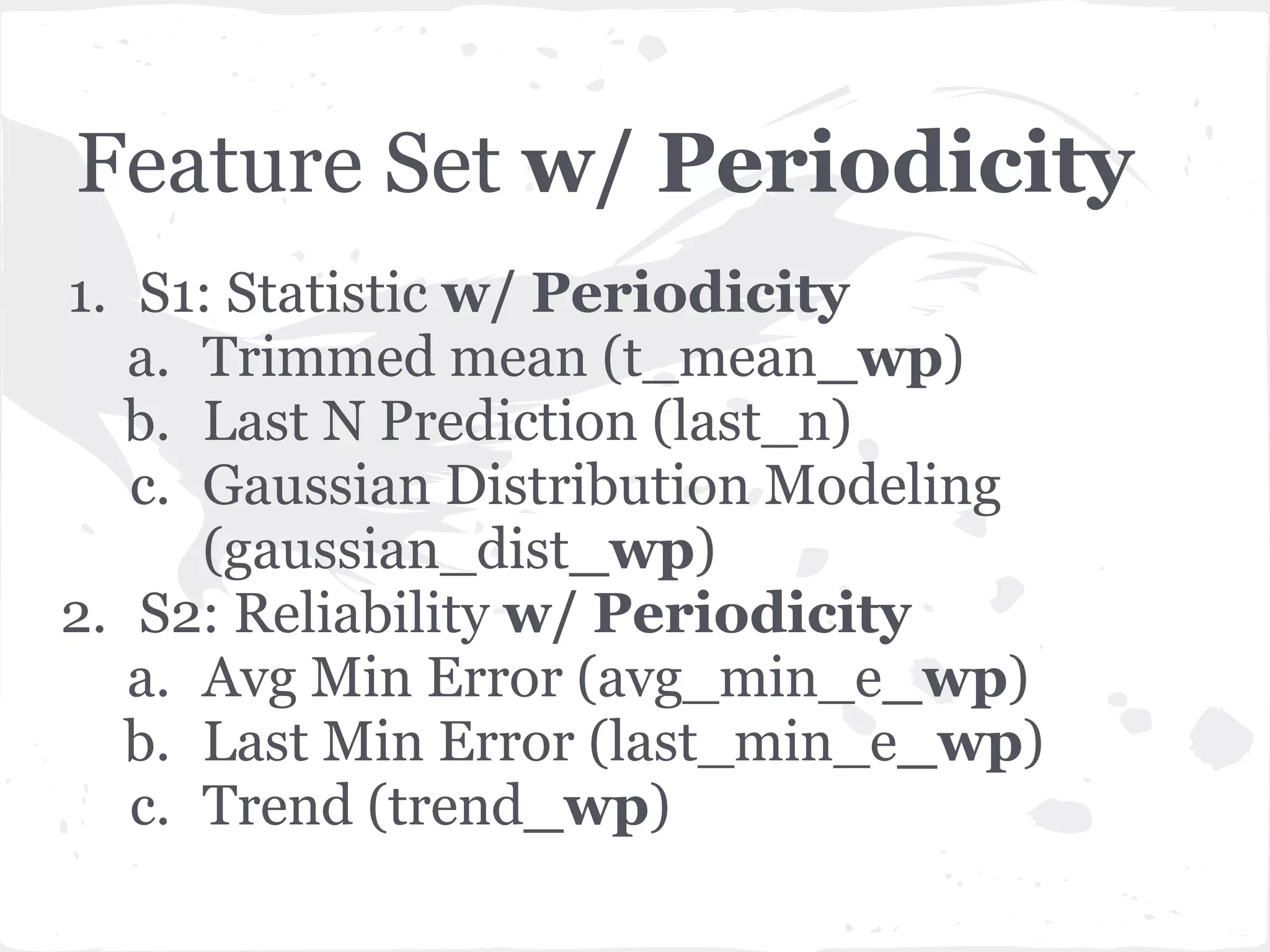

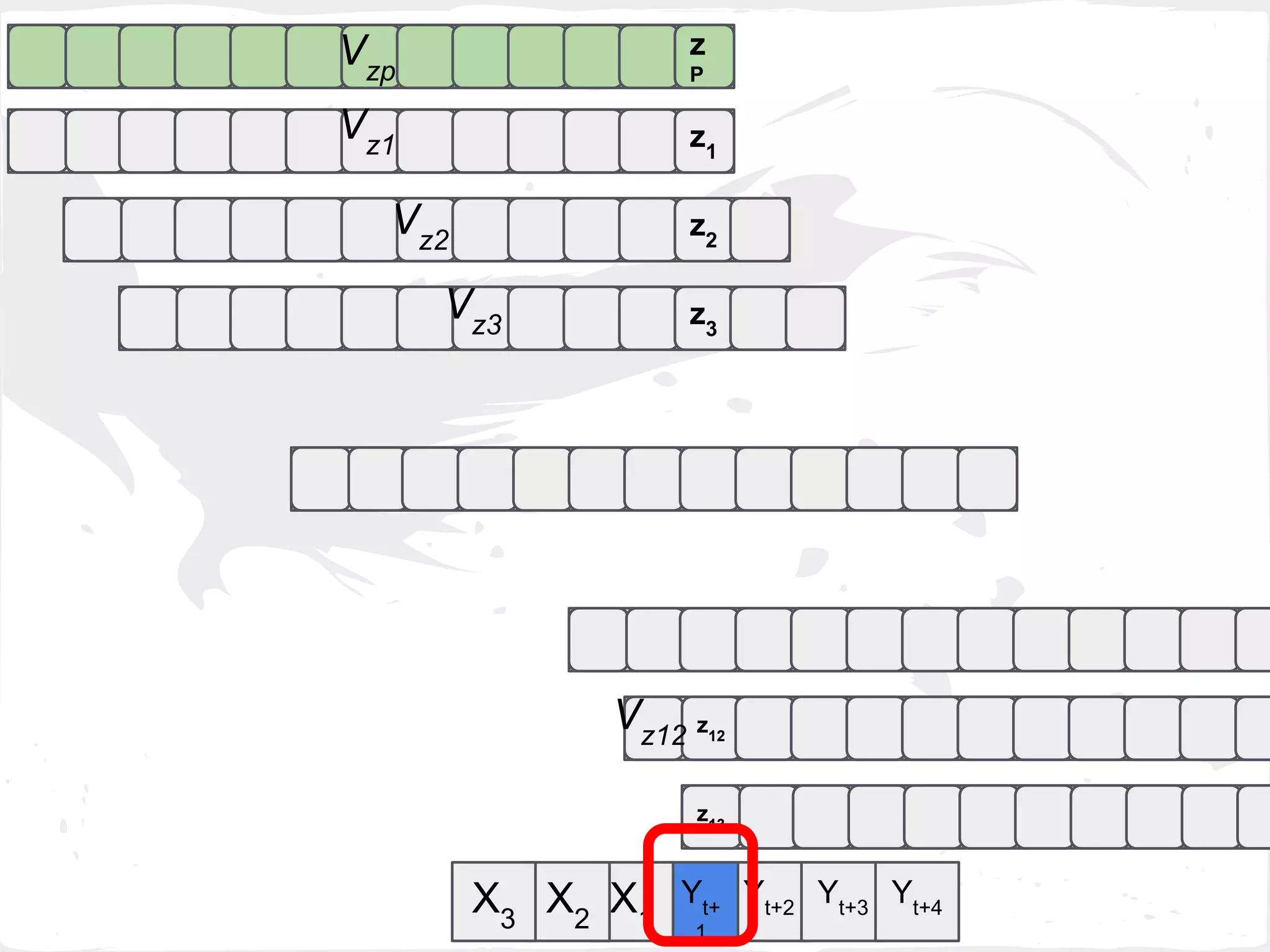

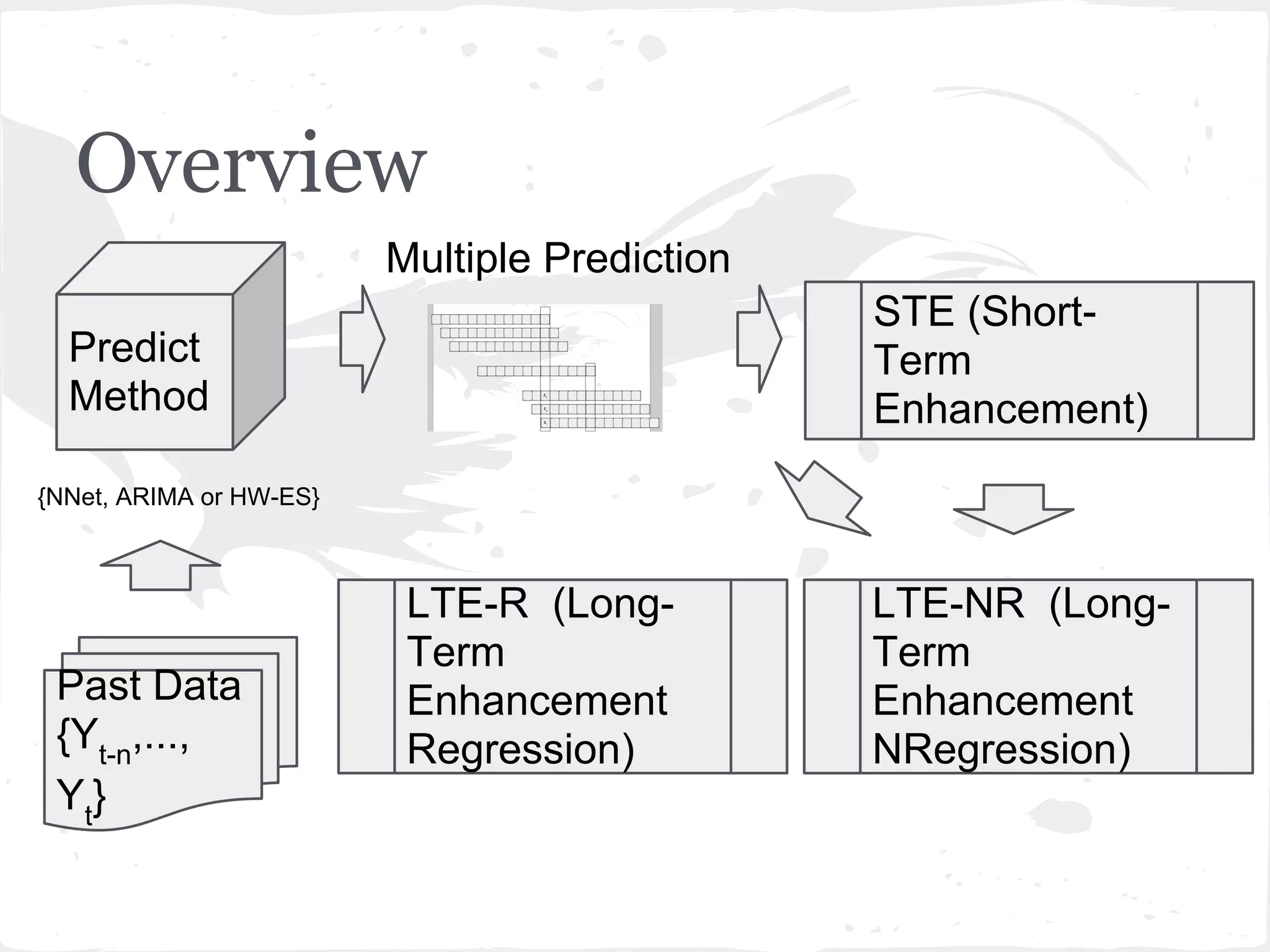

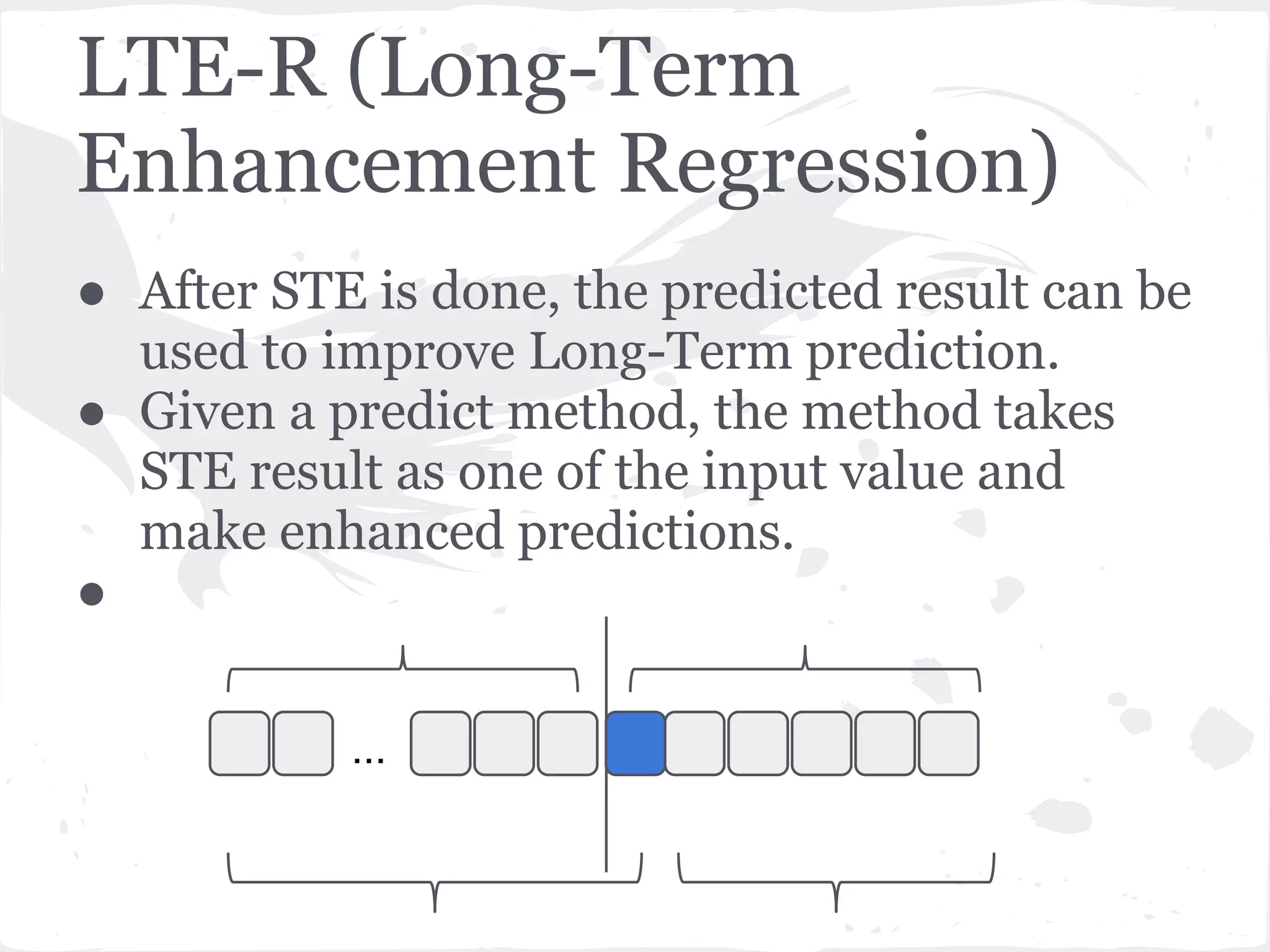

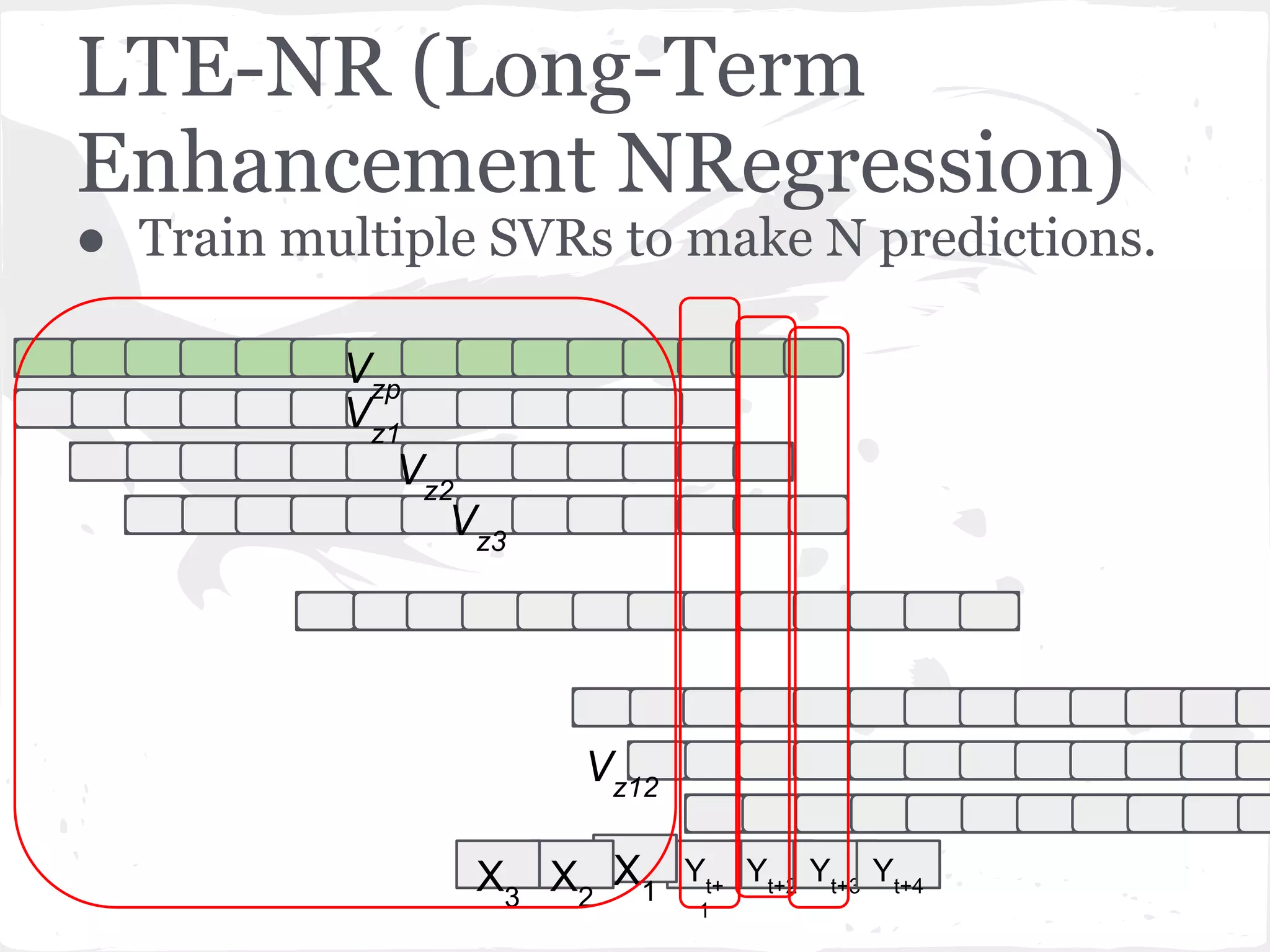

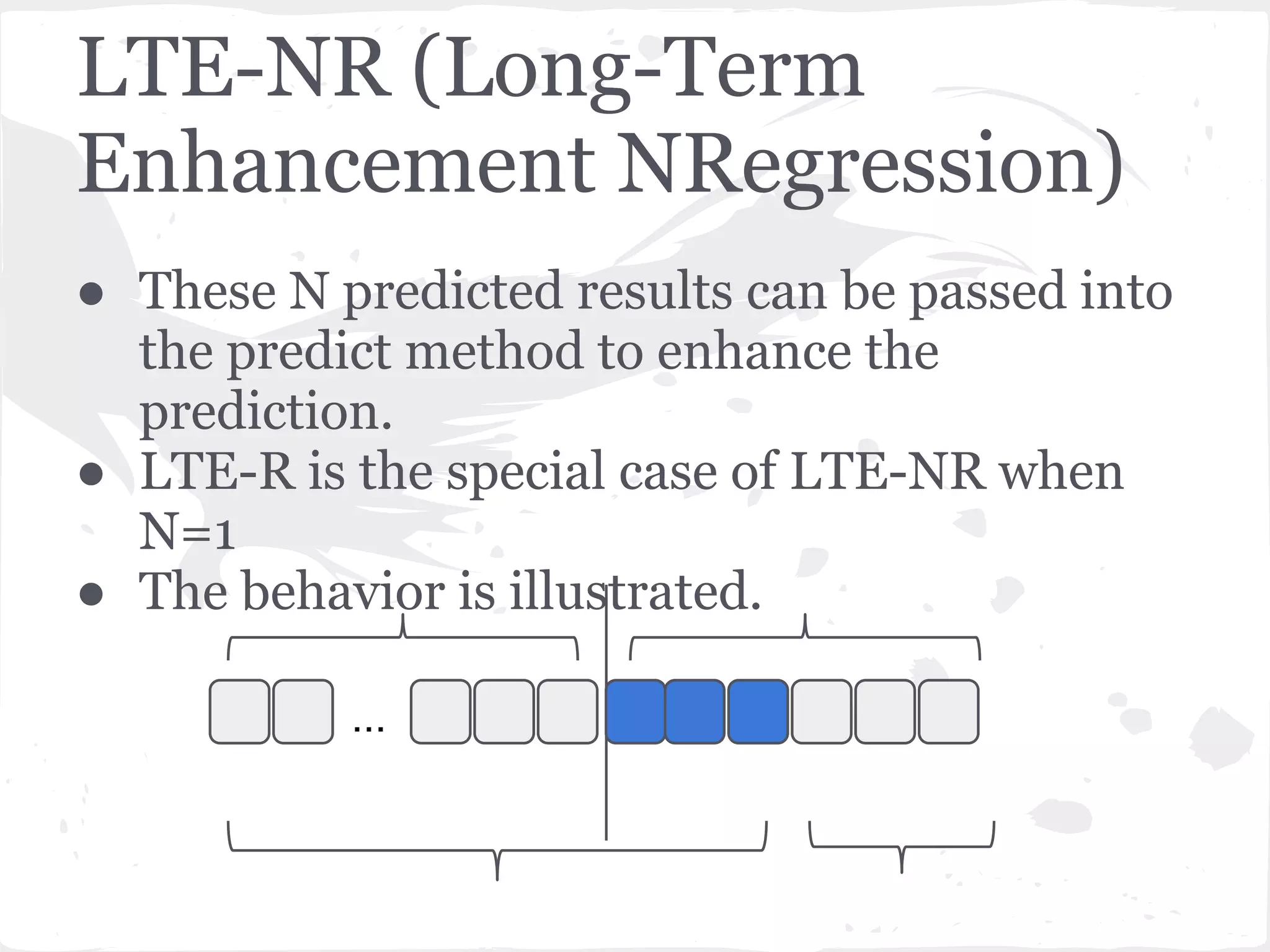

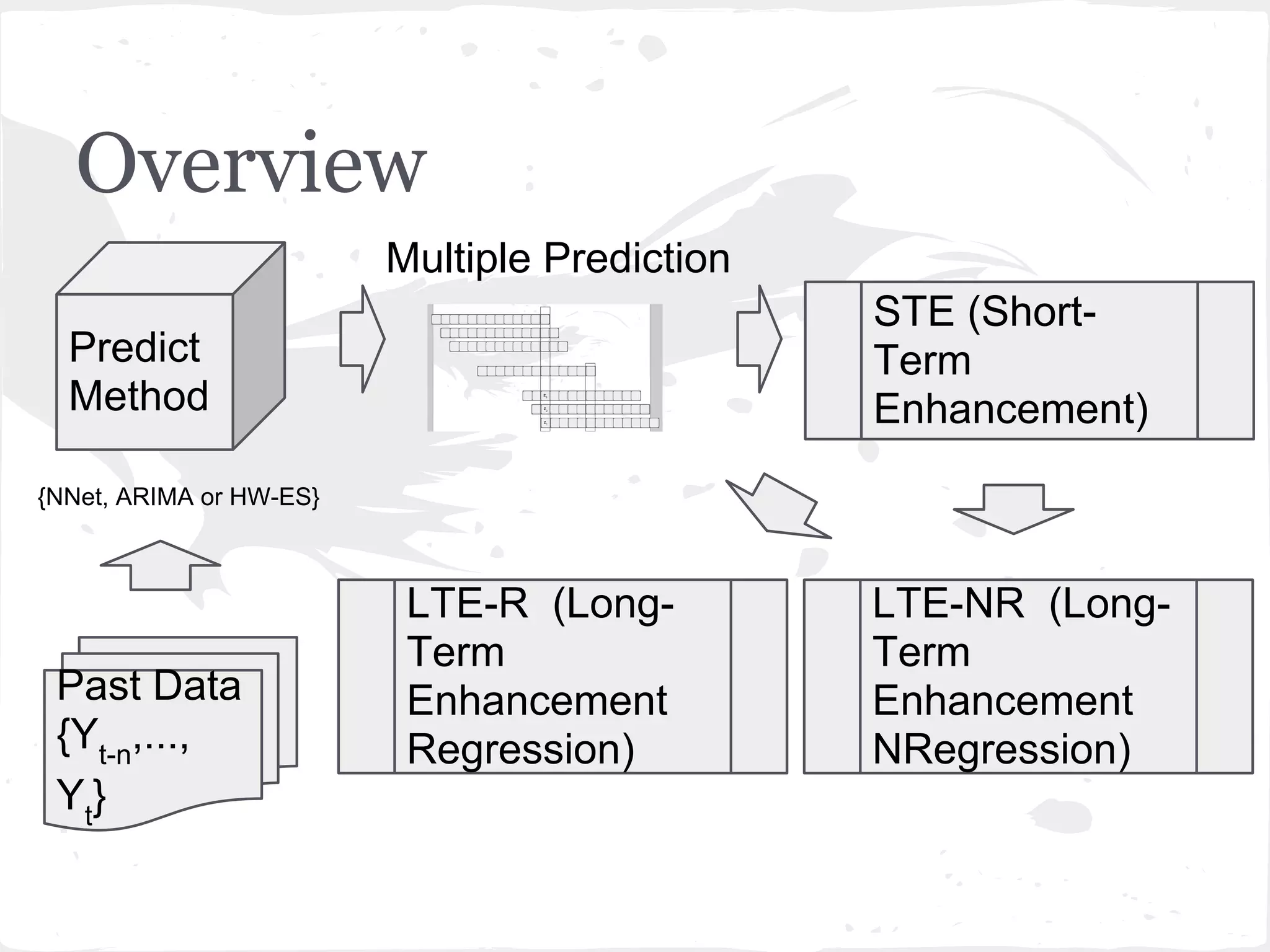

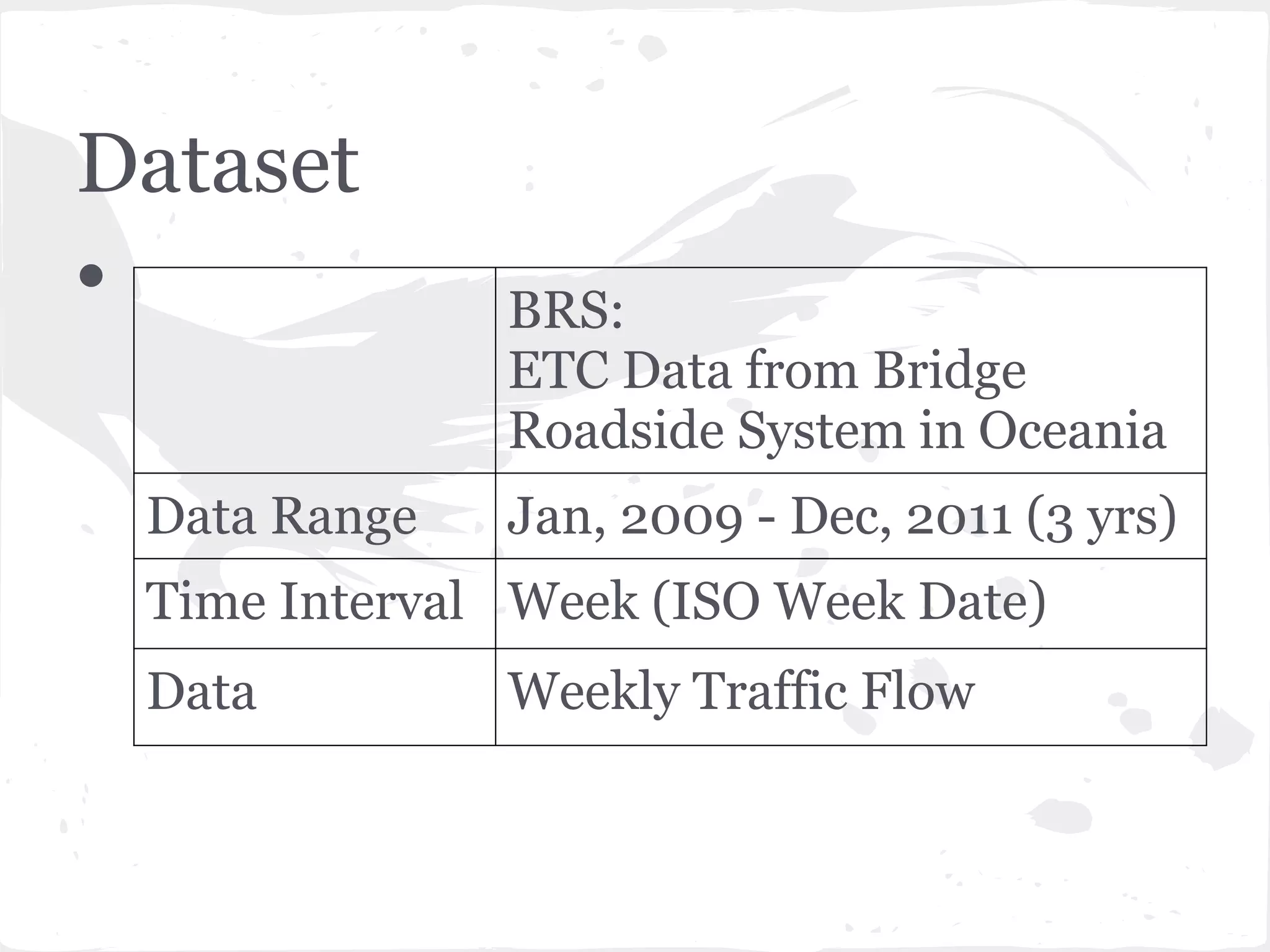

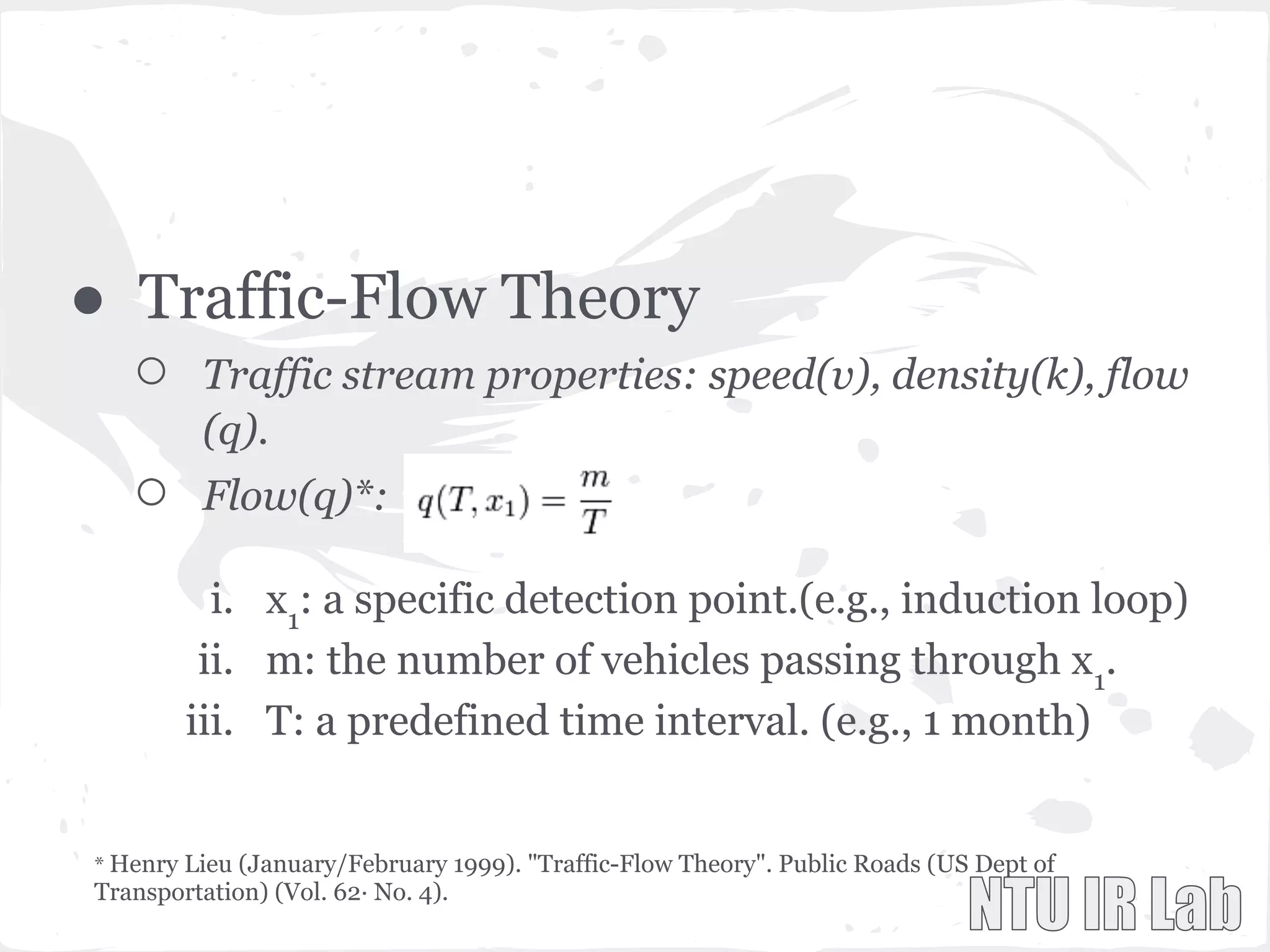

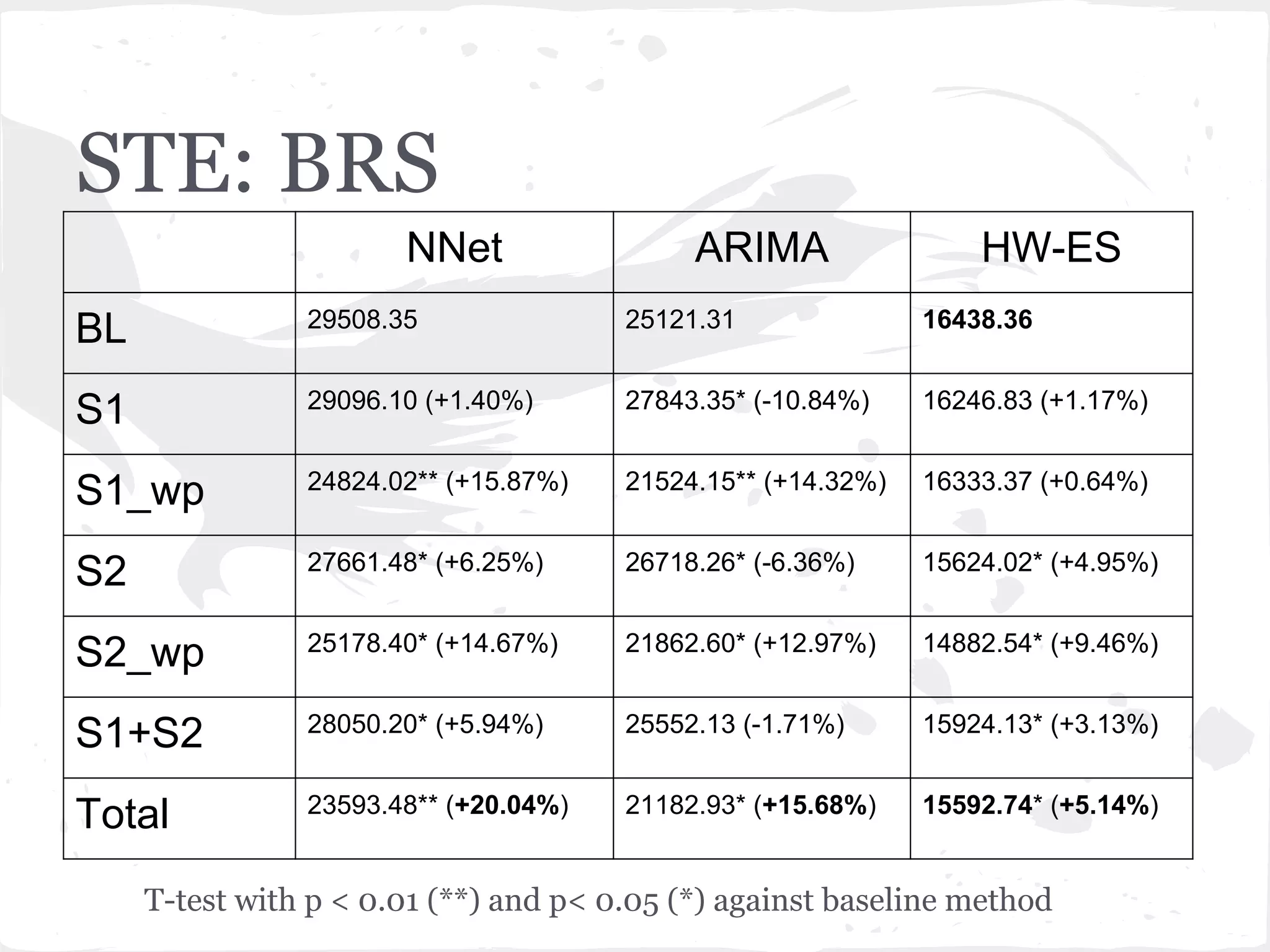

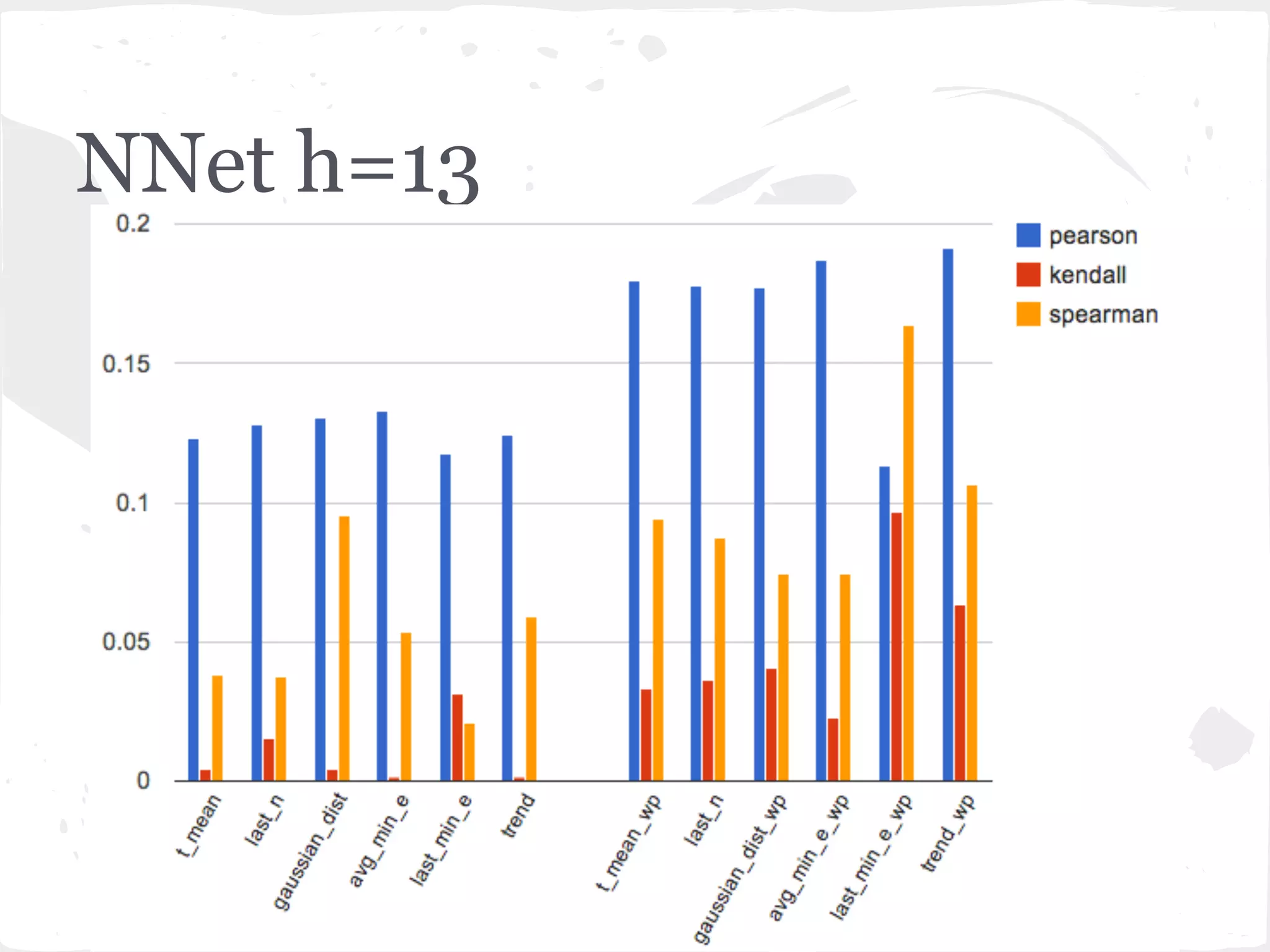

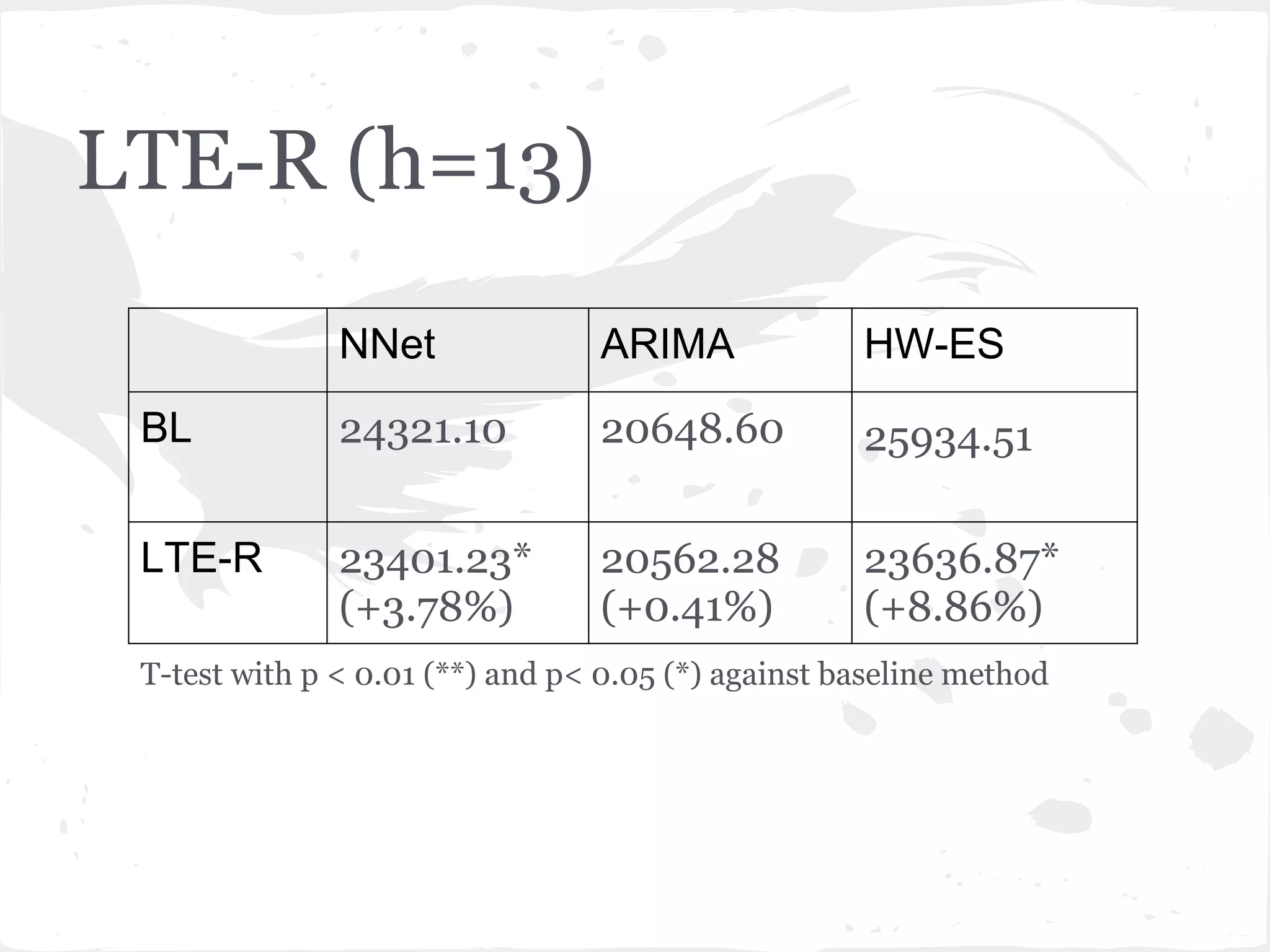

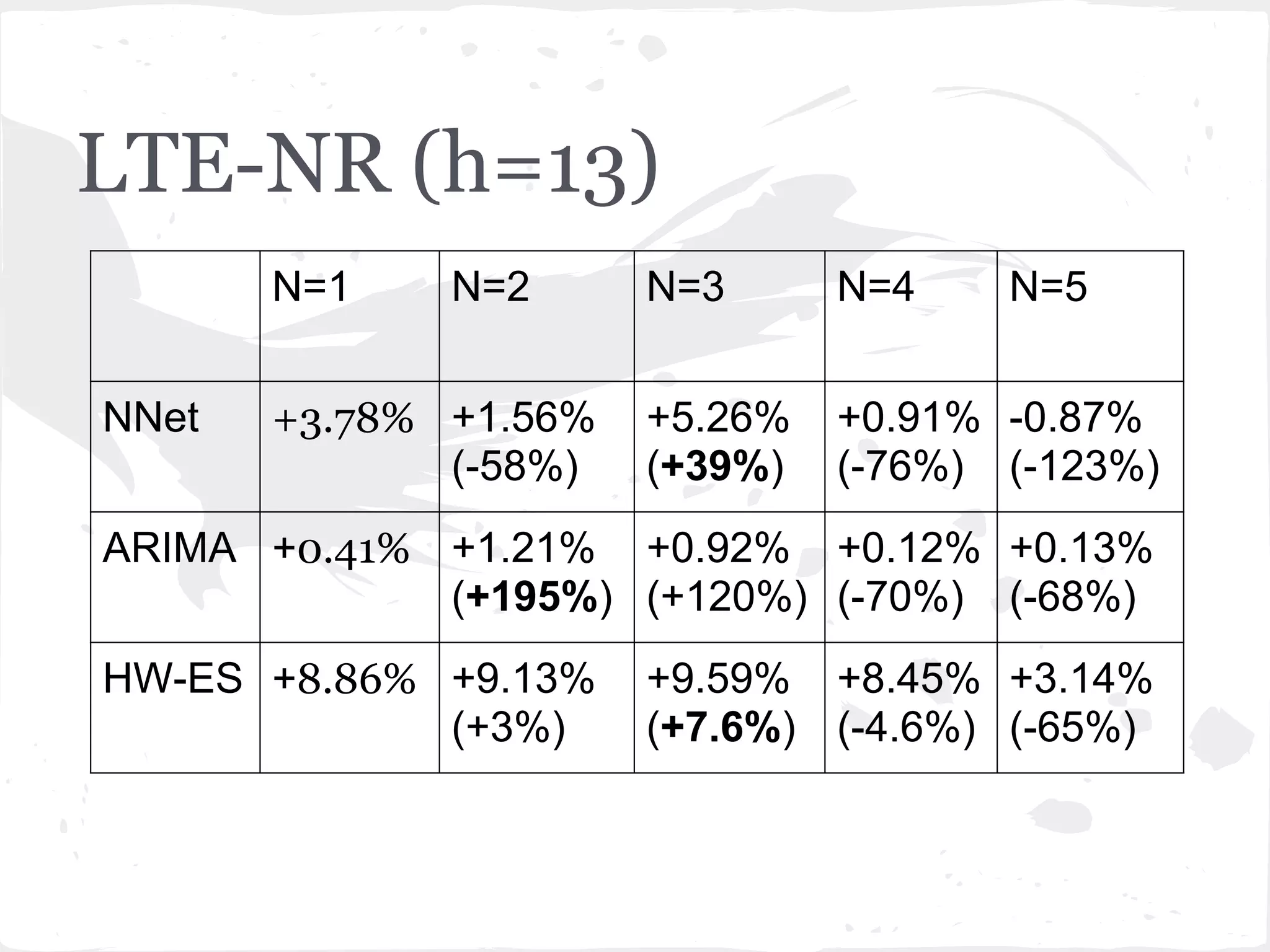

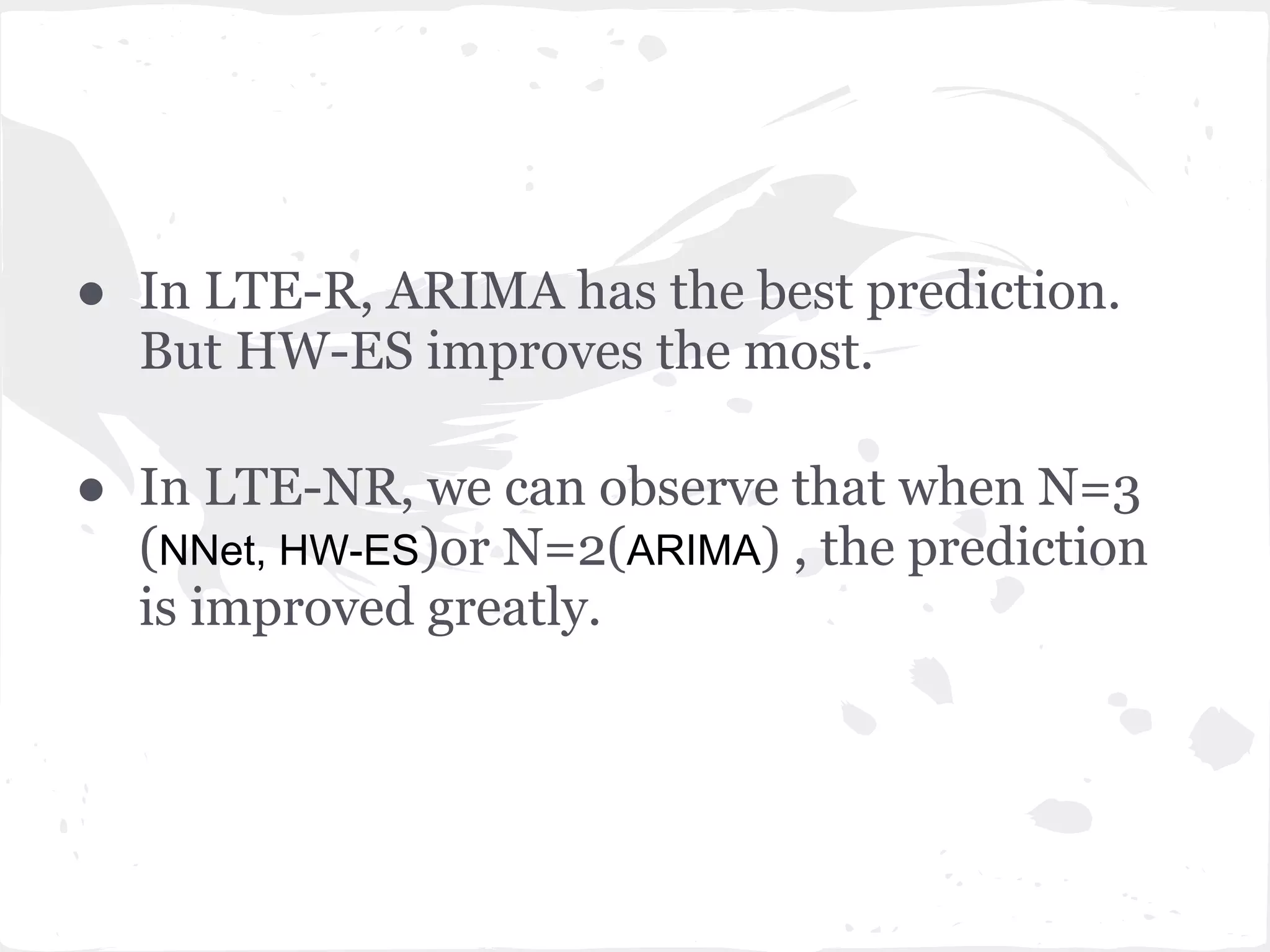

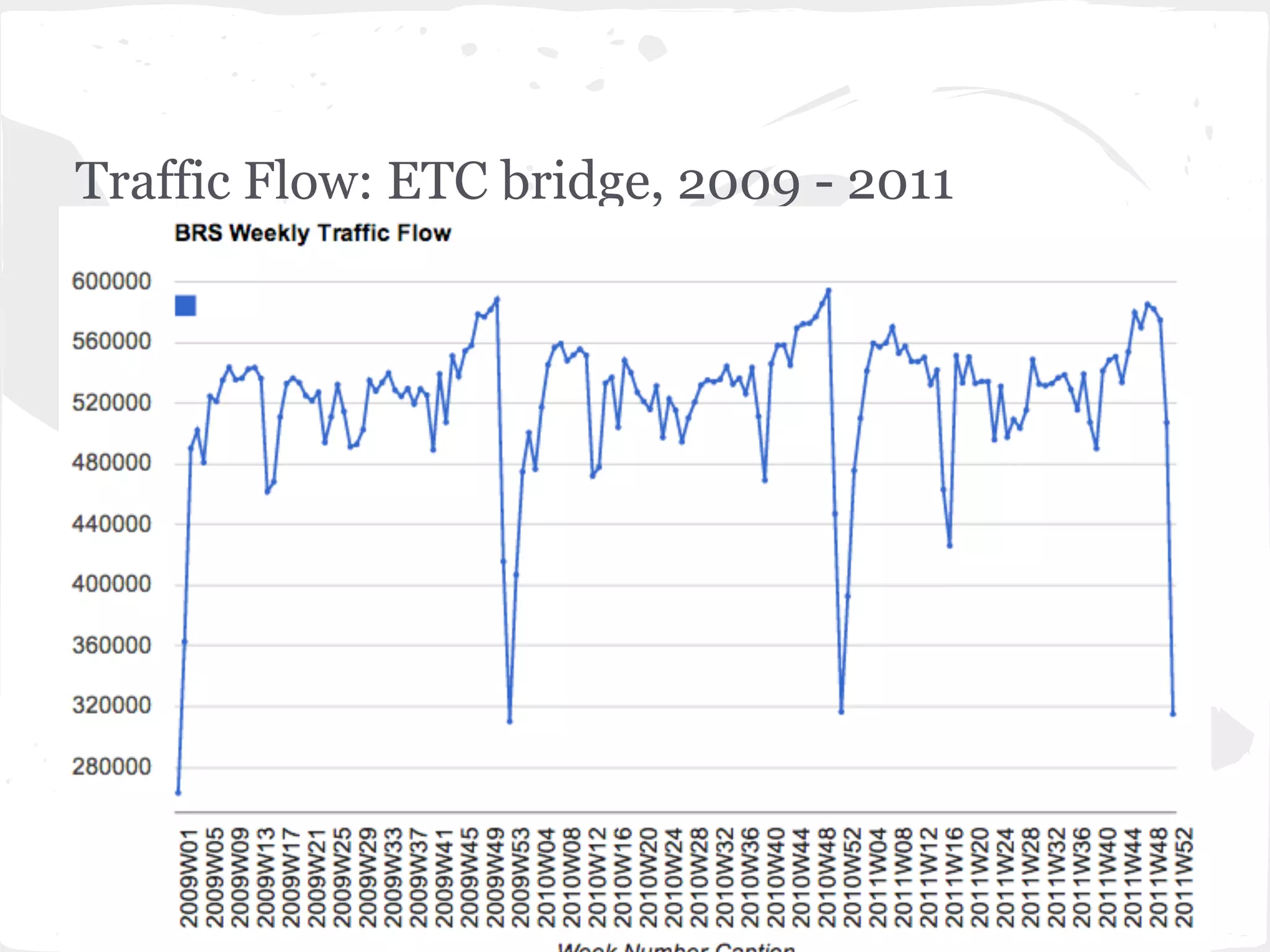

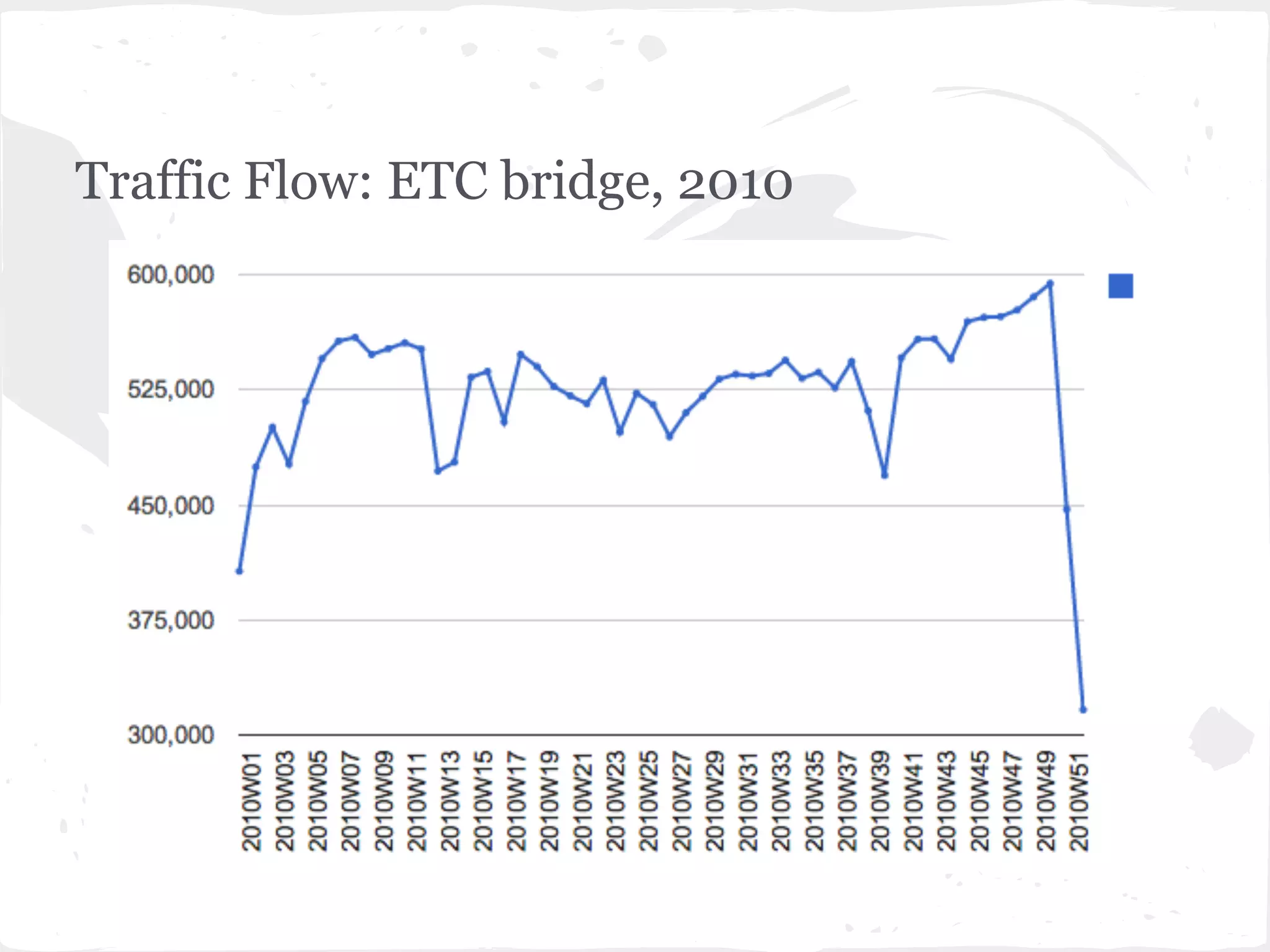

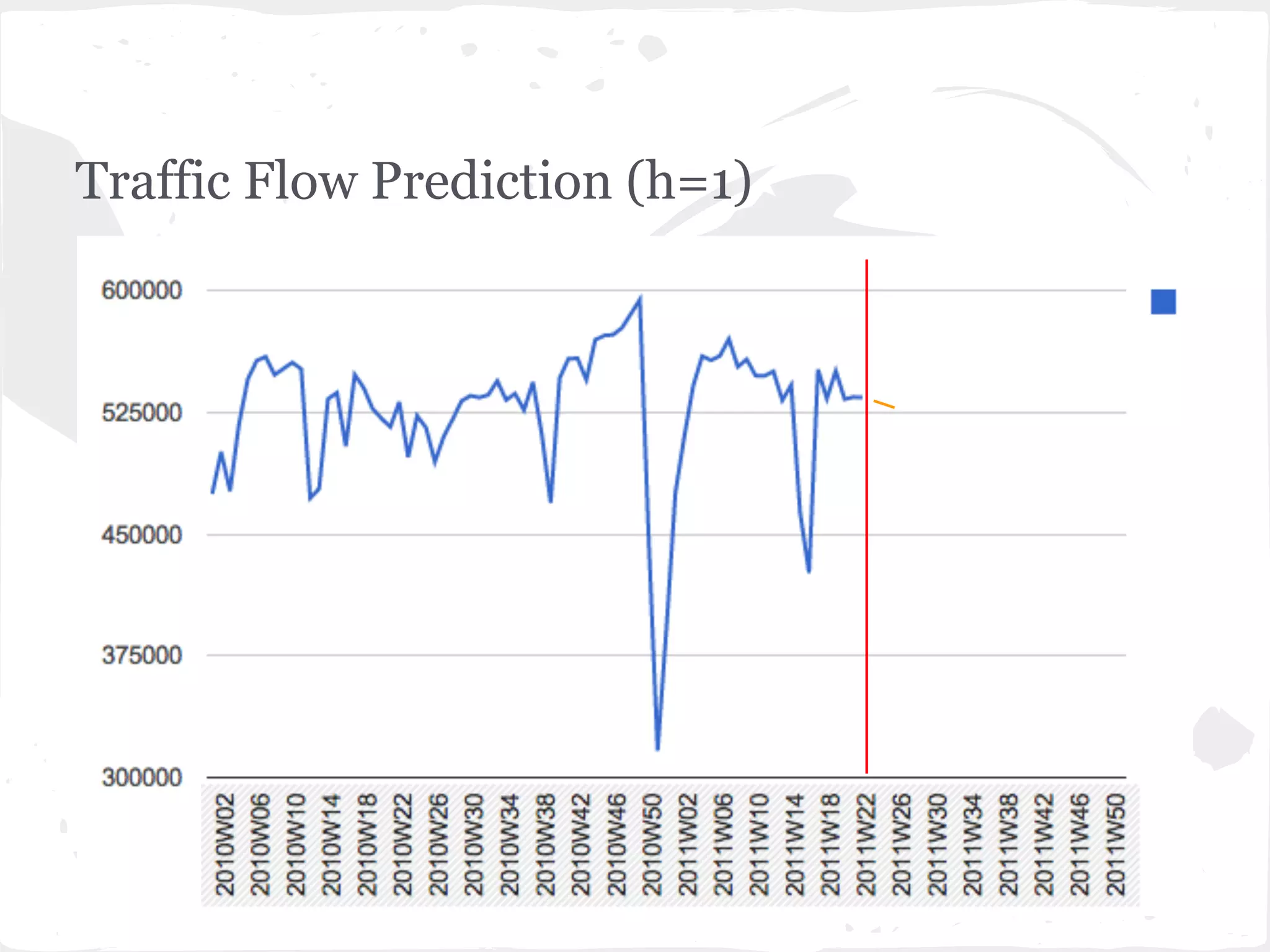

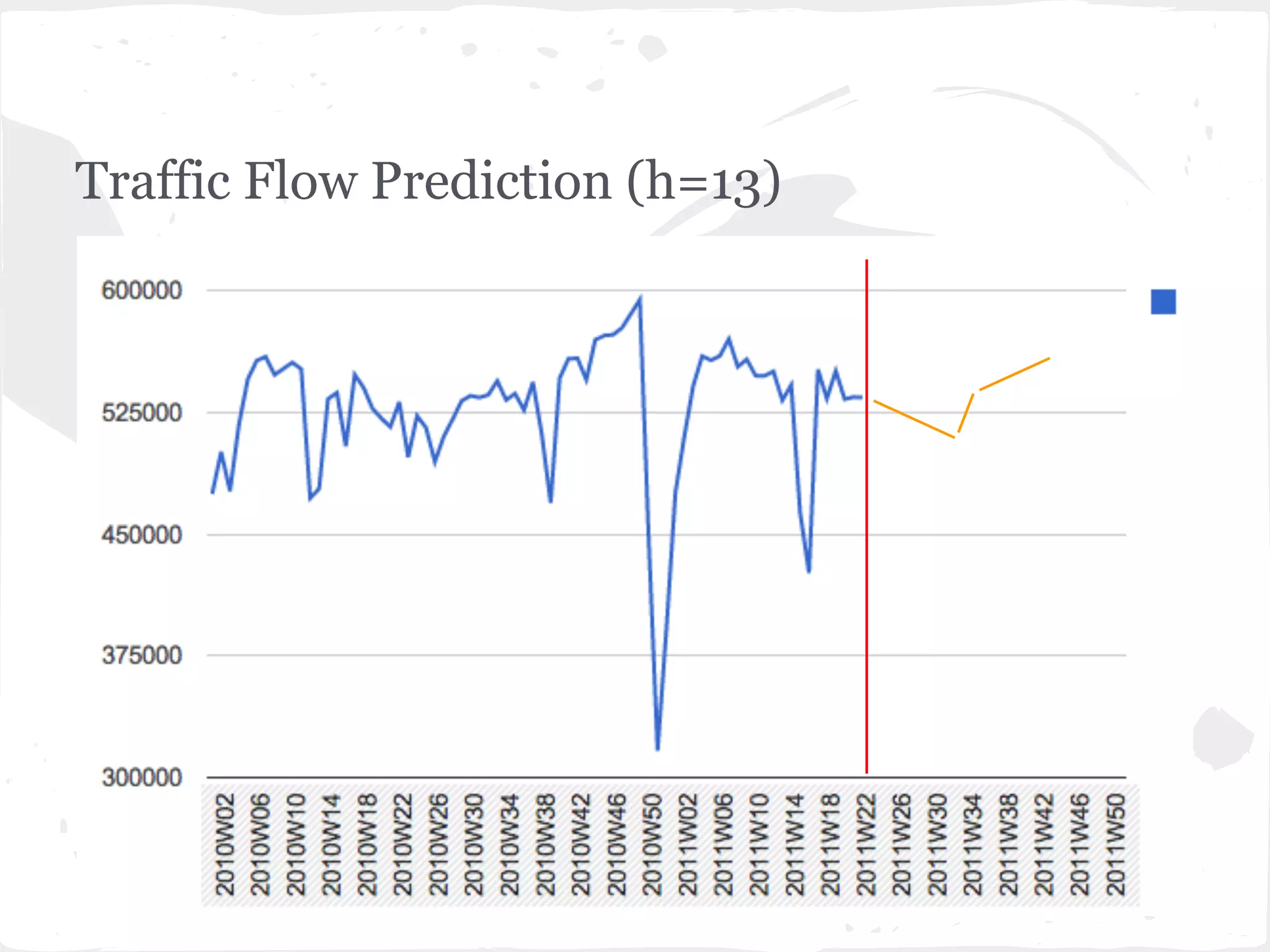

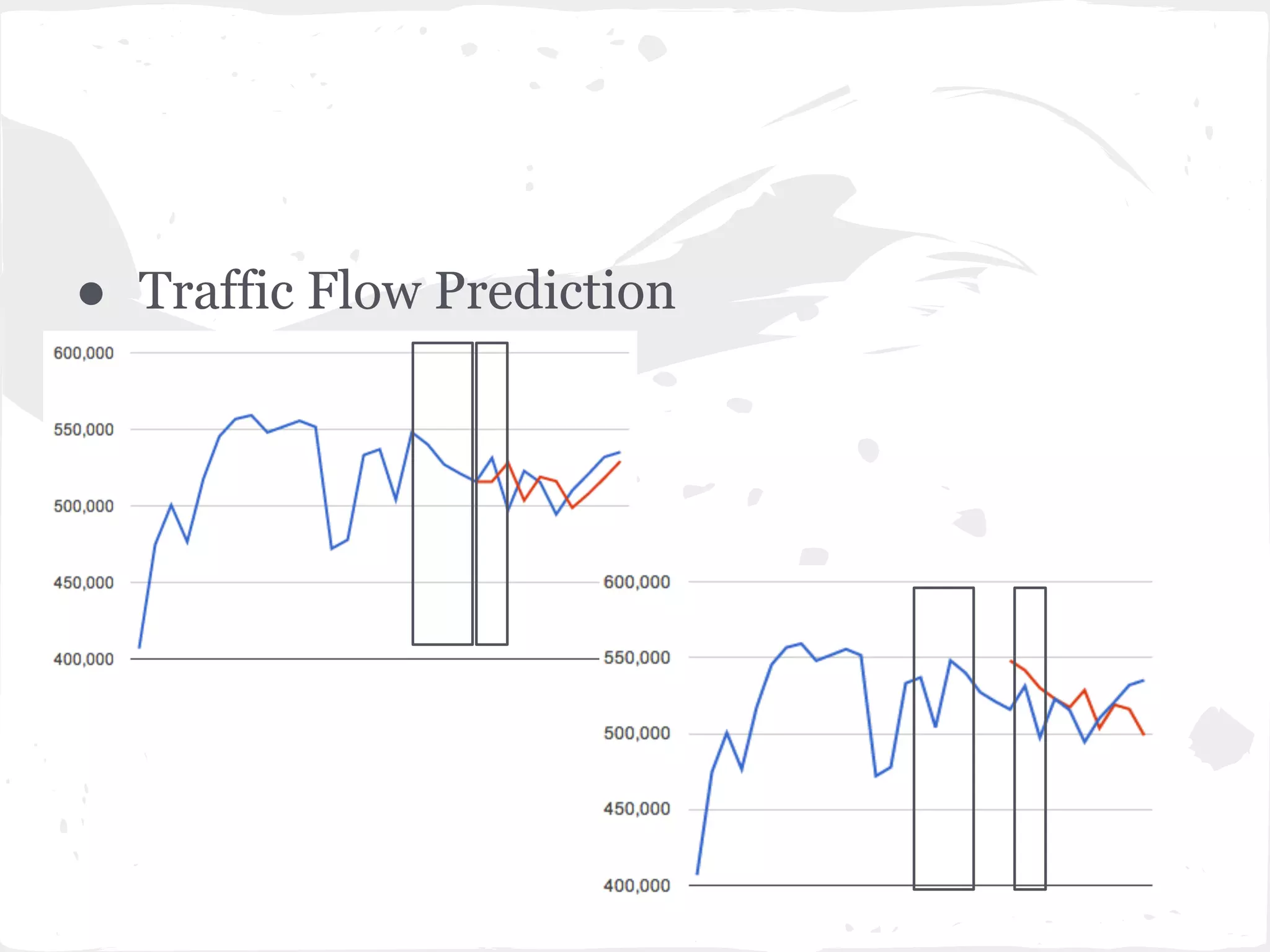

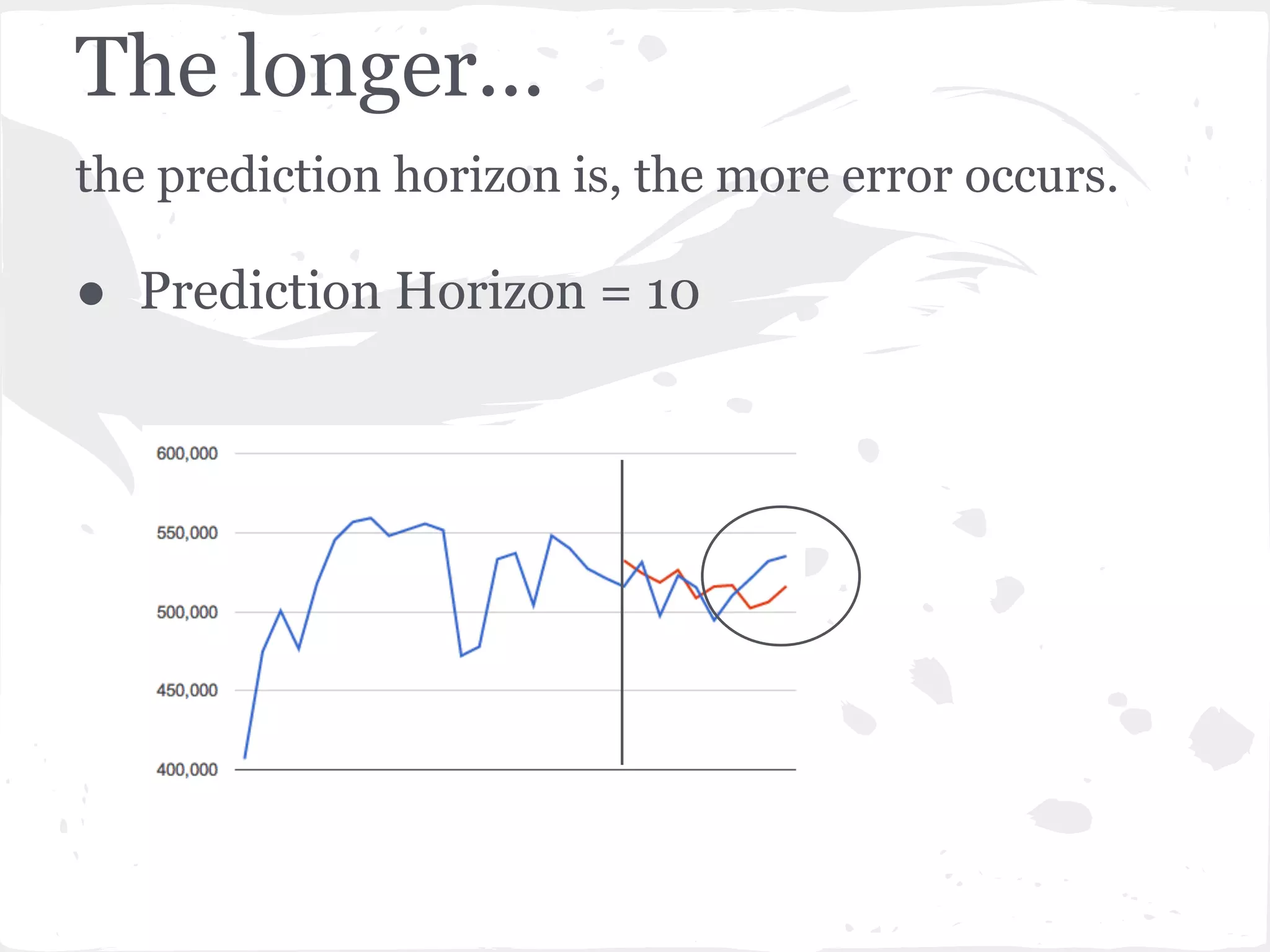

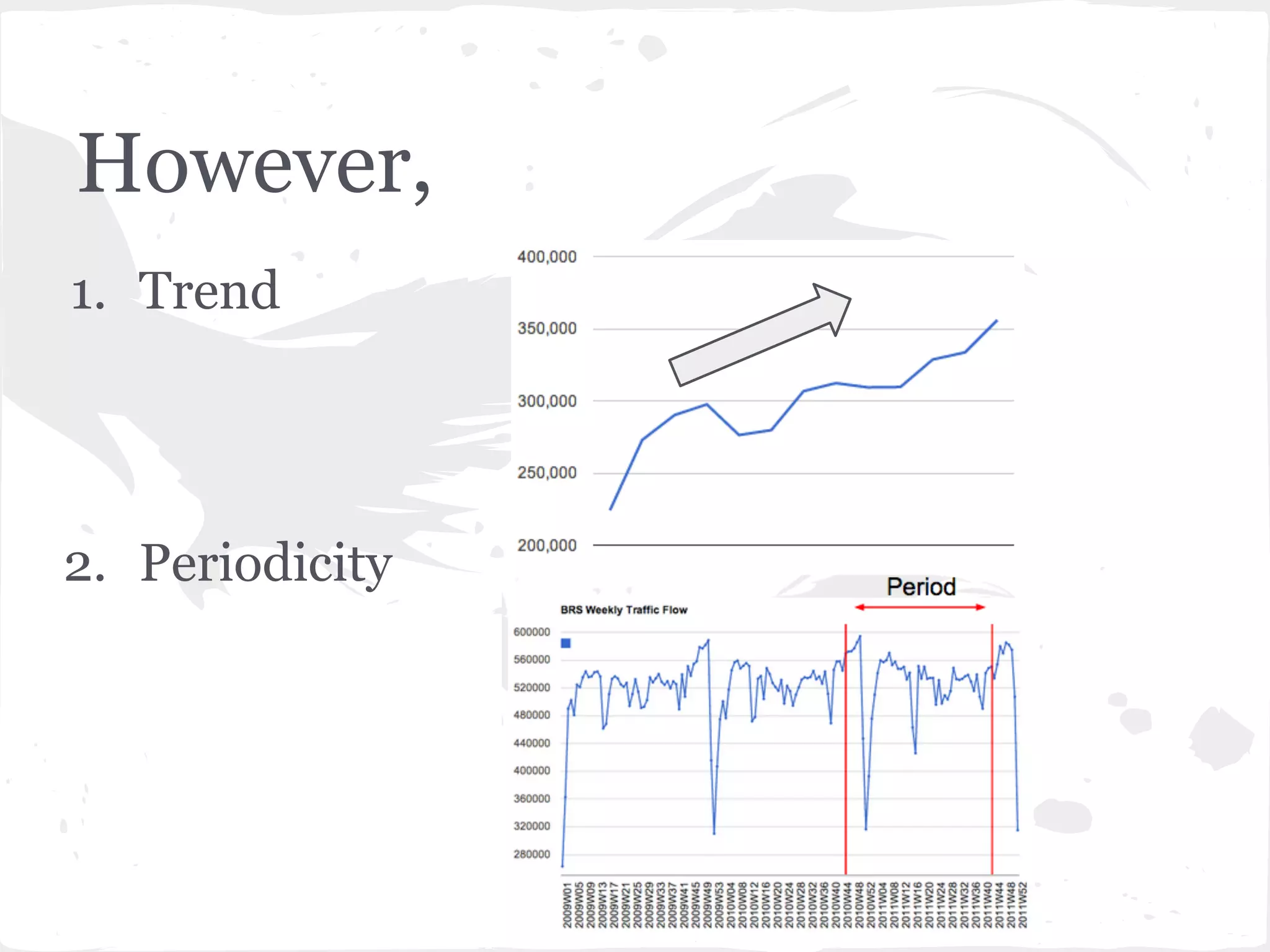

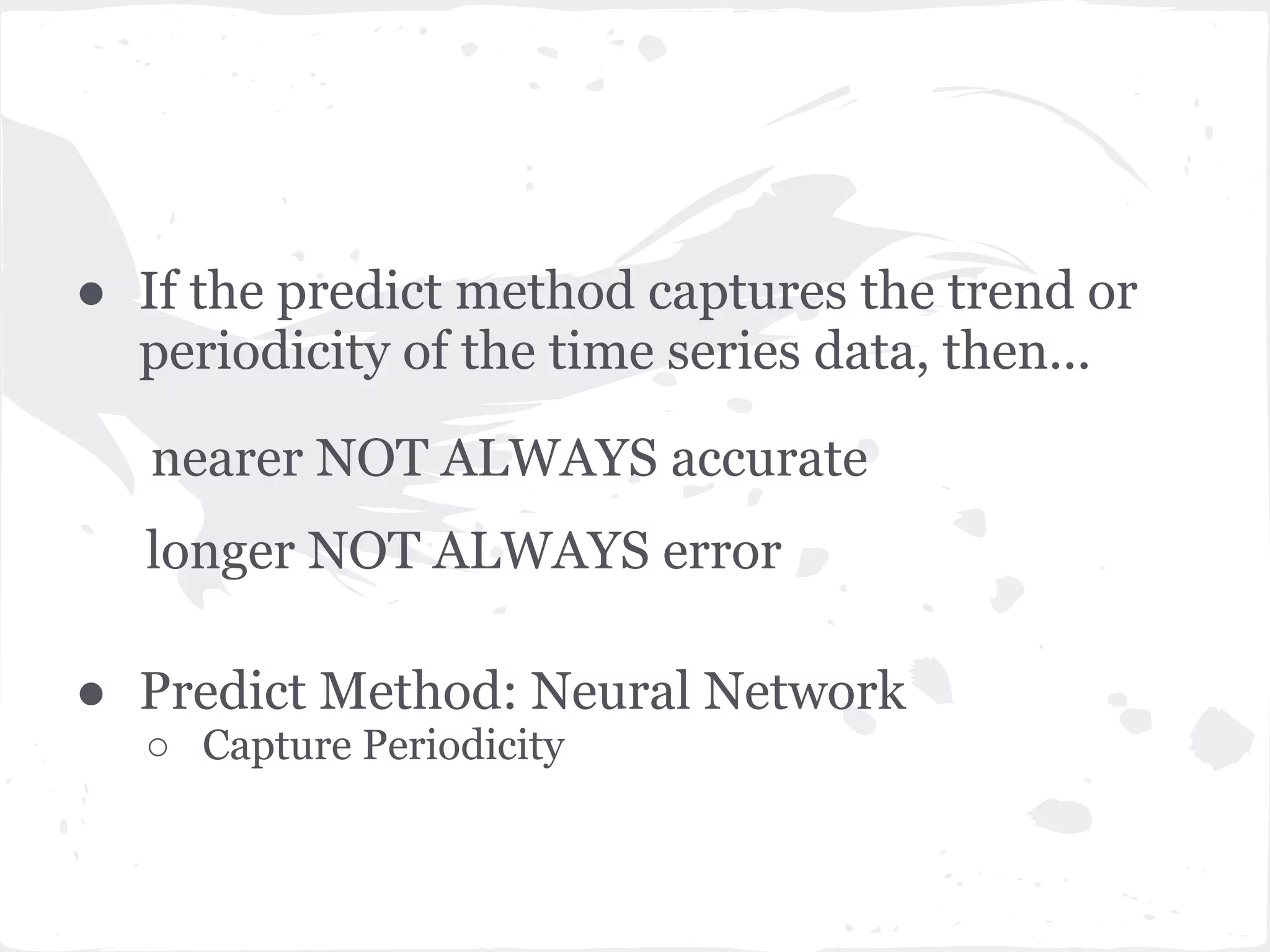

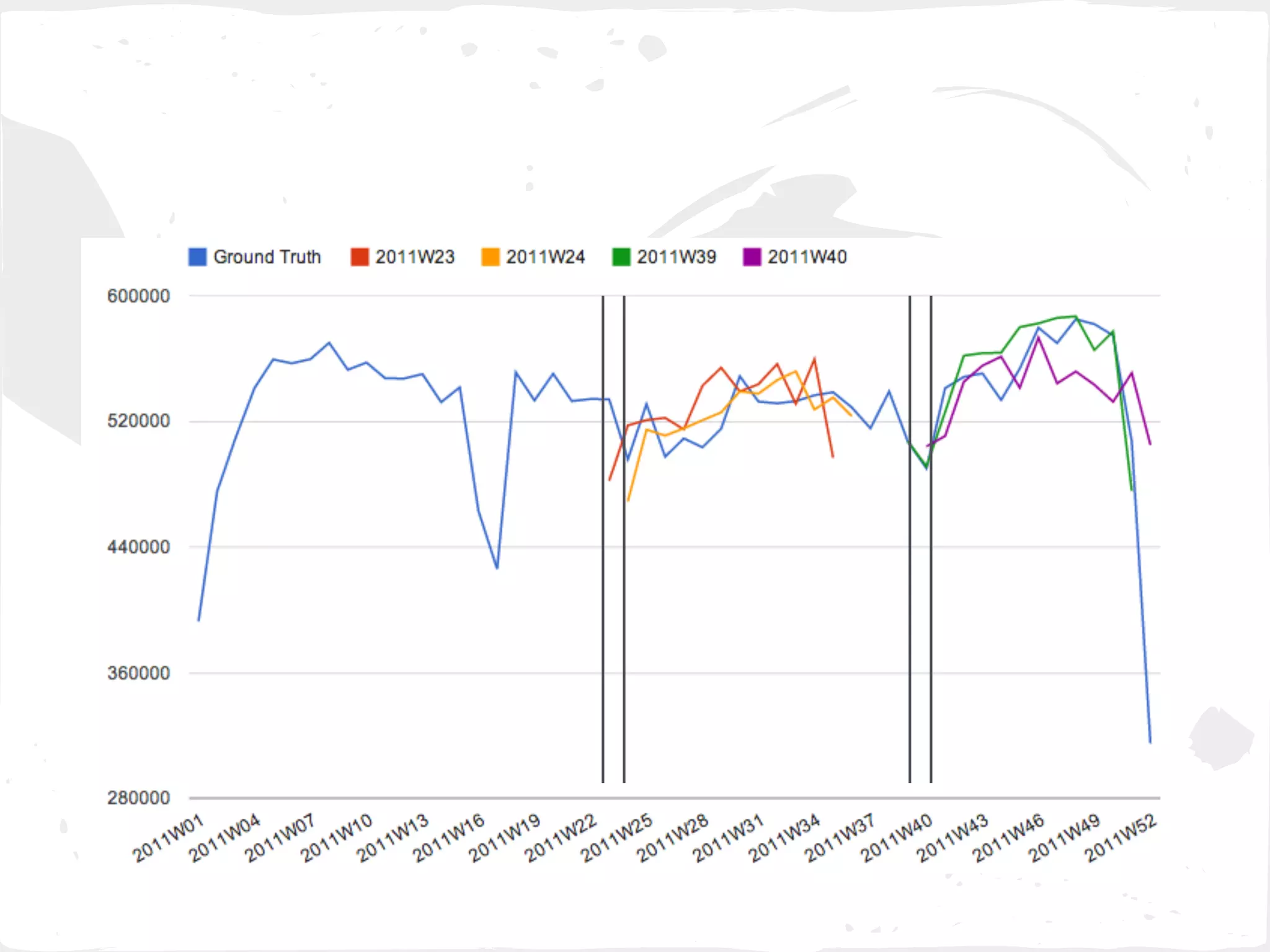

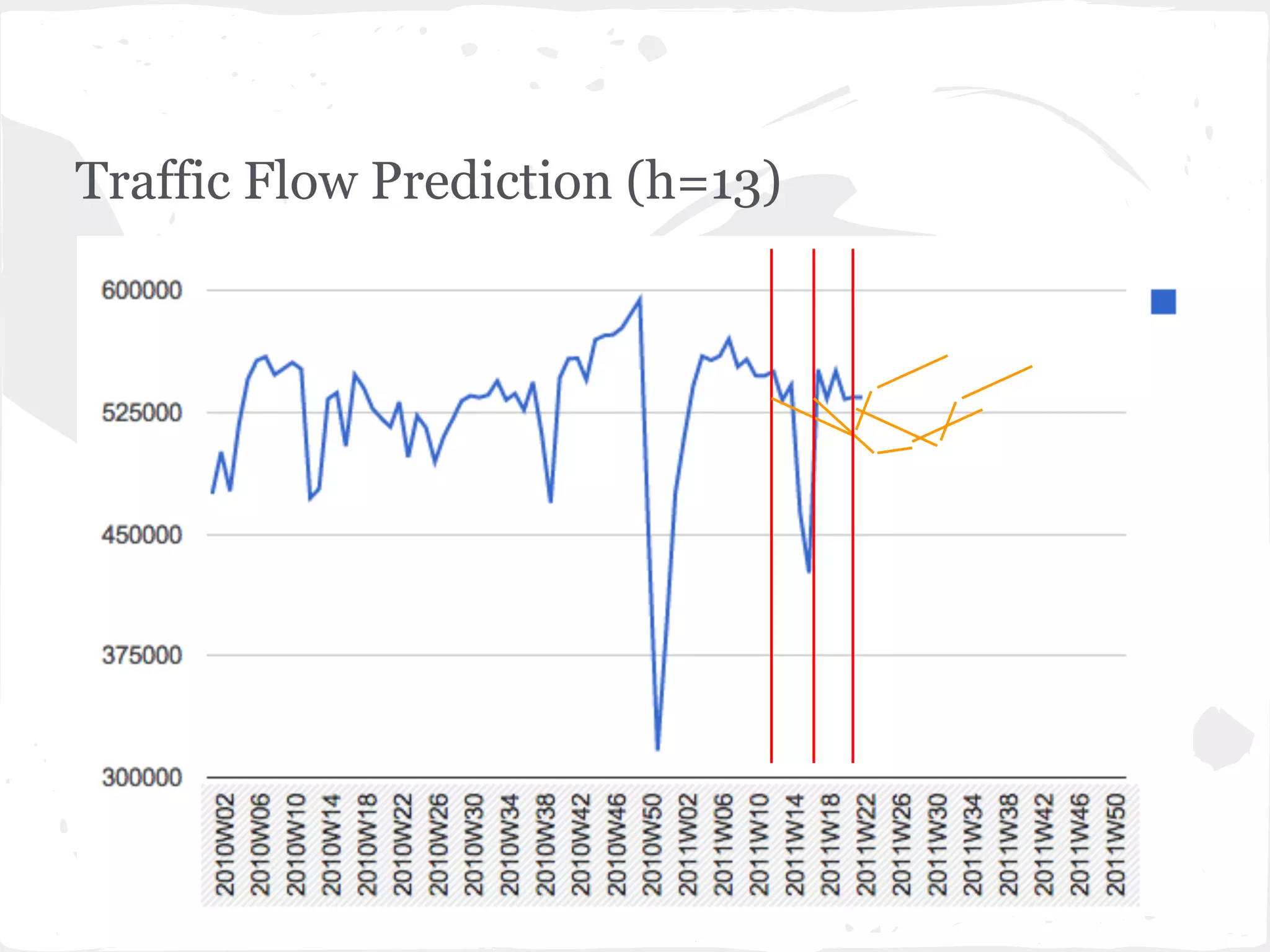

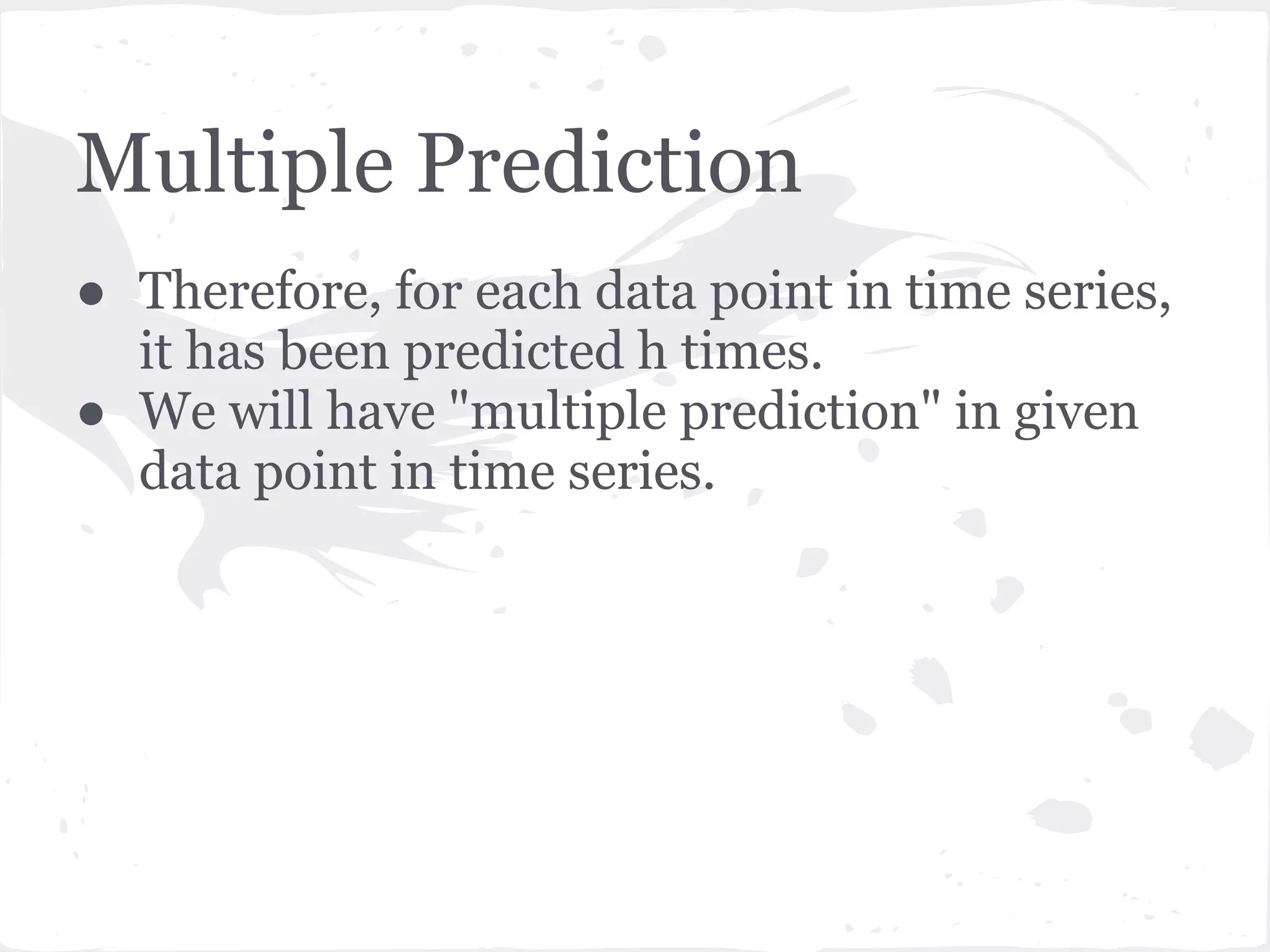

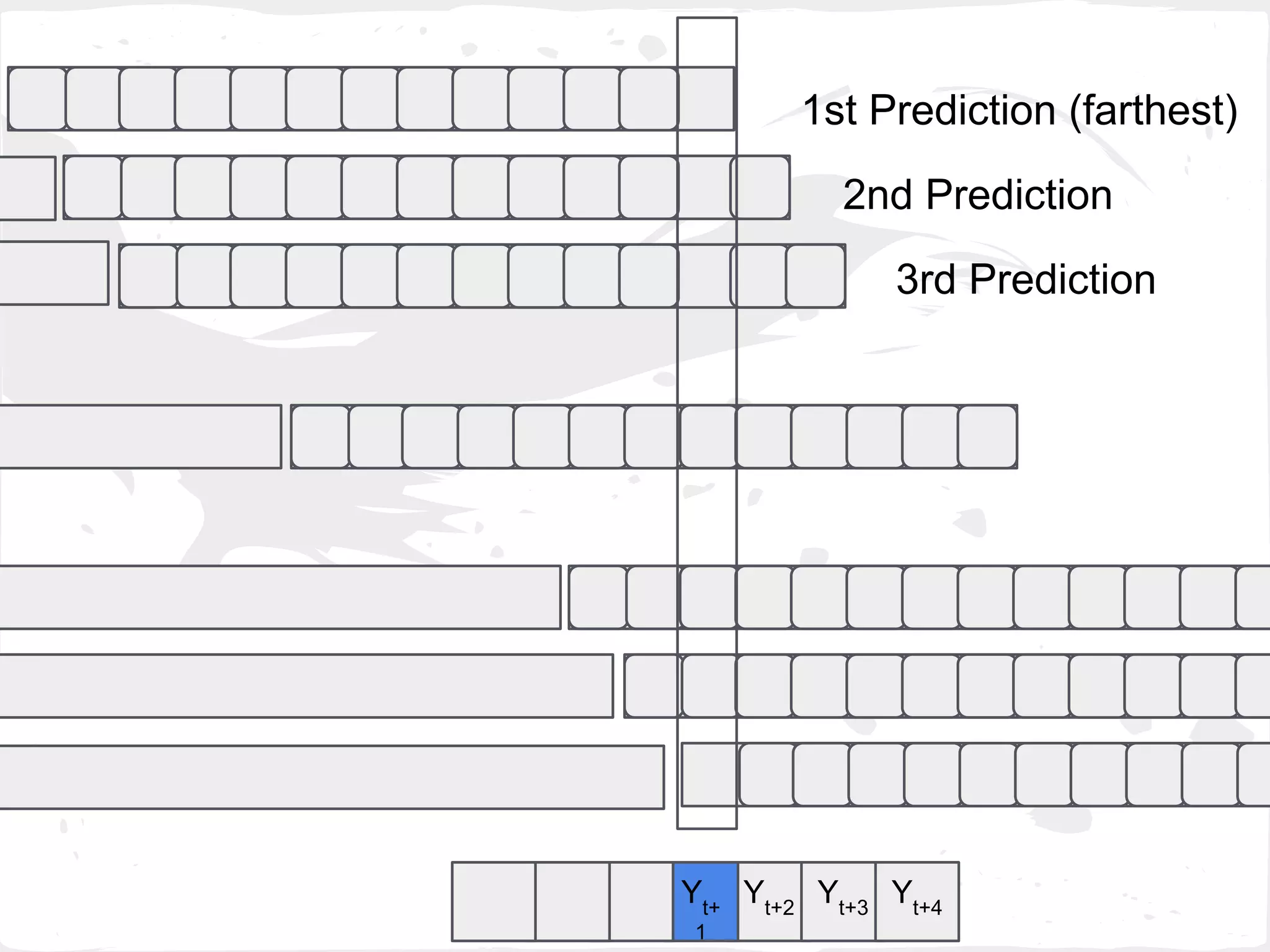

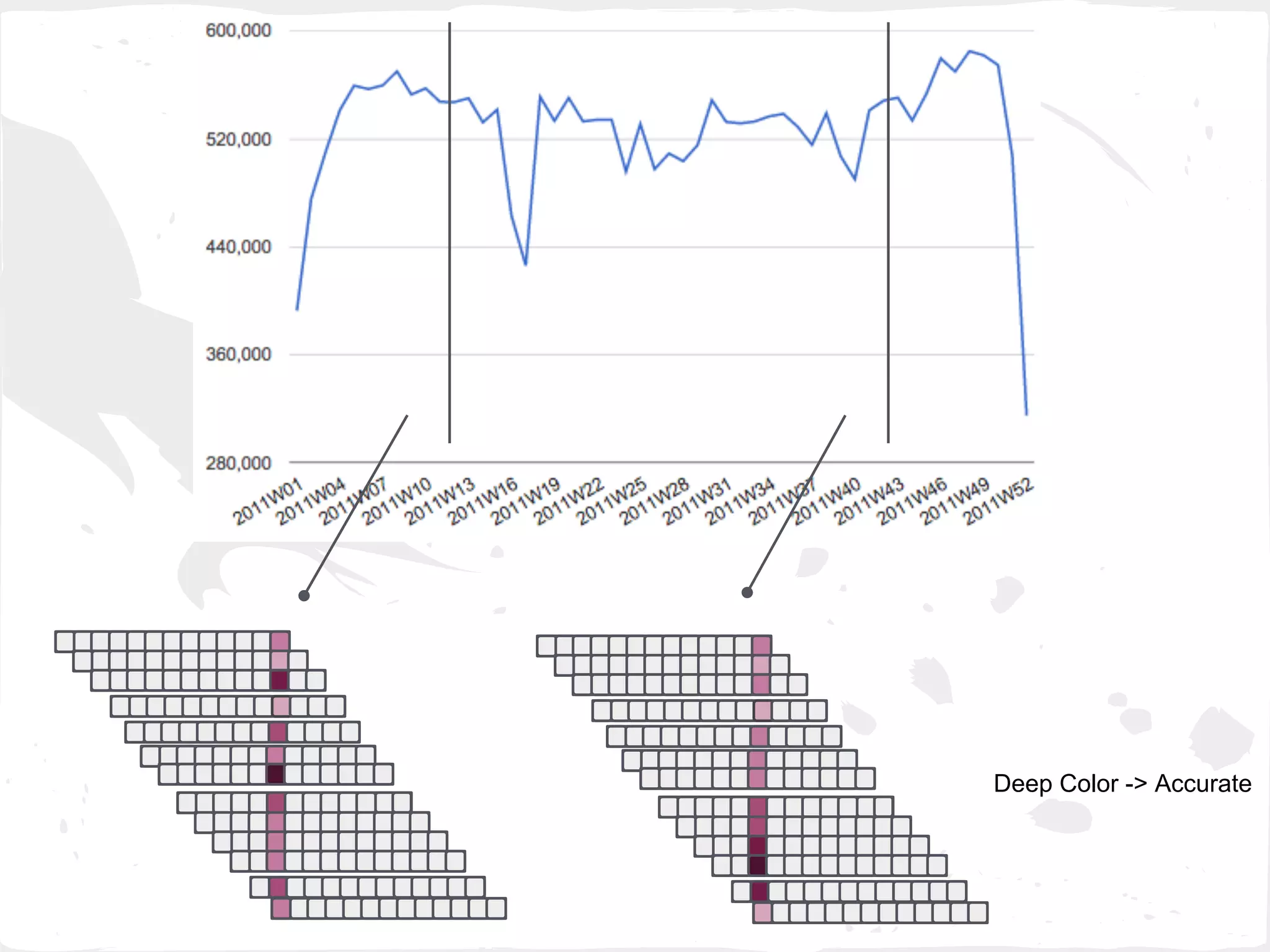

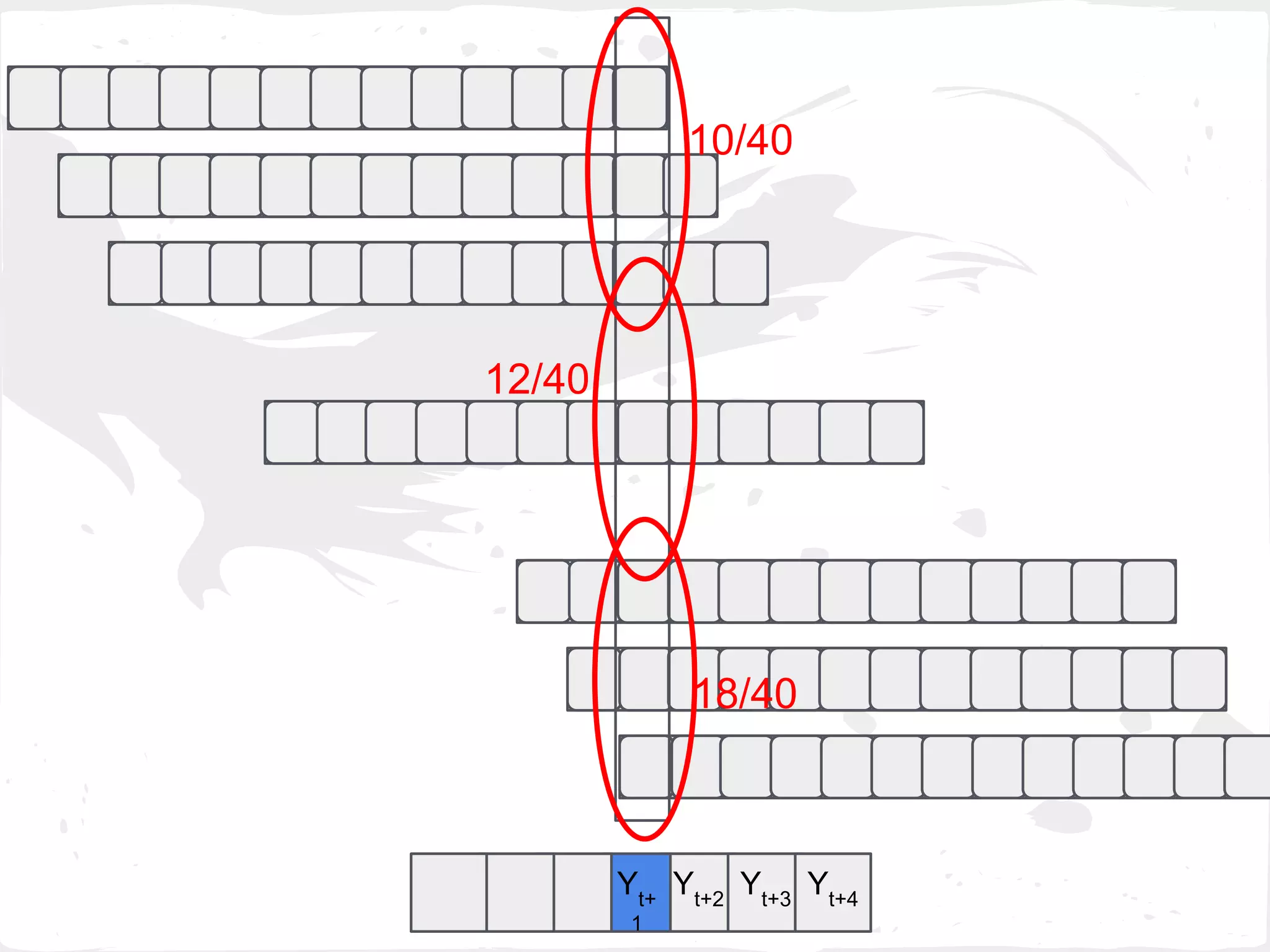

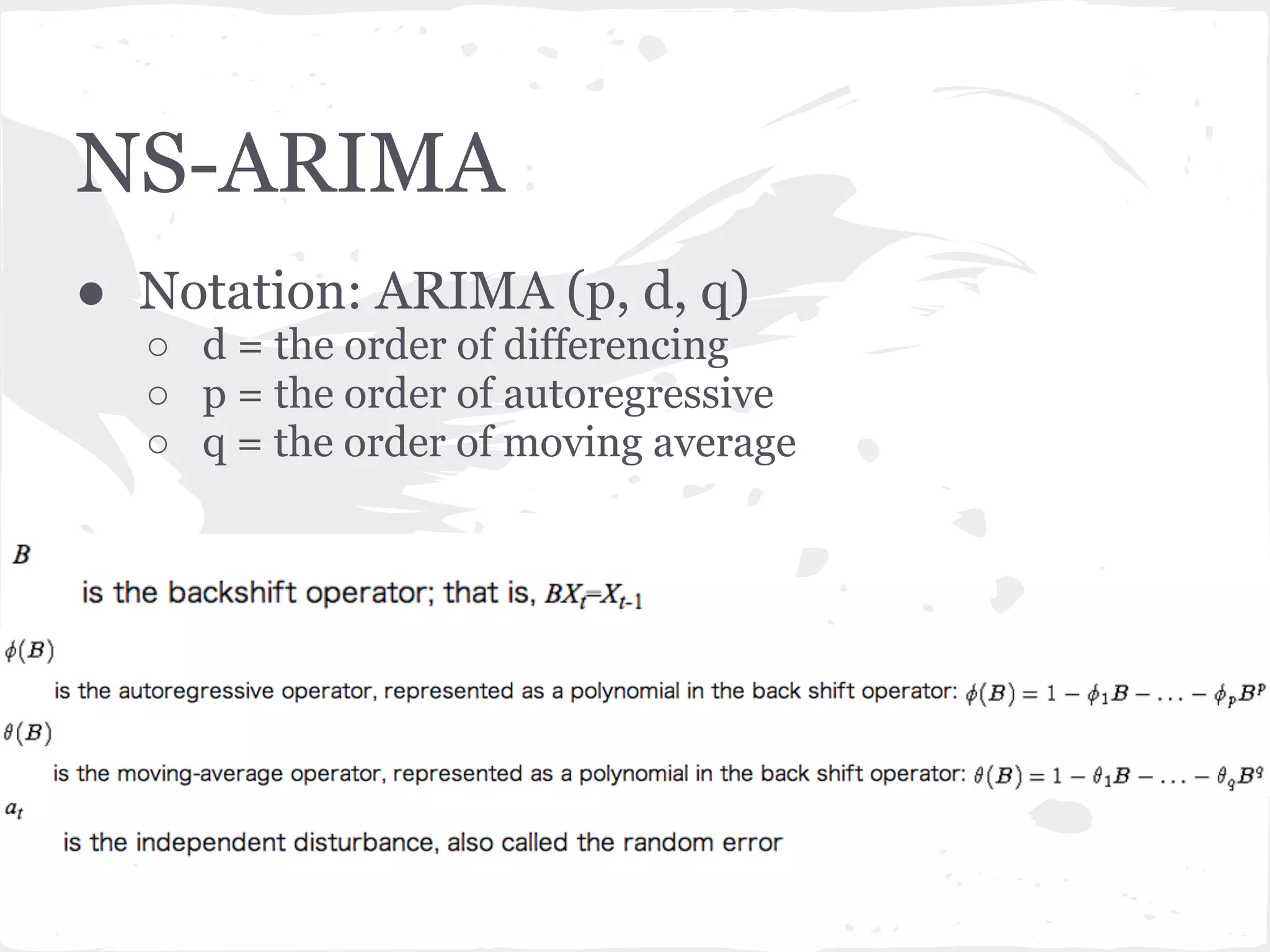

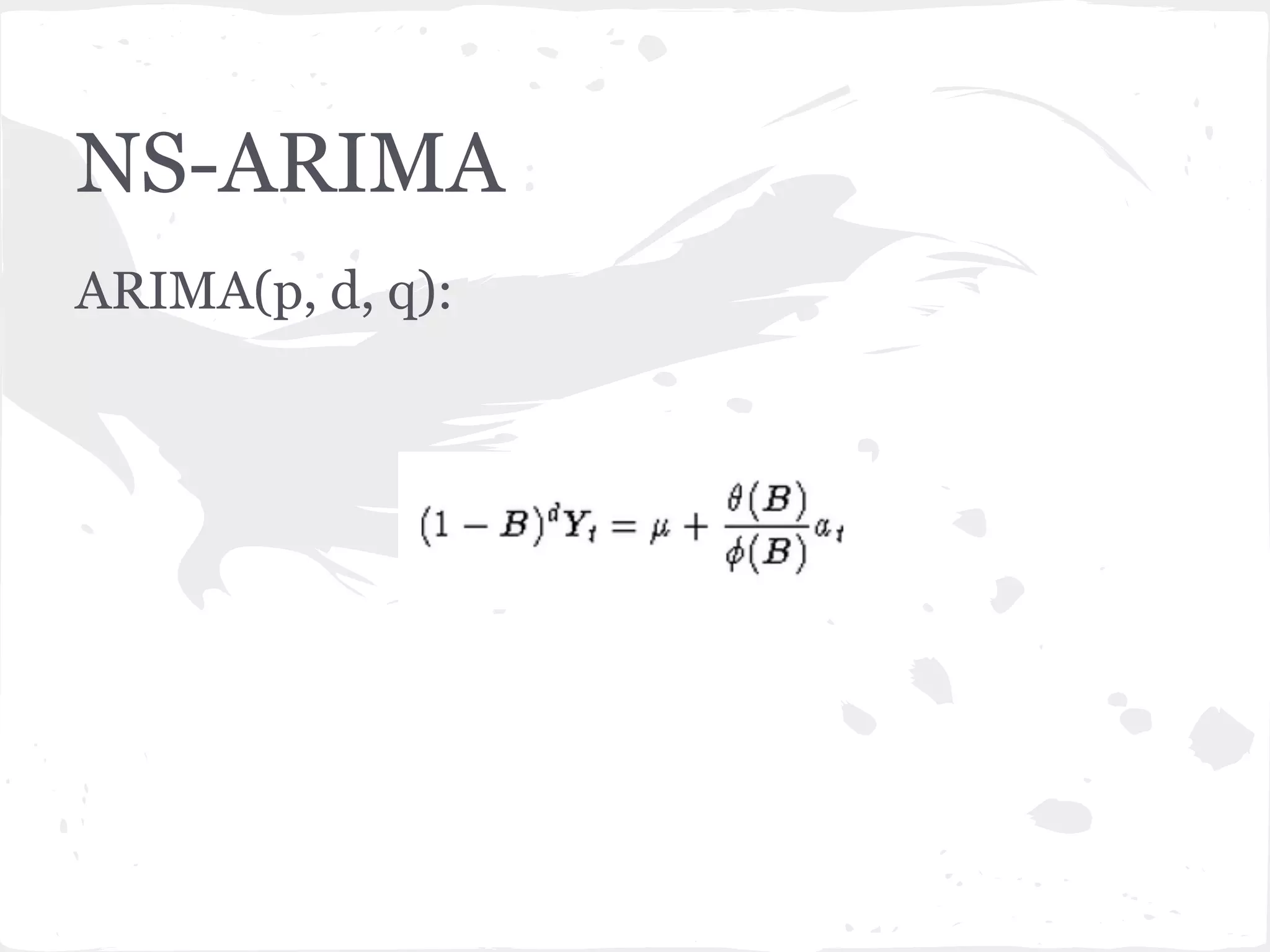

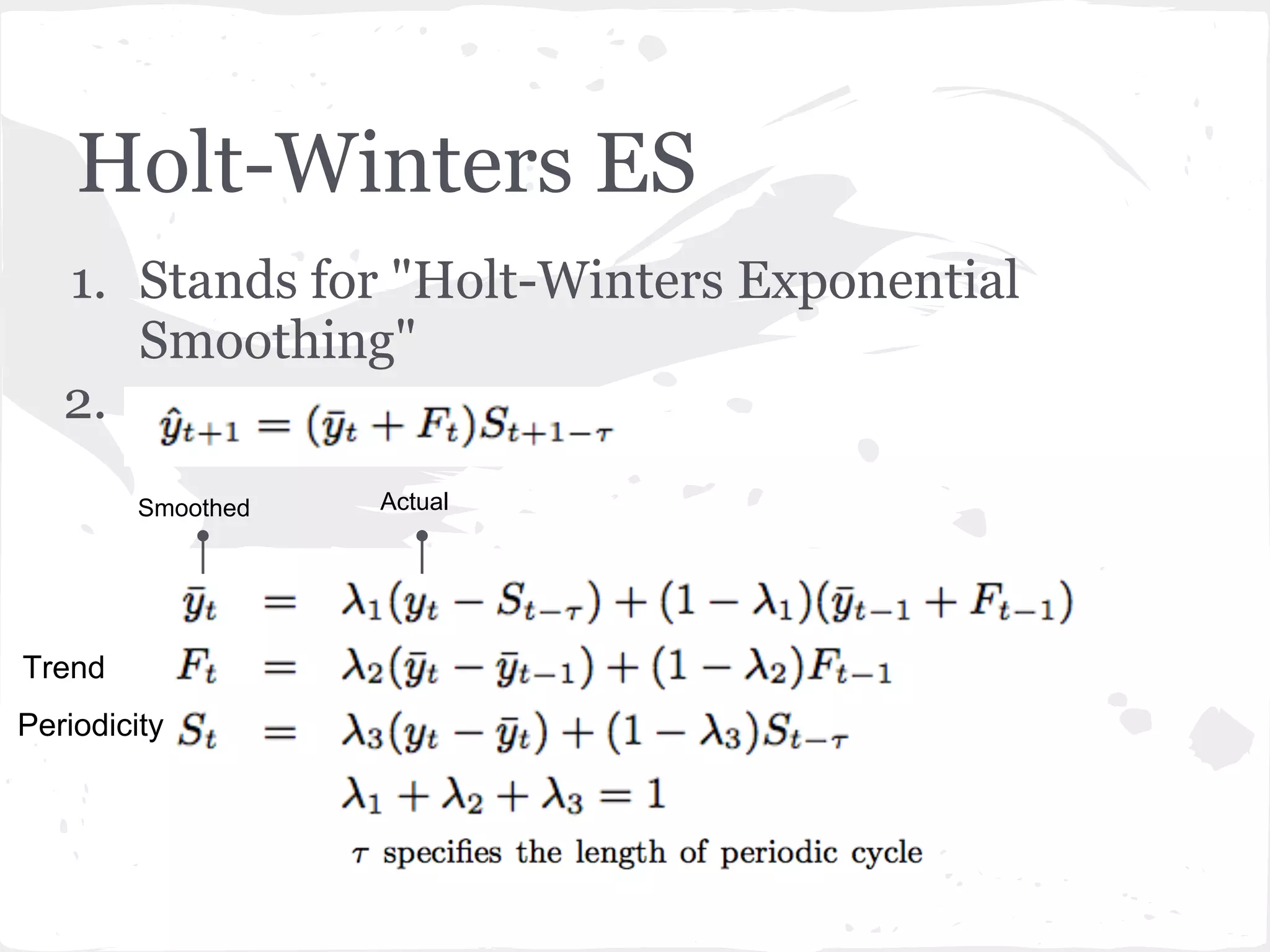

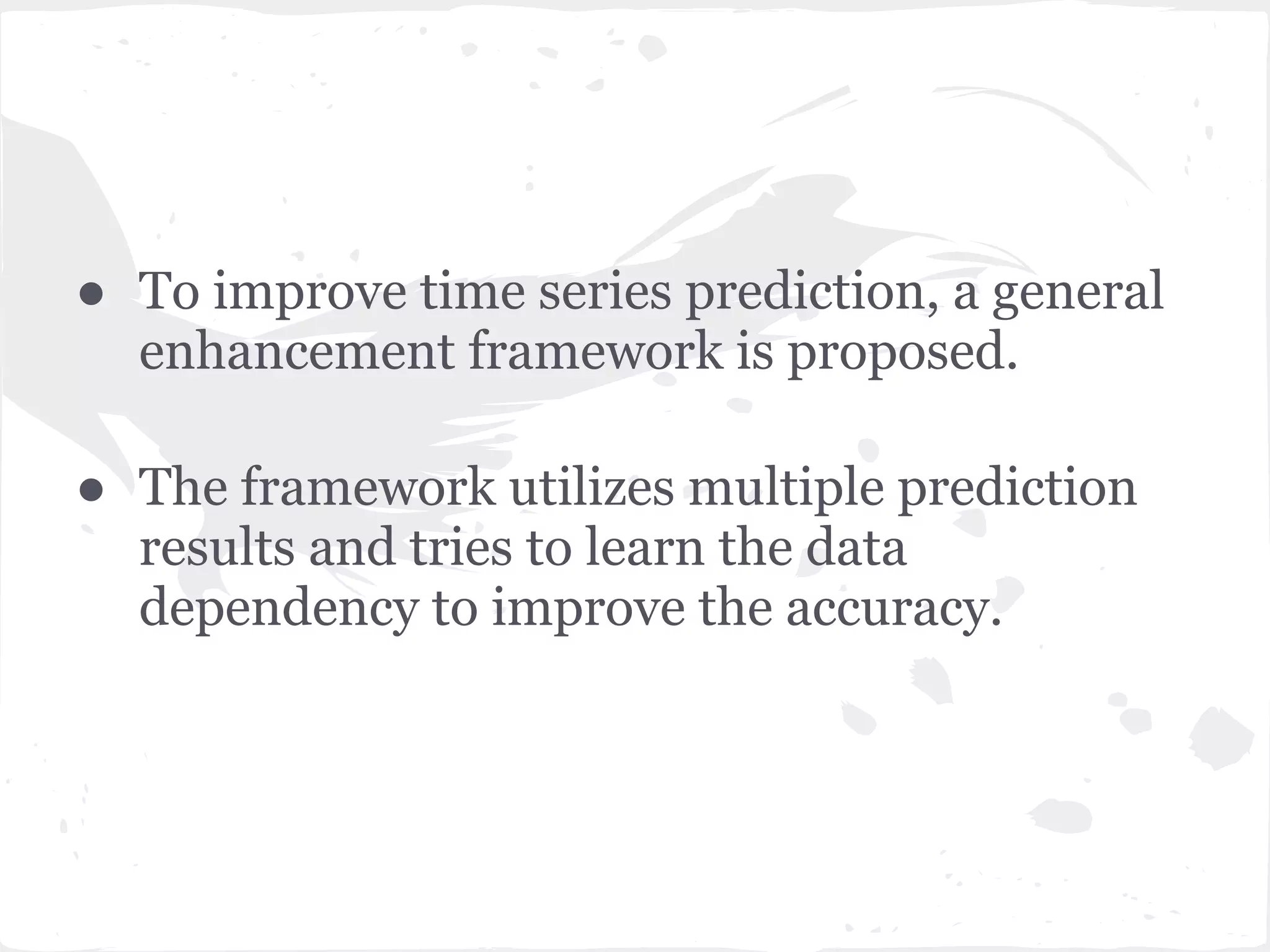

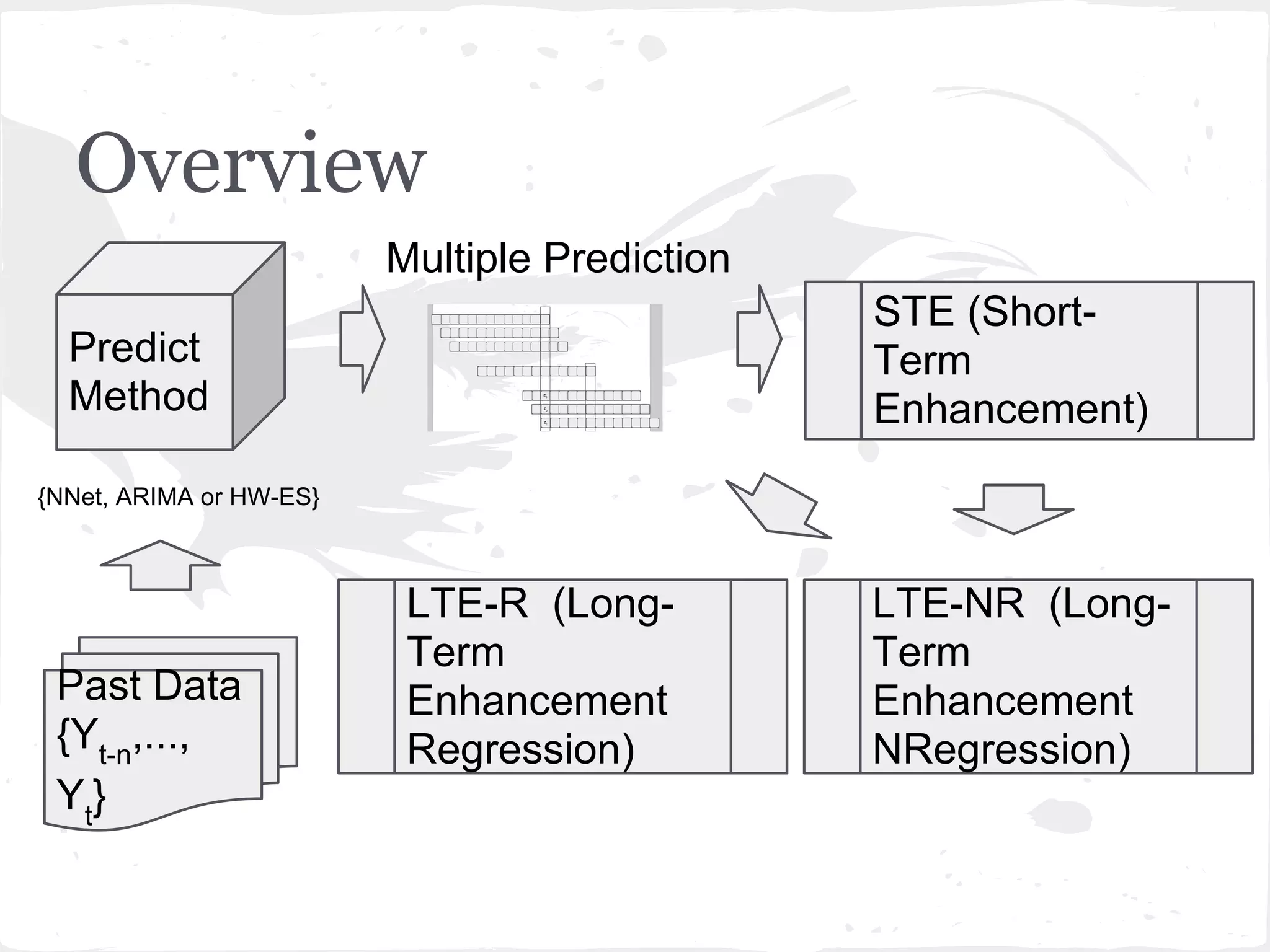

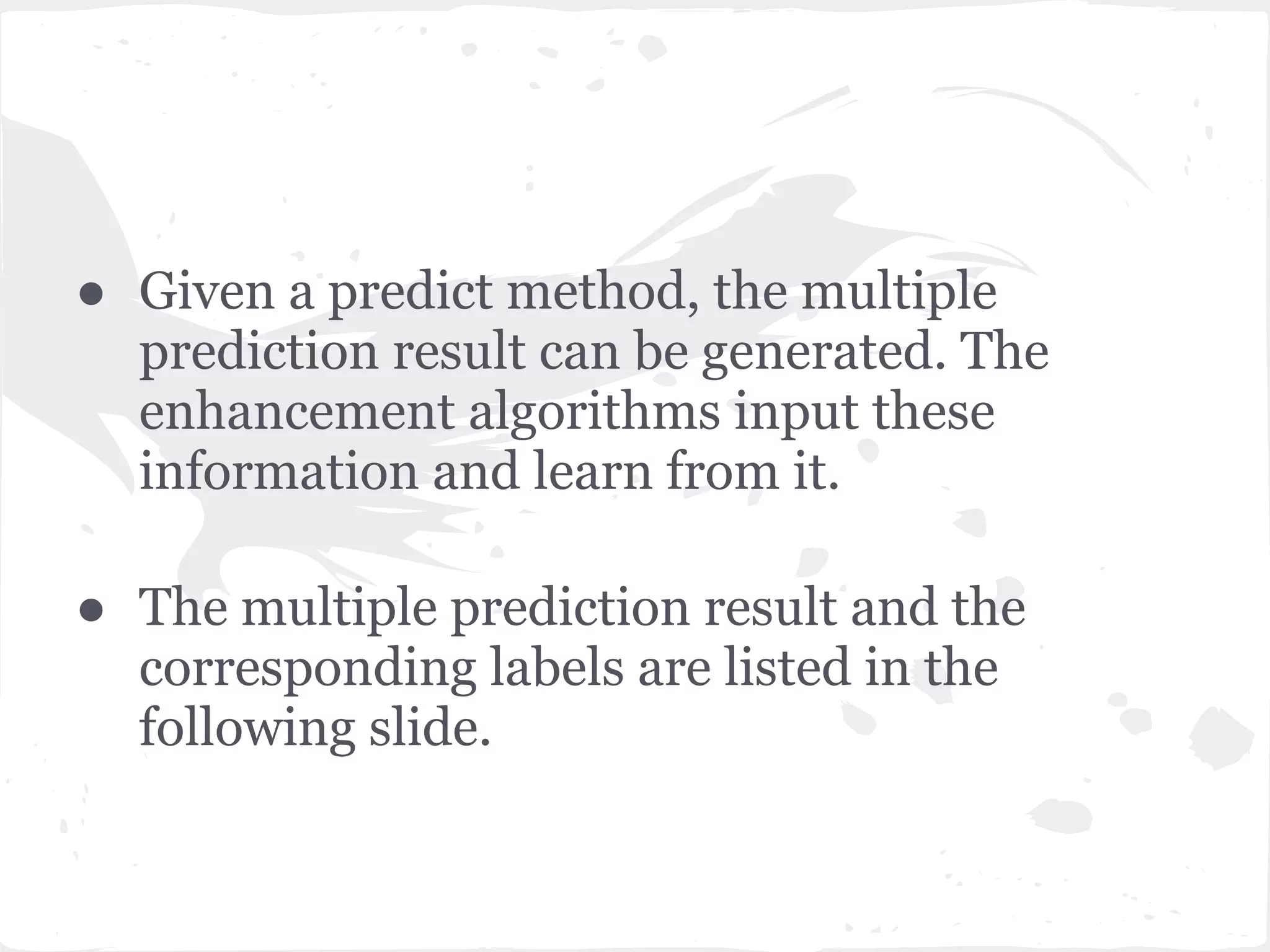

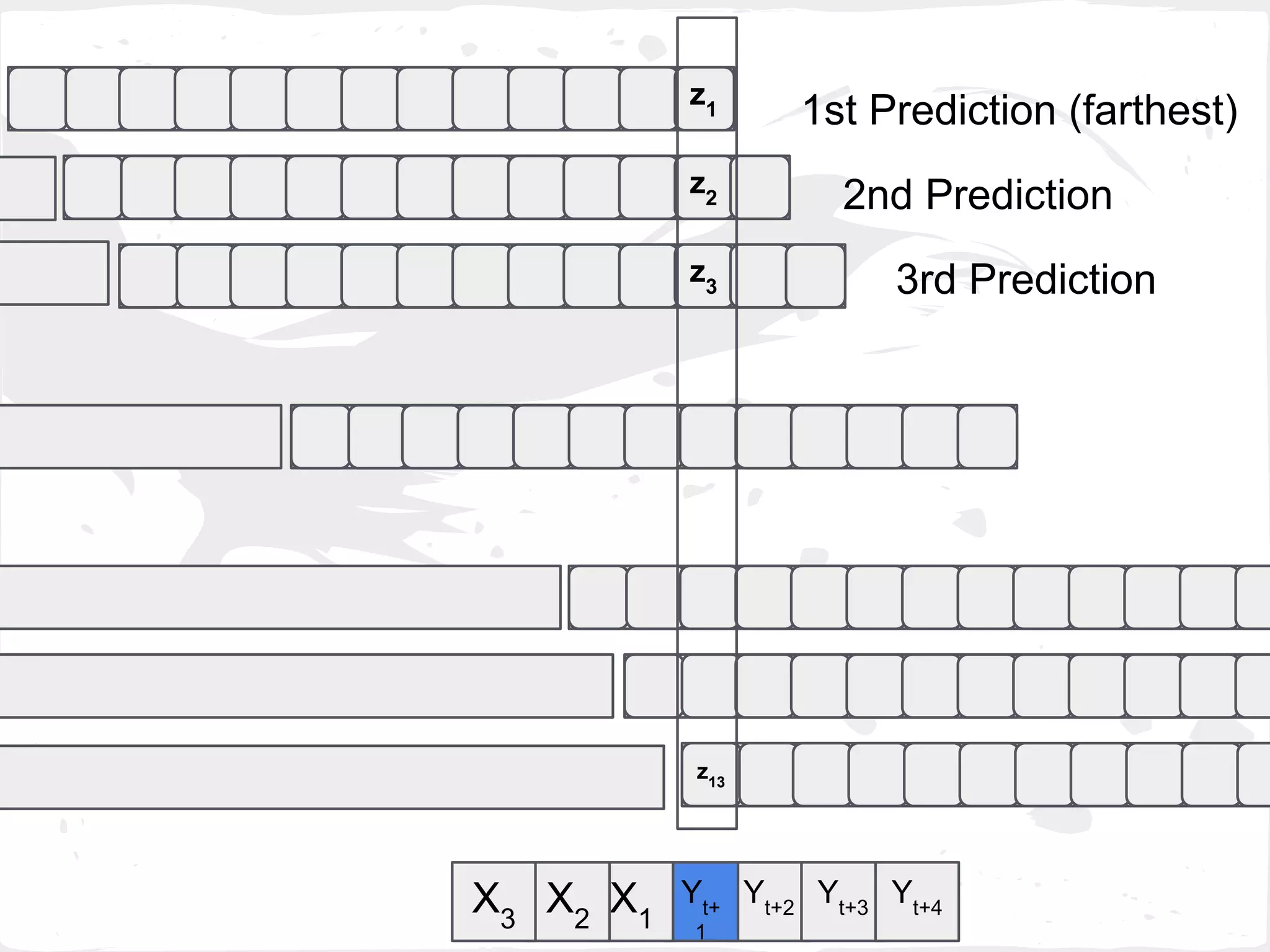

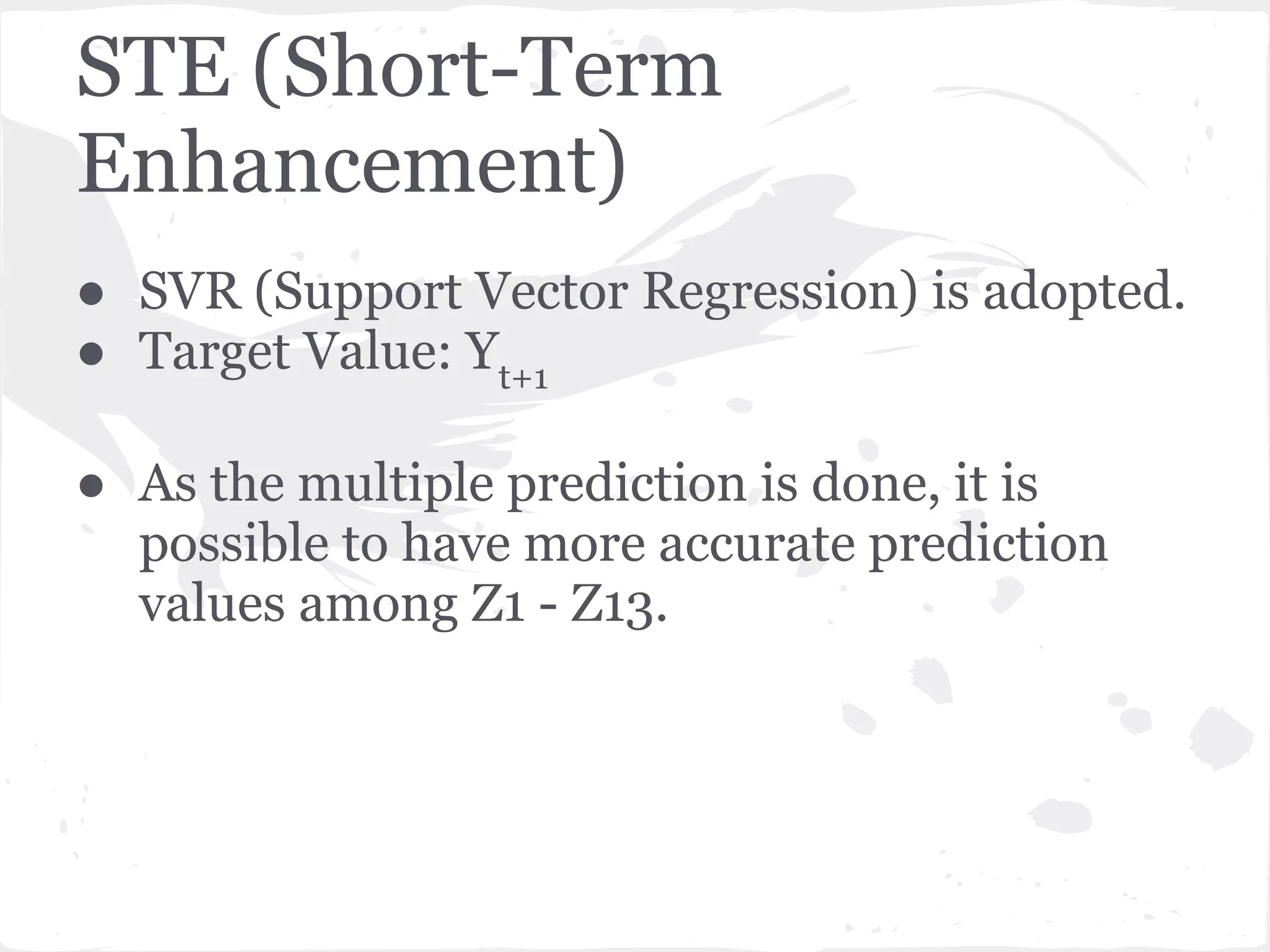

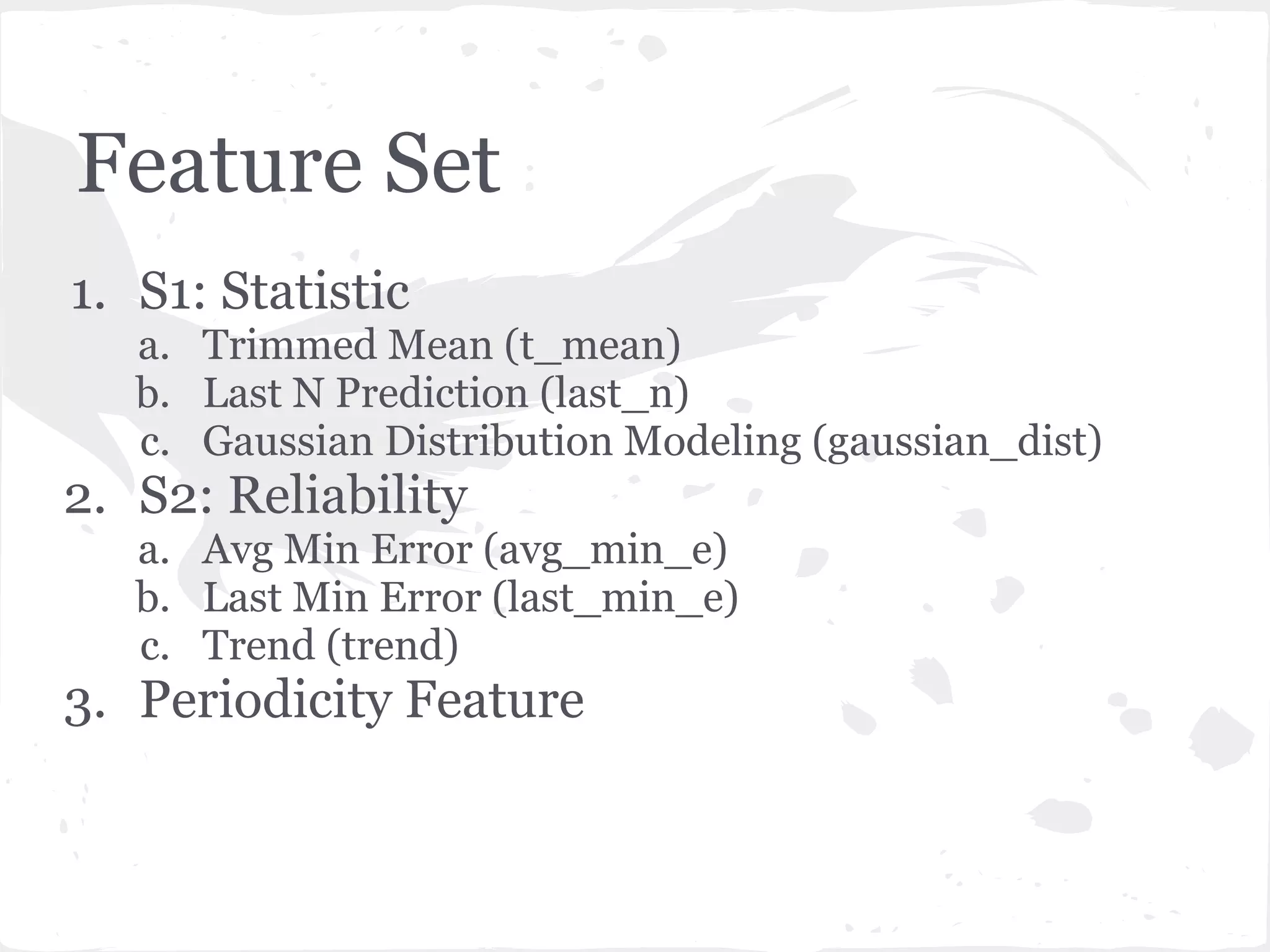

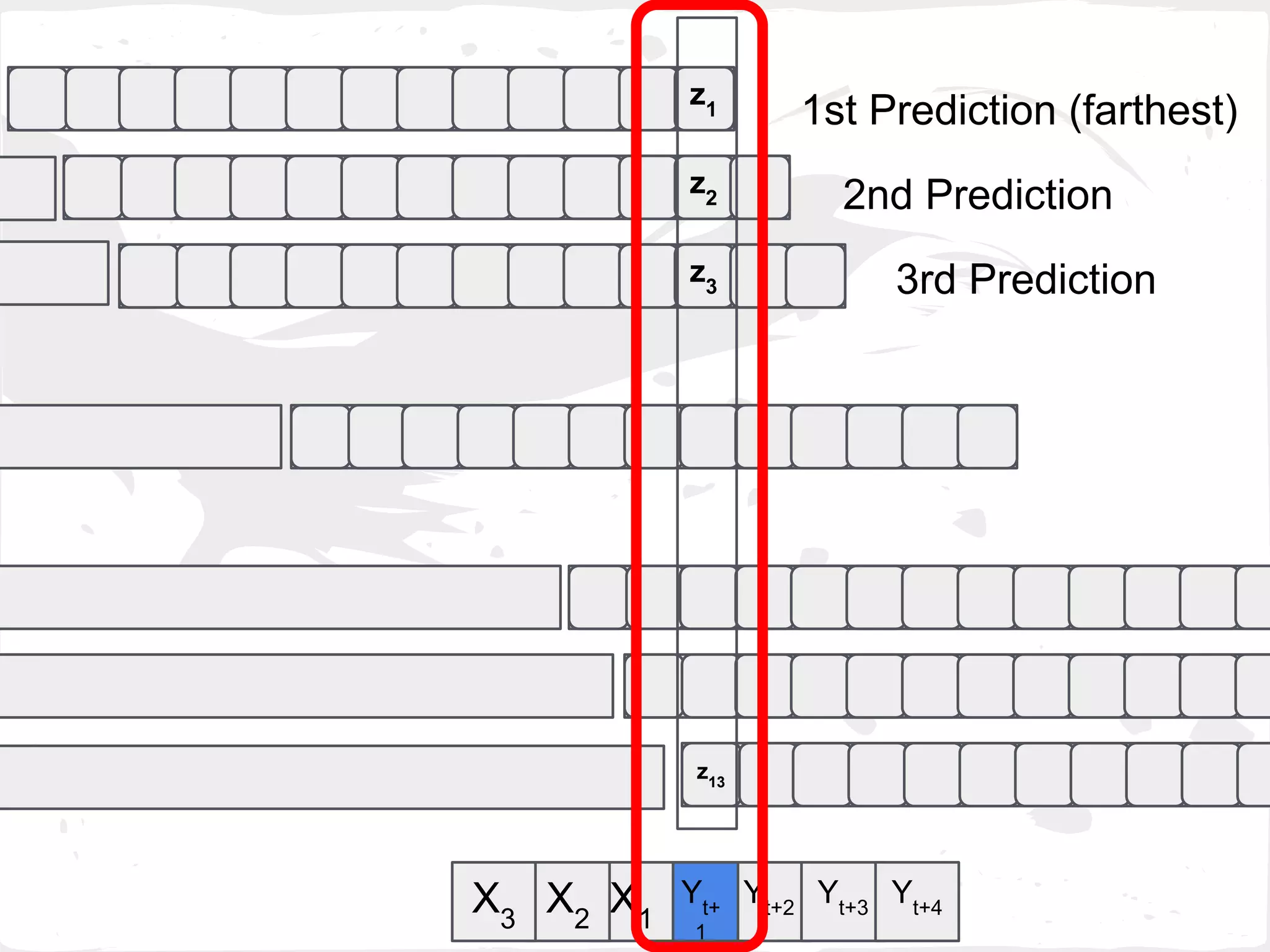

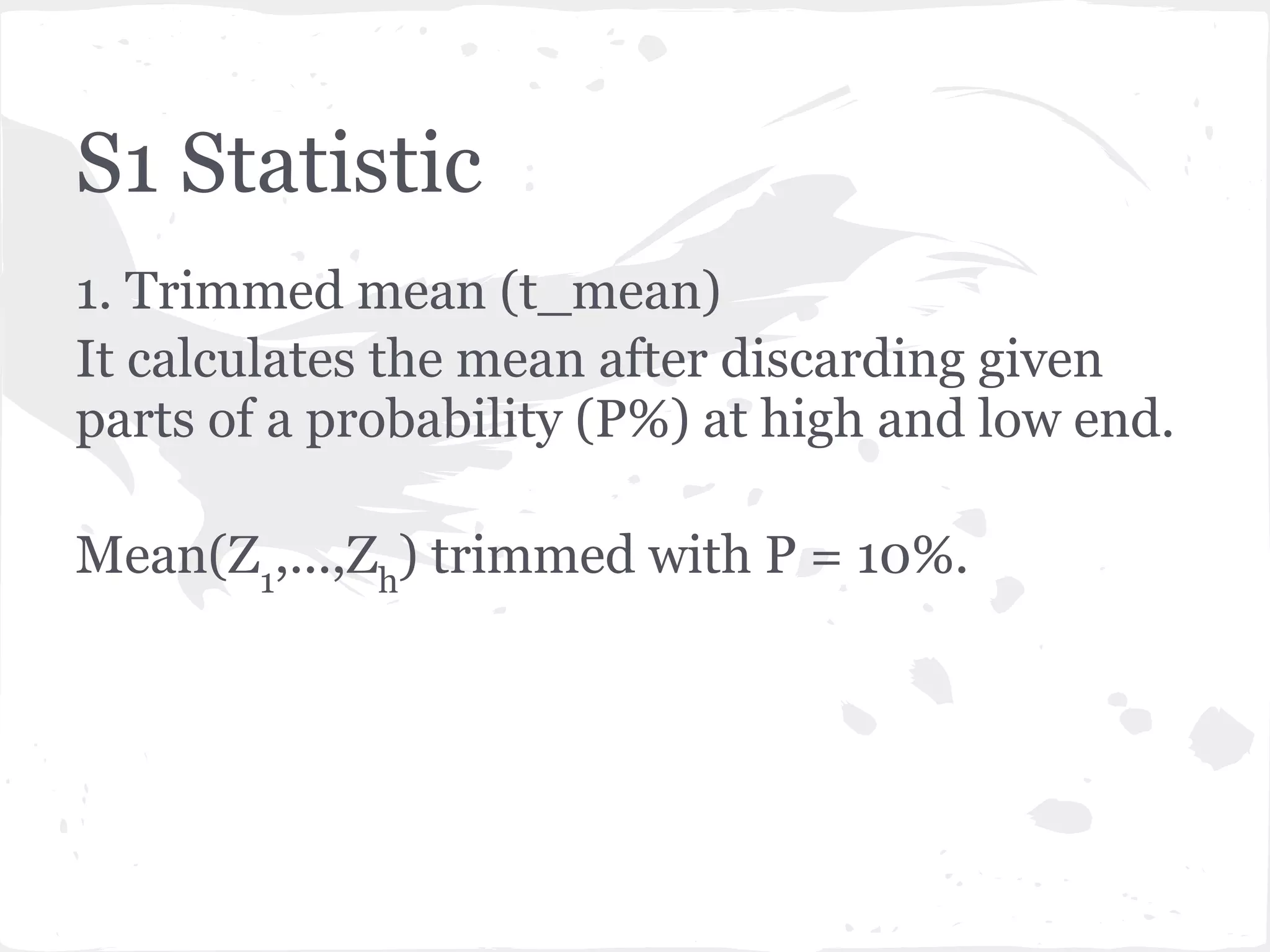

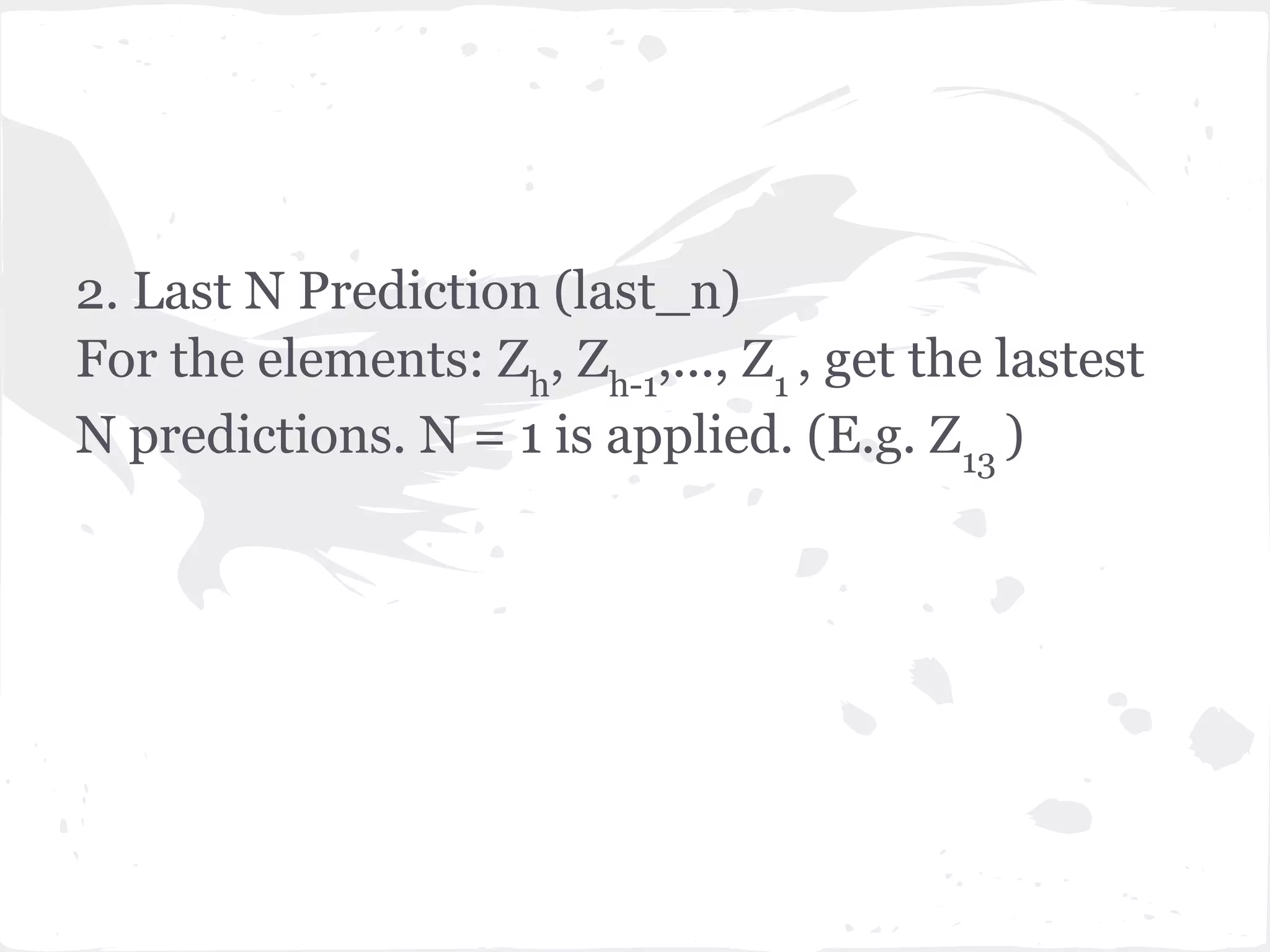

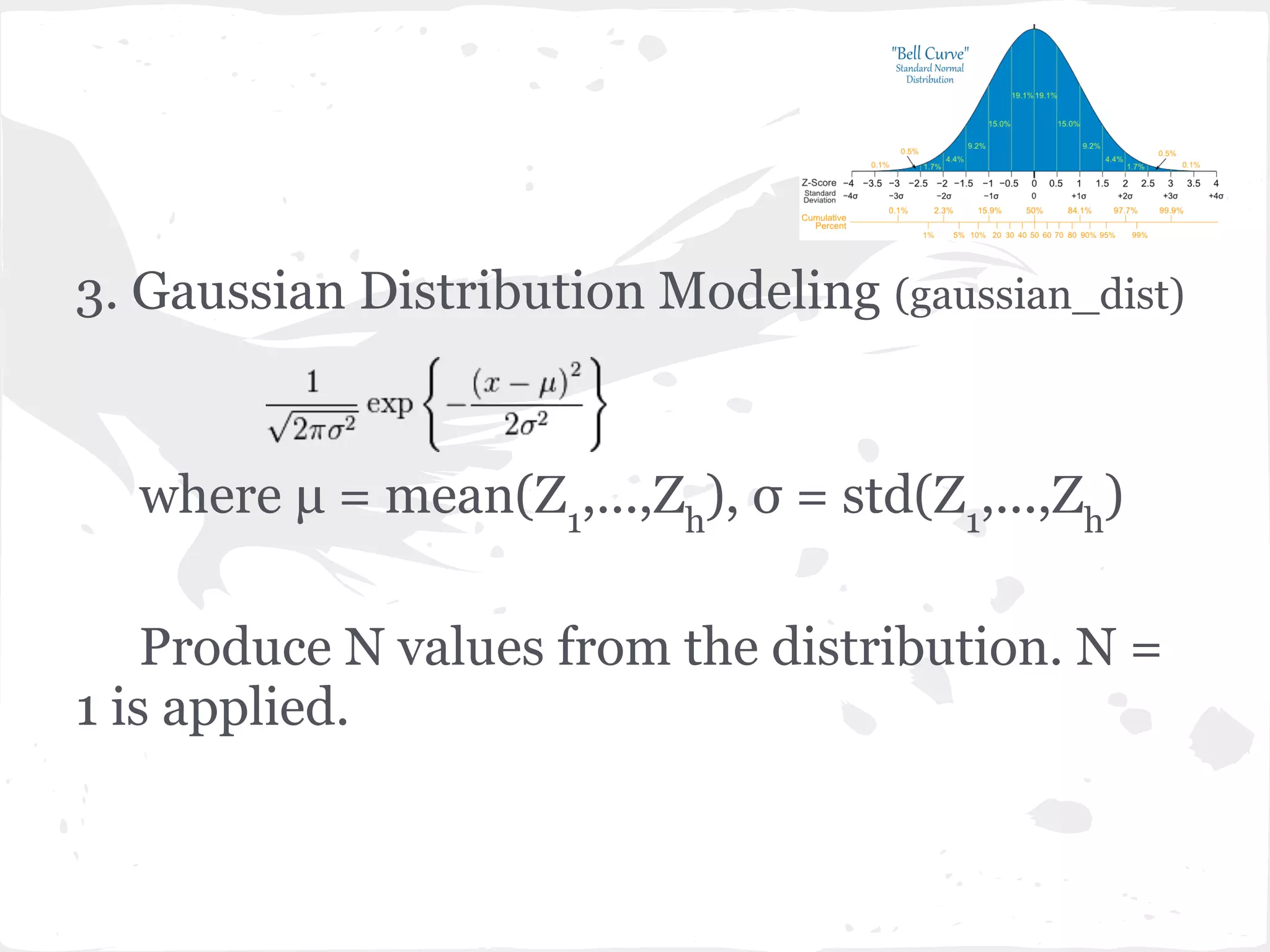

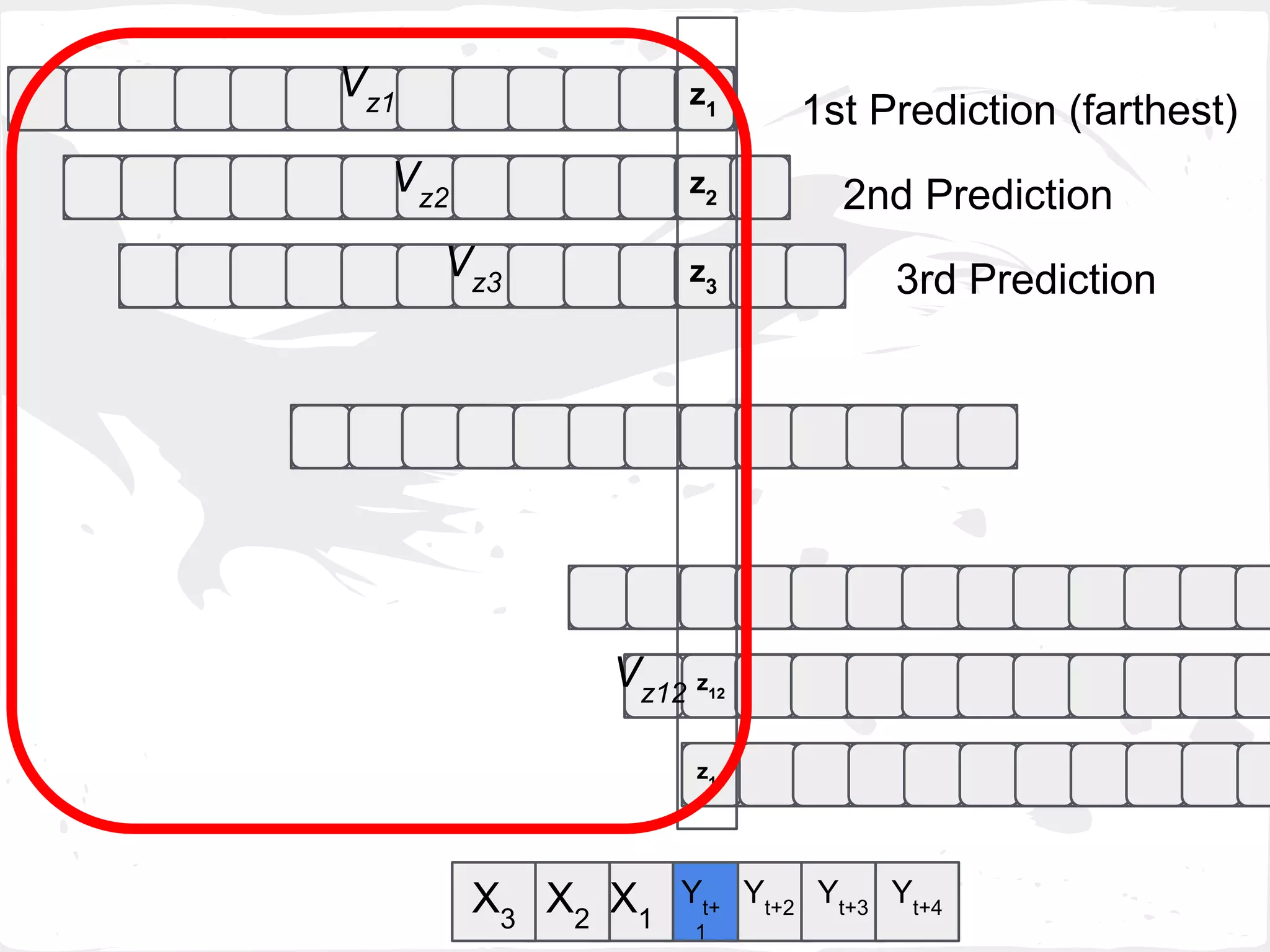

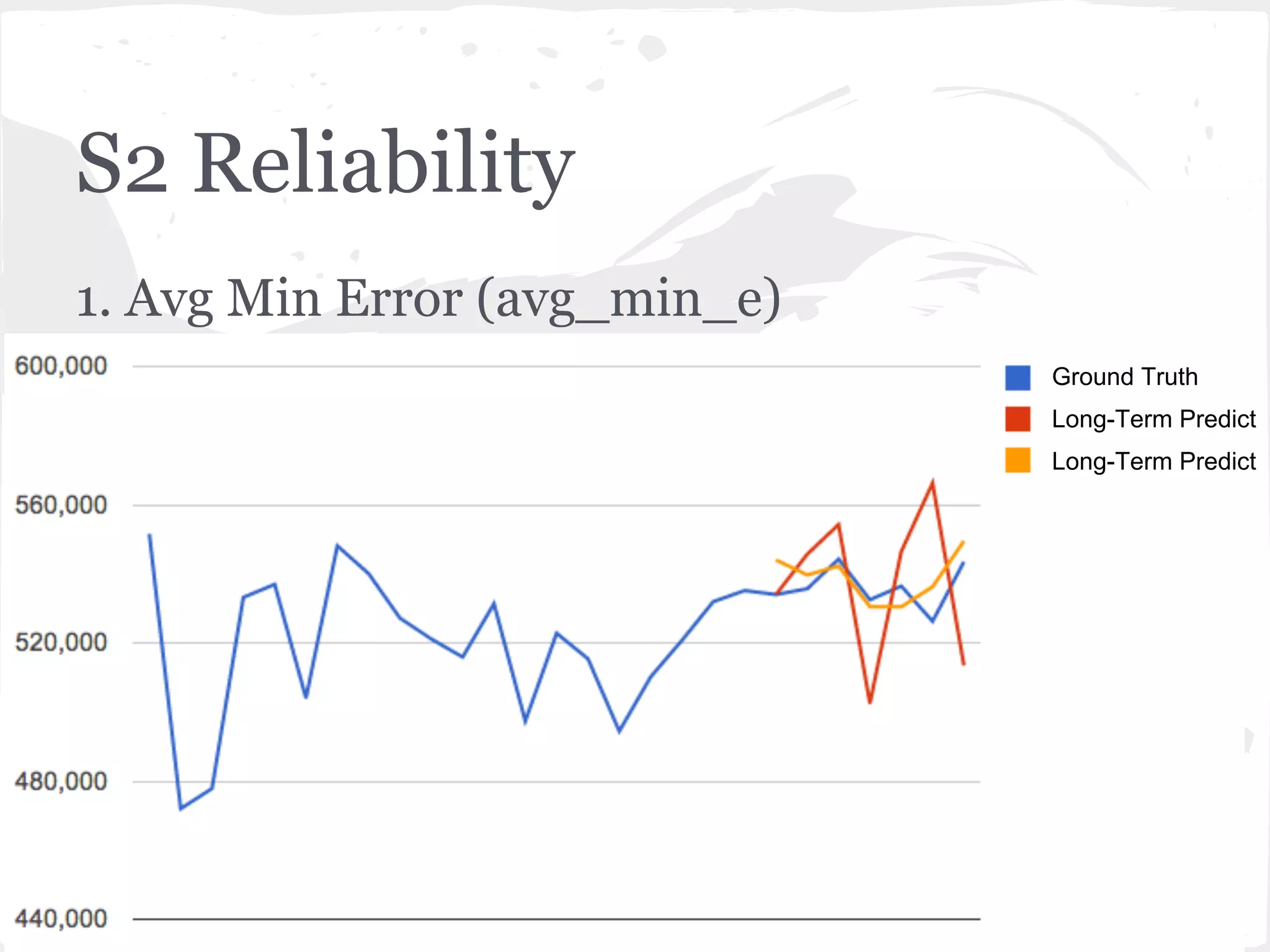

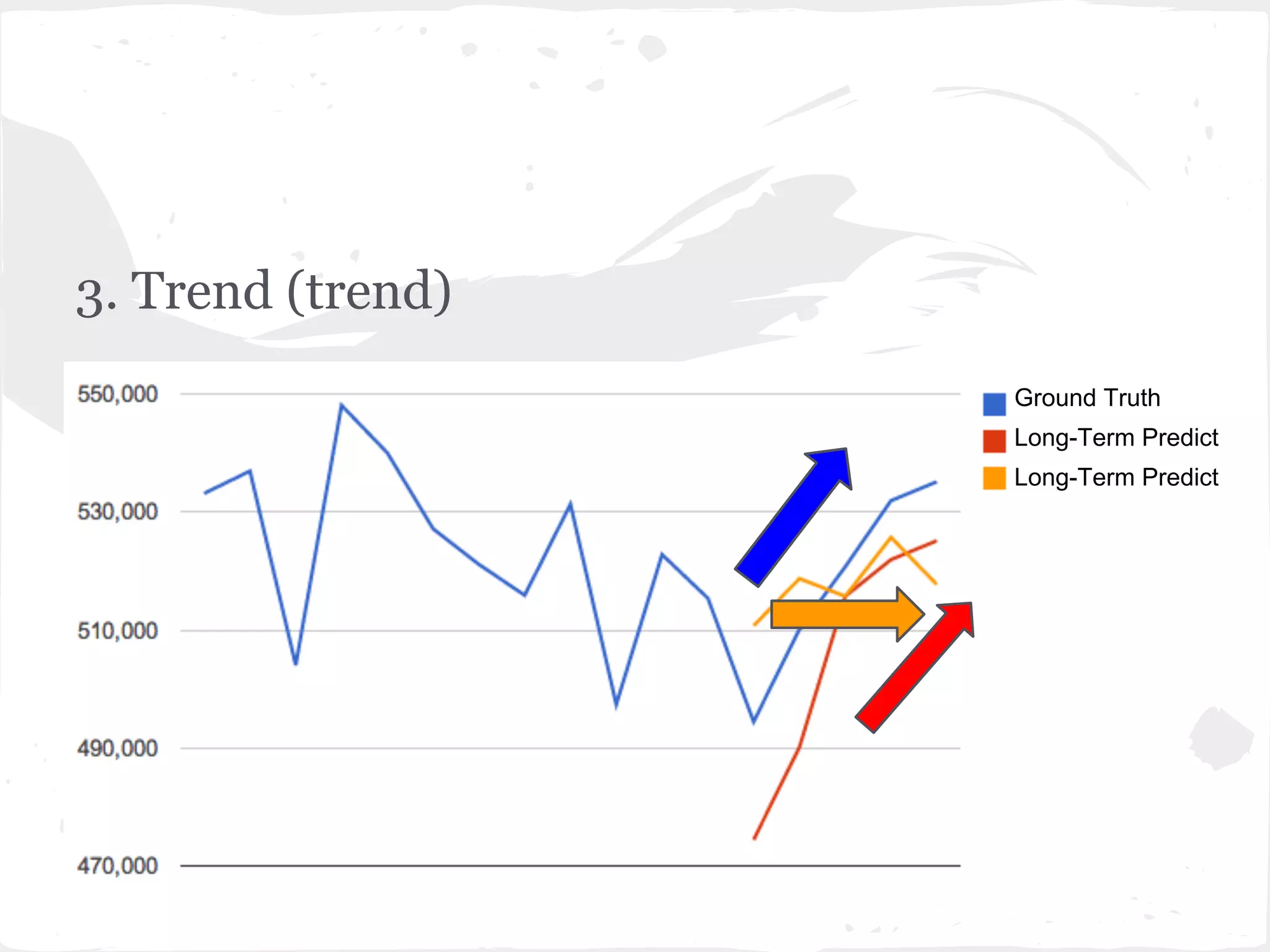

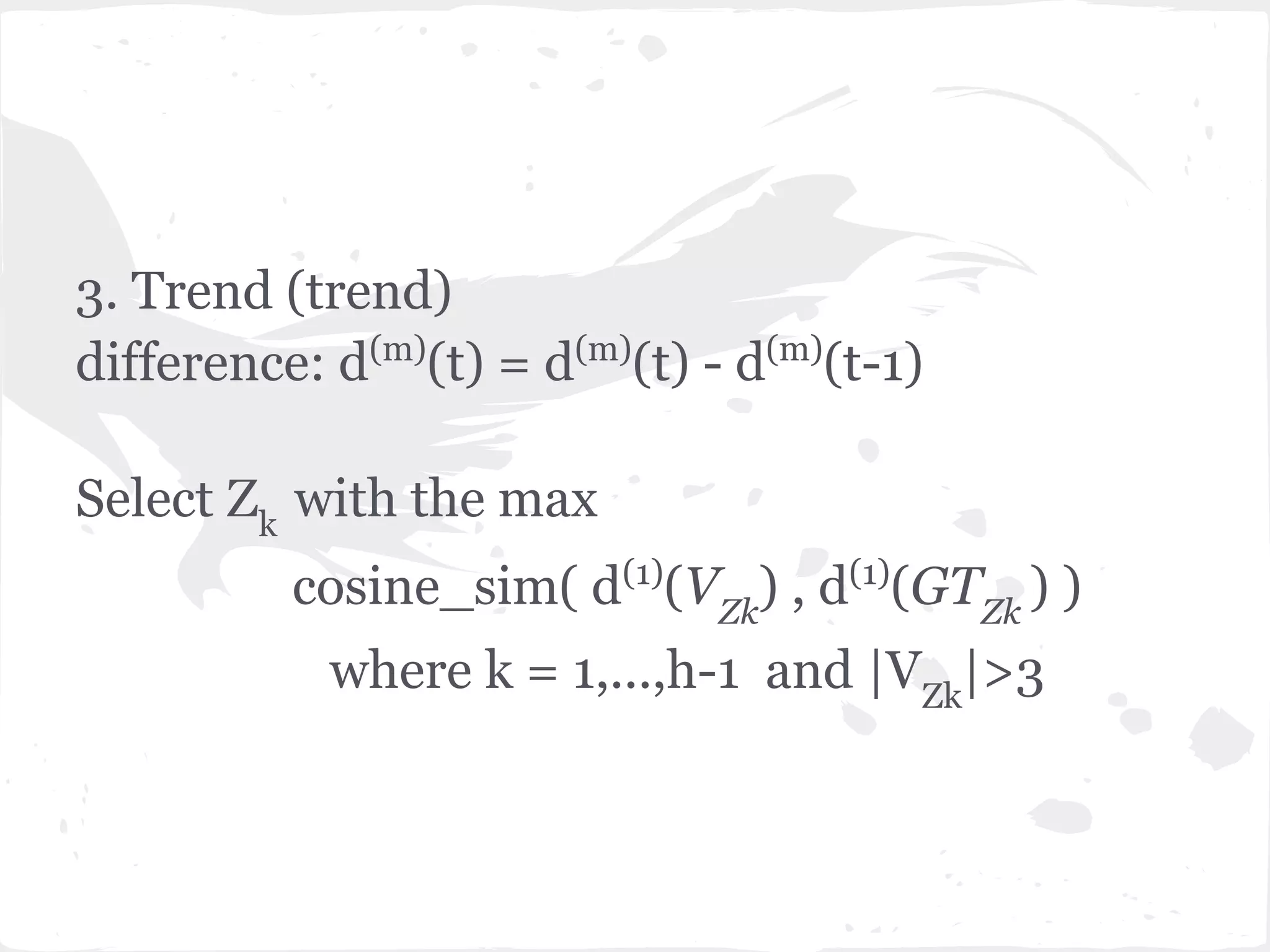

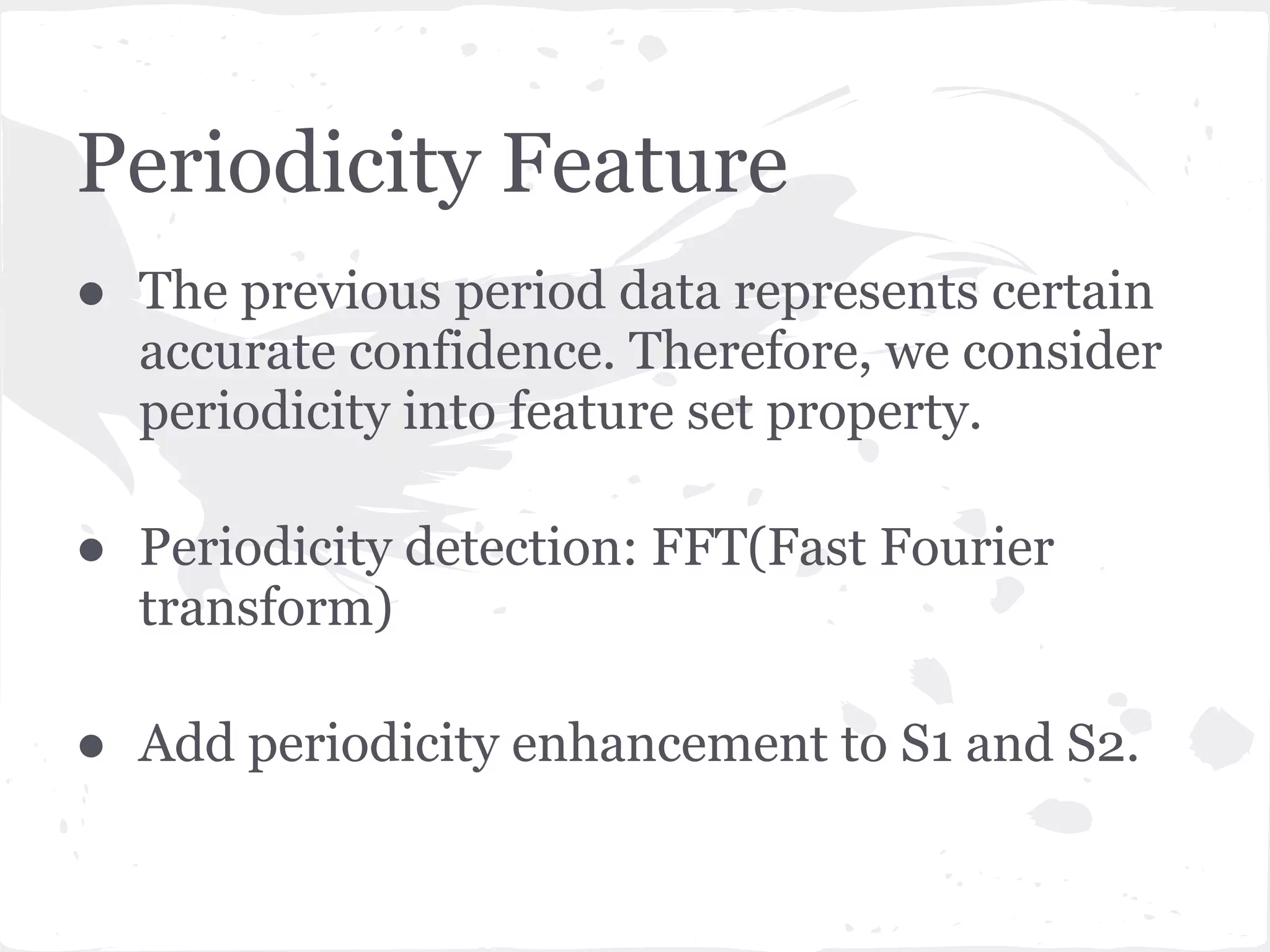

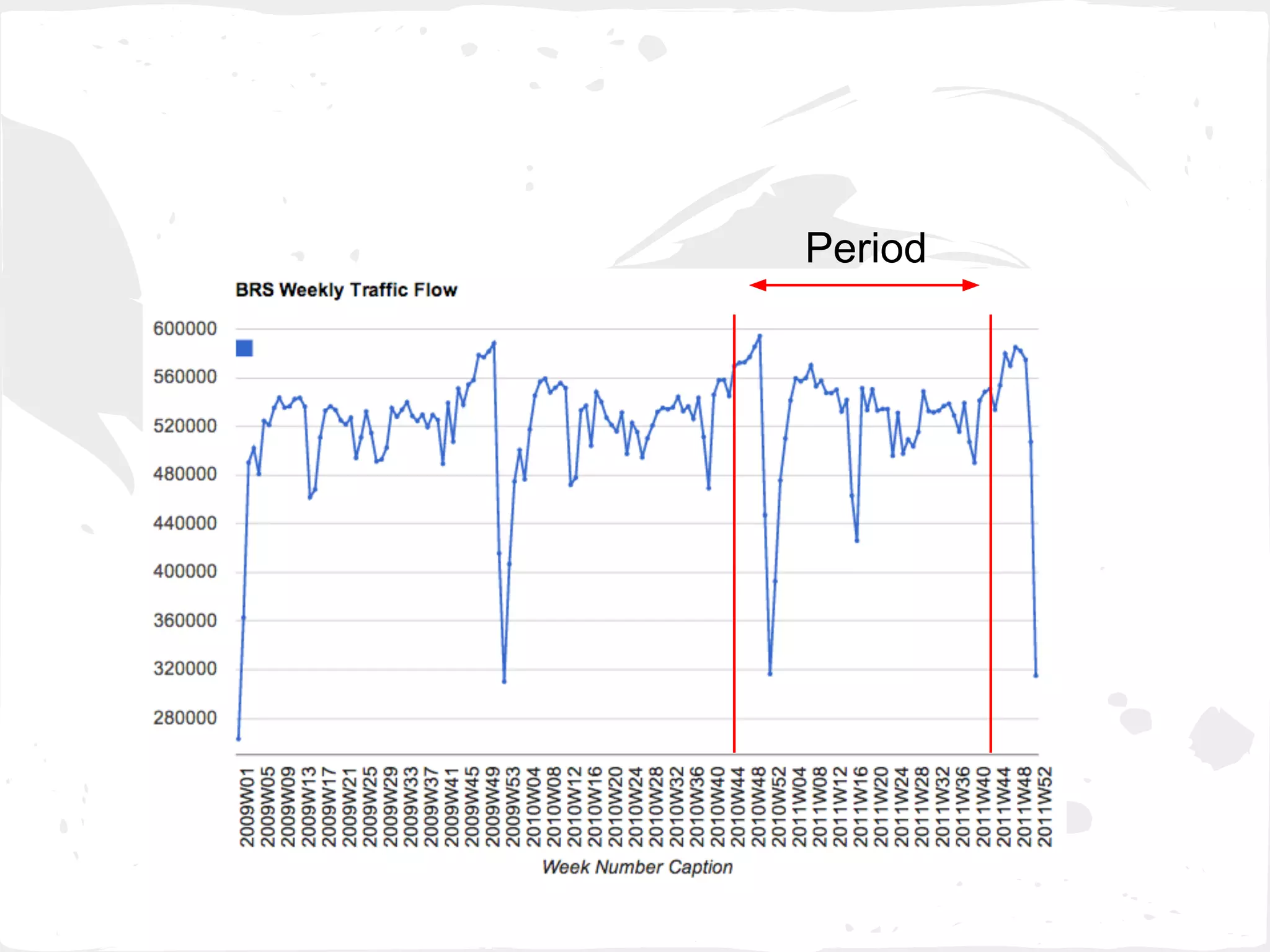

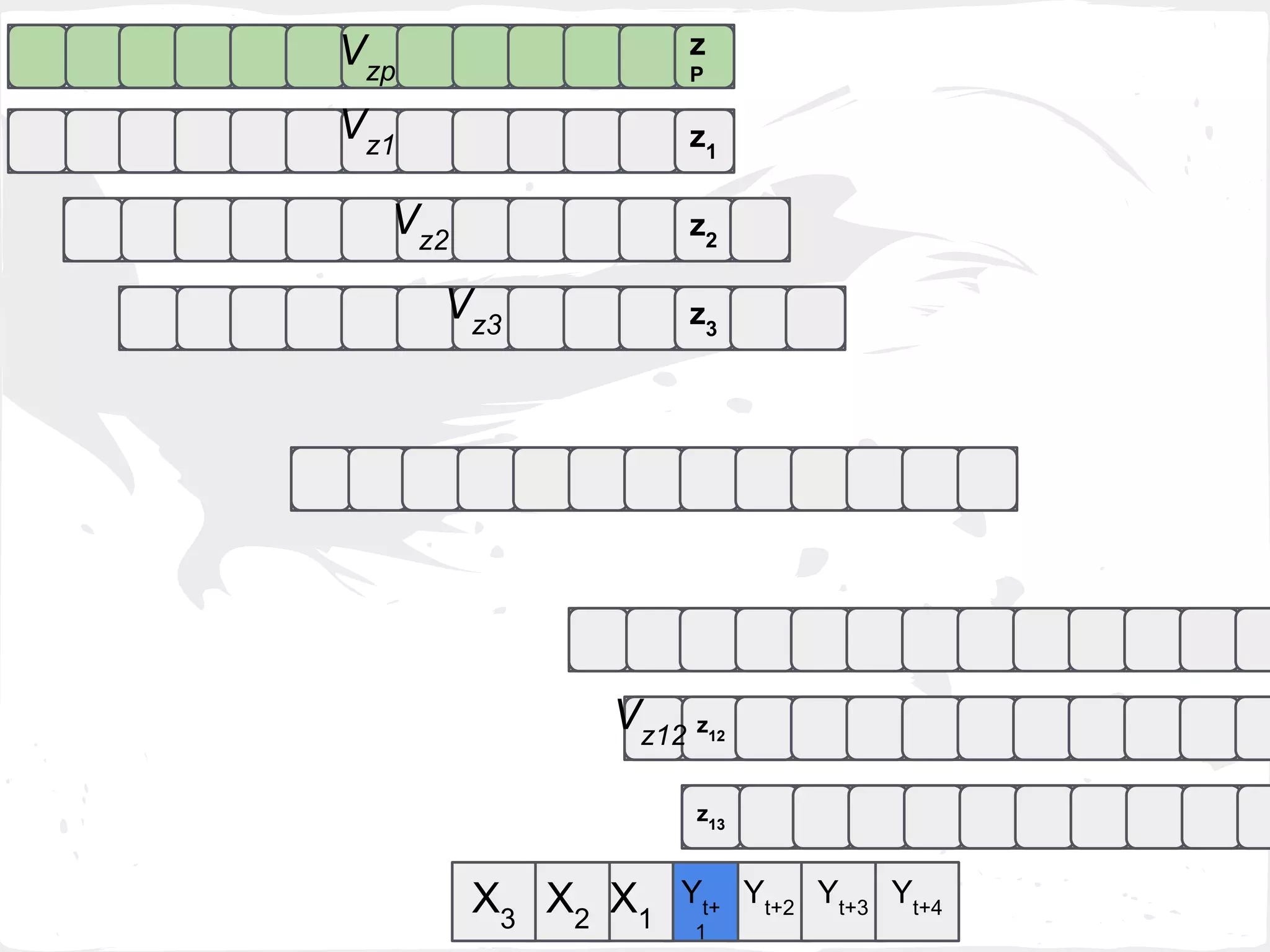

This document presents a general framework for enhancing prediction performance on time series data. It uses multiple predictions from a base method such as neural networks, ARIMA, or Holt-Winters to improve accuracy. Short-term enhancement applies support vector regression to statistic and reliability features of the multiple predictions to improve 1-step-ahead predictions. Long-term enhancement trains additional models on the short-term predictions to improve longer-horizon predictions. The framework is evaluated on traffic flow data with prediction horizons of 1 week and 13 weeks.
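As a rough illustration of the feature step in short-term enhancement, the sketch below summarises several 1-step-ahead predictions of the same time point into a small feature dictionary. The summary does not list which statistics the framework uses, so the choice of mean, median, standard deviation, minimum, and maximum is an assumption, as are the function name `statistic_features` and the sample values. The reliability features and the support vector regression step are not shown.

```python
from statistics import mean, median, pstdev


def statistic_features(predictions):
    """Summarise several 1-step-ahead predictions of one time point.

    The set of statistics is an assumption made for illustration;
    the source does not enumerate the features it uses.
    """
    if not predictions:
        raise ValueError("need at least one prediction")
    return {
        "mean": mean(predictions),
        "median": median(predictions),
        "std": pstdev(predictions),  # population standard deviation
        "min": min(predictions),
        "max": max(predictions),
    }


# Hypothetical traffic-flow values predicted for one time point
# by five runs of the same base method.
runs = [102.0, 98.0, 100.0, 101.0, 99.0]
features = statistic_features(runs)
```

In a full pipeline, one such feature vector per time point would form the input to a regressor (for example, scikit-learn's `SVR`), trained against the observed values.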

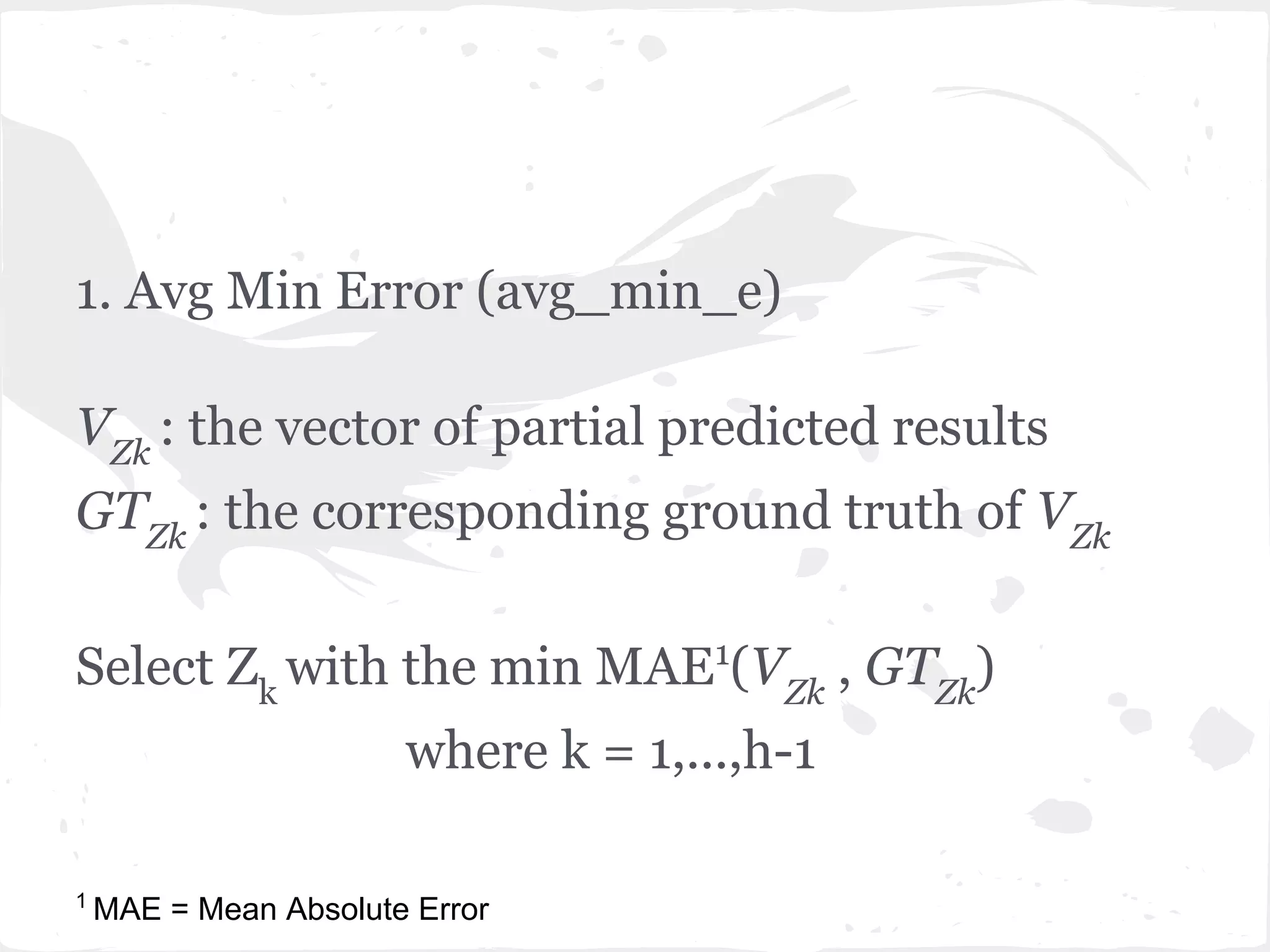

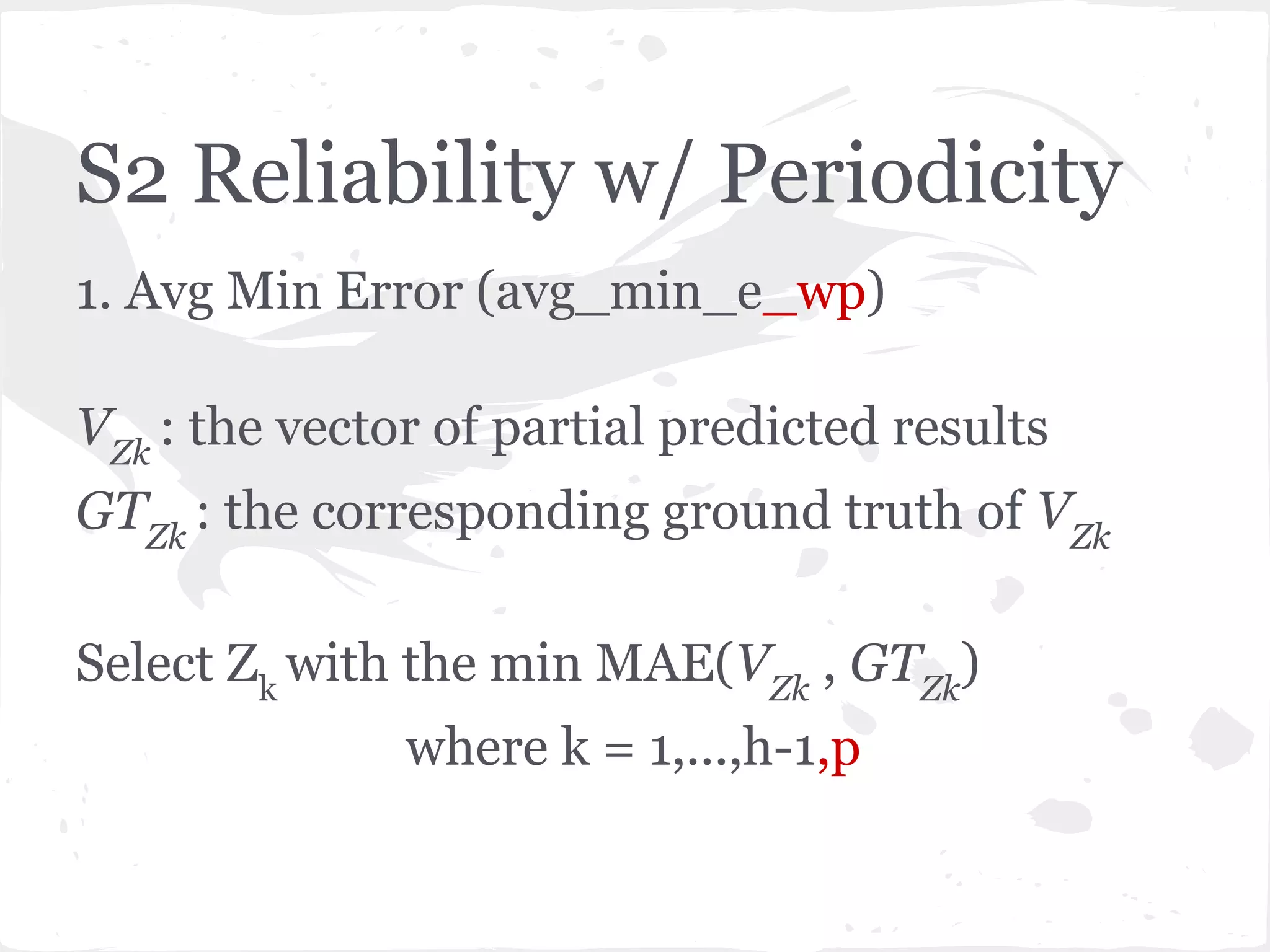

2. Last Min Error (last_min_e): select $Z_k$ with the minimum $\mathrm{MAE}(V_{Z_k}[1], GT_{Z_k}[1])$, where $k = 1, \dots, h-1$.

![Last Min Error (last_min_e)](https://image.slidesharecdn.com/slideprintfororal-150808091422-lva1-app6891/75/A-General-Framework-for-Enhancing-Prediction-Performance-on-Time-Series-Data-60-2048.jpg)

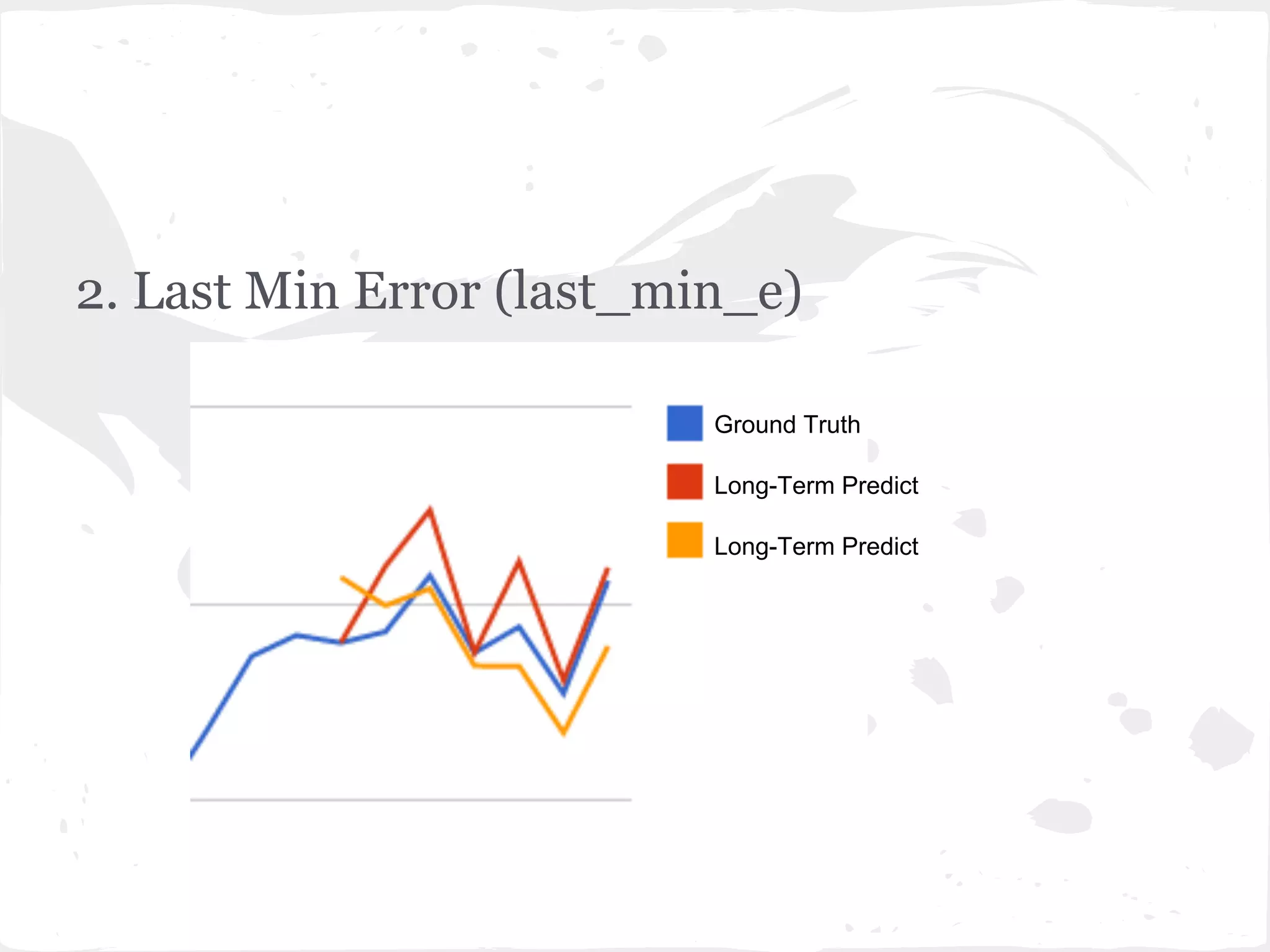

2. Last Min Error (last_min_e_wp): select $Z_k$ with the minimum $\mathrm{MAE}(V_{Z_k}[1], GT_{Z_k}[1])$, where $k = 1, \dots, h-1, p$.

![Last Min Error (last_min_e_wp)](https://image.slidesharecdn.com/slideprintfororal-150808091422-lva1-app6891/75/A-General-Framework-for-Enhancing-Prediction-Performance-on-Time-Series-Data-70-2048.jpg)
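The two Last Min Error rules differ only in the set of candidate indices, so they can be sketched with one function. This is a minimal sketch under assumptions, not the authors' implementation: the slides do not define how the predicted values and ground truth for each candidate $Z_k$ are stored, so the dictionaries keyed by `k`, the function names, and the optional `extra` argument standing in for the index $p$ are all hypothetical.

```python
def mae(predicted, actual):
    """Mean absolute error between two equally long sequences."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("sequences must be non-empty and of equal length")
    return sum(abs(p - a) for p, a in zip(predicted, actual)) / len(predicted)


def last_min_error(predictions, ground_truth, h, extra=None):
    """Return the index k whose predictions have the smallest MAE.

    Candidates are k = 1, ..., h-1. Passing `extra` adds one more
    candidate index, mirroring the last_min_e_wp variant (assumed
    reading of the extra index p on the slide).
    """
    candidates = list(range(1, h))
    if extra is not None and extra not in candidates:
        candidates.append(extra)
    return min(candidates, key=lambda k: mae(predictions[k], ground_truth[k]))


# Hypothetical values for candidates k = 1, 2, 3 and an extra index p = 7.
predictions = {
    1: [10.0, 13.0],
    2: [11.0, 11.0],
    3: [9.0, 14.0],
    7: [10.5, 11.0],
}
ground_truth = {k: [10.0, 11.0] for k in predictions}

best = last_min_error(predictions, ground_truth, h=4)
best_wp = last_min_error(predictions, ground_truth, h=4, extra=7)
```

With these sample values, the plain rule picks candidate 2, while the variant with the extra index picks candidate 7 because its error is lower still.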