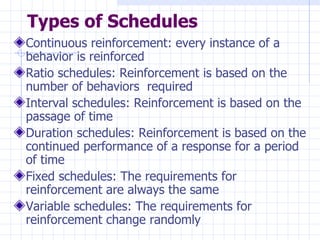

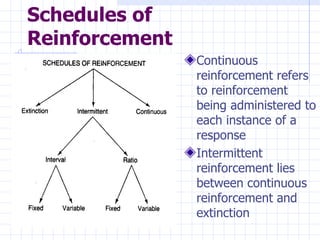

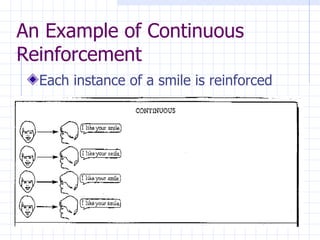

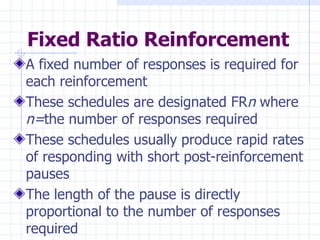

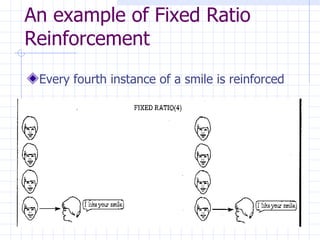

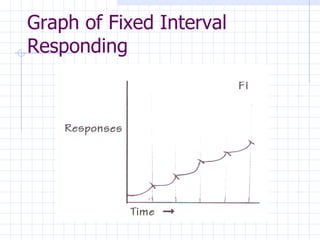

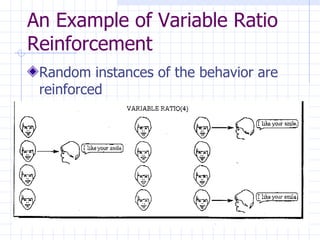

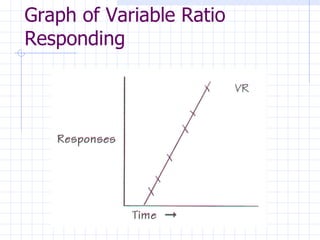

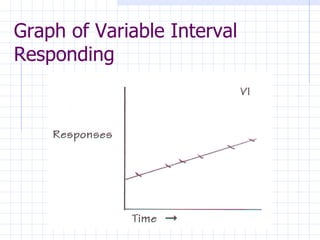

Schedules of reinforcement determine when a behavior is reinforced. A continuous schedule reinforces every occurrence of the behavior, while an intermittent schedule reinforces it only some of the time. Intermittent schedules are based either on the number of responses (ratio) or on elapsed time (interval), and each can be fixed or variable. Intermittent reinforcement can build persistent behavior that resists extinction while using fewer reinforcers, and behavior reinforced on variable schedules tends to be the most resistant to extinction.
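
As a rough illustration of how a schedule decides which responses earn a reinforcer, here is a minimal Python sketch. The function names, the uniform draw used for the variable-ratio schedule, and the timestamp representation for the interval schedule are all assumptions made for this example, not part of the source material.

```python
import random


def continuous(n_responses):
    """Continuous schedule: every response is reinforced."""
    return [True] * n_responses


def fixed_ratio(n_responses, ratio):
    """Fixed-ratio schedule: every `ratio`-th response is reinforced."""
    return [(i + 1) % ratio == 0 for i in range(n_responses)]


def variable_ratio(n_responses, mean_ratio, rng):
    """Variable-ratio schedule: a reinforcer follows a random number of
    responses that averages `mean_ratio` (assumed uniform on 1..2*mean-1)."""
    outcomes = []
    remaining = rng.randint(1, 2 * mean_ratio - 1)
    for _ in range(n_responses):
        remaining -= 1
        if remaining == 0:
            outcomes.append(True)
            remaining = rng.randint(1, 2 * mean_ratio - 1)
        else:
            outcomes.append(False)
    return outcomes


def fixed_interval(response_times, interval):
    """Fixed-interval schedule: the first response made at least `interval`
    time units after the last reinforcer is reinforced."""
    outcomes = []
    last_reinforced = 0.0
    for t in response_times:
        if t - last_reinforced >= interval:
            outcomes.append(True)
            last_reinforced = t
        else:
            outcomes.append(False)
    return outcomes


if __name__ == "__main__":
    rng = random.Random(0)  # seeded so repeated runs give the same draws
    print("continuous:    ", sum(continuous(20)), "reinforcers / 20 responses")
    print("fixed ratio 5: ", sum(fixed_ratio(20, 5)), "reinforcers / 20 responses")
    print("variable ratio:", sum(variable_ratio(20, 5, rng)), "reinforcers / 20 responses")
    print("fixed interval:", fixed_interval([1, 2, 3, 4, 5, 6], 3))
```

Each function returns one boolean per response, so summing the list gives the number of reinforcers delivered. Comparing the continuous and ratio results shows how an intermittent schedule uses fewer reinforcers for the same number of responses. The sketch models only reinforcer delivery; it does not model extinction or how the learner's behavior changes.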