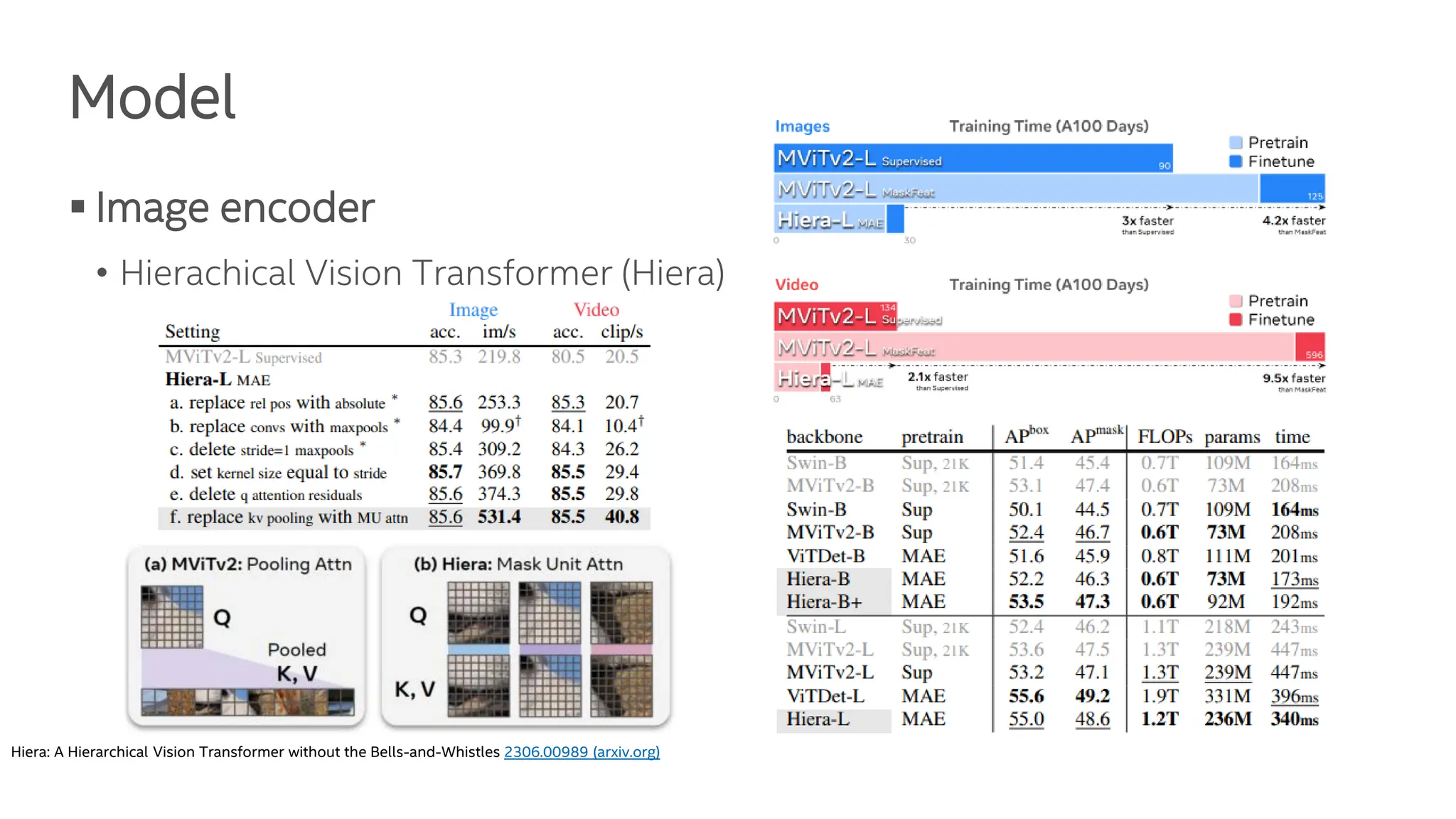

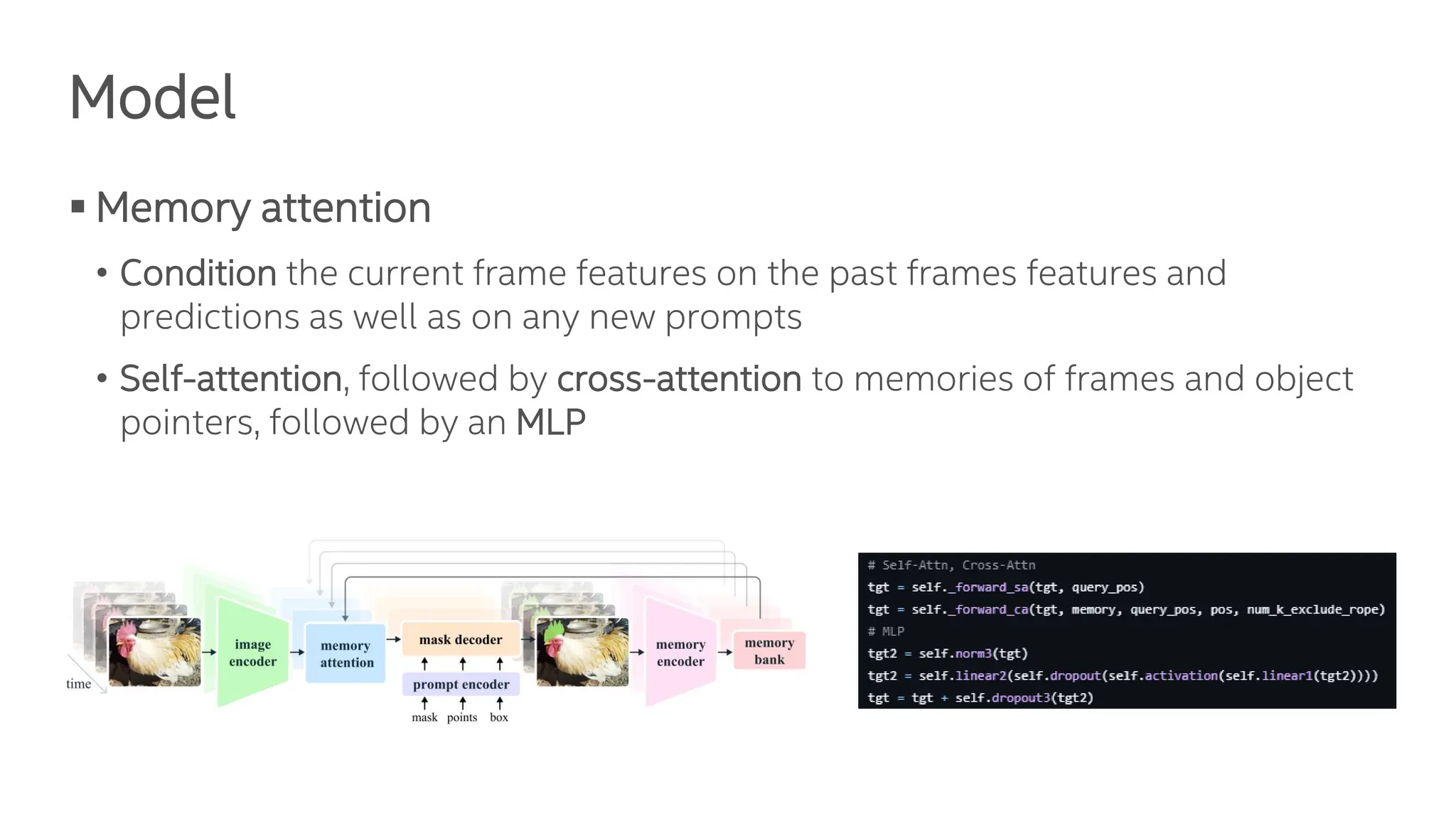

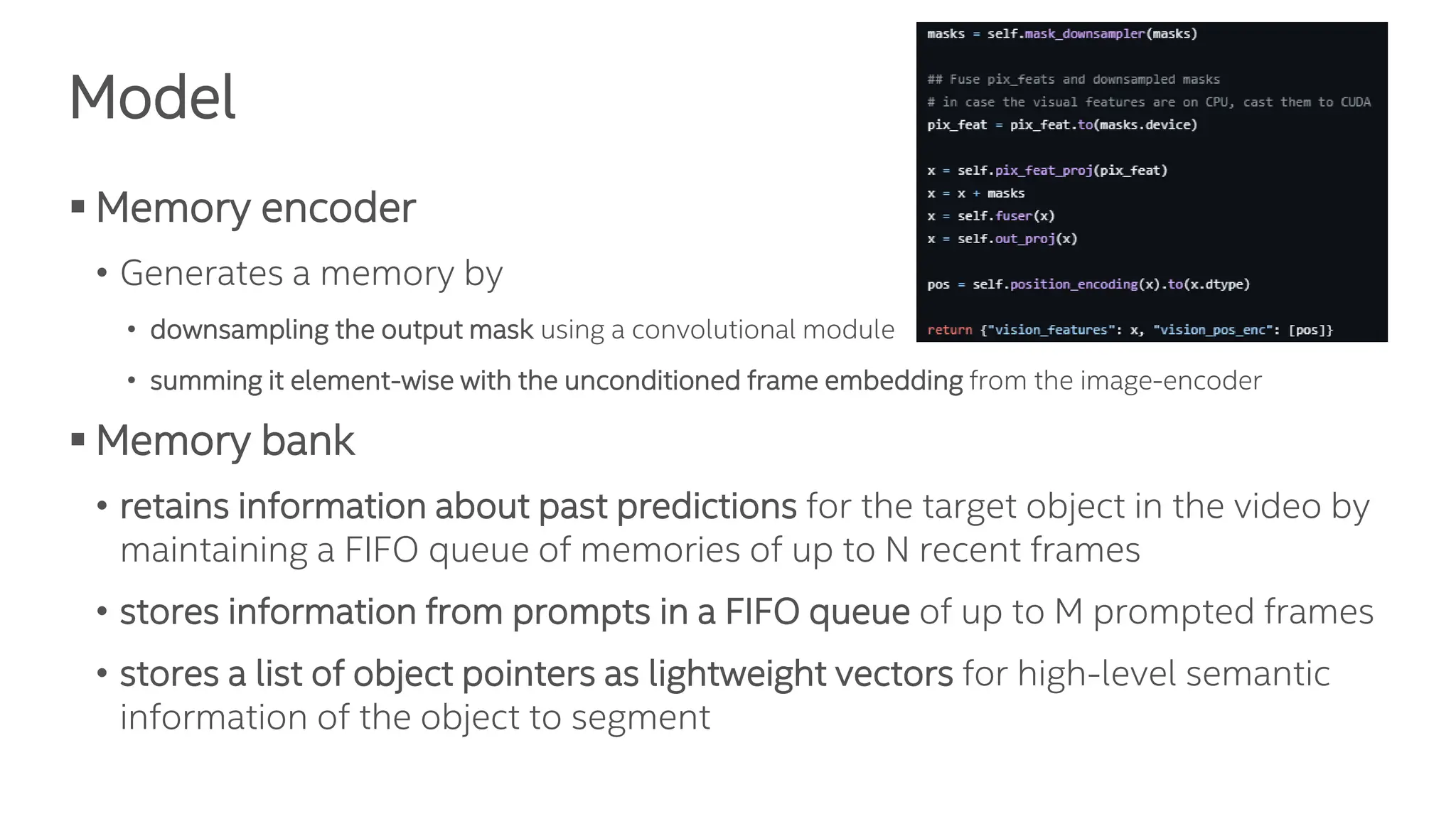

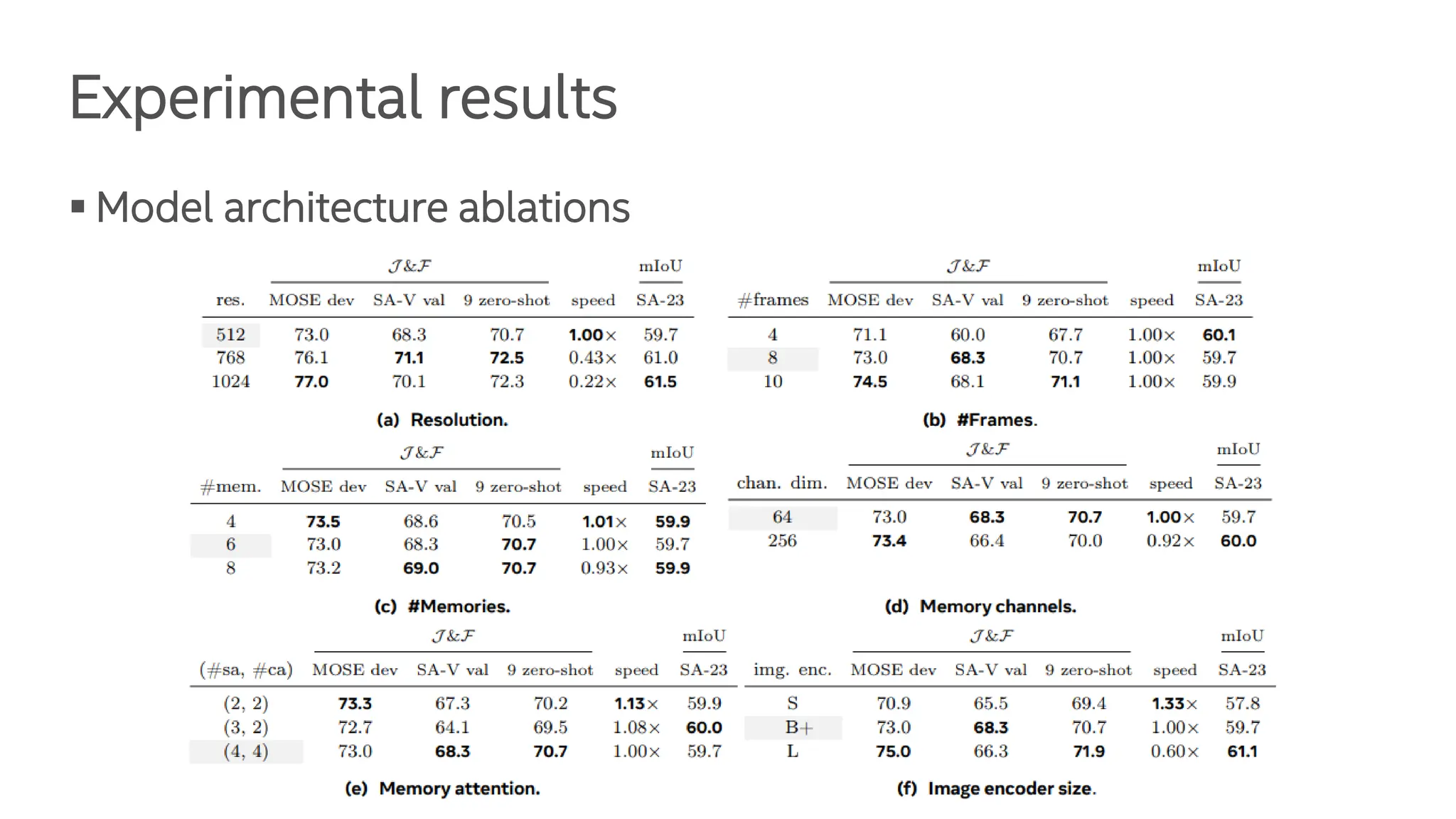

The document presents the Segment Anything Model 2 (SAM 2), a unified framework for promptable visual segmentation in both videos and images. It details the architecture, including memory attention, the various image encoders evaluated, and a memory bank that retains information from past predictions. It also covers the training methodology and experimental results that highlight SAM 2's performance on tasks such as promptable video segmentation.
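To make the role of the memory bank more concrete, here is a minimal sketch. It assumes the bank is organized as two bounded first-in-first-out queues: one for recent frames and one for frames that received user prompts. The class names, queue sizes, and string "features" are hypothetical placeholders. This is not the SAM 2 implementation, and no real memory encoder is involved.

```python
from collections import deque
from dataclasses import dataclass
from typing import Any, List


@dataclass
class FrameMemory:
    frame_idx: int
    features: Any  # hypothetical stand-in for an encoded memory of one frame


class MemoryBank:
    """Hypothetical sketch: two bounded FIFO queues, one for recent
    unprompted frames and one for frames that received user prompts."""

    def __init__(self, max_recent: int = 3, max_prompted: int = 2) -> None:
        # deque(maxlen=...) drops its oldest entry when a new one is appended
        # to a full queue, which gives first-in-first-out eviction.
        self.recent: deque = deque(maxlen=max_recent)
        self.prompted: deque = deque(maxlen=max_prompted)

    def add(self, memory: FrameMemory, was_prompted: bool) -> None:
        # Assumption: a frame is stored in exactly one of the two queues.
        if was_prompted:
            self.prompted.append(memory)
        else:
            self.recent.append(memory)

    def memories(self) -> List[FrameMemory]:
        # Memories that a memory-attention step would attend to for the
        # current frame: prompted frames first, then recent frames.
        return list(self.prompted) + list(self.recent)


bank = MemoryBank(max_recent=3, max_prompted=2)
bank.add(FrameMemory(0, "f0"), was_prompted=True)
for t in range(1, 6):
    bank.add(FrameMemory(t, f"f{t}"), was_prompted=False)
print([m.frame_idx for m in bank.memories()])  # [0, 3, 4, 5]
```

Keeping prompted frames in a separate queue means user-corrected frames are not evicted by a long run of unprompted frames. Bounding both queues keeps the amount of memory attended to per frame constant, regardless of video length.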