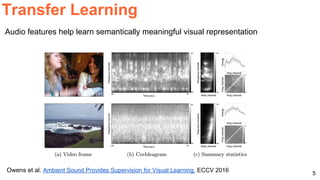

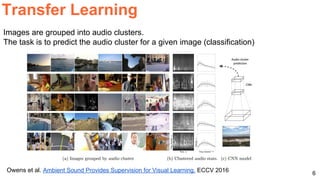

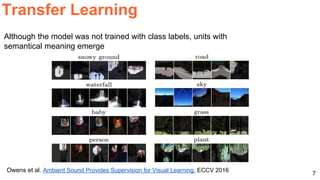

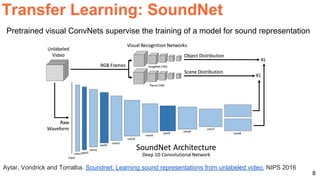

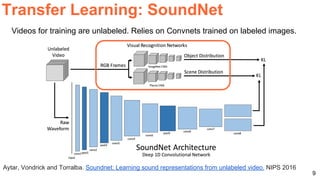

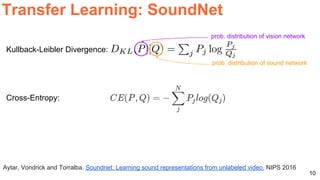

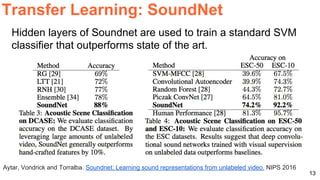

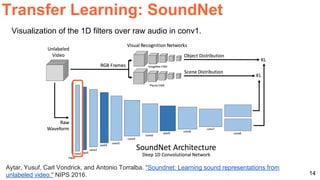

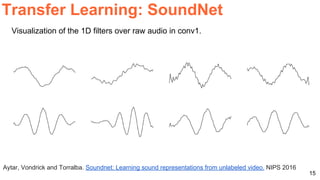

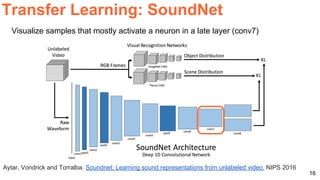

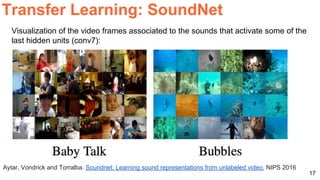

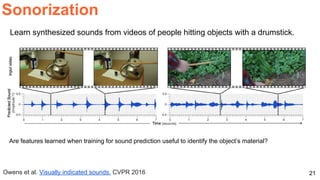

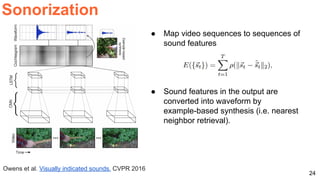

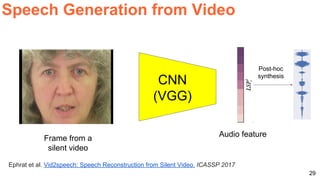

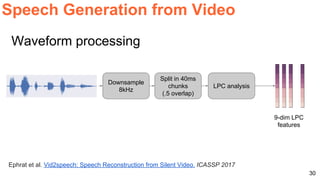

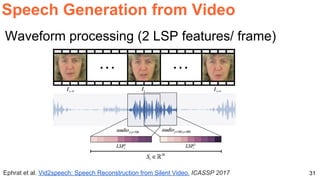

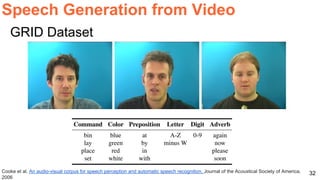

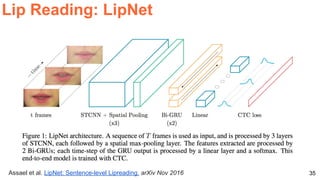

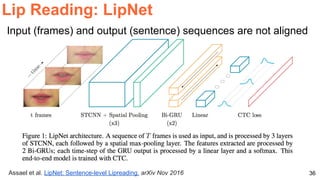

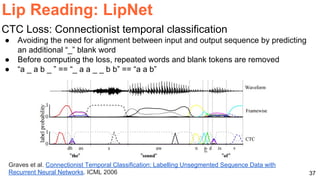

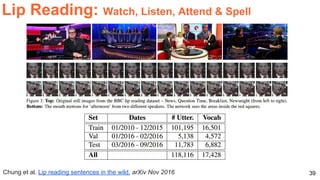

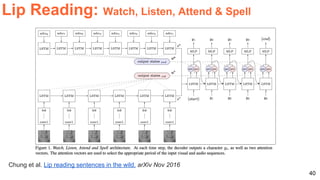

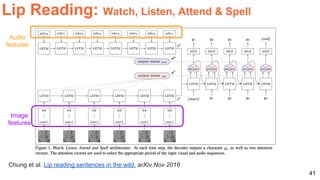

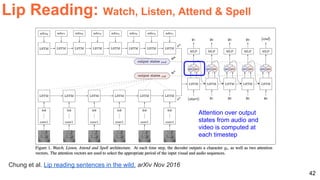

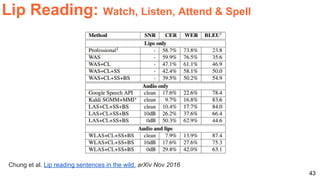

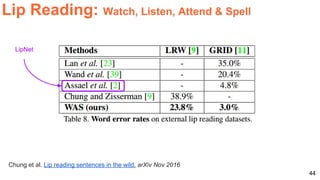

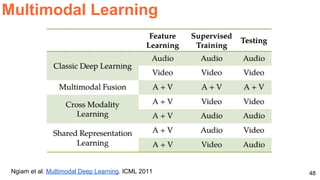

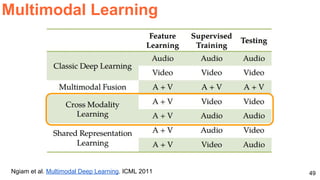

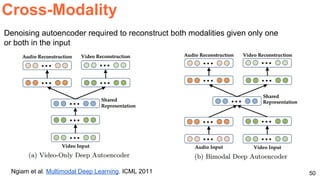

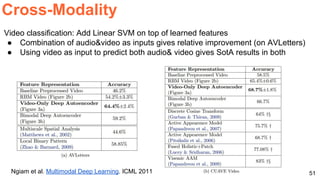

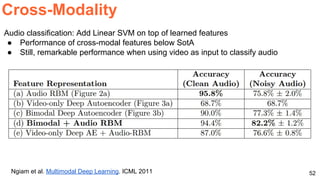

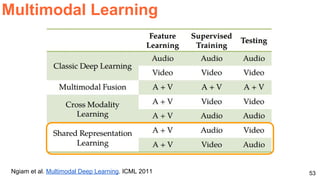

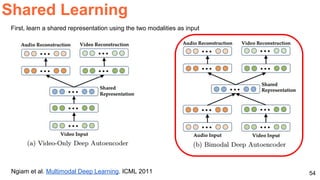

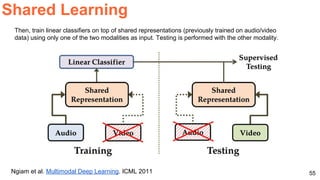

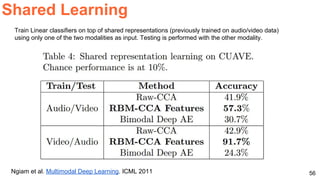

The document surveys techniques in audio-visual learning, focusing on transfer learning, sonorization, speech generation, and lip reading. It references several studies, including SoundNet, which learns sound representations from unlabeled video, and Vid2Speech, which reconstructs speech from silent video of a speaker. It also highlights multimodal learning approaches that combine both modalities to improve audio and video classification.