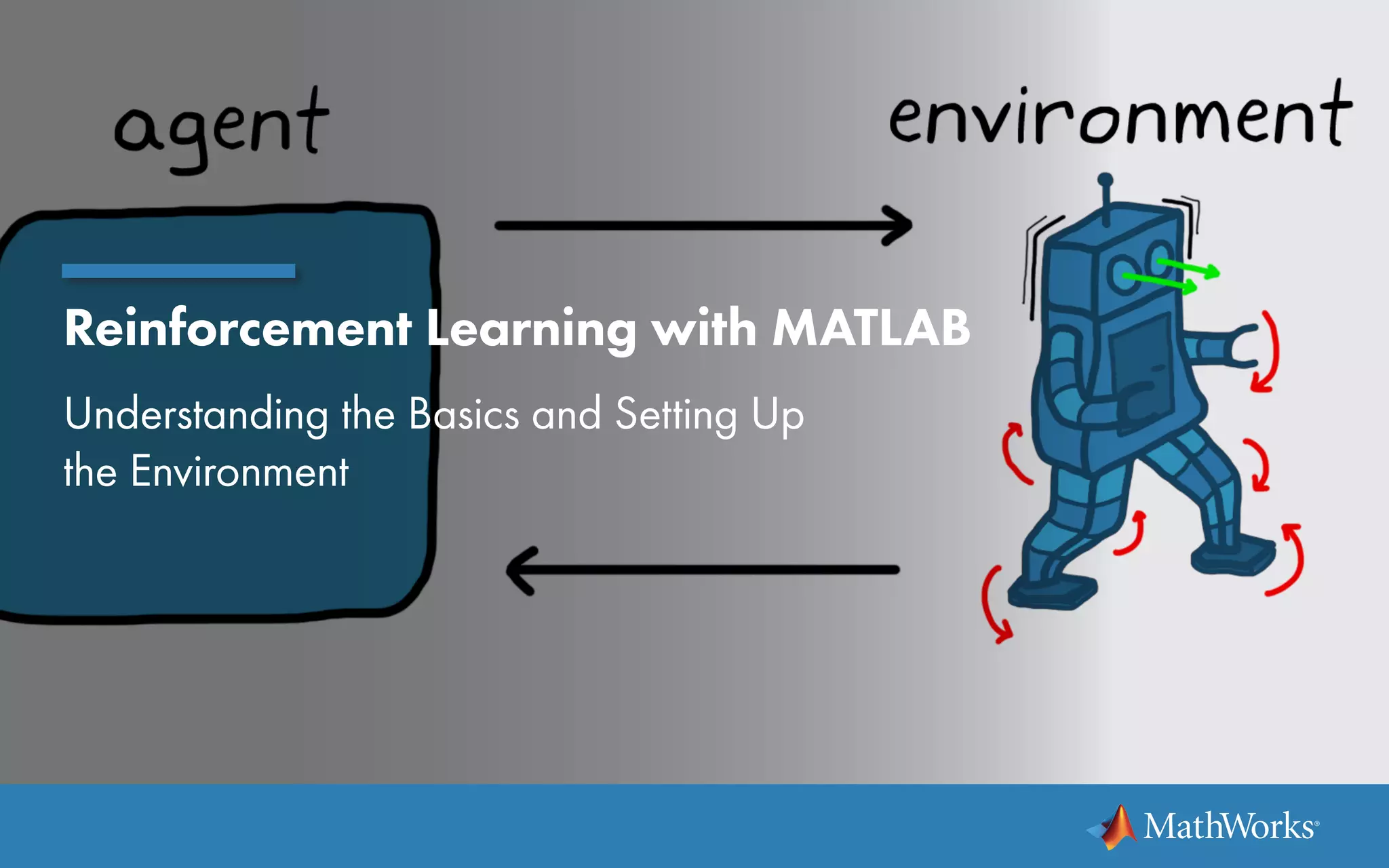

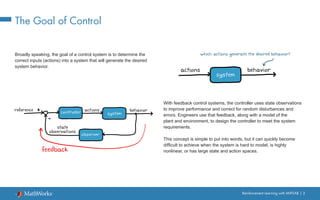

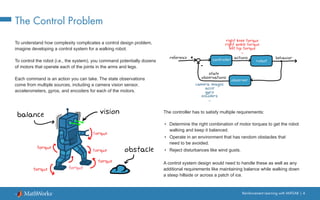

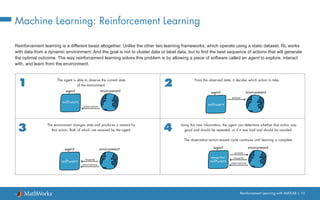

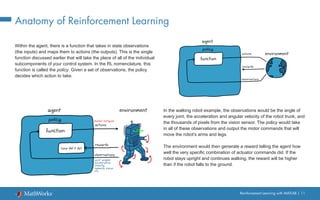

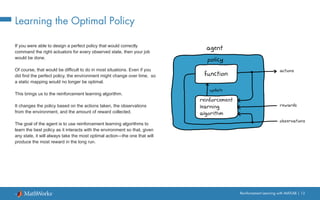

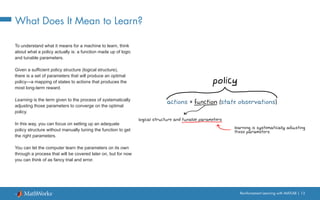

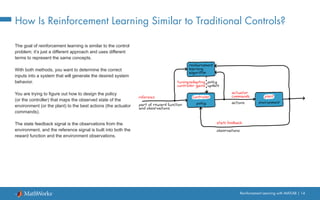

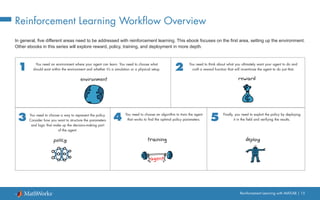

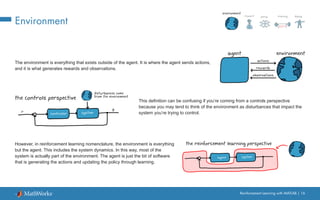

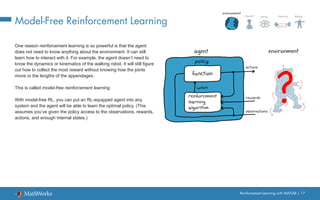

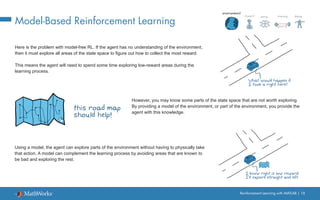

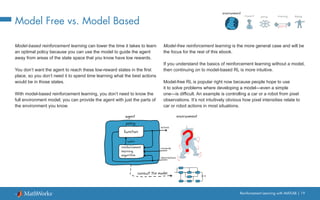

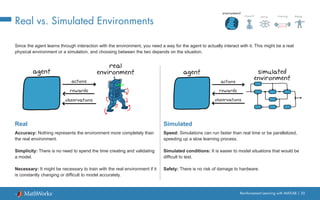

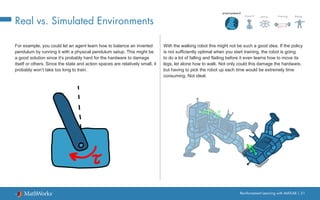

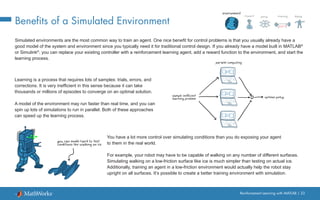

This document introduces reinforcement learning and discusses how it can be applied to control problems. In reinforcement learning, an agent interacts with an environment and learns, through trial and error, an optimal policy that maps states to actions. Key concepts covered include the environment, real versus simulated environments, model-free versus model-based learning, and the reinforcement learning workflow. The document also explains how reinforcement learning resembles traditional control approaches and how MATLAB can be used to set up reinforcement learning simulations and train agents.
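
The agent-environment loop summarized above can be illustrated with a minimal sketch. It is not taken from the document and does not use MATLAB or any MATLAB toolbox. It is plain Python, and it assumes a hypothetical five-cell corridor as the simulated environment and tabular Q-learning as one example of a model-free method. All names (`step`, `train`, `greedy_policy`) and all parameter values are illustrative assumptions.

```python
import random

# Hypothetical simulated environment: a corridor of 5 cells (states 0..4).
# The agent starts in cell 0 and the episode ends when it reaches cell 4.
N_STATES = 5
ACTIONS = (-1, +1)  # move one cell left or one cell right


def step(state, action):
    """Apply an action and return (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0  # reward only for reaching the goal
    return next_state, reward, done


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Model-free tabular Q-learning: learn action values by trial and error."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action_index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore with probability epsilon (or on ties), otherwise exploit.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, ACTIONS[a])
            # Bootstrapped target: no future value once the episode has ended.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


def greedy_policy(q):
    """Policy: map each state to the action with the highest learned value."""
    return [ACTIONS[0] if row[0] > row[1] else ACTIONS[1] for row in q]


if __name__ == "__main__":
    print(greedy_policy(train()))
```

The agent here never sees the transition rule inside `step`; it only observes states and rewards, which is what makes the sketch model-free. A model-based variant would instead learn or be given that transition rule and plan with it.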