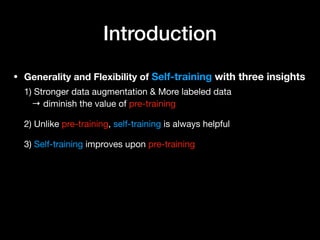

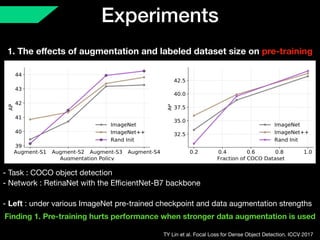

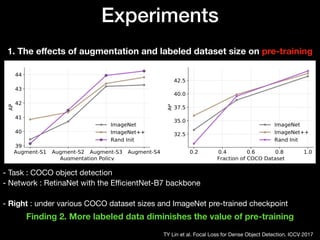

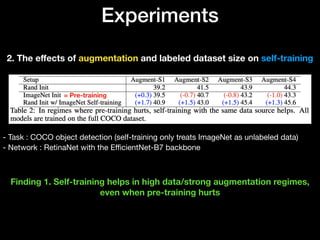

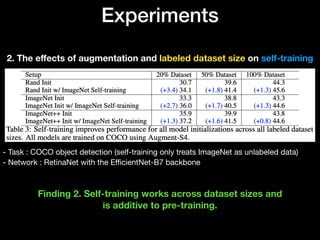

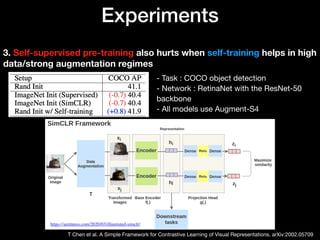

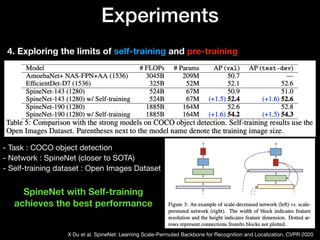

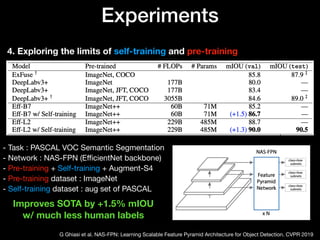

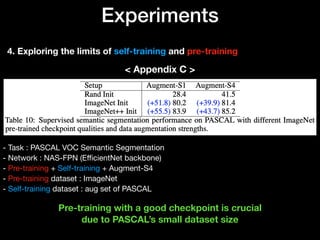

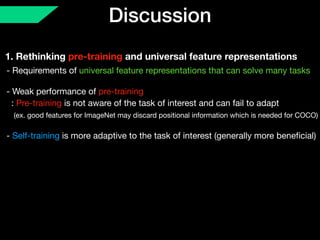

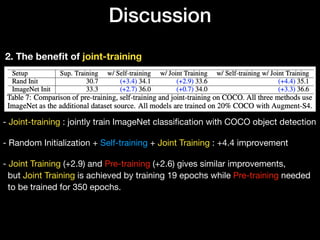

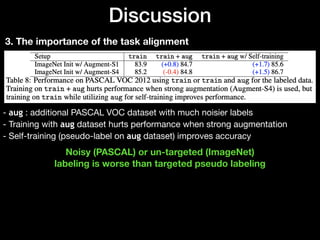

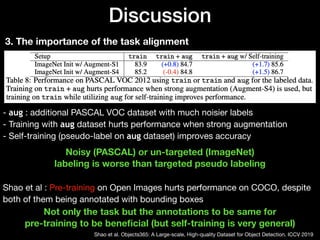

The document discusses the limitations of traditional pre-training in computer vision, particularly for object detection on the COCO dataset, and highlights the advantages of self-training. It reports that self-training improves performance across the settings studied, including with strong data augmentation and with larger labeled datasets, two conditions under which pre-training helps little or even hurts. Overall, it concludes that self-training is more flexible and more beneficial than pre-training, although it requires more computation.

![Slide 2: Introduction](https://image.slidesharecdn.com/rethinkingpre-trainingandself-training-reviewcdm-200809112201/85/Review-Rethinking-Pre-training-and-Self-training-2-320.jpg)

**Introduction**

He et al. Rethinking ImageNet Pre-training. ICCV 2019

- Pre-training
  - A dominant paradigm in computer vision (e.g., ImageNet pre-training)
  - However, ImageNet pre-training does not improve final accuracy on COCO [Kaiming He, ICCV 2019]

![Slide 3: Introduction](https://image.slidesharecdn.com/rethinkingpre-trainingandself-training-reviewcdm-200809112201/85/Review-Rethinking-Pre-training-and-Self-training-3-320.jpg)

- Self-training
  - Steps (e.g., using ImageNet to help COCO object detection):
    1. Discard the labels on ImageNet.
    2. Train an object detector on COCO, and use it to generate pseudo labels on ImageNet.
    3. Train a new model on the combined pseudo-labeled ImageNet and labeled COCO data.
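The three self-training steps can be sketched as a minimal teacher/student loop. This is only an illustration under stated assumptions: to stay self-contained it swaps the object detector for a toy nearest-centroid classifier on 2D points, uses hard pseudo labels, and omits the data augmentation and any confidence filtering a real pipeline would likely apply. `NearestCentroid` and `self_train` are hypothetical names, not part of the reviewed paper.

```python
from dataclasses import dataclass
from math import dist
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]


@dataclass
class NearestCentroid:
    """Hypothetical toy stand-in for a detector: predicts the label of the closest class centroid."""
    centroids: Dict[int, Point]

    @classmethod
    def fit(cls, points: Sequence[Point], labels: Sequence[int]) -> "NearestCentroid":
        # Accumulate per-class coordinate sums and counts, then average them.
        sums: Dict[int, List[float]] = {}
        for (x, y), label in zip(points, labels):
            s = sums.setdefault(label, [0.0, 0.0, 0.0])
            s[0] += x
            s[1] += y
            s[2] += 1
        return cls({label: (sx / n, sy / n) for label, (sx, sy, n) in sums.items()})

    def predict(self, point: Point) -> int:
        return min(self.centroids, key=lambda label: dist(point, self.centroids[label]))


def self_train(
    labeled: Sequence[Point], labels: Sequence[int], unlabeled: Sequence[Point]
) -> Tuple[NearestCentroid, NearestCentroid, List[int]]:
    # Step 1: `unlabeled` carries inputs only; its original labels were discarded.
    # Step 2: train a teacher on the labeled set and pseudo-label the unlabeled set.
    teacher = NearestCentroid.fit(labeled, labels)
    pseudo_labels = [teacher.predict(p) for p in unlabeled]
    # Step 3: train a new (student) model on labeled + pseudo-labeled data combined.
    student = NearestCentroid.fit(list(labeled) + list(unlabeled), list(labels) + pseudo_labels)
    return teacher, student, pseudo_labels


if __name__ == "__main__":
    labeled = [(0.0, 0.0), (4.0, 4.0)]  # small labeled set (stand-in for COCO)
    labels = [0, 1]
    unlabeled = [(0.5, 0.2), (1.0, 0.0), (3.5, 4.2), (5.0, 5.0)]  # labels discarded (stand-in for ImageNet)
    teacher, student, pseudo = self_train(labeled, labels, unlabeled)
    print(pseudo)  # [0, 0, 1, 1]
```

The student is a fresh model fit on the union of both datasets rather than a fine-tuned copy of the teacher, mirroring step 3 on the slide; in this toy case the pseudo-labeled points shift the class centroids, so teacher and student can disagree near the decision boundary.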