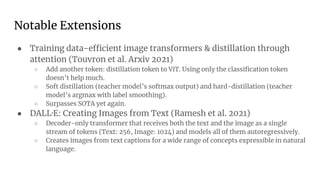

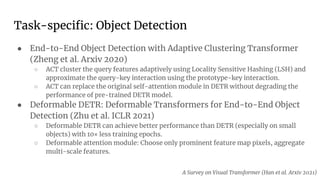

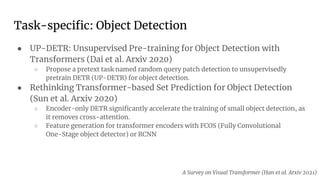

This document discusses key concepts in visual transformers, including key-value-query attention, pooling, multi-head attention, and unsupervised representation learning. It then summarizes several papers that apply transformers to computer vision tasks: image classification with ViT, object detection with DETR, and generative pretraining from pixels. Additional works that extend visual transformers to segmentation, video analysis, and captioning are also briefly mentioned.

![Unsupervised Representation Learning](https://image.slidesharecdn.com/20210209-jonas-visual-transformers-221129145450-56e929a5/85/Visual-Transformers-6-320.jpg)

Unsupervised Representation Learning

● Input sequence x = (x₁, x₂, …)

● Autoregressive (AR)

○ e.g. ELMo, GPT

○ No bidirectional context.

○ ELMo: forward and backward contexts must be trained separately.

● Auto Encoding (AE)

○ Corrupted input x' = (x₁, x₂, …, [MASK], …)

○ e.g. BERT

○ Bi-directional self-attention

○ Corruption changes the input distribution ([MASK] appears during pretraining but not in downstream data)

Source: Understanding XLNet, https://www.borealisai.com/en/blog/understanding-xlnet
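
The difference between the autoregressive and auto-encoding setups on this slide can be made concrete with a small sketch of how training examples might be built from one token sequence. This is only an illustration under stated assumptions, not how ELMo, GPT, or BERT are actually implemented: the helper names `ar_examples` and `ae_example`, the `mask_prob` default, and the toy sentence are all hypothetical, and no model is involved.

```python
import random

MASK = "[MASK]"


def ar_examples(tokens):
    """Autoregressive view: each token is predicted from its left context only.

    Returns a list of (context, target) pairs; the context never contains
    tokens to the right of the target, so there is no bidirectional context.
    """
    return [(tokens[:t], tokens[t]) for t in range(len(tokens))]


def ae_example(tokens, mask_prob=0.15, seed=0):
    """Auto-encoding view: corrupt the input with [MASK], then recover it.

    Returns (corrupted, targets), where `targets` maps each masked position
    to its original token. A model would see the whole corrupted sequence,
    so context from both sides of a masked position is available.
    mask_prob is an illustrative assumption, not a value from the slide.
    """
    rng = random.Random(seed)
    corrupted = list(tokens)
    targets = {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            corrupted[i] = MASK
            targets[i] = tok
    return corrupted, targets


if __name__ == "__main__":
    sentence = ["the", "cat", "sat", "on", "the", "mat"]  # toy input

    for context, target in ar_examples(sentence):
        print("AR:", context, "->", target)

    corrupted, targets = ae_example(sentence, mask_prob=0.5)
    print("AE input:  ", corrupted)
    print("AE targets:", targets)
```

The AR pairs show why a single left-to-right model has no right-hand context, while the AE input shows where the distribution shift comes from: the `[MASK]` symbol appears in the corrupted training input but not in the original sequence.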