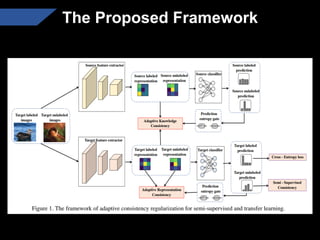

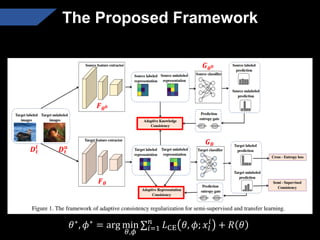

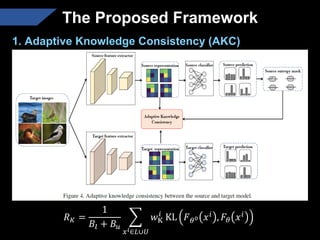

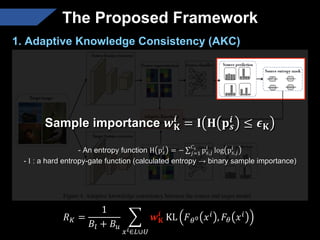

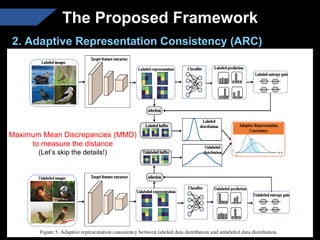

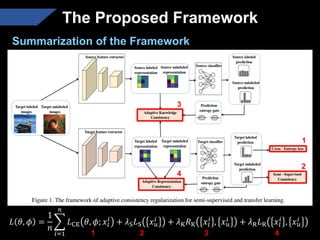

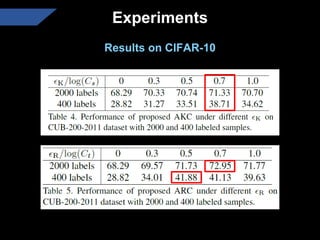

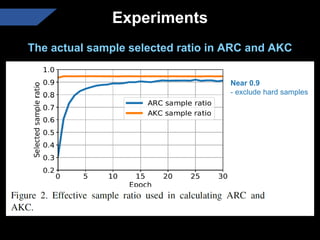

The document presents a semi-supervised transfer learning framework that extends consistency regularization with two components: adaptive knowledge consistency (AKC) and adaptive representation consistency (ARC). Together, these components make use of both labeled and unlabeled data, and the framework achieves results competitive with state-of-the-art semi-supervised learning methods across several benchmarks. The framework aims to improve transfer learning by narrowing the domain gap between the pre-training data and the target task and by improving the learned representations.
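The way such consistency terms combine with a supervised loss can be illustrated with a small, dependency-free Python sketch. This is not the authors' implementation: the function names (`akc_style_loss`, `linear_mmd`, `total_loss`), the entropy threshold, the weights `lambda_akc`/`lambda_arc`, and the choice of KL divergence and a linear-kernel MMD are all assumptions made for illustration. The AKC-style term compares a frozen pre-trained (source) model's predictions with the target model's predictions and keeps only samples on which the source model is confident (low entropy). The ARC-style term penalizes the distance between the mean features of a labeled and an unlabeled batch. Both assume the logits or feature vectors have already been computed, and the AKC-style term assumes both models output distributions over the same classes.

```python
import math
from typing import List, Sequence

# Hypothetical helpers illustrating AKC-/ARC-style consistency terms.
# NOT the paper's code: names, threshold and weights are assumptions.

Vector = Sequence[float]


def softmax(logits: Vector) -> List[float]:
    """Numerically stable softmax over a single logit vector."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs: Vector) -> float:
    """Shannon entropy (natural log) of a probability vector."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def kl_divergence(p: Vector, q: Vector, eps: float = 1e-12) -> float:
    """KL(p || q); eps guards against log(0) when q has zero entries."""
    return sum(pi * math.log((pi + eps) / (qi + eps))
               for pi, qi in zip(p, q) if pi > 0)


def akc_style_loss(source_logits: Sequence[Vector],
                   target_logits: Sequence[Vector],
                   entropy_threshold: float) -> float:
    """Assumed AKC-style term: mean KL(source || target) over the samples
    whose source prediction entropy is below `entropy_threshold`."""
    total, kept = 0.0, 0
    for s, t in zip(source_logits, target_logits):
        p_source, p_target = softmax(s), softmax(t)
        # Adaptive gate (assumed): keep only confident source predictions.
        if entropy(p_source) < entropy_threshold:
            total += kl_divergence(p_source, p_target)
            kept += 1
    return total / kept if kept else 0.0


def linear_mmd(feats_a: Sequence[Vector], feats_b: Sequence[Vector]) -> float:
    """Assumed ARC-style term: squared MMD with a linear kernel, i.e. the
    squared Euclidean distance between the two batches' mean features."""
    dim = len(feats_a[0])
    mean_a = [sum(f[d] for f in feats_a) / len(feats_a) for d in range(dim)]
    mean_b = [sum(f[d] for f in feats_b) / len(feats_b) for d in range(dim)]
    return sum((a - b) ** 2 for a, b in zip(mean_a, mean_b))


def total_loss(supervised: float, akc: float, arc: float,
               lambda_akc: float = 1.0, lambda_arc: float = 1.0) -> float:
    """Supervised loss plus weighted consistency terms (hypothetical weights)."""
    return supervised + lambda_akc * akc + lambda_arc * arc
```

For example, `linear_mmd([[0.0, 0.0], [2.0, 2.0]], [[1.0, 1.0]])` returns `0.0` because both batches share the same mean feature, while `linear_mmd([[0.0, 0.0]], [[3.0, 4.0]])` returns `25.0`. In a real training loop these terms would be computed on tensors with automatic differentiation; the plain-list version only shows the arithmetic, and it omits the adaptive sample selection that the paper may apply to the representation term.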

![Introduction: Transfer Learning](https://image.slidesharecdn.com/adaptiveconsistencyregularizationforsemi-supervisedtransferlearningreviewcdm-210307084235/85/Review-Adaptive-Consistency-Regularization-for-Semi-Supervised-Transfer-Learning-3-320.jpg)

Introduction: Transfer Learning

- Powerful pre-trained models offer:
  1) excellent transferability
  2) strong generalization capacity
- Zhou et al. observe that:
  1) the benefits of SSL are smaller when training starts from a pre-trained model
  2) combining SSL with transfer learning can help address the domain gap

[Zhou et al., When Semi-Supervised Learning Meets Transfer Learning: Training Strategies, Models and Datasets, arXiv 2018]