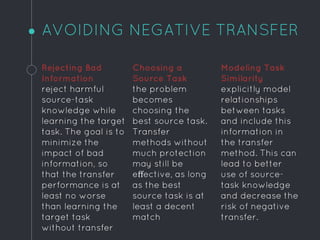

Transfer learning aims to improve learning on a target task by leveraging knowledge from a related source task. It does so by using what was learned on the source task to shape the assumptions (the inductive bias) that the learner brings to the target task. This can make learning on the target task faster and lead to better generalization. However, there is a risk of negative transfer, in which the source knowledge lowers target performance instead of improving it. To avoid this, some methods measure task similarity and reject harmful source knowledge, while others generate multiple mappings between source and target and select the best match. Compared with learning the target task without transfer, successful transfer should yield a higher starting performance, faster learning, and a higher final performance.
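The three goals above (start higher, learn faster, end higher) can be made concrete by comparing two learning curves for the target task, one with transfer and one without. The following is a minimal sketch, not a standard API: the function names, the `threshold` and `tail` parameters, and the rule used to flag negative transfer are all assumptions made for illustration, and the two curves are made-up numbers rather than experimental results.

```python
from statistics import mean
from typing import Dict, Optional, Sequence


def steps_to_threshold(curve: Sequence[float], threshold: float) -> Optional[int]:
    """Return the index of the first evaluation that reaches the threshold, or None."""
    for step, score in enumerate(curve):
        if score >= threshold:
            return step
    return None


def transfer_metrics(
    baseline: Sequence[float],
    transfer: Sequence[float],
    threshold: float,
    tail: int = 3,
) -> Dict[str, Optional[float]]:
    """Compare a no-transfer curve with a transfer curve on the same target task.

    Both curves hold one performance score per evaluation step (higher is better).
    `tail` is the number of final evaluations averaged to estimate final performance.
    """
    if len(baseline) != len(transfer):
        raise ValueError("curves must have the same length")
    if tail < 1 or len(baseline) < tail:
        raise ValueError("tail must be between 1 and the curve length")

    # Start higher: difference in performance before any target-task training.
    jumpstart = transfer[0] - baseline[0]

    # Learn faster: evaluation steps saved in reaching the chosen threshold.
    base_steps = steps_to_threshold(baseline, threshold)
    transfer_steps = steps_to_threshold(transfer, threshold)
    if base_steps is None or transfer_steps is None:
        steps_saved = None  # at least one curve never reaches the threshold
    else:
        steps_saved = base_steps - transfer_steps

    # End higher: difference in average performance over the last `tail` steps.
    asymptotic_gain = mean(transfer[-tail:]) - mean(baseline[-tail:])

    return {
        "jumpstart": jumpstart,
        "steps_saved": steps_saved,
        "asymptotic_gain": asymptotic_gain,
    }


def is_negative_transfer(metrics: Dict[str, Optional[float]]) -> bool:
    """Simplifying assumption: flag negative transfer when final performance drops."""
    return metrics["asymptotic_gain"] < 0


# Illustrative, made-up learning curves (accuracy per evaluation step).
baseline_curve = [0.10, 0.20, 0.35, 0.50, 0.60, 0.65, 0.68, 0.70, 0.70, 0.70]
transfer_curve = [0.30, 0.45, 0.60, 0.70, 0.76, 0.80, 0.80, 0.80, 0.80, 0.80]

metrics = transfer_metrics(baseline_curve, transfer_curve, threshold=0.6)
print(metrics)
print("negative transfer:", is_negative_transfer(metrics))
```

In practice each curve would be averaged over several training runs before comparison, since a single run can be noisy enough to hide or exaggerate any of the three effects.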