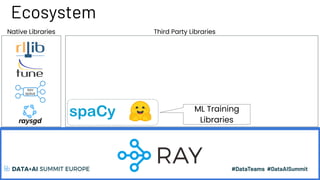

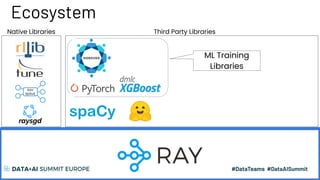

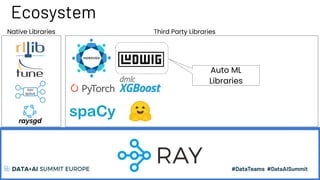

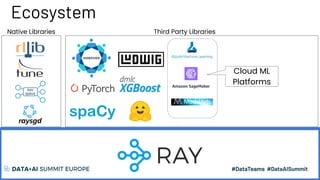

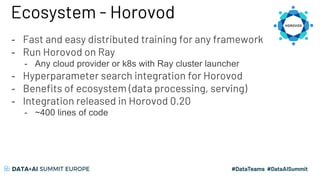

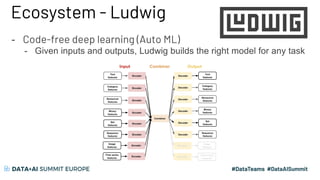

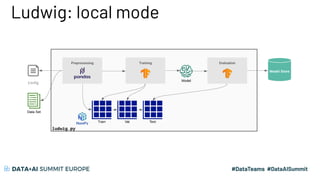

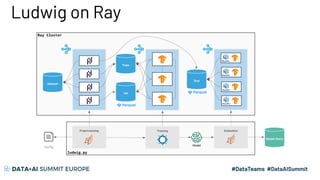

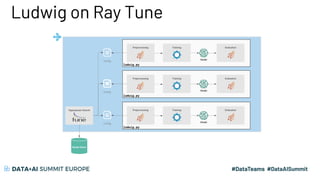

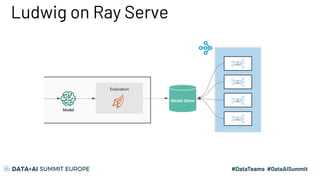

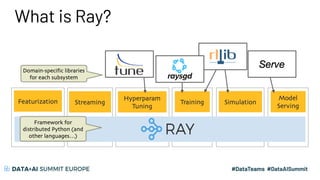

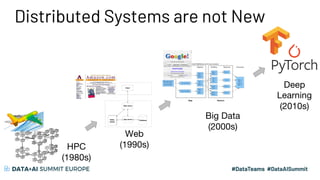

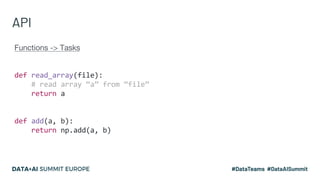

This document presents an overview of Ray, a framework for simplifying distributed computing, along with its ecosystem integrations like Horovod and Ludwig. It highlights Ray's compatibility with popular libraries and its role in enhancing scalability for machine learning tasks. Additionally, it discusses the benefits of using Ray for various applications, including hyperparameter tuning and serving in cloud environments.

![API

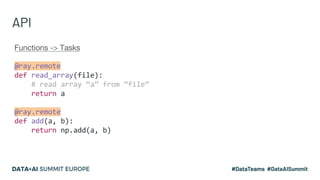

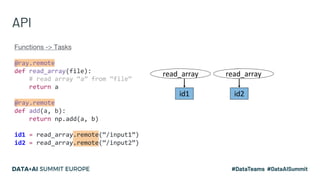

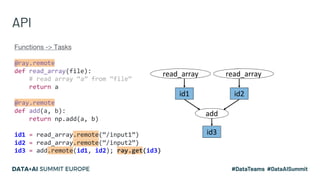

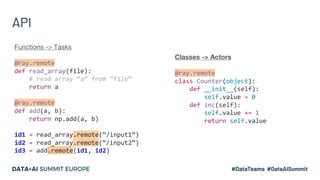

Functions -> Tasks

@ray.remote

def read_array(file):

# read array “a” from “file”

return a

@ray.remote

def add(a, b):

return np.add(a, b)

id1 = read_array.remote(“/input1”)

id2 = read_array.remote(“/input2”)

id3 = add.remote(id1, id2)

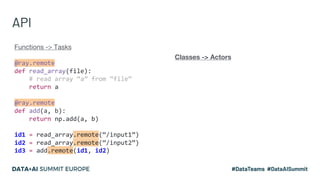

Classes -> Actors

@ray.remote

class Counter(object):

def __init__(self):

self.value = 0

def inc(self):

self.value += 1

return self.value

c = Counter.remote()

id4 = c.inc.remote()

id5 = c.inc.remote()

ray.get([id4, id5])](https://image.slidesharecdn.com/302addairliaw-201130191623/85/Ray-and-Its-Growing-Ecosystem-24-320.jpg)

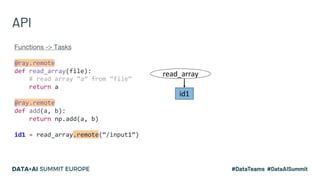

![API

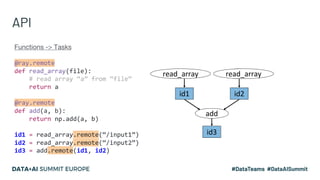

Functions -> Tasks

@ray.remote

def read_array(file):

# read array “a” from “file”

return a

@ray.remote(num_gpus=1)

def add(a, b):

return np.add(a, b)

id1 = read_array.remote(“/input1”)

id2 = read_array.remote(“/input2”)

id3 = add.remote(id1, id2)

Classes -> Actors

@ray.remote(num_gpus=1)

class Counter(object):

def __init__(self):

self.value = 0

def inc(self):

self.value += 1

return self.value

c = Counter.remote()

id4 = c.inc.remote()

id5 = c.inc.remote()

ray.get([id4, id5])](https://image.slidesharecdn.com/302addairliaw-201130191623/85/Ray-and-Its-Growing-Ecosystem-25-320.jpg)