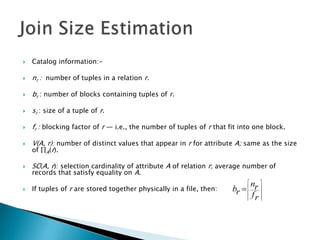

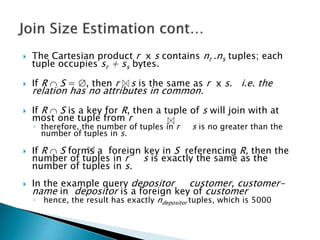

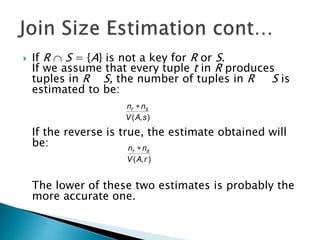

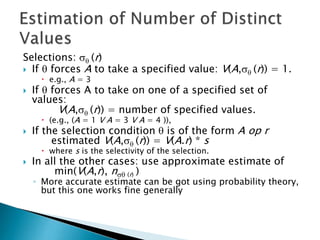

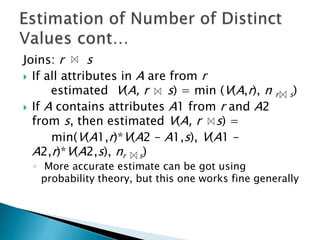

The document discusses query optimization in databases. Query optimization is the process of selecting the most efficient query evaluation plan to minimize costs and maximize performance. An optimized query will be executed faster using less system resources. Key factors considered during optimization include join size estimation, estimating the number of distinct values, and catalog information about relations.