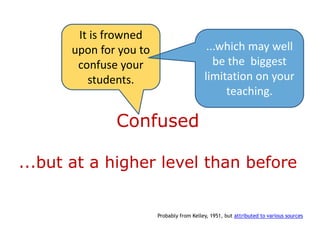

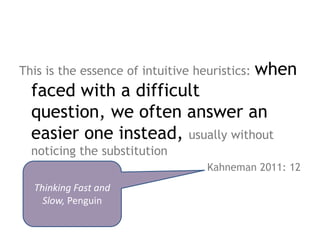

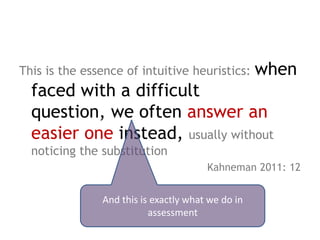

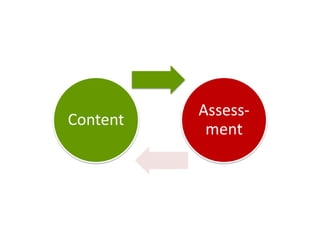

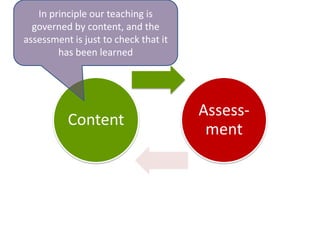

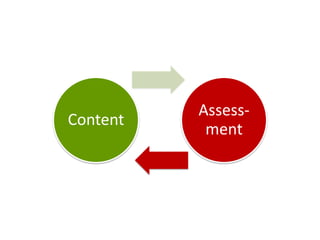

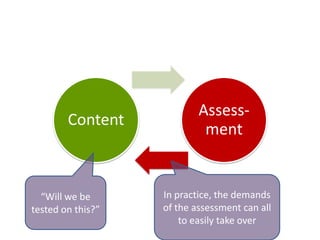

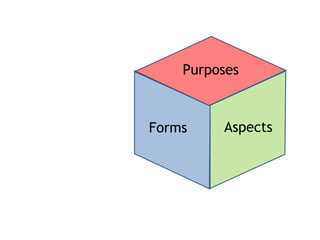

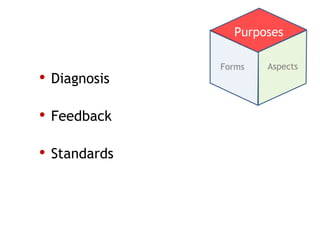

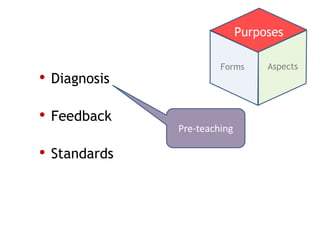

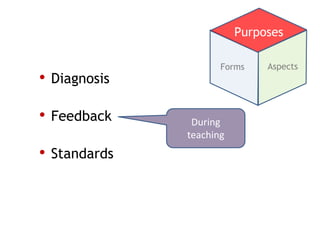

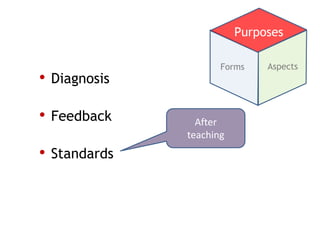

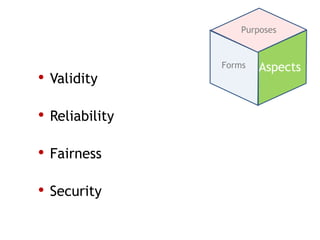

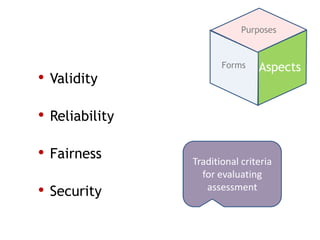

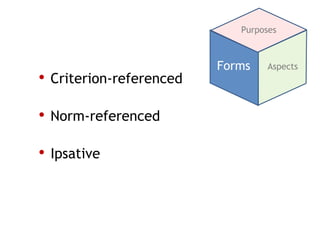

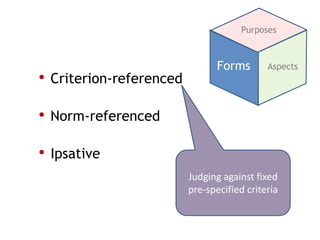

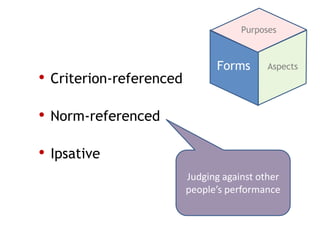

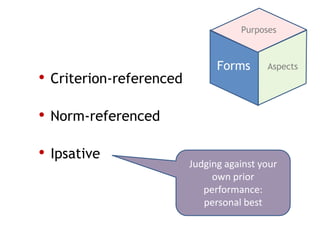

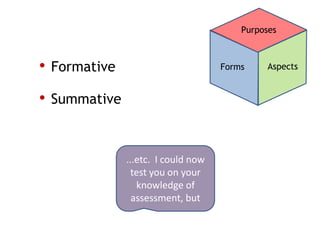

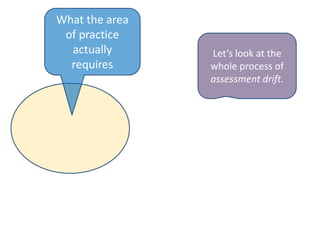

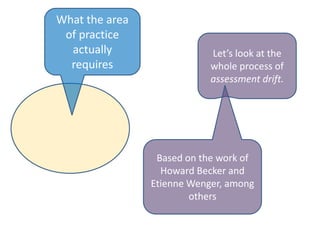

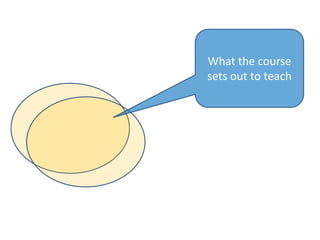

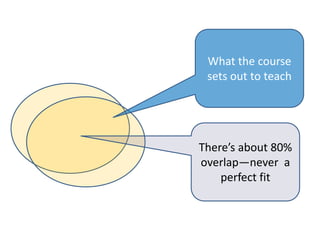

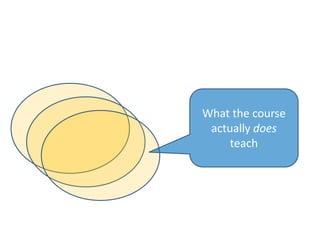

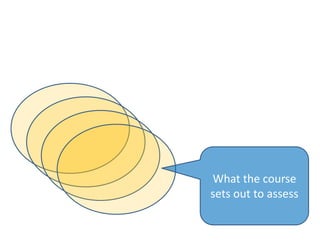

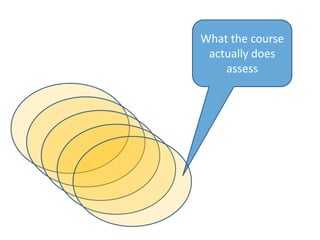

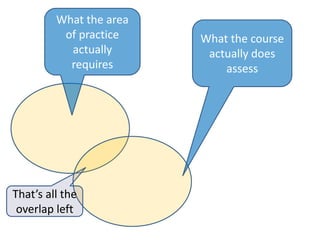

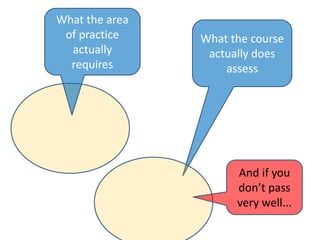

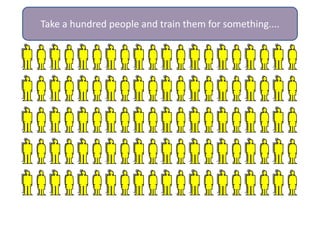

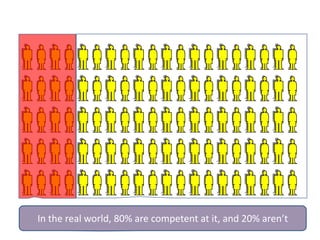

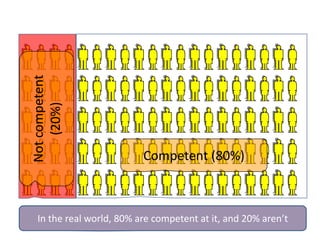

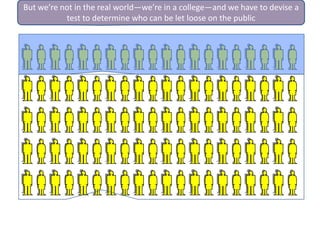

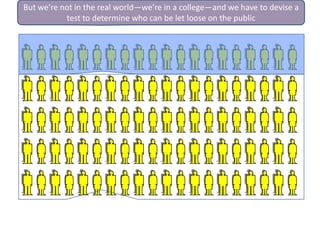

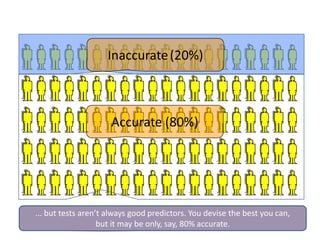

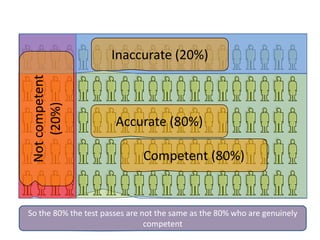

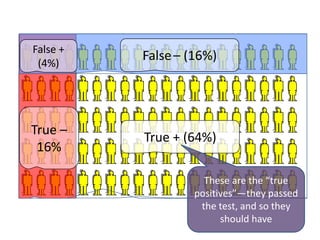

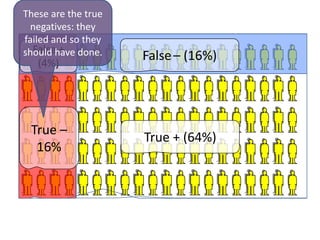

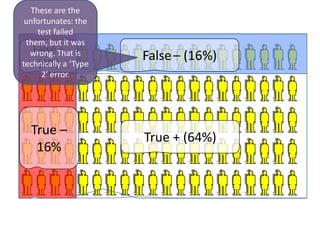

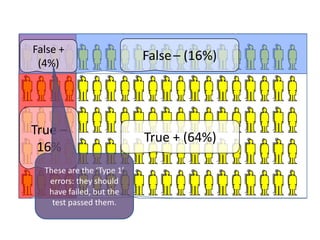

The document discusses the complexities and challenges of assessment in education, including validity, reliability, and the impact of assessment on teaching and learning. It stresses the confusion that assessment practices can cause and highlights the limitations of testing, in particular false positives and false negatives. Ultimately, it encourages a deeper understanding of the assessment process while acknowledging that confusion can be constructive for learning.